Arthur C. Clarke’s third law states that “any sufficiently advanced technology is indistinguishable from magic.”

One magic show that I really like is Penn and Teller’s “Fool Us,” where magicians present their latest techniques and try to fool the hosts, Penn Jillette and Raymond Teller. If Penn and Teller can determine how a trick was done, they try to explain it to the magician using rather cryptic terminology.

NVIDIA’s Jetson AGX Xavier (Fig. 1) isn’t a magical device, but it is advanced technology that can do some amazing things. In particular, it can handle advanced machine-learning (ML) inference chores such as doing object recognition on video streams from multiple cameras. To many this may seem like magic, but it’s simply a matter of taking advantage of the company’s advanced hardware and plethora of software.

1. NVIDIA’s Jetson AGX Xavier module (right) sits inside the development system (left).

The first part of this series, “NVIDIA Jetson AGX Xavier Part 1: Hardware,” examined the details of the Jetson AGX Xavier hardware. The 350-mm² system-on-chip has over 9 billion transistors. This includes eight 64-bit Arm cores and a Volta GPU with 512 CUDA cores and 64 Tensor cores spread across eight Volta streaming multiprocessors. There’s also a deep-learning accelerator (DLA) and programmable vision accelerator (PVA).

Software support for the AGX Xavier is the same as for the earlier Jetson TX1 and TX2. This includes NVIDIA JetPack and DeepStream SDKs plus support for the company’s CUDA, cuDNN, and TensorRT software libraries. These support ML frameworks like TensorFlow and Caffe.

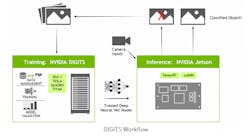

I started exploring NVIDIA’s software using its “Two Days to a Demo” (Fig. 2). This is a step-by-step guide to using the software that runs on the Jetson AGX Xavier, as well as training software that can run on your workstation or in the cloud. I did my training locally running on the NVIDIA GeForce RTX 2080 Ti.

2. “Two Days to a Demo” ties together the DIGITS training system with the inference engine applications running on the Jetson AGX Xavier.

The process does take two days, but you’re learning things all along the way. The host software runs the same Ubuntu 16.04 Linux that runs on the Jetson AGX Xavier. Training can be done on the latter; however, the amount of storage and processing power available makes this a very long and tedious process. I picked up a 2-TB M.2 solid-state drive for my Core i7 PC to handle the large data sets used for training.

The Jetson AGX Xavier comes with JetPack installed, but I had to install it on my PC. Doing so on a multiboot system with secure boot enabled actually took more effort than going through the training guide.

The first step after the software is installed is to run some preconfigured demo applications. One takes advantage of the camera that can be plugged into the Jetson AGX Xavier to highlight the real-time processing power of the system running the TensorRT support. It is a good way to make sure that all of the hardware is working properly.

Next comes the setup of the DIGITS training framework (Fig. 3) that runs on the host or in the cloud. This web-based system, written in Python, is designed for training deep-neural-network (DNN) models. DIGITS uses Docker, a container-based processing engine commonly employed in the enterprise. The NVIDIA GPU Cloud (NGC) provides Docker containers for DIGITS training that require minimal configuration. Consequently, a new user can actually take advantage of the system within the two-day timeframe.

3. NVIDIA’s DIGITS is a web-based interface to a Docker-based AI training system.

The exercises in the guide are progressively more complex and ambitious, thereby providing a good overview of the capability of DIGITS. By the time I made it through the guide, I was able to take new training data and add it to an existing model. It’s also possible to exploit the different types of machine-learning acceleration within the system. One example is the ability to enable and disable the DLA.

The examples in the guide cover image recognition (classification), object detection (localization), and free-space segmentation (Fig. 4). They work with 2D still images as well as video streams. Of course, the machine-learning support that can be provided by the Jetson AGX Xavier isn’t limited to these types of inputs.

4. The Jetson AGX Xavier can identify and segment images using machine-learning models.
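
To give a feel for what an image-classification result looks like once a model has run, here is a minimal Python sketch of the usual post-processing step: turning raw per-class scores into probabilities and picking the most likely labels. It is not code from the guide or from NVIDIA’s libraries. The function names, the labels, and the scores are all made up for illustration, and a real application would get its scores from an inference engine such as TensorRT.

```python
import math


def softmax(logits):
    """Convert raw network scores into probabilities that sum to 1."""
    peak = max(logits)  # subtract the largest score for numerical stability
    exps = [math.exp(x - peak) for x in logits]
    total = sum(exps)
    return [e / total for e in exps]


def top_k(logits, labels, k=3):
    """Return the k most likely (label, probability) pairs, best first."""
    probs = softmax(logits)
    ranked = sorted(zip(labels, probs), key=lambda pair: pair[1], reverse=True)
    return ranked[:k]


if __name__ == "__main__":
    # Hypothetical labels and scores; a trained model would supply real ones.
    labels = ["cat", "dog", "bicycle", "car"]
    logits = [1.2, 3.4, 0.3, 2.1]
    for label, prob in top_k(logits, labels, k=2):
        print(f"{label}: {prob:.1%}")
```

Object detection and segmentation follow the same idea, except that the scores are produced per bounding box or per pixel rather than once per image.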

The guide does a good job of introducing DIGITS and how to train neural-network models. It doesn’t go into detail on how to create applications that incorporate the inference engines used in the sample applications. This is an area where developers will have to delve deeper into tools like TensorRT. One reason for not including such a demo is that different programming languages can be used.

NVIDIA has additional tutorials on GitHub, including Deep Reinforcement Learning in Robotics.

Overall, the two-day demo is a great starting point and reveals some of the magic behind deep neural networks running on the Jetson AGX Xavier.

About the Author

William G. Wong

Senior Content Director - Electronic Design and Microwaves & RF

I am Editor of Electronic Design focusing on embedded, software, and systems. As Senior Content Director, I also manage Microwaves & RF and I work with a great team of editors to provide engineers, programmers, developers and technical managers with interesting and useful articles and videos on a regular basis. Check out our free newsletters to see the latest content.

You can send press releases for new products for possible coverage on the website. I am also interested in receiving contributed articles for publishing on our website. Use our template and send to me along with a signed release form.

Check out my blog, AltEmbedded on Electronic Design, as well as my latest articles on this site that are listed below.

You can visit my social media via these links:

- AltEmbedded on Electronic Design

- Bill Wong on Facebook

- @AltEmbedded on Twitter

- Bill Wong on LinkedIn

I earned a Bachelor of Electrical Engineering at the Georgia Institute of Technology and a Master’s in Computer Science from Rutgers University. I still do a bit of programming using everything from C and C++ to Rust and Ada/SPARK. I do a bit of PHP programming for Drupal websites. I have posted a few Drupal modules.

I still get hands-on with software and electronic hardware. Some of this can be found on our Kit Close-Up video series. You can also see me on many of our TechXchange Talk videos. I am interested in a range of projects from robotics to artificial intelligence.