Intel will start selling a new chip for on-device artificial intelligence in 2020, looking to gain ground in the market for chips capable of running AI inside drones, cameras and other devices without relying on data centers in the cloud. The company also continued to roll out programming tools and other software for running AI, giving it more ammunition to compete with rivals Nvidia and Qualcomm.

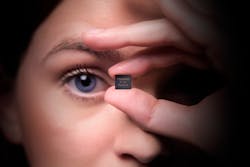

The Santa Clara, California-based company said its new chip, code-named Keem Bay, can handle trillions of operations per second without burning through battery life. The chip is part of Intel's line of vision processing units (VPUs), which are designed for on-device deep learning. Intel said Keem Bay offers more than 5 times the energy efficiency of graphics processing units (GPUs) and other chips targeting edge AI.

The chip could advance Intel's ambitions to become one of the core players in AI. Nvidia's Volta GPUs are the gold standard for training deep learning models in data centers, a process that involves running through massive amounts of data. Intel is the world's largest vendor of server processors used for inferencing, which means running the resulting AI models. The company sells billions of dollars' worth of its Xeon Scalable CPUs for inferencing every year.

Most technology giants currently run AI in the cloud, where Intel is also targeting its Nervana ASICs for training and running neural networks, the building blocks of deep learning. Intel has also started selling its Myriad line of Movidius VPUs for running inference on embedded devices instead of in the cloud. That helps reduce delays, more commonly called latency, and bolster privacy.

Jonathan Ballon, vice president of Intel's Internet of Things group, said the new chip is based on a "groundbreaking" architecture built specifically for embedded AI. He said it offers more performance at a fraction of the power, cost and area of more general-purpose chips, such as GPUs. "Performance is important at the edge, but customers also care about power, size and latency," he said at Intel's AI Summit in San Francisco.

Intel said the new chip will deliver 10 times the performance of its predecessor, the Myriad X, which can perform 1 trillion operations per second (TOPS) at 1 watt using a hardware accelerator called the neural compute engine. The new chip contains more on-chip memory than the Myriad X to cut down on redundant communication. The higher memory bandwidth speeds up data transfers and curbs power consumption.

Intel also offers development tools and other software for speeding up inferencing. The company provides a set of programming tools, called OpenVINO, that can be used to build and run AI on its CPUs, VPUs, FPGAs and ASICs. The tools, which support TensorFlow, PyTorch and other popular AI frameworks, can boost the performance of the new Movidius VPU by another 50%, Ballon said.

Intel also introduced a program, called the Edge AI DevCloud, that lets customers try out the company's broad range of AI processors and accelerators before buying. Intel said more than 2,500 customers are using the service, which allows them to test applications on Intel's Xeon CPUs, Movidius VPUs, Nervana ASICs and other chips in the cloud at no charge. That helps cut development costs.

Intel is battling for the inferencing market with Nvidia, Qualcomm and startups ranging from Mythic in the United States to Horizon Robotics in China, among others. Nvidia has released a range of small AI supercomputers, called Jetson, based on its Tegra SoCs. Jensen Huang, Nvidia's CEO, said more than 1,500 customers use the sub-$1,000 supercomputers, which range from the flagship Jetson Xavier to the tiny Nano.

Intel said its new chip delivers more performance than Nvidia's Jetson TX2 at one-third of the power, and performance on par with Xavier at one-fifth of the power. "It's a workhorse," Ballon said. "Rather than taking products designed for a different purpose, we are purpose-building products and software that the data explosion demands." Intel is aiming to launch Keem Bay in the first half of 2020.

Qualcomm, long the leading designer of smartphone chips, offers chips that can carry out more than 2 trillion AI operations per second at 1 watt using its Kryo CPUs, Adreno GPUs and Hexagon DSPs. The 10-nanometer chips can free cameras, drones and other edge devices from relying on a connection to the cloud. The company said it sold more than $1 billion of Internet of Things chips in 2018.

AI is increasingly important to Intel's chip business. Intel said sales of AI processors more than doubled over the last year to $3.5 billion, or around 5% of its total estimated sales in 2019. Intel has started supplying its Nervana ASICs to Baidu and Facebook for use in data centers. It is also selling FPGAs, which can be programmed for so-called real-time AI inferencing, to Microsoft and other cloud-computing giants.

"Intel already has huge market share," Naveen Rao, general manager of Intel's Artificial Intelligence Products Group, said at Intel's AI Summit. "Right now our biggest customers are actually hitting hardware constraints," he said. "There's a trend where the industry is headed to build ASICs for AI. That's because the growth of demand is actually outpacing what we can build in some of our other product lines."

About the Author

James Morra

Senior Editor

James Morra is the senior editor for Electronic Design, covering the semiconductor industry and new technology trends, with a focus on power electronics and power management. He also reports on the business behind electrical engineering, including the electronics supply chain. He joined Electronic Design in 2015 and is based in Chicago, Illinois.