AI Dev Kit Targets Autonomous Robots and Cars

What you’ll learn:

- What is Jetson Thor?

- What are its capabilities?

- What are Jetson Thor’s target applications?

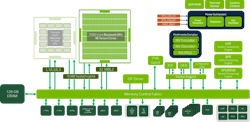

NVIDIA announced its Thor platform for automotive applications several years ago. At the Hot Chips 2025 conference, the company expanded its availability and functionality with the Jetson AGX Thor Developer Kit (Fig. 1). The kit, priced at $3,499, is based on the NVIDIA Jetson T5000 module, which incorporates a Blackwell GPU.

The NVIDIA DRIVE AGX Thor Developer Kit (Fig. 2) for automotive applications was also announced. It provides automotive-grade hardware and a suite of SDKs and libraries targeting self-driving vehicles. Two Thor modules can be connected using NVLink-C2C interconnect technology.

The Jetson AGX Thor Developer Kit's carrier board exposes all of the module's interfaces and adds a 1-TB solid-state drive and Wi-Fi 6E support. There's also a 5-Gb/s Ethernet port along with a QSFP28 socket, dual USB Type-A and Type-C interfaces, a DisplayPort, and an HDMI 2.1 connection. The kit includes a hefty cooling system with a fan and heatsink.

The Jetson Thor module is designed to handle lots of video (Fig. 3). Designers can connect up to 20 cameras. It supports video encode up to 6x 4Kp60 for H.265 and H.264 as well as video decode for streams up to 4x 8Kp30 for H.265 and 4x 4Kp60 for H.264. The module also includes NVIDIA’s Programmable Vision Accelerator (PVA). The system can drive up to four HDMI 2.1 or VESA DisplayPort connections.
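The stream counts translate into aggregate pixel rates. This back-of-the-envelope sketch assumes "4K" means 3840 x 2160 and "8K" means 7680 x 4320. The results are plain arithmetic on the quoted stream counts, not measured codec throughput, and the names used are illustrative.

```python
# Back-of-the-envelope pixel rates implied by the quoted codec stream counts.
# Assumption: "4K" = 3840 x 2160 (UHD) and "8K" = 7680 x 4320.
RESOLUTIONS = {"4K": (3840, 2160), "8K": (7680, 4320)}


def pixel_rate(streams: int, resolution: str, fps: int) -> int:
    """Total pixels per second across all streams."""
    width, height = RESOLUTIONS[resolution]
    return streams * width * height * fps


WORKLOADS = {
    "Encode, 6x 4Kp60": pixel_rate(6, "4K", 60),
    "Decode H.265, 4x 8Kp30": pixel_rate(4, "8K", 30),
    "Decode H.264, 4x 4Kp60": pixel_rate(4, "4K", 60),
}

if __name__ == "__main__":
    for name, rate in WORKLOADS.items():
        print(f"{name}: {rate / 1e9:.2f} Gpixels/s")
```

By this measure, the H.265 decode figure (about 4 Gpixels/s) is roughly a third higher than the encode figure (about 3 Gpixels/s).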

Choose Your Jetson Thor Version

The Jetson Thor module comes in two flavors. The T5000 is included in the developer kit, while the lower-cost T4000 targets embedded applications that need less processing power (see table). The T4000 also requires less power when running full tilt. Both are supported by JetPack 7, the latest Jetson toolkit, which supports the Arm Server Base System Architecture (SBSA) and CUDA 13.0.

Two versions of the Jetson Thor module are available: the T4000 and T5000. (Source: NVIDIA)

According to NVIDIA, the Jetson Thor module delivers 7.5X the AI performance of the previous generation, Jetson AGX Orin, along with a 3.5X improvement in energy efficiency. It's compatible with NVIDIA's Isaac robotics simulation and development platform and works with the Isaac GR00T humanoid robot foundation models. It also supports Metropolis, NVIDIA's vision AI platform, and NVIDIA's Holoscan framework for real-time sensor processing.

NVFP4 and Jetson Thor

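One feature that adds functionality and boosts performance is NVFP4, NVIDIA's 4-bit floating-point format. Each value uses the same 4-bit E2M1 layout (1 sign bit, 2 exponent bits, and 1 mantissa bit) found in other FP4 formats, but NVFP4 pairs every block of 16 values with its own FP8 (E4M3) scale factor and adds a per-tensor FP32 scale. According to NVIDIA, these encoding differences allow NVFP4 implementations to achieve higher throughput.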
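The block-scaling idea behind NVFP4 can be sketched in a few lines of Python. This is a simplified, hypothetical model rather than NVIDIA's implementation: the per-block scale is kept as a Python float instead of being rounded to FP8 E4M3, the per-tensor FP32 scale is omitted, and ties round toward the smaller magnitude rather than to nearest even. All function names are illustrative.

```python
import random

# Hypothetical sketch of NVFP4-style block quantization (simplified; not NVIDIA's code).
# Non-negative magnitudes representable by a 4-bit E2M1 element.
E2M1_GRID = [0.0, 0.5, 1.0, 1.5, 2.0, 3.0, 4.0, 6.0]
E2M1_MAX = 6.0
BLOCK_SIZE = 16  # one shared scale factor per 16 values


def round_to_e2m1(x: float) -> float:
    """Round x to the nearest signed E2M1 value; ties go to the smaller magnitude."""
    mag = min(E2M1_GRID, key=lambda g: abs(abs(x) - g))
    return -mag if x < 0 else mag


def quantize_block(block):
    """Map one block onto the E2M1 grid; returns (scale, codes)."""
    amax = max(abs(v) for v in block)
    # Pick the scale so the largest magnitude lands on the top E2M1 value (6.0).
    # Simplification: real NVFP4 stores this scale in FP8 (E4M3), not as a float.
    scale = amax / E2M1_MAX if amax > 0 else 1.0
    return scale, [round_to_e2m1(v / scale) for v in block]


def dequantize_block(scale, codes):
    """Reconstruct approximate values from a block's scale and codes."""
    return [scale * c for c in codes]


def quantize(values):
    """Split values into 16-element blocks and quantize each independently."""
    return [quantize_block(values[i:i + BLOCK_SIZE])
            for i in range(0, len(values), BLOCK_SIZE)]


if __name__ == "__main__":
    random.seed(0)
    weights = [random.gauss(0.0, 0.02) for _ in range(64)]
    blocks = quantize(weights)
    restored = [x for scale, codes in blocks for x in dequantize_block(scale, codes)]
    worst = max(abs(a - b) for a, b in zip(weights, restored))
    print(f"{len(blocks)} blocks, worst-case absolute error {worst:.5f}")
```

Because each block of 16 values gets its own scale, an outlier only coarsens the precision of its own block rather than the whole tensor, which is the motivation for fine-grained block scaling.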

In general, 4-bit floating point reduces both computational overhead and model size. Models are often trained using wider floating-point encodings such as FP16, FP8, BFLOAT16, and BFLOAT8, then quantized to a narrower format for deployment.
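The size savings are easy to quantify. The sketch below estimates weight storage for a hypothetical 8-billion-parameter model. It counts weights only (no activations or KV cache) and, for NVFP4, assumes one 8-bit scale per 16 values while ignoring the single per-tensor scale.

```python
# Estimated weight storage for a hypothetical 8-billion-parameter model.
# Counts weights only; activations, KV cache, and runtime overhead are ignored.
NUM_PARAMS = 8_000_000_000

BITS_PER_PARAM = {
    "FP16 / BFLOAT16": 16.0,
    "FP8": 8.0,
    "FP4 elements only": 4.0,
    # Assumption: one 8-bit block scale shared by 16 values adds 0.5 bit per value.
    "NVFP4 with block scales": 4.0 + 8.0 / 16,
}


def weight_gigabytes(num_params: int, bits_per_param: float) -> float:
    """Convert a parameter count and bit width to decimal gigabytes."""
    return num_params * bits_per_param / 8 / 1e9


if __name__ == "__main__":
    for fmt, bits in BITS_PER_PARAM.items():
        print(f"{fmt:>24}: {weight_gigabytes(NUM_PARAMS, bits):5.1f} GB")
```

Halving the bit width halves the weight footprint, so moving from FP16 to a 4-bit format cuts storage roughly fourfold, minus the small overhead of the scale factors.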

Integer formats such as INT4 and INT8 also yield more compact and efficient artificial-intelligence (AI) models, but floating point provides a wider dynamic range. This typically improves the accuracy of the large language models (LLMs) and vision language models (VLMs) targeted by Jetson Thor. Despite its compact size, Jetson Thor can run LLMs and VLMs that have billions of parameters and were trained on trillions of tokens.
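To see what "wider range" means at 4 bits, the sketch below decodes all 16 bit patterns of the E2M1 layout (1 sign bit, 2 exponent bits with a bias of 1, 1 mantissa bit) and of a two's-complement INT4, then compares the ratio between the largest and smallest positive values. Because both formats are normally paired with a scale factor, that ratio, rather than the absolute maximum, determines how wide a spread of magnitudes one scale can cover. The function names are illustrative.

```python
def decode_e2m1(code: int) -> float:
    """Decode a 4-bit E2M1 pattern: 1 sign bit, 2 exponent bits (bias 1), 1 mantissa bit."""
    sign = -1.0 if code & 0b1000 else 1.0
    exp = (code >> 1) & 0b11
    mant = code & 0b1
    if exp == 0:
        mag = mant * 0.5  # subnormal: no implicit leading 1
    else:
        mag = 2.0 ** (exp - 1) * (1 + mant / 2)
    return sign * mag


def decode_int4(code: int) -> int:
    """Decode a 4-bit two's-complement integer."""
    return code - 16 if code & 0b1000 else code


def dynamic_range(values) -> float:
    """Ratio of the largest to the smallest positive representable value."""
    positives = [v for v in values if v > 0]
    return max(positives) / min(positives)


# +0 and -0 collapse to a single entry in the set, leaving 15 distinct FP4 values.
fp4 = sorted({decode_e2m1(c) for c in range(16)})
int4 = sorted({decode_int4(c) for c in range(16)})

if __name__ == "__main__":
    print("FP4 (E2M1):", fp4)
    print("INT4:      ", int4)
    print("Dynamic range FP4 vs INT4:", dynamic_range(fp4), dynamic_range(int4))
```

E2M1 spans a 12:1 ratio of positive magnitudes versus 7:1 for INT4, at the cost of coarse steps near its maximum (4 to 6) and fine steps near zero.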

Where Will Jetson Thor Be Used?

The NVIDIA DRIVE AGX Thor obviously focuses on the automotive space, including self-driving cars. Jetson Thor will be used in a range of applications that need to run LLMs and VLMs at the edge, where connectivity to the cloud may be unavailable or intermittent. It can handle very large models fed by many high-bandwidth inputs.

Autonomous robots are another area where Jetson Thor will find homes. Also in the mix is video security. Many non-video-related AI applications can take advantage of Jetson Thor’s compute power and AI acceleration, too.

The advantage for developers is that the rest of the Jetson family, such as the Jetson Nano, can also host AI applications, depending on the performance, power, and storage requirements and limitations. Jetson Thor's less powerful siblings won't be able to keep up as input speeds and channel counts increase, but the programming environment remains the same. This is the key to NVIDIA's success: having the biggest or smallest solution alone is insufficient to garner wide developer support.

About the Author

William G. Wong

Senior Content Director - Electronic Design and Microwaves & RF

I am Editor of Electronic Design focusing on embedded, software, and systems. As Senior Content Director, I also manage Microwaves & RF and I work with a great team of editors to provide engineers, programmers, developers and technical managers with interesting and useful articles and videos on a regular basis. Check out our free newsletters to see the latest content.

You can send press releases for new products for possible coverage on the website. I am also interested in receiving contributed articles for publishing on our website. Use our template and send to me along with a signed release form.

Check out my blog, AltEmbedded on Electronic Design, as well as my latest articles on this site.

You can visit my social media via these links:

- AltEmbedded on Electronic Design

- Bill Wong on Facebook

- @AltEmbedded on Twitter

- Bill Wong on LinkedIn

I earned a Bachelor of Electrical Engineering at the Georgia Institute of Technology and a Masters in Computer Science from Rutgers University. I still do a bit of programming using everything from C and C++ to Rust and Ada/SPARK. I do a bit of PHP programming for Drupal websites. I have posted a few Drupal modules.

I still get hands-on with software and electronic hardware. Some of this can be found in our Kit Close-Up video series. You can also see me in many of our TechXchange Talk videos. I am interested in a range of projects, from robotics to artificial intelligence.