Explainable AI for Anomaly Detection

This article is part of the TechXchange: AI on the Edge.

What you’ll learn:

- What is explainable AI (XAI)?

- What are some of the use cases for XAI?

- What are the technology requirements for implementing XAI?

Anomaly detection is the process of identifying when something deviates from what is usual and expected. If an anomaly can be detected early enough, corrective action can be taken to avoid serious consequences. As children, many of us played the game of spotting the oddities in a cleverly composed picture. That is anomaly detection at play.

Engineers, scientists, and technologists have historically counted on anomaly detection to prevent industrial accidents, stop financial fraud, intervene early to address health risks, etc. Traditionally, anomaly-detection systems have relied on statistical techniques, predefined rules, and/or human expertise. But these approaches have their limitations in terms of scalability, adaptability, and accuracy.

The number of real-life, high-value use cases for AI anomaly detection has grown substantially over the years and is expected to keep rising. Advances in artificial intelligence (AI) are revolutionizing the field of anomaly detection. The result is improved accuracy, faster detection, fewer false positives, better scalability, and greater cost-effectiveness. This article identifies what's needed to implement such solutions and touches on some use cases for illustrative purposes.

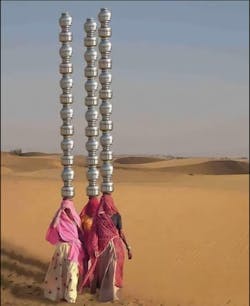

These days, many of the anomalies we come across are not mere happenstance but are created by humans with malicious intent. For example, social media is rife with fake and deepfake images and videos, made possible by advanced graphics and video production and manipulation technologies. Let's take a simple example and play the game of spotting the oddities in a modern-day picture found on social media.

Study the figure below carefully and note down things that are anomalous.

Will traditional anomaly-detection techniques work on this picture? Maybe. Knowledge of the place referenced in the caption, common sense, an understanding of basic physics, a sense of what is practical, and so on could help identify the anomalies in the picture. Based on that, one could declare the picture genuine or fake.

However, that's not enough for others to believe your conclusion. You need to be able to explain how you came to it. This is the explainability part, and it's essential.

What is Explainable Artificial Intelligence (XAI)?

XAI is a subset of AI that emphasizes the transparency and interpretability of machine-learning models. With XAI, the output or decision of the AI model can be explained and understood by humans, making it easier to trust and utilize the results.

Anomaly detection using XAI can help identify and understand the cause of anomalies, leading to better countermeasure decision-making and improved system performance. The key benefits of XAI for anomaly detection are its ability to handle complex datasets, improve accuracy, and reduce false positives and false negatives.
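The following minimal Python sketch shows what "identifying the cause" of an anomaly can look like. It is not an XAI model. Instead, it uses a simple per-feature z-score detector fitted on normal data. The detector flags a sample and ranks the features by how far each one deviates from its baseline, so the top-ranked feature serves as the explanation. The feature names and readings are hypothetical.

```python
import statistics

def fit_baseline(normal_samples):
    """Per-feature (mean, std) computed from samples known to be normal."""
    columns = zip(*normal_samples)
    # Fall back to 1.0 when a feature never varies, to avoid dividing by zero.
    return [(statistics.mean(c), statistics.pstdev(c) or 1.0) for c in columns]

def detect_and_explain(sample, baseline, names, threshold=3.0):
    """Flag the sample if any feature is more than `threshold` std devs from normal,
    and return all features ranked by their contribution (|z-score|)."""
    z = [(x - mu) / sd for x, (mu, sd) in zip(sample, baseline)]
    ranked = sorted(zip(names, z), key=lambda item: abs(item[1]), reverse=True)
    return abs(ranked[0][1]) > threshold, ranked

# Hypothetical machine telemetry: temperature (°C), vibration (mm/s), current (A).
names = ["temperature_c", "vibration_mm_s", "current_a"]
normal = [[70.0, 1.0, 40.0], [72.0, 1.1, 41.0], [71.0, 0.9, 39.0], [69.0, 1.0, 40.0]]
baseline = fit_baseline(normal)
is_anomaly, ranked = detect_and_explain([71.0, 2.5, 40.0], baseline, names)
# ranked[0] names the feature that explains the alert (here, vibration).
```

A trained model would replace the z-scores in practice, but the output has the same shape: a verdict plus a ranked list of reasons.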

What are the Different Use Cases for XAI?

AI anomaly-detection systems may make decisions that significantly affect individuals and society at large. Many of these use cases are edge AI applications related to security, safety, production-line longevity, and customer-friendly service.

Furthermore, bottom-line decisions delivered without reasoning are of little use in applications that may, in the future, extend into the judicial system and medical prognosis services. XAI provides a way for systems to illustrate their decision-making process, as well as to learn and continually improve for the benefit of industry and society.

Use Case: Access Control (Facial Recognition)

Authentication by facial-recognition applications is widely used for access control purposes and relies on computer vision and AI. While a system may be very good at detecting faces, many unexpected factors may cause the system to fail to recognize a face.

Because of the application's purpose, it is tuned to prevent false positives and to alert if a malicious breach attempt is taking place. But for a legitimate user who expects to be recognized, a rejection is very annoying, to say the least. Perhaps the application ran into difficulty near the mouth or around the eyes. XAI can communicate this, so the user can retry after removing a mask or glasses.
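One way to produce this kind of region-level explanation is to compare per-region face embeddings between the enrolled template and the live capture, then turn the weakest regions into user-facing hints. The Python sketch below assumes such per-region embeddings are already available from some upstream model. The region names, vectors, threshold, and hint text are all hypothetical.

```python
import math

# Hypothetical mapping from a face region to a user-facing hint.
HINTS = {
    "eyes": "Glasses may be blocking the eyes; remove them and retry.",
    "mouth": "A mask may be covering the mouth; remove it and retry.",
    "forehead": "A hat or hair may be covering the forehead; adjust and retry.",
}

def cosine(a, b):
    """Cosine similarity of two equal-length, non-zero vectors."""
    dot = sum(x * y for x, y in zip(a, b))
    return dot / (math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b)))

def explain_rejection(enrolled, probe, match_threshold=0.8):
    """Score each region and return hints for regions that fail to match.

    enrolled, probe: dicts mapping region name -> embedding (assumed format).
    Hints are ordered from the worst-matching region to the best.
    """
    scores = {r: cosine(enrolled[r], probe[r]) for r in enrolled}
    weak = sorted((r for r, s in scores.items() if s < match_threshold), key=scores.get)
    return scores, [HINTS.get(r, f"Region '{r}' did not match.") for r in weak]

enrolled = {"eyes": [0.9, 0.1, 0.2], "mouth": [0.2, 0.8, 0.1], "forehead": [0.3, 0.3, 0.9]}
probe = {"eyes": [0.88, 0.12, 0.21], "mouth": [0.9, 0.0, 0.4], "forehead": [0.31, 0.29, 0.88]}
scores, hints = explain_rejection(enrolled, probe)   # only the mouth region fails
```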

AI systems are learning-oriented and count on feedback to continually improve their algorithms. The system may be able to improve itself and recognize this user the next time around, even with the face partially occluded. At the same time, XAI enables the system to distinguish at all times between a recognition failure and an intentional breach attempt, and to alert accordingly.

Use Case: Autonomous Driving TSR (Traffic Sign Recognition)

Autonomous driving uses computer vision and AI to recognize speed limits and act accordingly. What if a malicious actor or natural deterioration were to alter a sign, changing it from, say, 60 mph to 600 mph, or to 80 mph? This use case can be more challenging than the facial-recognition one.

The 600-mph sign could easily be dismissed as tampered, since no road vehicle today comes anywhere close to 600 mph. But 80 mph is easily achievable by many vehicles, and it's a legitimate speed limit on many roads.

However, if the sign were fake, acting on it might be not only illegal but also dangerous, given the particular road conditions. XAI could assess the validity of a sign, attach a probability level to its conclusion, and explain how it arrived at that conclusion. The autonomous-vehicle system could then take a safe action based on that input.
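A verdict with a confidence level and reasons can be produced by combining the sign classifier's confidence with independent plausibility checks. The Python sketch below is a hypothetical rule-based illustration, not a production TSR design. The map-data input, the 85 mph plausibility ceiling, the penalty factors, and the 0.5 decision threshold are all assumptions. The resulting validity score is a heuristic, not a calibrated probability.

```python
from dataclasses import dataclass, field

@dataclass
class SignAssessment:
    reading_mph: int
    validity: float                      # heuristic score in [0, 1]
    reasons: list = field(default_factory=list)

def assess_speed_sign(reading_mph, classifier_conf, map_limit_mph=None,
                      plausible_max_mph=85):
    """Hypothetical plausibility check that records why validity was lowered."""
    validity = classifier_conf
    reasons = [f"classifier confidence {classifier_conf:.2f}"]
    if reading_mph > plausible_max_mph:
        validity *= 0.01                 # assumed penalty for an implausible value
        reasons.append(f"{reading_mph} mph exceeds the {plausible_max_mph} mph plausible maximum")
    if map_limit_mph is not None and reading_mph != map_limit_mph:
        validity *= 0.3                  # assumed penalty for disagreeing with map data
        reasons.append(f"map data lists {map_limit_mph} mph for this segment")
    return SignAssessment(reading_mph, validity, reasons)

def choose_speed_limit(assessment, current_limit_mph, threshold=0.5):
    """Follow the sign only if it looks valid; otherwise keep the more conservative limit."""
    if assessment.validity >= threshold:
        return assessment.reading_mph
    return min(current_limit_mph, assessment.reading_mph)

suspect = assess_speed_sign(80, classifier_conf=0.95, map_limit_mph=60)
limit = choose_speed_limit(suspect, current_limit_mph=60)   # stays at 60 mph
```

The `reasons` list is the explanation part: it can be logged or passed to the driving policy together with the score.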

Use Case: Monitoring the Health of a Factory Machine

A factory machine that stops working unexpectedly and abruptly can be very costly for a business. To avoid such a situation, sensors placed on the machine can continuously capture the sounds it emits, while AI network models analyze that audio to detect abnormalities.

If unusual sounds or irregular frequencies that may indicate developing mechanical problems are detected, a technician is alerted to take preventive action before the machine breaks down abruptly.
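The Python sketch below illustrates the alerting logic. It uses a simple statistical stand-in for the AI models. First it learns the typical energy in a few frequency bands from recordings of a healthy machine. Then it reports which bands in a new recording deviate strongly. Naming the offending band is a basic form of explanation for the technician. The band edges, sample rate, and z-score threshold are assumptions, and the audio is synthetic.

```python
import numpy as np

SAMPLE_RATE = 8000                                 # Hz (assumed)
BANDS = [(0, 500), (500, 1500), (1500, 4000)]      # Hz (assumed band edges)

def band_energies(frame):
    """Spectral energy of a Hann-windowed frame in each band of BANDS."""
    spectrum = np.abs(np.fft.rfft(frame * np.hanning(len(frame)))) ** 2
    freqs = np.fft.rfftfreq(len(frame), d=1.0 / SAMPLE_RATE)
    return np.array([spectrum[(freqs >= lo) & (freqs < hi)].sum() for lo, hi in BANDS])

def fit_baseline(healthy_frames):
    """Mean and std of log band energies over recordings of a healthy machine."""
    logs = np.log10([band_energies(f) + 1e-12 for f in healthy_frames])
    return logs.mean(axis=0), logs.std(axis=0) + 1e-6

def abnormal_bands(frame, baseline, z_threshold=4.0):
    """List (band, z-score) for every band that deviates from the healthy baseline."""
    mean, std = baseline
    z = (np.log10(band_energies(frame) + 1e-12) - mean) / std
    return [(BANDS[i], float(z[i])) for i in np.flatnonzero(np.abs(z) > z_threshold)]

# Synthetic demo: a 120 Hz hum when healthy; a 3 kHz whine appears when faulty.
rng = np.random.default_rng(0)
t = np.arange(2048) / SAMPLE_RATE
hum = np.sin(2 * np.pi * 120 * t)
baseline = fit_baseline([hum + 0.05 * rng.standard_normal(t.size) for _ in range(20)])
faulty = hum + 0.5 * np.sin(2 * np.pi * 3000 * t) + 0.05 * rng.standard_normal(t.size)
alerts = abnormal_bands(faulty, baseline)   # expected to include the (1500, 4000) band
```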

Futuristic Use Case: Enabling Legal Hearings and Sentencing

There’s talk about using AI to analyze legal cases and issue verdicts. By analyzing legal documents and historical data, AI can identify anomalies in the data that may be relevant to the case.

With human judges, the most important element is the reasoning set out in the written opinion issued after the case has been heard. By using XAI, the system can explain the anomalies it identified, which can lead to better decision-making and improved overall system performance. For example, XAI can help identify why a particular legal precedent wasn't used in a case.

What are the Technology Requirements for Implementing XAI?

Most XAI-driven anomaly-detection use cases require excellent computer vision, sound analysis, sensor-fusion capabilities, and multiple AI network models. In the facial-recognition use case, for example, the main AI network model detects and authenticates the specific individuals it's trained to recognize. In parallel, a relatively lean secondary network runs only over the minimal region of interest (ROI) that the main network detected and authenticated as positive.

The purpose of the secondary network is to run anomaly detection and a genuineness check to prevent a system breach through deepfakes and similar attacks. This processing overhead must be optimized to minimize power consumption. Therefore, the secondary network runs at mixed precision, only on selected frames and on the ROI, and uses only selected input/output layers of the main network.

This flow differs somewhat from traditional use cases, so it requires a flexible memory architecture and adaptive data flow between the different AI processor blocks. When authentication fails or a genuineness issue is detected, a larger XAI model steps in. It identifies what caused the failure and passes that information to the application layer for further action.
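The control flow described above can be sketched in Python. The three model functions are hypothetical placeholders passed in as callables, so the sketch shows only the orchestration. The lean genuineness check runs on the ROI of every `check_every`-th frame, and its last verdict is reused in between as one possible way to save power. The larger XAI model is invoked only when authentication or the genuineness check fails.

```python
from dataclasses import dataclass
from typing import Optional

@dataclass
class FrameResult:
    granted: bool
    explanation: Optional[str] = None   # filled in only when access is denied

def run_access_pipeline(frames, main_net, genuineness_net, xai_net, check_every=3):
    """Hypothetical orchestration of the main, secondary, and XAI networks.

    main_net(frame) -> (roi, authenticated): detect and authenticate the face.
    genuineness_net(roi) -> bool: lean anti-spoofing check on the ROI only.
    xai_net(frame, roi) -> str: larger model that explains a failure.
    """
    results = []
    genuine = True  # last genuineness verdict, reused between checked frames
    for i, frame in enumerate(frames):
        roi, authenticated = main_net(frame)
        if authenticated and i % check_every == 0:
            genuine = genuineness_net(roi)
        if authenticated and genuine:
            results.append(FrameResult(granted=True))
        else:
            # Only now pay for the larger model, and pass its finding upward.
            results.append(FrameResult(granted=False, explanation=xai_net(frame, roi)))
    return results
```

Reusing the last verdict keeps the secondary network's duty cycle low. The trade-off is that a spoof can go unnoticed for up to `check_every - 1` frames.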

How is XAI Implemented?

Most AI anomaly-detection use cases are edge AI applications. Anomalies need to be detected quickly, and their cause identified and reported, so that appropriate corrective action can be taken in real time.

An AI processor that can run multiple AI networks in parallel, and also perform computer-vision analysis within the processor itself, is key. Depending on the use case and application, part of an AI network may have to be run first, followed by statistical analysis of the subject image or sensor data. This may be followed by manipulation of the image. Then the rest of the first network, or another AI network, is run to explain the reason for the anomaly.
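The sequence of running part of a network, analyzing, manipulating the image, and running the rest can be illustrated with an occlusion-style explanation. In the NumPy sketch below, a 2x2 average pooling stands in for the first part of a network, and a thresholded sum stands in for the remaining layers. Both are toy assumptions, as is the choice of occlusion as the explanation technique. The sketch locates the most atypical cell in the intermediate feature map and occludes the matching image patch. It then re-runs the network to measure how much of the anomaly score that patch accounts for.

```python
import numpy as np

def first_layers(image):
    """Toy stand-in for the early layers: 2x2 average pooling (stride 2)."""
    h, w = image.shape[0] // 2 * 2, image.shape[1] // 2 * 2
    return image[:h, :w].reshape(h // 2, 2, w // 2, 2).mean(axis=(1, 3))

def remaining_layers(features):
    """Toy stand-in for the rest of the network: a scalar anomaly score."""
    return float(np.maximum(features - 0.5, 0.0).sum())

def explain_by_occlusion(image):
    # 1. Run the first part of the network and keep the intermediate features.
    features = first_layers(image)
    score = remaining_layers(features)
    # 2. Statistical analysis: find the feature cell that deviates most from the rest.
    z = (features - features.mean()) / (features.std() + 1e-9)
    r, c = np.unravel_index(np.argmax(np.abs(z)), z.shape)
    # 3. Manipulate the image: replace the 2x2 input patch behind that cell
    #    with the image median.
    occluded = image.copy()
    occluded[2 * r:2 * r + 2, 2 * c:2 * c + 2] = np.median(image)
    # 4. Re-run the network; the score drop says how much that patch explains.
    score_drop = score - remaining_layers(first_layers(occluded))
    return (int(r), int(c)), score, score_drop

image = np.full((8, 8), 0.2)
image[4:6, 2:4] = 1.0                          # a bright, anomalous patch
cell, score, drop = explain_by_occlusion(image)   # here the drop equals the full score
```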

Thus, the implementation of XAI requires a powerful processor that offers the following:

- High flexibility to implement diverse XAI/ML processing flows: memory architecture, multi-engine structure.

- High-performance computing capabilities: support for multiple data types including low-precision arithmetic operations; highly parallel processing capabilities; advanced quantization capabilities.

- Support for the latest neural-network architectures.

- High utilization for efficient power consumption.

- Built-in computer-vision/DSP processing capabilities.

- Support for mixed-precision inference on data and weights.

CEVA Processor for Implementing XAI

One example of a platform designed to run XAI networks is CEVA's NeuPro-M AI processor. The NeuPro-M processor core is available in configurations of up to eight engines, each with its own vision DSP. As a result, the application doesn't need to reach for an external DSP, GPU, or CPU for image processing.

Depending on the application's needs, a configuration with the appropriate number of engines can be selected so that the required number of different AI networks can run. The engines support various neural-network architectures and offer quantization capabilities.

An application can implement some network layers in 16-bit precision, some in 8-bit, and so on. In certain situations, even 2-bit implementations are possible. This flexibility saves power and area and also accelerates performance, all of which are critical for edge AI use cases. NeuPro-M also supports mixed precision between data and weights.
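The following generic NumPy sketch shows why lower bit widths trade accuracy for power and area. It applies symmetric per-tensor integer quantization with a different bit width for each layer. It does not use any CEVA tool or API. The layer names, the precision plan, and the use of a ternary {-1, 0, +1} code for 2-bit are assumptions made for illustration.

```python
import numpy as np

def quantize_symmetric(x, bits):
    """Uniform symmetric per-tensor quantization to signed `bits`-bit integers."""
    qmax = 2 ** (bits - 1) - 1            # 32767 for 16-bit, 127 for 8-bit, 1 for 2-bit
    scale = np.max(np.abs(x)) / qmax if np.any(x) else 1.0
    q = np.clip(np.round(x / scale), -qmax, qmax).astype(np.int32)
    return q, scale

def dequantize(q, scale):
    """Map integer codes back to real values."""
    return q * scale

# Hypothetical per-layer precision plan. Real toolchains typically pick bit widths
# from each layer's measured accuracy sensitivity.
rng = np.random.default_rng(0)
layers = {name: rng.standard_normal(512) for name in ("conv1", "conv2", "fc")}
plan = {"conv1": 16, "conv2": 8, "fc": 2}

report = {}
for name, w in layers.items():
    q, scale = quantize_symmetric(w, plan[name])
    storage_bits = plan[name] * w.size
    max_error = float(np.abs(dequantize(q, scale) - w).max())
    report[name] = (storage_bits, max_error)
```

Each step down in bit width cuts storage and arithmetic cost proportionally, while the worst-case rounding error grows with the quantization step `scale`.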


About the Author

Gil Abraham

Business Development Director and Product Marketing, CEVA Artificial Intelligence and Vision

Gil Abraham graduated from Ben-Gurion University with a B.Sc. in electrical engineering. Over the last 20 years, Gil has worked at several international corporations, where he held various R&D positions ranging from real-time embedded programming to system architect. He was an integral part of the successful imaging product, marketing, and BD groups at Zoran, a world leader in imaging SoCs, and at Corephotonics, which developed innovative computational camera technologies.