Can We Trust AI in Circuit Design and Embedded Systems?

What you'll learn:

- How AI is being used as a tool to design circuits and embedded systems, and why it struggles to make them safe and secure.

- How timing unpredictability, GPU‑vs.‑FPGA tradeoffs, and security holes make current AI tools risky for safety‑critical embedded systems.

- Why explainable AI, hypervisor isolation, and human oversight remain critical safeguards until smarter, safer models are produced.

Artificial intelligence permeates just about every industry these days. It’s being adopted as a tool for applications that range from automating design processes to code generation, and utilization is expected to grow exponentially. Recent reports show that AI is eliminating thousands of tech jobs from major companies such as Duolingo, Google, Intel, Microsoft, NVIDIA, TikTok, and hundreds more.

While the utilization of AI has been described as a beneficial tool for engineers and developers, the latest purge across the tech sector raises the question of whether those AI tools are good enough to replace workers. Can we trust AI in the design or coding processes?

AI assistants such as ChatGPT, Copilot, and Gemini are known to be inaccurate, with 51% of responses having significant problems, including 19% that contain factual errors. Similar accuracy problems have surfaced in AI search tools, translation apps, and image recognition, as well as in AI systems used in healthcare and even the criminal justice system.

So, where does that leave AI in the tech sector, which has relied heavily on human innovation and skill? Engineers and developers have used AI-based electronic design automation (EDA) tools for PCB and circuit design. However, these tools still fall short of human capabilities: the designs and the decisions behind them are complex, and the tools lack an engineer's judgment.

AI: A Powerful But Concerning Tool

A recent paper from the Department of Excellence in Robotics & AI explores the trustworthiness of AI in safety-critical real-time embedded systems, especially those used in autonomous vehicles, robotics, and medical devices. The researchers state that while AI, in this case deep neural networks, provides powerful capabilities, it raises serious concerns about timing unpredictability, security vulnerabilities, and a lack of transparency in decision-making, all of which they feel must be addressed before deployment.

With that said, modern embedded platforms are continually evolving to take advantage of heterogeneous architectures, which are equipped with integrated CPUs, GPUs, FPGAs, and specialized accelerators to meet the increasing demands of AI workloads. Still, managing resources (such as processes and threads), maintaining timing predictability, and optimizing tasks remain significant hurdles to overcome.

GPUs, which are the usual target of complex AI frameworks, suffer from non-preemptive scheduling, high power consumption, and large size, all of which limit their use in embedded applications. FPGAs, on the other hand, offer increased predictability, lower power consumption, and smaller form factors. But they also come with tradeoffs, including programming difficulties and limited resources.
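To see why non-preemptive scheduling hurts timing predictability, consider a minimal Python sketch. It assumes a single accelerator that is already running a long inference kernel when an urgent control job arrives. The function name and all timing values are hypothetical, chosen only for illustration; it doesn't model any particular GPU or driver.

```python
def response_time(arrival, exec_time, busy_until, preemptive):
    """Response time (ms) of an urgent job on a shared accelerator.

    arrival     -- time the urgent job is released
    exec_time   -- how long the urgent job runs once it starts
    busy_until  -- time at which the already-running long job would finish
    preemptive  -- True if the urgent job may interrupt the running job
    """
    # Without preemption, the urgent job has to wait for the running job.
    start = arrival if preemptive else max(arrival, busy_until)
    finish = start + exec_time
    return finish - arrival


# Hypothetical numbers: a 40-ms inference kernel started at t = 0,
# and a 2-ms control job arrives at t = 1 with a 10-ms deadline.
DEADLINE_MS = 10
for preemptive in (True, False):
    r = response_time(arrival=1, exec_time=2, busy_until=40, preemptive=preemptive)
    status = "meets" if r <= DEADLINE_MS else "misses"
    print(f"preemptive={preemptive}: response {r} ms, {status} deadline")
```

With these made-up numbers, the urgent job finishes in 2 ms when it can preempt but needs 41 ms when it has to wait, well past the 10-ms deadline. The worst case depends on the longest kernel that might be running, which is exactly what makes the timing hard to bound.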

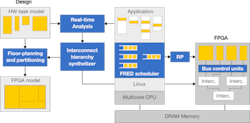

Recent advances, including FPGA virtualization techniques such as the FRED framework (Fig. 1), help mitigate some of those challenges by enabling dynamic partial reconfiguration and better resource management.

What About Security and Reliability?

Security is another major reason why AI can’t yet be trusted in designing circuits and embedded systems. Most AI systems today are built on top of general-purpose operating systems, such as Linux, which are vulnerable to cyber threats. The 2015 Jeep Cherokee hack is a case in point: Hackers Charlie Miller and Chris Valasek managed to take control of the vehicle’s wipers, radio, steering, and engine, bringing the vehicle to a complete stop.

To avoid this, some researchers have turned to hypervisor-based architectures, including commercial off-the-shelf (COTS) hypervisors and real-time (RT) hypervisors (Fig. 2). These architectures partition AI and safety-critical functions into separate, isolated domains and use built-in hardware security features, such as Arm’s TrustZone, so that a failure or compromise in one domain can’t take down the other.
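One small piece of that partitioning idea can be sketched in Python: checking that the memory regions assigned to each domain never overlap. This is only a configuration sanity check under assumed names and addresses (`ai_linux`, `safety_rtos`, and the region values are hypothetical). A real hypervisor enforces isolation in hardware, for example through the memory-management unit, not through a script like this.

```python
def overlaps(a, b):
    """True if two (start, size) memory regions share at least one byte."""
    return a[0] < b[0] + b[1] and b[0] < a[0] + a[1]


def find_conflicts(domains):
    """Return every pair of regions from different domains that overlap.

    domains -- dict mapping a domain name to a list of (start, size) tuples
    """
    conflicts = []
    names = sorted(domains)
    for i, first in enumerate(names):
        for second in names[i + 1:]:
            for region_a in domains[first]:
                for region_b in domains[second]:
                    if overlaps(region_a, region_b):
                        conflicts.append((first, second, region_a, region_b))
    return conflicts


# Hypothetical layout: the AI stack and the safety-critical RTOS
# each get their own slice of RAM.
good = {
    "ai_linux": [(0x8000_0000, 0x4000_0000)],
    "safety_rtos": [(0xC000_0000, 0x1000_0000)],
}
# Same layout, but the RTOS region now starts inside the AI domain's RAM.
bad = {
    "ai_linux": [(0x8000_0000, 0x4000_0000)],
    "safety_rtos": [(0xB000_0000, 0x2000_0000)],
}

print(len(find_conflicts(good)), len(find_conflicts(bad)))
```

The first layout reports no conflicts, and the second reports one. A check like this catches configuration mistakes early, but it's the hypervisor and the hardware that actually keep the domains apart at runtime.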

Of course, there’s the issue of AI reliability itself. Deep-learning systems can be easily misled by incorrect inputs, by adversarial examples (subtle data tweaks crafted to deceive the model), or by out-of-distribution (OOD) inputs that the AI has never encountered before. Any of these can lead a system to make incorrect decisions that could result in disaster. When it comes to embedded systems, engineers need to know why AI made certain decisions, not just what it decided.
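Both failure modes can be illustrated with a toy model. The Python sketch below uses a three-input linear classifier rather than a deep network, and its weights, training statistics, and inputs are all hypothetical. It nudges each input by a small amount in the direction that hurts the model most (a sign-of-gradient step, which for a linear model is just the sign of each weight), and it applies a simple z-score test as a stand-in for an OOD detector.

```python
# Toy linear classifier; the weights and inputs are hypothetical.
WEIGHTS = [2.0, -3.0, 1.0]

# Assumed per-feature statistics of the training data, used by the OOD check.
TRAIN_MEAN = [0.5, 0.2, 0.1]
TRAIN_STD = [0.2, 0.2, 0.2]


def score(x):
    return sum(w * xi for w, xi in zip(WEIGHTS, x))


def predict(x):
    return 1 if score(x) > 0 else 0


def adversarial(x, eps):
    """Shift every feature by eps toward the opposite class."""
    push = -1.0 if predict(x) == 1 else 1.0
    return [xi + push * eps * (1.0 if w > 0 else -1.0)
            for xi, w in zip(x, WEIGHTS)]


def is_out_of_distribution(x, z_limit=3.0):
    """Flag inputs with any feature more than z_limit deviations from training."""
    return any(abs(xi - m) / s > z_limit
               for xi, m, s in zip(x, TRAIN_MEAN, TRAIN_STD))


clean = [0.5, 0.2, 0.1]
tweaked = adversarial(clean, eps=0.1)   # each feature moves by only 0.1
unfamiliar = [5.0, 0.2, 0.1]            # far outside the training range

print("clean:", predict(clean), "tweaked:", predict(tweaked))
print("tweaked flagged:", is_out_of_distribution(tweaked))
print("unfamiliar flagged:", is_out_of_distribution(unfamiliar))
```

In this toy case, the tweaked input flips the prediction from 1 to 0 yet passes the z-score check, while the unfamiliar input is flagged. That's the uncomfortable part: a simple range check can catch inputs that look nothing like the training data, but it won't necessarily catch a small, deliberately crafted perturbation.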

Solutions such as explainable AI (XAI) can help shed light on otherwise black-box incidents and behaviors, and they’re capable of revealing hidden biases in training data. That makes XAI a valuable addition to any toolbox, but it isn’t a catch-all solution, and neither are any of the other measures. However, when used together, they could help train AI on how to design circuits and embedded systems safely and with utmost transparency.
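As a rough idea of what an explanation can look like, the Python sketch below uses occlusion, one of the simpler attribution techniques: Replace one input at a time with a neutral baseline and record how much the model's output changes. The model, inputs, and baseline here are hypothetical stand-ins, and the technique is picked for illustration rather than taken from the paper.

```python
def occlusion_attribution(model, x, baseline):
    """Score each input by how much the output drops when it is occluded.

    model    -- any callable that maps a list of inputs to a number
    x        -- the input being explained
    baseline -- neutral values that stand in for "feature absent"
    """
    full_output = model(x)
    attributions = []
    for i in range(len(x)):
        occluded = list(x)
        occluded[i] = baseline[i]          # hide one feature at a time
        attributions.append(full_output - model(occluded))
    return attributions


# Hypothetical stand-in for a trained model.
def toy_model(x):
    return 2 * x[0] - 3 * x[1] + x[2]


scores = occlusion_attribution(toy_model, x=[1, 1, 1], baseline=[0, 0, 0])
print(scores)  # [2, -3, 1]
```

Here the second input pulls the output down the hardest, which is the kind of insight an engineer needs to judge whether a decision was made for the right reasons. For a deep network, the same loop works, but the scores depend heavily on the choice of baseline, so they should be read as hints rather than proof.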

As any AI researcher will tell you, the technology simply isn’t up to the task yet, not that it would pass any ethics board at any rate. But that could all change in the next decade or two, when more advanced training models, architectures, and security precautions become available.

About the Author

Cabe Atwell

Technology Editor, Electronic Design

Engineer, Machinist, Maker, Writer. A graduate Electrical Engineer actively plying his expertise in the industry and at his company, Gunhead. When not designing/building, he creates a steady torrent of projects and content in the media world. Many of his projects and articles are online at element14 & SolidSmack, industry-focused work at EETimes & EDN, and offbeat articles at Make Magazine. Currently, you can find him hosting webinars and contributing to Electronic Design and Machine Design.

Cabe is an electrical engineer, design consultant, and author with 25 years’ experience. His most recent book is “Essential 555 IC: Design, Configure, and Create Clever Circuits.”

Cabe writes the Engineering on Friday blog on Electronic Design.