Advanced AI SoC Powers Self-Driving Car

What you’ll learn:

- How Ambarella’s system improves radar sensing.

- How the CV3 family addresses automotive applications like self-driving cars.

- What’s inside the CV3 system-on-chip (SoC).

Advanced driver-assistance systems (ADAS) need high-performance computing engines to analyze the array of sensors around the car. The number of sensors and compute requirements continue to grow as self-driving cars put more demands on the hardware and software to provide a safe ride.

The Society of Automotive Engineers (SAE) has specified six levels of driving automation. Level 0 is fully manual, while Level 5 is fully autonomous. Many companies are pushing toward full autonomy, but we’re not quite there yet. Ambarella is one of the companies pushing that envelope.
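
As a quick reference, the six levels can be written down as a small enumeration. This is a minimal sketch: the level names are paraphrased from SAE J3016, and the `SaeLevel` class and `human_must_supervise` helper are hypothetical names introduced only for illustration.

```python
from enum import IntEnum


class SaeLevel(IntEnum):
    """SAE J3016 driving-automation levels (names paraphrased)."""
    NO_AUTOMATION = 0
    DRIVER_ASSISTANCE = 1
    PARTIAL_AUTOMATION = 2
    CONDITIONAL_AUTOMATION = 3
    HIGH_AUTOMATION = 4
    FULL_AUTOMATION = 5


def human_must_supervise(level: SaeLevel) -> bool:
    """At Levels 0 through 2 the human must monitor the road at all times."""
    return level <= SaeLevel.PARTIAL_AUTOMATION


if __name__ == "__main__":
    for level in SaeLevel:
        print(int(level), level.name, human_must_supervise(level))
```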

I recently had a chance to check out Ambarella’s self-driving technology, riding along with Alberto Broggi, General Manager at VisLab srl and Ambarella (watch the video above). They equipped a car with Ambarella’s CV3 chip and custom radar sensors plus additional sensors (Fig. 1). The self-driving software runs on the CV3, which incorporates a bunch of Arm cores and artificial-intelligence (AI) acceleration cores. As with most self-driving solutions today, this is a testbed that requires a live driver at the wheel to take over if necessary.

The CV3 sits in a system housed in the trunk of the car (Fig. 2). The enclosure is much larger than the chip itself because it also holds other boards along with peripheral and network interfaces.

The standard digital cameras and radar sensors ring the car (Fig. 3). These are connected to the automotive-grade CV3. The 4D imaging radar is from Oculii, which was recently acquired by Ambarella.

Oculii’s virtual aperture imaging software enhances the angular resolution of the radar system to provide a 3D point cloud. This is accomplished with a much simpler antenna and transmitter/receiver system than would normally be required for comparable point-cloud resolution. It relies on dynamic waveform generation performed by AI models, and the approach can scale to higher resolution.
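
To see why antenna complexity and angular resolution are normally tied together, a textbook approximation helps: a uniform linear array of N elements spaced d apart resolves roughly λ/(N·d) radians at boresight. The sketch below only illustrates that generic relation, plus the conventional MIMO trick of forming a virtual array from transmitter/receiver pairs. It is not Oculii’s algorithm, which the article does not describe; the function names, the half-wavelength spacing, and the element counts are all assumptions.

```python
import math


def angular_resolution_deg(num_elements: int,
                           spacing_wavelengths: float = 0.5) -> float:
    """Approximate boresight angular resolution of a uniform linear array.

    Uses the rule of thumb theta ~ lambda / (N * d), with the element
    spacing d expressed in wavelengths (0.5 is an assumed default).
    """
    if num_elements < 1 or spacing_wavelengths <= 0:
        raise ValueError("need at least one element and a positive spacing")
    aperture_wavelengths = num_elements * spacing_wavelengths
    return math.degrees(1.0 / aperture_wavelengths)


def mimo_virtual_elements(num_tx: int, num_rx: int) -> int:
    """Conventional MIMO radar: each TX/RX pair acts as one virtual element."""
    return num_tx * num_rx


if __name__ == "__main__":
    # Hypothetical antenna counts, chosen only to show the trend.
    for num_tx, num_rx in [(3, 4), (12, 16)]:
        n = mimo_virtual_elements(num_tx, num_rx)
        print(f"{num_tx} TX x {num_rx} RX -> {n} virtual elements, "
              f"about {angular_resolution_deg(n):.2f} degrees")
```

Under this relation, finer resolution normally means more physical channels, which is the cost a software-enlarged aperture tries to avoid.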

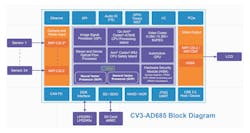

At the heart of the system is Ambarella’s ASIL-D-capable CV3-AD685, which includes a dozen 64-bit Arm Cortex-A78AE cores and an Arm Cortex-R52 for real-time support (Fig. 4). The two dozen MIPI CSI-2 sensors feed the image-signal-processing (ISP) unit. The ISP supports multi-exposure, line-interleaved high-dynamic-range (HDR) inputs. It can handle real-time multi-scale, multi-field-of-view (FoV) generation and includes hardware dewarping engine support.
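
The idea behind multi-exposure HDR can be shown for a single pixel: take the longest exposure that has not clipped and normalize it by its exposure time. This is a simplified sketch of the general technique, not Ambarella’s ISP pipeline; the `merge_exposures` name, the 12-bit saturation value, and the sample readings are assumptions.

```python
from typing import Sequence


def merge_exposures(samples: Sequence[int],
                    exposure_times: Sequence[float],
                    saturation: int = 4095) -> float:
    """Estimate relative scene radiance for one pixel from several exposures.

    Picks the longest exposure whose sample is below the saturation level
    (assumed 12-bit here) and divides that sample by its exposure time.
    """
    if not samples or len(samples) != len(exposure_times):
        raise ValueError("need one exposure time per sample")
    if any(t <= 0 for t in exposure_times):
        raise ValueError("exposure times must be positive")

    best = None  # (sample, exposure_time) of the longest unclipped exposure
    for value, t in zip(samples, exposure_times):
        if value < saturation and (best is None or t > best[1]):
            best = (value, t)

    if best is None:
        # Every exposure clipped: report a lower bound from the shortest one.
        return saturation / min(exposure_times)
    return best[0] / best[1]


if __name__ == "__main__":
    # Hypothetical readings for a bright pixel: the long exposure is clipped.
    print(merge_exposures([4095, 2000, 130], [16.0, 4.0, 0.25]))
```

Long exposures give cleaner shadows and short exposures preserve highlights, so choosing per pixel extends the usable dynamic range.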

There’s also a stereo and dense optical flow processor for advanced video monitoring. It addresses obstacle detection, terrain modeling and ego-motion from monocular video.
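
For the stereo side of this, obstacle distance follows from the standard pinhole relation Z = f·B/d, where f is the focal length in pixels, B is the camera baseline, and d is the disparity between the two views. The sketch below shows that generic relation only; the function name and the camera parameters are hypothetical and not taken from the CV3.

```python
def depth_from_disparity(focal_length_px: float,
                         baseline_m: float,
                         disparity_px: float) -> float:
    """Depth in meters of a point seen by a rectified stereo pair."""
    if disparity_px <= 0:
        raise ValueError("disparity must be positive for a finite depth")
    return focal_length_px * baseline_m / disparity_px


if __name__ == "__main__":
    # Hypothetical rig: 1000-pixel focal length, 30-cm baseline.
    for disparity in (60.0, 15.0, 3.0):
        depth = depth_from_disparity(1000.0, 0.3, disparity)
        print(f"disparity {disparity:5.1f} px -> depth {depth:6.1f} m")
```

Depth is inversely proportional to disparity, so distant obstacles produce small disparities and need accurate, dense matching.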

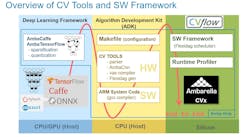

The Neural Vector Processor and a General Vector Processor accelerate a range of machine-learning (ML) models. Together, these make up CVflow, Ambarella’s AI/ML computer-vision processing system (Fig. 5). It specifically targets ADAS and self-driving car software chores.

The GPU is designed to handle surround-view rendering in addition to regular 3D graphics tasks. This enables a single system to handle user interaction as well as control and sensing work.

The chip is partitioned into processing islands: a safety-oriented island takes on ASIL D work, while the other handles ASIL B work, including the video output. Error-correcting code (ECC) protects all memory, including external DRAM, and a central error handling unit (CEHU) provides advanced error management.
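
To illustrate what ECC buys, here is a toy Hamming(7,4) code that corrects any single flipped bit in a 7-bit codeword. It is a teaching sketch only: memory ECC commonly uses wider codes, the article does not say which code the CV3 uses, and the function names are hypothetical.

```python
from typing import List, Sequence, Tuple


def hamming74_encode(data_bits: Sequence[int]) -> List[int]:
    """Encode 4 data bits as [p1, p2, d1, p4, d2, d3, d4]."""
    d1, d2, d3, d4 = data_bits
    p1 = d1 ^ d2 ^ d4  # parity over codeword positions 1, 3, 5, 7
    p2 = d1 ^ d3 ^ d4  # parity over codeword positions 2, 3, 6, 7
    p4 = d2 ^ d3 ^ d4  # parity over codeword positions 4, 5, 6, 7
    return [p1, p2, d1, p4, d2, d3, d4]


def hamming74_decode(codeword: Sequence[int]) -> Tuple[List[int], int]:
    """Return (data_bits, syndrome); a nonzero syndrome is the 1-based
    position of the single bit that was corrected."""
    c = list(codeword)
    s1 = c[0] ^ c[2] ^ c[4] ^ c[6]
    s2 = c[1] ^ c[2] ^ c[5] ^ c[6]
    s4 = c[3] ^ c[4] ^ c[5] ^ c[6]
    syndrome = s1 + 2 * s2 + 4 * s4
    if syndrome:
        c[syndrome - 1] ^= 1  # flip the faulty bit back
    return [c[2], c[4], c[5], c[6]], syndrome


if __name__ == "__main__":
    word = hamming74_encode([1, 0, 1, 1])
    word[4] ^= 1  # simulate a single-bit upset in memory
    print(hamming74_decode(word))
```

The syndrome doubles as an error report, which is the kind of event a central error handler could log or escalate.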

The chip targets a range of applications, including driver monitoring systems (DMS) and multichannel electronic mirrors with blind-spot detection.

About the Author

William G. Wong

Senior Content Director - Electronic Design and Microwaves & RF

I am Editor of Electronic Design focusing on embedded, software, and systems. As Senior Content Director, I also manage Microwaves & RF and I work with a great team of editors to provide engineers, programmers, developers and technical managers with interesting and useful articles and videos on a regular basis. Check out our free newsletters to see the latest content.

I earned a Bachelor of Electrical Engineering at the Georgia Institute of Technology and a Master’s in Computer Science from Rutgers University. I still do a bit of programming using everything from C and C++ to Rust and Ada/SPARK. I do a bit of PHP programming for Drupal websites. I have posted a few Drupal modules.

I still get hands-on time with software and electronic hardware. Some of this can be found on our Kit Close-Up video series. You can also see me on many of our TechXchange Talk videos. I am interested in a range of projects from robotics to artificial intelligence.