The Path to Useful Quantum Computing Needs a QEC Roadmap

This article is part of Electronic Design’s 2024 Technology Forecast series. It's also part of the TechXchange: Basic Quantum Computing.

What you’ll learn:

- Why is error detection so critical to enabling quantum computing to scale?

- What is decoding and how must it progress to address the error correction challenges?

- What lies ahead on the QEC roadmap?

Today’s quantum computers are noisy. They can only perform a few hundred operations before this noise overcomes their quantum bits (qubits) and errors render their calculations useless.

To do something useful with quantum computers, we need closer to one trillion error-free quantum operations (a so-called TeraQuop). We can’t do this with physical improvements to the qubits alone. We also need to perfect a series of tools and technologies called quantum error correction (QEC).

Quantum error correction, effectively, uses many noisy (physical) qubits to build a more reliable (logical) qubit. The more qubits we use, the more reliable (higher fidelity) the logical qubit.
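The trend of "more qubits, higher fidelity" can be illustrated with a deliberately simplified classical analogy: a repetition code decoded by majority vote. This is only a sketch and not a quantum code. It assumes each copy flips independently with the same probability, and the function name `logical_error_rate` is made up for this illustration.

```python
from math import comb


def logical_error_rate(p: float, d: int) -> float:
    """Probability that a majority vote over d noisy copies gives the wrong answer.

    Assumption: each copy flips independently with probability p. This is a
    toy classical repetition code, used only to show the scaling trend.
    """
    if d % 2 == 0:
        raise ValueError("use an odd number of copies so the vote cannot tie")
    # The vote fails when more than half of the copies are flipped.
    return sum(
        comb(d, k) * p**k * (1 - p) ** (d - k)
        for k in range(d // 2 + 1, d + 1)
    )


if __name__ == "__main__":
    for copies in (1, 3, 5, 7, 9):
        rate = logical_error_rate(0.01, copies)
        print(f"copies={copies}  logical error rate={rate:.3e}")
```

In this toy model, adding copies only helps when the per-copy error rate is below one half; real quantum codes have their own, much lower, thresholds.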

This process generates a continuous stream of data (called syndrome data). Therefore, a sophisticated algorithmic process called “decoding” is needed to process this data and keep the qubits running, error-free.

Decoding is one of the core technologies in the wider Quantum Error Correction Stack, where we also need to develop control systems in tandem to control those error-prone qubits.

Here’s the problem: If the decoder can’t keep up, a data backlog builds up and the quantum computer runs exponentially slower, eventually grinding to a halt.
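A toy model helps show why the slowdown is exponential rather than linear. The sketch below assumes that every decode-dependent operation must wait until the current backlog is cleared, and that the qubits keep producing syndrome data while they wait. The function name and the `speed_ratio` parameter are illustrative assumptions, not quantities defined in this article.

```python
def backlog_after_steps(initial_rounds: float, speed_ratio: float, steps: int) -> list[float]:
    """Track the syndrome backlog across successive decode-dependent operations.

    speed_ratio = decoder throughput / syndrome generation rate (assumed constant).
    Clearing a backlog of b rounds takes b / speed_ratio round-times, and the
    qubits generate one new round per round-time while they wait, so the next
    backlog is b / speed_ratio.
    """
    if speed_ratio <= 0:
        raise ValueError("speed_ratio must be positive")
    backlog = [float(initial_rounds)]
    for _ in range(steps):
        backlog.append(backlog[-1] / speed_ratio)
    return backlog


if __name__ == "__main__":
    # Decoder at half the data rate: the backlog doubles at every step.
    print(backlog_after_steps(10, 0.5, 5))
    # Decoder at twice the data rate: the backlog shrinks instead.
    print(backlog_after_steps(10, 2.0, 5))
```

Under these assumptions, any decoder slower than the data rate sees its backlog multiply at each step, while any decoder faster than the data rate sees it shrink.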

Work is now underway to build a fast, scalable, and resource-efficient quantum decoder. We’re not there yet, but we do know the steps required to get to that point and, in turn, unlock useful quantum computing sooner.

The Path to QEC

The quantum-computing industry has already made its first important steps towards QEC. In the last couple of years, organizations including Google and ETH Zurich have made several landmark QEC demonstrations.

These experiments relied on decoding offline. In other words, the measurement results were collected, and decoding happened much later (possibly days or weeks later), rather than in real-time with data collection.

These are important early findings, demonstrating that we can build the qubit components of logical quantum memory. But we also need the capability to perform logical operations between logical qubits as the calculation runs. This means we need real-time decoding.

Decoding in real-time is a huge challenge for quantum computers. There are multiple qubit types and each one generates this data at a different rate. Superconducting qubits, for example, produce approximately a million rounds of measurement results (syndrome data) every second. That’s equivalent to Netflix’s global data streams.
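To get a feel for the raw numbers, here is a back-of-the-envelope sketch for a single logical qubit. It assumes a rotated surface code of distance d, which has d*d - 1 stabilizer measurements per round, and one bit per measurement. The article does not specify a code layout or distance, so those choices and the function name are assumptions for illustration only.

```python
def syndrome_bits_per_second(distance: int, rounds_per_second: float = 1e6) -> float:
    """Raw syndrome bit rate for one logical qubit.

    Assumptions: a rotated surface code with distance**2 - 1 stabilizer
    measurements per round, one bit per measurement, and the roughly one
    million rounds per second quoted for superconducting qubits.
    """
    if distance < 2:
        raise ValueError("distance must be at least 2")
    return (distance**2 - 1) * rounds_per_second


if __name__ == "__main__":
    for d in (3, 5, 11, 25):
        mbits = syndrome_bits_per_second(d) / 1e6
        print(f"distance={d:2d}  about {mbits:.0f} Mbit/s per logical qubit")
```

The total scales with the number of logical qubits, which is why the aggregate stream for a large machine becomes so demanding.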

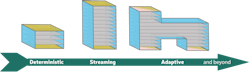

If we don’t decode fast enough, we encounter an exponentially growing backlog of syndrome data. To overcome this, three key steps must be followed on the roadmap to real-time quantum decoding (Fig. 1):

- Deterministic: The decoder needs to respond promptly at well-determined times (which sounds simple, but many computer architectures like CPUs are liable to respond at their leisure).

- Streaming: The decoder must process continuous streams of measurement results as they arrive, not after an experiment is finished.

- Adaptive: The decoder must respond to earlier decoding decisions through tight integration with the control systems, which are a fundamental component of the Quantum Error Correction Stack.

Let’s unpack each of these steps.

The good news is that we’ve already achieved this first step: creating a decoder that enables quantum-memory demonstrations and unlocks the first real-time feature, deterministic decoding.

For this, a QEC code—the Surface Code—is widely regarded as the leader of the QEC pack. It’s also the code that the Google and Zurich teams used in their demonstrations.

But other potential codes could steal the Surface Code’s QEC crown.

At Riverlane, for example, we developed the Collision Clustering algorithm, which works by growing clusters of errors and quickly evaluating whether they collide or not. This decoder not only provides a speed advantage, but also balances the speed, accuracy, cost, hardware, and power requirements to provide a practical route to error-corrected quantum computing.
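The general idea of growing clusters and checking for collisions can be sketched on a one-dimensional chain of syndrome locations. To be clear, this is not Riverlane's Collision Clustering implementation; it is a generic cluster-growth toy with hypothetical names (`Cluster`, `grow_and_merge`), no boundaries, and an even number of defects assumed.

```python
from dataclasses import dataclass


@dataclass
class Cluster:
    lo: int             # left edge, in half-edge units
    hi: int             # right edge, in half-edge units
    defects: list[int]  # syndrome positions absorbed so far

    @property
    def is_odd(self) -> bool:
        return len(self.defects) % 2 == 1


def grow_and_merge(defects: list[int]) -> list[list[int]]:
    """Group defects on a 1D chain by growing odd clusters until they collide.

    Assumptions: no boundaries and an even number of distinct defects, so
    every cluster can end up with even parity.
    """
    positions = sorted(set(defects))
    if len(positions) % 2:
        raise ValueError("this boundary-free sketch needs an even number of defects")
    # Doubled coordinates let clusters grow by half an edge per step.
    clusters = [Cluster(2 * x, 2 * x, [x]) for x in positions]
    while any(c.is_odd for c in clusters):
        # Growth: every odd cluster expands by half an edge on each side.
        for c in clusters:
            if c.is_odd:
                c.lo -= 1
                c.hi += 1
        # Collision check: neighbours whose intervals touch are merged.
        merged = [clusters[0]]
        for c in clusters[1:]:
            last = merged[-1]
            if c.lo <= last.hi:
                last.hi = max(last.hi, c.hi)
                last.defects += c.defects
            else:
                merged.append(c)
        clusters = merged
    return [c.defects for c in clusters]


if __name__ == "__main__":
    print(grow_and_merge([2, 3, 10, 14]))
```

Each final cluster contains an even number of defects, so a correction can be chosen inside it without looking at the rest of the chain. A hardware decoder would work on a two- or three-dimensional syndrome graph and handle boundaries, which this sketch leaves out.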

To implement Collision Clustering, we wrote it in Verilog, which allows it to be loaded onto an FPGA. While lots of excellent software packages are available for decoding, they’re coded in higher-level languages suitable for CPUs and aren’t necessarily going to behave deterministically.

FPGAs are a useful platform as every quantum computer in the world currently uses them to generate pulses to control qubits. So, this means the decoder can easily slot in alongside existing infrastructures.

Indeed, you could use such a decoder for up to 1,000 qubits and it would still consume less than 6% of the resources on an FPGA (based on a Xilinx device). It could even share space on an FPGA that is already in use, without needing any new hardware purchases.

In the longer term, the cost and bulk of FPGAs mean that large quantum computers will need to shift to using application-specific integrated circuits (ASICs) for their decoders and control systems. An ASIC looks a lot like the CPU inside your laptop or phone, but it’s tailored for a specific task and behaves deterministically, like an FPGA.

Compared to an FPGA, an ASIC is faster, much cheaper (pennies per unit rather than tens of thousands of pounds), and much lower in power consumption. The catch is that building an ASIC requires a foundry to tape out your design onto a silicon chip. Every new generation or update to your decoder needs a new tapeout. So, ASICs aren’t as quickly deployable, particularly since most current quantum computers use FPGAs for their control systems.

In summary, FPGAs are convenient now, but ASICs are the future.

What Next?

Riverlane’s current decoder takes the output of a whole QEC experiment (including preparing the logical qubit and finally reading it out) and decodes it as a single batch of all syndrome data generated by that experiment (Fig. 2). It completes this task quickly and in a reliable amount of time (the decoder behaves deterministically). But it isn’t continuously decoding a stream of data.

The next step is to develop a streaming decoder. This must break up the syndrome data into batches, called windows. We can start decoding a window once we’ve performed all of the relevant measurements. Then, once this window is decoded, we can move onto the next window of data and start decoding it.

However, decoding is a complex holistic problem and can’t neatly be parceled up into a completely independent window. Therefore, we need each of the windows to overlap and we must build extra functionality in our decoder to reconcile what happens when these windows overlap.

If the decoder is fast enough, it can keep pace with the syndrome data using only one decoder instance at a time, a so-called sliding window.
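The windowing scheme described above can be sketched as a simple schedule of round indices. The split of each window into a "commit" region and a look-ahead "buffer" region, along with the parameter names, is an assumption for illustration; the reconciliation of overlapping decisions is not modeled here.

```python
from typing import Iterator


def sliding_windows(total_rounds: int, commit: int, buffer: int) -> Iterator[tuple[int, int, int]]:
    """Yield (start, commit_end, window_end) round indices for each window.

    Corrections are committed for rounds [start, commit_end). Rounds
    [commit_end, window_end) are decoded only as look-ahead context and are
    decoded again by the next window, which creates the overlap.
    """
    if commit <= 0 or buffer < 0:
        raise ValueError("commit must be positive and buffer non-negative")
    start = 0
    while start < total_rounds:
        commit_end = min(start + commit, total_rounds)
        window_end = min(commit_end + buffer, total_rounds)
        yield start, commit_end, window_end
        start = commit_end


if __name__ == "__main__":
    for start, commit_end, window_end in sliding_windows(10, commit=4, buffer=2):
        print(f"decode rounds [{start}, {window_end}), commit [{start}, {commit_end})")
```

A window can only be decoded once round `window_end - 1` has been measured, so a larger buffer adds latency before each window can start.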

But what if a single decoder instance alone isn’t fast enough? We showed in a recent Nature Communications paper how parallel decoding of many windows, using many decoder instances, can always enable us to keep pace with the syndrome data. (Although this comes at the cost of slowing the logical clock rate of the quantum computer.)
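As a rough throughput estimate, one can ask how many decoder instances are needed so that windows are finished as fast as they are produced. The sketch below is a steady-state calculation under simplifying assumptions (constant decode time per window, no reconciliation overhead), and the function and parameter names are hypothetical. It is not the scheme from the paper mentioned above.

```python
from math import ceil


def decoders_needed(window_decode_time_us: float, commit_rounds: int, round_time_us: float = 1.0) -> int:
    """Minimum number of parallel decoder instances needed to keep pace.

    Assumptions: every window takes window_decode_time_us to decode, each
    window commits commit_rounds new rounds, and a new round arrives every
    round_time_us (1 microsecond matches a million rounds per second).
    """
    if window_decode_time_us <= 0 or commit_rounds <= 0 or round_time_us <= 0:
        raise ValueError("all arguments must be positive")
    # Time the hardware needs to generate the rounds one window commits.
    generation_time_us = commit_rounds * round_time_us
    return max(1, ceil(window_decode_time_us / generation_time_us))


if __name__ == "__main__":
    print(decoders_needed(window_decode_time_us=25.0, commit_rounds=10))
    print(decoders_needed(window_decode_time_us=5.0, commit_rounds=10))
```

When the result is 1, a single sliding-window decoder is enough; larger values indicate how many windows would have to be decoded concurrently under these assumptions.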

Once we can continuously decode a logical quantum memory, the next step forward is to demonstrate decoding of a logical quantum computer. The fundamental differences here are that:

- The decoder must support decoding while performing logical operations.

- The decoder needs to be adaptive so that it can feed back decisions about what to do before the next logical operation.

In other words, we need an integrated network of decoders working in concert to decode multiple logical qubits while they’re performing computations.

It’s a long path but I’m confident we’ll get there. And with advances across the Quantum Error Correction Stack, we’ll unlock useful quantum computing much sooner.


About the Author

Earl Campbell

VP, Quantum Science, Riverlane

Earl Campbell is a world expert in quantum error correction with nearly two decades of experience in creating fresh design concepts for fault-tolerant quantum-computing architectures. During his career, Earl has made significant contributions to quantum error correction, fault-tolerant quantum logic and compilation, and quantum algorithms with 80+ publications. He authored the premier review on quantum error correction in Nature.

Before joining Riverlane, Earl worked as a senior architect for Amazon Web Services' quantum-computing team. He has a PhD in Quantum Computing from the University of Oxford and is a senior lecturer and EPSRC research fellowship holder at the University of Sheffield. Earl continues to accelerate our quantum error correction efforts, establishing a pipeline of scientific research publications and leading our scientific teams.