The pressure to build energy-saving servers and data centers is tremendous. As a result, regulatory agencies such as the EPA, along with technologists, have set aggressive targets. After nearly two years of collaboration with the industry, the EPA recently drafted the first Energy Star spec for standalone servers having one to four processor sockets. According to the EPA, a second tier of the specification will cover servers with more than four processor sockets, blade servers, and fault-tolerant machines, among other things, and is expected to be released in October 2010.

As this energy-savings mania spreads, IT professionals worldwide are considering the total cost of ownership of servers, storage area networks, routers, and switches for their data centers. Presently, the cost to operate such equipment over its lifetime is about three times larger than the original hardware cost.

Consequently, it's no longer enough to measure the performance of the data center by throughput, such as millions of instructions per second (MIPS), or by performance density (MIPS/ft²). The key metric moving forward is efficiency, measured as performance per watt, or MIPS-per-watt (MIPS/W). Leading makers of data center equipment have set aggressive goals to boost MIPS/W by a factor of ten in the next generation, while reducing overall power consumption by 20%.
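The relationship between these two goals can be checked with a few lines of arithmetic. The Python sketch below treats MIPS/W simply as throughput divided by power; the function names are illustrative and do not come from any vendor tool.

```python
def mips_per_watt(mips: float, watts: float) -> float:
    """Efficiency metric: throughput delivered per watt consumed."""
    if watts <= 0:
        raise ValueError("watts must be positive")
    return mips / watts


def implied_throughput_gain(efficiency_gain: float, power_reduction: float) -> float:
    """Throughput multiplier implied by an efficiency gain and a fractional power cut.

    Since MIPS = (MIPS/W) * W, scaling MIPS/W by `efficiency_gain` while
    scaling W by (1 - power_reduction) scales MIPS by their product.
    """
    if not 0.0 <= power_reduction < 1.0:
        raise ValueError("power_reduction must be in [0, 1)")
    return efficiency_gain * (1.0 - power_reduction)


if __name__ == "__main__":
    # Goals quoted in the text: 10x MIPS/W with 20% less power.
    gain = implied_throughput_gain(10.0, 0.20)
    print(f"Implied throughput gain: {gain:.1f}x")
```

Read this way, a tenfold efficiency gain delivered at 80% of today's power implies roughly eight times today's throughput.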

Traditionally, however, a rise in MIPS has resulted in a proportionate escalation of dissipated power. Successfully developing and executing strategies to decouple MIPS from watts lets higher processing power be crammed into smaller enclosures.

In essence, there are two main sources of power consumption in the data center. The servers themselves are the primary contributor; the second is the infrastructure needed to cool and protect them. The energy usage of each is about equal, and the two are directly related. Therefore, for every dollar saved in server energy consumption, an additional dollar can be saved in infrastructure energy costs.

Within the server itself, three main elements contribute to energy consumption: electronic loads such as microprocessors and memory banks consume 60 to 70% of the energy, power supplies devour 25 to 30%, and fans 5%. Multi-core processors and virtualization have significantly reduced the load's power profile, but further drastic improvements can take place in all major energy consumers by adopting a holistic approach to system power management.

A Platform Approach

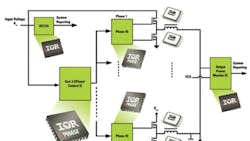

New smart power management systems involve the co-design of several critical power supply components that are integrated into the platform. The key elements of the power system are highly efficient and dense power stages, advanced and highly responsive power controllers, digital interfaces for programmability and diagnostics, accurate power monitors, system controllers, voltage regulators, and sequencing.

Advanced and dense power stages reduce power loss in power supplies by up to one-third compared to traditional designs. To accomplish that goal, power stages are tapping advances in leading-edge MOSFET technologies with advanced packaging that exhibits nearly zero package resistance and inductance along with the industry's lowest thermal impedance. Compared to standard plastic discrete packages, the metal-can construction of these benchmark MOSFETs enables dual-sided cooling to effectively double the current-handling capacity of high-frequency dc-dc buck converters. This dramatically cuts energy losses while shrinking the design footprint on the circuit board.

Optimized driver ICs co-designed with these DirectFET MOSFETs can deliver benchmark efficiency over heavy and light loads. By using efficient power devices coupled with innovative control schemes, it is possible to combine efficiency and electrical performance. Together, these technology enhancements can improve server efficiency by about 5 to 6% with more density.

Advanced power systems can have an even larger impact on the power dissipation of the load. High-power loads in the system - such as microprocessors and memory banks - have unpredictable power profiles because their performance demands can change rapidly. Under severe requirements, these loads can exceed their thermal limits and undergo thermal throttling, or stepping back in performance to allow the silicon and the package to cool. Once sufficiently cooled, the load must crank back up again. This results in a highly inefficient thermal and power cycle.

Subjecting high-performance silicon to this sort of cycling is an enormous waste of both energy and performance. However, employing a holistic approach to system power management eliminates thermal throttling. By dynamically monitoring instantaneous power, recording its trend over time, and understanding the thermal impedance of the load, the power system can accurately predict thermals in the system at any point. Armed with this data, the power system can then alter the load's electrical qualities to limit power dissipation, for example by dynamically changing its core voltage or reducing the processor's clock speed. This action keeps the load within the limits of its thermal envelope and can cut total power dissipation by 15 to 20%.
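The prediction step described above can be sketched with a first-order thermal model. The Python below is a minimal illustration, not any vendor's algorithm: the class and function names, the single lumped thermal impedance `r_th_c_per_w`, the time constant `tau_s`, and the assumption that power scales linearly with clock speed at a fixed core voltage are all simplifications introduced here.

```python
from dataclasses import dataclass


@dataclass
class ThermalModel:
    """Lumped first-order model of a load's junction temperature (assumed form)."""
    r_th_c_per_w: float   # junction-to-ambient thermal impedance, degC per watt
    tau_s: float          # thermal time constant, seconds
    t_ambient_c: float    # ambient temperature, degC
    t_junction_c: float   # current junction temperature estimate, degC

    def step(self, power_w: float, dt_s: float) -> float:
        """Advance the estimate by dt_s seconds at the given power (forward Euler)."""
        t_steady = self.t_ambient_c + power_w * self.r_th_c_per_w
        self.t_junction_c += (dt_s / self.tau_s) * (t_steady - self.t_junction_c)
        return self.t_junction_c

    def power_budget_w(self, t_limit_c: float) -> float:
        """Largest sustained power that keeps the steady-state temperature at the limit."""
        return (t_limit_c - self.t_ambient_c) / self.r_th_c_per_w


def clock_scale_for_limit(model: ThermalModel, demanded_power_w: float,
                          t_limit_c: float) -> float:
    """Clock-speed multiplier (0..1] that keeps the load inside its thermal budget.

    Assumes power is proportional to clock speed at a fixed core voltage.
    """
    budget = model.power_budget_w(t_limit_c)
    if demanded_power_w <= budget:
        return 1.0
    return budget / demanded_power_w


if __name__ == "__main__":
    # Hypothetical values chosen only to exercise the model.
    model = ThermalModel(r_th_c_per_w=0.4, tau_s=10.0,
                         t_ambient_c=40.0, t_junction_c=40.0)
    demanded_w, limit_c = 143.0, 90.0
    for second in range(1, 6):
        scale = clock_scale_for_limit(model, demanded_w, limit_c)
        temp = model.step(demanded_w * scale, dt_s=1.0)
        print(f"t={second}s scale={scale:.2f} junction={temp:.1f} C")
```

The design choice here is to trim the clock ahead of time rather than let the load hit its limit and throttle hard; a real controller would also adjust core voltage and would calibrate the thermal parameters against measurements.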

To see how such benefits take shape, consider blade servers as an example. Their modularity, low price, and small size make them a widely used way to expand capacity as needed within the already crowded confines of the data center. So the trend is to add racks containing high-density blades.

The problem is the large amount of heat that such racks generate. The latest blades may have up to four processors per board, and their power requirements are significant. This creates thermal problems that limit the computing density advantages the blades offer.

In practice, data centers often leave slots empty to provide more cooling and keep systems within thermal specifications. A mainstream dual-processor blade can consume between 600 W and 1 kW on its own, while the data center will dissipate an equal amount in infrastructure and cooling. If we assume total data center power consumption of 1.6 kW for each blade, this equates to over 14,000 kWh and almost $1,300 per blade per year in operating costs.
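The annual figures follow from multiplying continuous power by the hours in a year. The sketch below reproduces that arithmetic in Python. The electricity price of $0.09 per kWh is an assumption made here, picked only because it lands near the quoted cost; no tariff is stated in the text.

```python
HOURS_PER_YEAR = 24 * 365  # 8,760 hours, ignoring leap years


def annual_energy_kwh(power_kw: float) -> float:
    """Energy used in one year by a load drawing power_kw continuously."""
    return power_kw * HOURS_PER_YEAR


def annual_cost_usd(power_kw: float, price_usd_per_kwh: float) -> float:
    """Yearly operating cost at a flat electricity price."""
    return annual_energy_kwh(power_kw) * price_usd_per_kwh


if __name__ == "__main__":
    blade_total_kw = 1.6      # blade plus its share of cooling, from the text
    assumed_price = 0.09      # USD per kWh, assumed for illustration only
    print(f"Energy: {annual_energy_kwh(blade_total_kw):,.0f} kWh/year")
    print(f"Cost:   ${annual_cost_usd(blade_total_kw, assumed_price):,.0f}/year")
```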

Taking an advanced power systems approach can help reduce the total power consumed in the blade. The first method is to employ highly efficient on-board power supplies. Approximately 80% of the power drawn by the blade is consumed through the on-board supplies, so the efficiency of the power supplies has a large impact on the system efficiency. Microprocessors and memory consume much of this power. For example, a typical high-performance microprocessor will consume 130 A at 1.1 V, or about 143 W. Today, it is typical for on-board power supplies to have about 85% efficiency, or 15% losses.
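To see what a given supply efficiency means in watts, the short Python sketch below computes the load power from the current and voltage quoted above, then the input power and conversion loss at a chosen efficiency. It assumes efficiency is defined as output power divided by input power; the function names are illustrative.

```python
def load_power_w(current_a: float, voltage_v: float) -> float:
    """DC power delivered to the load."""
    return current_a * voltage_v


def input_power_w(load_w: float, efficiency: float) -> float:
    """Power the supply must draw to deliver load_w at the given efficiency."""
    if not 0.0 < efficiency <= 1.0:
        raise ValueError("efficiency must be in (0, 1]")
    return load_w / efficiency


def conversion_loss_w(load_w: float, efficiency: float) -> float:
    """Power dissipated as heat inside the supply."""
    return input_power_w(load_w, efficiency) - load_w


if __name__ == "__main__":
    cpu_w = load_power_w(current_a=130.0, voltage_v=1.1)
    loss_w = conversion_loss_w(cpu_w, efficiency=0.85)
    print(f"Processor load: {cpu_w:.0f} W")
    print(f"Supply loss at 85% efficiency: {loss_w:.1f} W")
```

Note that the loss is 15% of the input power, not of the load power, so it comes out somewhat higher than 15% of the processor's draw.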

With advanced power control and conversion technology, such as International Rectifier's XPhase scalable multi-phase architecture and DirectFET power MOSFETs, it is possible to boost system efficiency to over 91%. Cutting the supply's power loss by 40% (from 15% to 9%) saves about 900 kWh, or $95, per year.
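The 40% figure is a relative reduction in loss, which the Python sketch below makes explicit. It also converts between a sustained saving in watts and energy per year, assuming continuous operation. The function names are illustrative, and the code does not attempt to reconstruct how the dollar and kWh figures in the text were derived.

```python
HOURS_PER_YEAR = 24 * 365  # 8,760 hours, ignoring leap years


def relative_loss_reduction(eff_old: float, eff_new: float) -> float:
    """Fraction by which supply losses shrink when efficiency improves."""
    loss_old = 1.0 - eff_old
    loss_new = 1.0 - eff_new
    return (loss_old - loss_new) / loss_old


def annual_kwh_saved(watts_saved: float) -> float:
    """Energy saved per year by a continuous reduction of watts_saved."""
    return watts_saved * HOURS_PER_YEAR / 1000.0


def average_watts_for(kwh_per_year: float) -> float:
    """Continuous power reduction that corresponds to a yearly energy saving."""
    return kwh_per_year * 1000.0 / HOURS_PER_YEAR


if __name__ == "__main__":
    print(f"Loss reduction: {relative_loss_reduction(0.85, 0.91):.0%}")
    print(f"900 kWh/year is about {average_watts_for(900.0):.0f} W continuous")
```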

Similarly, by employing accurate real-time power monitoring ICs, such as the IR3725 and IR3720 that feature TruePower technology, advanced power systems can further slash dynamic power loss in the blade. This can save an additional 2,100 kWh, or $220, per year per blade.