The move toward cloud computing has brought data centers into the headlines. Pundits claim the primary rationale for companies to go into the cloud is to cut IT support costs. But cloud computing has an energy efficiency angle as well: At least in theory, concentrating enterprise data in a few heavily used data centers makes operations more energy efficient.

The reason is that a data center's power consumption does not scale linearly with its computing load. A server running at 100% capacity draws 100% of its maximum rated power, but an idle server does not draw 0% of that power. In fact, most modern servers draw about 60% of their maximum rated power when they are simply idling. So, from an energy efficiency standpoint, it makes sense to consolidate operations onto as few servers as possible and then run those servers flat out.
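To put numbers on the consolidation argument, here is a minimal Python sketch. It assumes a simple linear power model, which the article does not specify: a server draws a fixed idle fraction of its rated power plus a share proportional to utilization. The 60% idle fraction is the figure quoted above; the 300 W rating and the function names are hypothetical.

```python
# Minimal sketch: linear server power model (an assumption, not from the article).
# P(u) = P_max * (idle_fraction + (1 - idle_fraction) * u), with 0 <= u <= 1.

IDLE_FRACTION = 0.60   # idle draw as a fraction of rated power (figure quoted above)
RATED_WATTS = 300.0    # hypothetical rated power per server


def server_power(utilization: float,
                 rated_watts: float = RATED_WATTS,
                 idle_fraction: float = IDLE_FRACTION) -> float:
    """Return power draw in watts for a server at the given utilization (0..1)."""
    if not 0.0 <= utilization <= 1.0:
        raise ValueError("utilization must be between 0 and 1")
    return rated_watts * (idle_fraction + (1.0 - idle_fraction) * utilization)


def fleet_power(num_servers: int, total_work: float) -> float:
    """Spread total_work (in full-load server equivalents) evenly across servers."""
    per_server = total_work / num_servers
    return num_servers * server_power(per_server)


if __name__ == "__main__":
    work = 1.0  # one server's worth of full-load work
    print(f"10 servers at 10%: {fleet_power(10, work):.0f} W")
    print(f"1 server at 100%:  {fleet_power(1, work):.0f} W")
```

Under these assumptions, ten servers at 10% load draw 1,920 W while one server doing the same work at full load draws 300 W. Real servers do not follow a perfectly straight line, so treat the ratio as illustrative rather than measured.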

Still, even server farms with optimized resources consume a lot of energy. No wonder, then, that server farm operators are taking steps to curb their appetite for electrical power. Industry experts, for example, estimate that a 100-rack server farm could save $270,000 to $570,000 annually and cut its square footage simply by using the most efficient power supplies available today, which run at about 95% efficiency. They also estimate that use of more efficient supplies could reduce cooling loads by up to 20% and allow a downsizing of mechanical cooling equipment in new structures.
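The link between supply efficiency and cooling load comes from simple arithmetic: a supply with efficiency η draws P_out/η from the line and turns the difference into heat. The sketch below compares a hypothetical 80%-efficient supply with a 95% unit at a constant 1 kW load. The baseline efficiency, the load and the function names are assumptions for illustration, not the figures behind the industry estimates.

```python
HOURS_PER_YEAR = 8760


def supply_input_watts(load_watts: float, efficiency: float) -> float:
    """Power drawn from the line to deliver load_watts at the given efficiency (0..1]."""
    if not 0.0 < efficiency <= 1.0:
        raise ValueError("efficiency must be in (0, 1]")
    return load_watts / efficiency


def supply_loss_watts(load_watts: float, efficiency: float) -> float:
    """Conversion loss, dissipated as heat that the cooling plant must then remove."""
    return supply_input_watts(load_watts, efficiency) - load_watts


def annual_kwh_saved(load_watts: float, old_efficiency: float,
                     new_efficiency: float, hours: float = HOURS_PER_YEAR) -> float:
    """Energy saved per year by swapping supplies, assuming a constant load."""
    delta_w = (supply_loss_watts(load_watts, old_efficiency)
               - supply_loss_watts(load_watts, new_efficiency))
    return delta_w * hours / 1000.0


if __name__ == "__main__":
    load = 1000.0  # hypothetical 1 kW of IT load
    for eff in (0.80, 0.95):
        print(f"{eff:.0%} efficient: input {supply_input_watts(load, eff):.0f} W, "
              f"loss {supply_loss_watts(load, eff):.0f} W")
    print(f"Saved per year: {annual_kwh_saved(load, 0.80, 0.95):.0f} kWh")
```

At 1 kW the conversion loss drops from about 250 W to about 53 W, and every watt not lost is also a watt the cooling plant no longer has to remove.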

Though it has long been known that server farms are power hogs, the most recent statistics are still eye-opening. A typical data center can use 30 times more energy per square foot than an ordinary office area. Power usage by these facilities is growing at an alarming rate of more than 20% annually and is on pace to double within the next few years. The most recent EPA statistics show that data centers consumed about 60 billion kWh of electricity in 2006, roughly 1.5% of total U.S. electricity consumption.
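The claim that power use will double within a few years follows from compounding. A minimal sketch, assuming a constant annual growth rate r, computes the doubling time as ln 2 / ln(1 + r); the function name is illustrative.

```python
import math


def doubling_time_years(annual_growth: float) -> float:
    """Years for a quantity growing at a constant compound rate to double."""
    if annual_growth <= 0:
        raise ValueError("growth rate must be positive")
    return math.log(2) / math.log1p(annual_growth)


if __name__ == "__main__":
    for rate in (0.15, 0.20, 0.25):
        print(f"{rate:.0%} per year doubles in {doubling_time_years(rate):.1f} years")
```

At 20% a year the doubling time works out to about 3.8 years, so growth of more than 20% implies doubling in under four years.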

You might think that, with this sort of energy use, data centers would be low-hanging fruit for energy efficiency initiatives. But although the Environmental Protection Agency (EPA) took the first steps toward an Energy Star label program for data centers in 2007, that effort seems to be off to a slow start. Few data centers have applied for the Energy Star designation. EPA insiders say, however, that interest in the program is growing. To earn an Energy Star label, a data center must rank in the top 25% of its peers in energy efficiency, as measured on an EPA-devised energy performance scale based on the Power Usage Effectiveness (PUE) metric.

Much of the cost of operating a data center goes into managing the generated heat. Even within well-designed data centers, the cooling and managing of air flow with equipment like chillers and heating, ventilating and air-conditioning (HVAC) systems can account for more than a third of data center electricity costs.

EPA took these factors into consideration when it developed an interactive online energy management tool called the Portfolio Manager. The Portfolio Manager collects data on data centers (as well as on other kinds of buildings) applying for Energy Star label certification. It lets a data center operator track and assess energy and water consumption across an entire portfolio of buildings, and it can help operators set investment priorities, identify under-performing buildings, verify efficiency improvements, and receive EPA recognition for superior energy performance.

To rate data centers, the EPA assigns each label applicant a numerical rating from 1 to 100. Data centers need a rating of 75 or greater to qualify for the Energy Star label. And this system works great, in theory.
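EPA's actual 1-to-100 scale comes from its own statistical model of peer facilities, which the article does not describe. As a deliberately simplified stand-in, the sketch below ranks a facility by the share of hypothetical peers with a worse (higher) PUE and then applies the 75-point threshold. The peer values and function names are illustrative only.

```python
from typing import Sequence


def percentile_rating(pue: float, peer_pues: Sequence[float]) -> int:
    """Illustrative 1-100 rating: share of peers with a worse (higher) PUE.

    This is NOT EPA's method; it only mimics the idea of a peer-relative score.
    """
    if not peer_pues:
        raise ValueError("need at least one peer")
    worse = sum(1 for p in peer_pues if p > pue)
    return max(1, min(100, round(100 * worse / len(peer_pues))))


def qualifies_for_label(rating: int, threshold: int = 75) -> bool:
    """A rating of 75 or greater corresponds to the top 25% of peers."""
    return rating >= threshold


if __name__ == "__main__":
    peers = [1.3, 1.4, 1.6, 1.8, 2.0, 2.2, 2.5, 2.8, 3.0, 3.2]  # made-up peer PUEs
    for candidate in (1.5, 2.4):
        rating = percentile_rating(candidate, peers)
        print(f"PUE {candidate}: rating {rating}, qualifies: {qualifies_for_label(rating)}")
```

With these made-up peers, a PUE of 1.5 beats 8 of 10 facilities and scores 80, clearing the threshold, while a PUE of 2.4 scores 40.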


The Green Grid, a consortium that provides input for the development of the data center Energy Star label, discussed the program's progress at its March forum. There it was reported that 600 data centers have entered their particulars into the Portfolio Manager. However, only about 125 have provided enough information to generate a rating between 1 and 100. In addition, most facilities have not provided the 12 months' worth of data the EPA wants for a rating, and many of the submissions incorrectly classify telecommunications facilities as data centers.

Nevertheless, the EPA remains upbeat about its data center efforts. “The EPA is very pleased with the Energy Star 1-to-100 energy performance scale for data centers,” explains Michael Zatz, Chief of the Market Sectors Group for the Energy Star Commercial & Industrial Branch. “Operators of data centers have embraced the scale, and it is exciting to see more and more learning how to use it, pursuing improvement, and earning Energy Star certification.”

In keeping with EPA policy, Zatz says the EPA can't provide information on Energy Star label applications that have been submitted and may be under review. It is possible to read between the lines on this point, however. The list of applicants likely includes such industry heavyweights as Intel, IBM, Google, Yahoo and others that have been at the forefront of promoting energy efficiency and are pushing for “greener” operating approaches.

For example, IBM pats itself on the back for its data center efficiency efforts in its latest Corporate Responsibility Report. The report says IBM ran 290 projects at 90 existing data center locations that reduced energy use by over 32,000 MWh, saving more than $3.2 million. Big Blue also says it upgraded data center equipment and implemented data center best practices, including blocking cable openings, rebalancing air flow and shutting down air-conditioning units, all of which generated 16,800 MWh of savings.

IBM is also applying a special thermal monitoring and management system that produces a real-time, three-dimensional thermal map of the heat sources and sinks within a data center. The map helps identify hot spots so that cooling delivery can be adjusted.
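IBM's system is not described in detail here, but the basic idea of flagging hot spots in a three-dimensional thermal map can be sketched as a scan over a grid of temperature readings. The grid layout ([x][y][z] in °C), the 27°C limit, the sample readings and the function name are hypothetical choices for illustration.

```python
from typing import List, Tuple

# Temperatures in °C indexed as thermal_map[x][y][z] (hypothetical layout).
ThermalMap = List[List[List[float]]]
HotSpot = Tuple[Tuple[int, int, int], float]


def find_hot_spots(thermal_map: ThermalMap, limit_c: float) -> List[HotSpot]:
    """Return ((x, y, z), temperature) for cells hotter than limit_c, hottest first."""
    hits: List[HotSpot] = []
    for x, plane in enumerate(thermal_map):
        for y, column in enumerate(plane):
            for z, temp_c in enumerate(column):
                if temp_c > limit_c:
                    hits.append(((x, y, z), temp_c))
    hits.sort(key=lambda hit: hit[1], reverse=True)
    return hits


if __name__ == "__main__":
    # A tiny 2 x 2 x 2 grid of made-up readings.
    grid = [
        [[22.0, 23.0], [24.0, 31.0]],
        [[22.0, 35.0], [25.0, 26.0]],
    ]
    for (x, y, z), temp_c in find_hot_spots(grid, limit_c=27.0):
        print(f"hot spot at ({x}, {y}, {z}): {temp_c:.1f} °C")
```

A real system would feed a map like this from sensor data and then steer cooling toward the flagged cells; the scan itself is the simple part.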

“Saving millions in electricity expenses takes more than turning off the lights,” says Wayne Balta, IBM's Vice President for Environmental Affairs and Product Safety. “It takes the combined effort of IBM experts working in data center operation, manufacturing, hardware, software, R&D and real estate management,” he adds.

The PUE

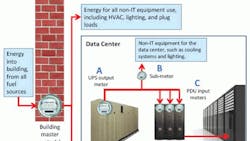

The data center metric enjoying the most widespread use these days is PUE, which gauges the electrical efficiency of a server environment by focusing on its electrical overhead. Created by the Green Grid in 2006, PUE divides a facility's total power draw by the power used solely by the data center's IT equipment:

PUE = Total Facility Power / IT Equipment Power


IT equipment power includes the power consumed by servers, networking devices, storage units and peripheral items such as monitors or workstation machines: any equipment used to manage, process, store or route data in the data center. Total facility power includes that IT equipment power plus all data center-related primary electrical systems (power distribution units and electrical switching gear), standby power systems (uninterruptible power supplies, or UPSs), air-conditioning components (chillers, air handlers, water pumps and cooling towers), and other infrastructure such as lighting and keyboard, video and mouse (KVM) equipment.
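Applying these definitions, PUE can be computed by summing metered loads into two buckets. The sketch below uses hypothetical kW readings and bucket names; only the split itself (IT equipment versus everything else in the facility) comes from the text.

```python
from typing import Mapping


def pue(it_loads_kw: Mapping[str, float],
        infrastructure_loads_kw: Mapping[str, float]) -> float:
    """PUE = total facility power / IT equipment power."""
    it_kw = sum(it_loads_kw.values())
    if it_kw <= 0:
        raise ValueError("IT equipment power must be positive")
    total_kw = it_kw + sum(infrastructure_loads_kw.values())
    return total_kw / it_kw


if __name__ == "__main__":
    # Hypothetical metered averages in kW, split into the categories described above.
    it = {"servers": 600.0, "storage": 150.0, "network": 50.0}
    infrastructure = {
        "cooling (chillers, air handlers, pumps, towers)": 300.0,
        "power distribution and UPS losses": 70.0,
        "lighting and KVM": 30.0,
    }
    print(f"PUE = {pue(it, infrastructure):.2f}")
```

Here 800 kW of IT load plus 400 kW of infrastructure gives a PUE of 1.5.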

The lower a data center's PUE, the more efficient it is, because a lower PUE means a greater portion of the power goes to the primary mission: processing and storing information. For example, a PUE of 1.5 means a data center needs 50% more power than its IT equipment alone consumes. A PUE of 3.0 means the data center needs twice as much power for non-IT elements as for IT hardware. A perfect (and, in reality, unattainable) PUE is 1.0, which represents a server environment in which all the power goes to IT equipment.
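These examples reduce to two ratios: PUE minus 1 gives the watts of non-IT power drawn per watt of IT power, and 1/PUE gives the fraction of total power that reaches IT equipment. A minimal sketch (the function names are illustrative):

```python
def overhead_per_it_watt(pue: float) -> float:
    """Watts of non-IT (infrastructure) power drawn per watt delivered to IT equipment."""
    if pue < 1.0:
        raise ValueError("PUE cannot be below 1.0")
    return pue - 1.0


def it_share_of_total(pue: float) -> float:
    """Fraction of total facility power that reaches the IT equipment."""
    if pue < 1.0:
        raise ValueError("PUE cannot be below 1.0")
    return 1.0 / pue


if __name__ == "__main__":
    for value in (1.0, 1.5, 3.0):
        print(f"PUE {value}: {overhead_per_it_watt(value):.1f} W overhead per IT watt, "
              f"{it_share_of_total(value):.0%} of power reaches IT")
```

For PUE values of 1.0, 1.5 and 3.0 this gives 0, 0.5 and 2 W of overhead per IT watt, with 100%, about 67% and about 33% of power reaching IT equipment.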

“The EPA believes that the PUE represents the best available metric for assessing the energy efficiency of data centers today,” says Zatz. “We agree with many in the industry, however, that the ultimate goal should be to assess data center energy efficiency with a metric relating energy use to data center productivity. However, as there is currently no consensus in the industry concerning the most appropriate way to measure productivity across the range of data center types, we believe that PUE provides a valuable and accurate assessment of data center energy efficiency,” he adds.

Since 2008, Europe has had its own framework for assessing the energy efficiency of data centers. Dubbed the European Code of Conduct (C of C), it is more complex than the PUE, though it, too, divides operations into an IT load and a facilities load. The C of C asks participants to record not just IT and facilities power, but also such factors as a target inlet temperature for IT equipment, the average monthly outside ambient temperature and the average dew point temperature. It also asks applicants to record average and peak IT utilization and details about the physical facilities, such as the type of cooling used and the number of equipment racks in the building.
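To make the Code of Conduct's reporting scope concrete, here is a sketch of one monthly record holding the kinds of data listed above, with a PUE computed from its IT and facilities loads. The class name, field names, units and sample values are hypothetical; they are not the official C of C reporting template.

```python
from dataclasses import dataclass


@dataclass
class MonthlyFacilityRecord:
    """Hypothetical monthly record of the data the article says the C of C requests.

    Field names and units are illustrative, not the official reporting form.
    """
    month: str                        # e.g. "2011-01"
    it_energy_kwh: float              # IT load
    facilities_energy_kwh: float      # non-IT (facilities) load
    target_it_inlet_temp_c: float
    outside_avg_ambient_temp_c: float
    outside_avg_dew_point_c: float
    avg_it_utilization: float         # fraction, 0..1
    peak_it_utilization: float        # fraction, 0..1
    cooling_type: str
    rack_count: int

    def __post_init__(self) -> None:
        if self.it_energy_kwh <= 0:
            raise ValueError("IT energy must be positive")
        if not 0.0 <= self.avg_it_utilization <= self.peak_it_utilization <= 1.0:
            raise ValueError("expected 0 <= average <= peak <= 1 utilization")

    def pue(self) -> float:
        """PUE for the month: (IT + facilities energy) / IT energy."""
        return (self.it_energy_kwh + self.facilities_energy_kwh) / self.it_energy_kwh


if __name__ == "__main__":
    record = MonthlyFacilityRecord(
        month="2011-01", it_energy_kwh=500_000, facilities_energy_kwh=250_000,
        target_it_inlet_temp_c=24.0, outside_avg_ambient_temp_c=3.5,
        outside_avg_dew_point_c=-1.0, avg_it_utilization=0.35,
        peak_it_utilization=0.70, cooling_type="chilled water with economizer",
        rack_count=120,
    )
    print(f"{record.month}: PUE {record.pue():.2f}")
```

Keeping the climate and utilization fields next to the energy figures is what lets a reviewer judge a PUE in context rather than in isolation.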

Because the European C of C asks for these sorts of details, some experts see it as more accurate than Energy Star labeling. More accurate, maybe; more intrusive, certainly. But there are indications that EPA is beefing up its own requirements along the same lines.

The first data center to earn Energy Star label certification did so only last year. It was a facility in Research Triangle Park, N.C., run by cloud computing infrastructure provider NetApp. The facility earned 99 out of 100 possible points, considered near-perfect. The 125,000-ft² facility (33,000 ft² of it for IT equipment) hit a PUE of 1.35, and NetApp hopes to get this number down to 1.18 this year.
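Because PUE scales total power for a given IT load, NetApp's target can be translated into savings. Assuming the IT load stays constant (a simplification; in practice it changes), moving from a PUE of 1.35 to 1.18 cuts total facility power by (1.35 - 1.18)/1.35 and non-IT overhead by (0.35 - 0.18)/0.35. The 1,000 kW IT load below is hypothetical.

```python
def _check(old_pue: float, new_pue: float) -> None:
    if not (old_pue > 1.0 and new_pue >= 1.0):
        raise ValueError("expected old PUE > 1.0 and new PUE >= 1.0")


def total_power_reduction(old_pue: float, new_pue: float) -> float:
    """Fractional cut in total facility power when IT load is held constant."""
    _check(old_pue, new_pue)
    return (old_pue - new_pue) / old_pue


def overhead_reduction(old_pue: float, new_pue: float) -> float:
    """Fractional cut in non-IT (overhead) power when IT load is held constant."""
    _check(old_pue, new_pue)
    return (old_pue - new_pue) / (old_pue - 1.0)


if __name__ == "__main__":
    it_load_kw = 1000.0  # hypothetical constant IT load
    for label, value in (("before", 1.35), ("target", 1.18)):
        print(f"{label}: PUE {value}, total {it_load_kw * value:.0f} kW")
    print(f"total power cut: {total_power_reduction(1.35, 1.18):.1%}")
    print(f"overhead power cut: {overhead_reduction(1.35, 1.18):.1%}")
```

That works out to roughly 12.6% less total power and 48.6% less overhead power for the same IT load.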

“We use differential static pressure measurements for cooling purposes, which uses about a third of the amount of energy required by conventional techniques in typical data centers,” explains Mark Skiff, NetApp Senior Director of Worldwide Resources. Modulating fans, based on NetApp's proprietary technology, help implement pressure-controlled rooms and regulate the volume of air to avoid over-supplying air and wasting energy.
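NetApp's control scheme is proprietary, so the following is only a generic illustration of pressure-based fan modulation: a proportional-integral (PI) loop that adjusts fan speed to hold a differential-pressure setpoint against a toy room model. The 5 Pa setpoint, the controller gains, the time constant, the room model and all names are hypothetical.

```python
from dataclasses import dataclass
from typing import Tuple


@dataclass
class PIController:
    """Proportional-integral controller with output clamping."""
    kp: float
    ki: float
    setpoint: float
    out_min: float = 0.0
    out_max: float = 1.0
    integral: float = 0.0

    def update(self, measurement: float, dt: float) -> float:
        error = self.setpoint - measurement
        self.integral += error * dt
        output = self.kp * error + self.ki * self.integral
        if output > self.out_max or output < self.out_min:
            # Anti-windup: stop integrating while the output is saturated.
            self.integral -= error * dt
            output = min(self.out_max, max(self.out_min, output))
        return output


def simulate(steps: int = 200, dt: float = 1.0, setpoint_pa: float = 5.0,
             server_demand: float = 0.6, gain_pa: float = 50.0,
             tau_s: float = 5.0) -> Tuple[float, float]:
    """Toy room model: pressure lags toward gain * (fan supply - server airflow demand).

    Fan speed and server demand are fractions of fan capacity. Returns final
    (pressure in Pa, fan speed).
    """
    ctrl = PIController(kp=0.005, ki=0.002, setpoint=setpoint_pa)
    pressure, fan = 0.0, 0.0
    for _ in range(steps):
        fan = ctrl.update(pressure, dt)
        steady = gain_pa * (fan - server_demand)
        pressure += (steady - pressure) * dt / tau_s
    return pressure, fan


if __name__ == "__main__":
    p, f = simulate()
    print(f"pressure {p:.2f} Pa, fan {f:.2f} of capacity")
```

In this toy model the fan settles at the servers' airflow demand plus a small margin (0.7 of capacity for a demand of 0.6) instead of running at a fixed high speed, which is the kind of over-supply the pressure measurement is meant to avoid.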

Other features also contribute to the data center's energy efficiency. One is cooling the data center with outside air (free air cooling), which is used 67% of the time, with an average supply air temperature of 74°F. Another is cold-aisle containment, in which the cold aisle is enclosed to separate it from hot air returning from the racks. Instead of pumping cold air up through a raised floor, the usual technique in cold-aisle systems, NetApp uses overhead air distribution and thus avoids obstructions from cabling below the raised floor, which can limit cool air flow.

The facility's 74°F operating temperature is within the guidelines issued by the American Society of Heating, Refrigerating and Air-Conditioning Engineers (ASHRAE) for computer and communication centers, which allow temperatures as high as 77°F. These temperatures are higher than those found at many other data centers, which are forced to cool air to a chilly 55°F to 60°F simply because their cooling schemes are inefficient.


Oracle Corp. has also applied for Energy Star label certification. At one of its data centers, it has taken what is considered a novel approach to heat containment and air-flow management. To keep hot air from mixing with the cold air at the inlets of the IT equipment, a physical barrier separates the hot and cold air. Oracle uses individual rack containment, in which hot air is discharged directly into an overhead return-air plenum. Because data center personnel are not exposed to the hot air discharge, the room can be supplied with warmer air, which makes cooling more efficient. The scheme is also flexible in how it can be deployed. Oracle believes that its work debunks many industry myths associated with the use of variable air flow in data centers and clearly demonstrates that the technique is economical when it comes to data center energy efficiency.

Thinking Outside The Box

But Energy Star might not be the best metric for data center efficiency. William Kosik, Energy and Sustainability Director for Hewlett-Packard Co., emphasizes that it is important to look at the entire power delivery chain of a data center, not just the watts/ft² metric generally used when evaluating a new IT technology and its energy efficiency. Kosik suggests that a program like LEED (Leadership in Energy & Environmental Design), developed by the U.S. Green Building Council (USGBC), may well be more suitable for data centers.

LEED is an internationally recognized green building certification system. Among other things, it uses third parties to verify that a building or community is designed and built using strategies intended to improve energy savings, water efficiency, CO2 emissions reduction, indoor environmental quality, and stewardship of resources and sensitivity to their impacts.

Electrical usage often gets top billing in data center efficiency discussions, but water management might be more important for saving energy. Kosik points out that data centers consume a lot of water for cooling and discharge significant volumes of it into municipal sewer systems. A LEED metric factors in the chemicals used for water treatment, water storage facilities, and their effects on the environment. There is no question such factors can be significant. A 2005 California Energy Commission report (“California's Water-Energy Relationship”) found that the state's water-related energy use accounted for 19% of the state's electricity, 30% of its natural gas, and 88 billion gallons of diesel fuel annually.

Kosik also says it is impractical to balance the competing needs of different resources without a formal process for optimizing the tradeoffs among them. That is the function of the Energy Optimization Scorecard that Kosik uses. In it, he aggregates factors under four primary, overarching categories that apply to data centers: operational continuity, lifecycle cost, environmental impact, and IT effectiveness.
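The scorecard's internals are not described here, so the sketch below assumes the simplest possible aggregation: a weighted average over Kosik's four categories. The weights, the 0-to-10 scores and the two design options are hypothetical.

```python
from typing import Mapping

CATEGORIES = ("operational continuity", "lifecycle cost",
              "environmental impact", "IT effectiveness")


def weighted_score(scores: Mapping[str, float], weights: Mapping[str, float]) -> float:
    """Weighted average of per-category scores; weights need not sum to 1."""
    missing = [c for c in CATEGORIES if c not in scores or c not in weights]
    if missing:
        raise ValueError(f"missing categories: {missing}")
    total_weight = sum(weights[c] for c in CATEGORIES)
    if total_weight <= 0:
        raise ValueError("weights must sum to a positive number")
    return sum(scores[c] * weights[c] for c in CATEGORIES) / total_weight


if __name__ == "__main__":
    weights = {"operational continuity": 4, "lifecycle cost": 3,
               "environmental impact": 2, "IT effectiveness": 1}
    free_cooling = {"operational continuity": 7, "lifecycle cost": 8,
                    "environmental impact": 9, "IT effectiveness": 7}
    chillers_only = {"operational continuity": 9, "lifecycle cost": 6,
                     "environmental impact": 5, "IT effectiveness": 7}
    print(f"free cooling:  {weighted_score(free_cooling, weights):.1f}")
    print(f"chillers only: {weighted_score(chillers_only, weights):.1f}")
```

With these made-up numbers the free-cooling option scores 7.7 against 7.1 for the chiller-only option; different weights can reverse the outcome, which is why the tradeoffs need to be made explicit.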

In the same vein, software simulation of data center operation, even well before a data center is built, is increasingly used for energy efficiency purposes. As an example of the trend, U.K.-based Romonet recently introduced Prognose, two years in development, which it claims is the first predictive modeling tool for efficient data center design. “Our Prognose allows one to simulate an IT facility well before a single dollar is spent on a new data center,” says Romonet Chief Technology Officer and cofounder Liam Newcombe.

“We try to give IT folks a means of figuring out their actual costs,” he says. “There's a lack of cost transparency and accountability by data center operators, costs which Prognose clearly spells out,” he adds. “Being able to accurately predict energy consumption cost and reduce risk is crucial to understanding and optimizing performance both today and in the future,” says Romonet Chief Executive Officer Zahl Limbuwala.
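How Prognose works internally is not described here. As a generic illustration of predictive cost modeling, the sketch below estimates annual energy cost as IT load × PUE × 8,760 hours × electricity price, then runs that estimate over uncertain inputs with a seeded Monte Carlo simulation to report a cost range. All input ranges and names are hypothetical.

```python
import random
import statistics
from typing import Tuple

HOURS_PER_YEAR = 8760


def annual_energy_cost(it_load_kw: float, pue: float, price_per_kwh: float) -> float:
    """Annual facility energy cost for a constant IT load and PUE."""
    return it_load_kw * pue * HOURS_PER_YEAR * price_per_kwh


def simulate_cost_range(n_trials: int = 10_000, seed: int = 42) -> Tuple[float, float, float]:
    """Return (10th percentile, median, 90th percentile) of simulated annual cost."""
    rng = random.Random(seed)  # seeded so results are reproducible
    costs = []
    for _ in range(n_trials):
        it_load_kw = rng.uniform(400.0, 600.0)  # hypothetical IT load range
        pue = rng.triangular(1.3, 1.9, 1.5)     # hypothetical PUE: low, high, most likely
        price = rng.uniform(0.08, 0.12)         # hypothetical $/kWh
        costs.append(annual_energy_cost(it_load_kw, pue, price))
    deciles = statistics.quantiles(costs, n=10)  # nine cut points
    return deciles[0], statistics.median(costs), deciles[-1]


if __name__ == "__main__":
    low, median, high = simulate_cost_range()
    print(f"Annual energy cost: P10 ${low:,.0f}, median ${median:,.0f}, P90 ${high:,.0f}")
```

Reporting a range rather than a single number is one simple way to express the risk the quotes above refer to.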

Newcombe and Limbuwala are both leading industry contributors to the international harmonization of data center metrics within the Green Grid, the European Commission, and the U.S. EPA and DOE.

RESOURCES

The Green Grid consortium, www.thegreengrid.org

EPA Energy Star Program, www.energystar.gov

The European Union's Code of Conduct, http://re.jrc.ec.europa.eu/energyefficiency/html/standby_initiative_data_centers.htm

The American Society of Heating, Refrigerating and Air-Conditioning Engineers (ASHRAE), www.ashrae.org

“Data Center Retrofit,” by Mukesh K. Khattar, Oracle Corp., ASHRAE Journal, December 2010, bookstore.ashrae.biz/journal/download.php?file=ASHRAE-D-AJ10Dec03-20101201.pdf

“The New Reality of Balance and Optimization In Planning Green Data Centers,” by William Kosik, Hewlett Packard Corp., www.businesslist.com/toolbox/white-papers/index.php?m=whitepaper&d=attachment&id=728


About the Author

Roger Allan

Roger Allan is a veteran electronics journalist who served as Electronic Design's Executive Editor for 15 years. He has covered just about every technology beat, from semiconductors, components, packaging and power devices to communications, test and measurement, automotive electronics, robotics, medical electronics, military electronics and industrial electronics. His specialties include MEMS and nanoelectronics technologies. He is a contributor to the McGraw Hill Annual Encyclopedia of Science and Technology. He is also a Life Senior Member of the IEEE and holds a BSEE from New York University's School of Engineering and Science. Besides Electronic Design, Roger has worked for major electronics magazines including IEEE Spectrum, Electronics, EDN, Electronic Products and the British New Scientist. He also has industry experience as a design engineer working on filters, power supplies and control systems.

Since retiring from Electronic Design, he has contributed extensively to Penton’s Electronic Design, Power Electronics Technology, Energy Efficiency and Technology (EE&T) and Microwaves & RF magazines, covering all of the aforementioned electronics segments as well as energy efficiency, energy harvesting and related technologies. He has also contributed articles to other electronics technology magazines worldwide.

He is a “jack of all trades and a master in leading-edge technologies” like MEMS, nanoelectronics, autonomous vehicles, artificial intelligence, military electronics, biometrics, implantable medical devices, and energy harvesting and related technologies.