Machine Learning Speeds Battery Charging, Testing Across Multiple Variables

A major obstacle to improving batteries for high-capacity applications such as automobiles is that simultaneously optimizing many design parameters, including driving range, charging time, and lifetime, requires many time-consuming experiments. For example, testing lithium-ion batteries across factors such as materials selection, cell manufacturing, and operation to maximize battery lifetime can take months to years.

Designing batteries that can accept ultra-fast charging is difficult because, among other factors, fast charging places additional strain on a battery and tends to significantly shorten its overall lifetime. To minimize this problem, battery engineers typically perform exhaustive tests of charging methods to find the ones that work best.

Now, a team based at Stanford University, collaborating with researchers at MIT and the Toyota Research Institute, has developed an approach based on artificial intelligence (AI) and machine learning (ML) that cuts testing times by up to 98%. The goal of their ML-driven optimal experimental design (OED): find the best method for charging a lithium-ion battery, such as one in an electric vehicle (EV), in under 10 minutes while maximizing overall battery lifetime.

Instead of testing every possible charging method equally, or relying on intuition, the computer learned from its experience to quickly find the best protocols to test. The researchers wrote a program that predicted how batteries would respond to different charging approaches using data from only a few charging cycles. The software also decided in real time which charging approaches to focus on or ignore.
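The early-prediction idea can be illustrated with a deliberately simple stand-in: a one-feature linear regression that maps a quantity measured during the first few cycles to the logarithm of final cycle life. The feature, the toy training data, and the function names are hypothetical; the team's actual model and features are described in their paper.

```python
import math

def fit_line(xs, ys):
    """Ordinary least-squares fit of y = a + b*x; returns (a, b)."""
    n = len(xs)
    mean_x = sum(xs) / n
    mean_y = sum(ys) / n
    sxx = sum((x - mean_x) ** 2 for x in xs)
    sxy = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    b = sxy / sxx
    a = mean_y - b * mean_x
    return a, b

def predict_cycle_life(a, b, feature):
    """Predict final cycle life from an early-cycle feature (model is fit in log10 space)."""
    return 10 ** (a + b * feature)

# Toy training data (hypothetical, not from the study): one feature computed from
# each cell's first few cycles, paired with that cell's observed final cycle life.
early_features = [-5.0, -4.5, -4.0, -3.5, -3.0]
cycle_lives = [2000, 1400, 1000, 700, 500]

a, b = fit_line(early_features, [math.log10(c) for c in cycle_lives])

# A new cell's lifetime is estimated after only a few cycles instead of running it to failure.
print(round(predict_cycle_life(a, b, -4.2)))
```

Fitting in log space keeps predictions positive and reflects that cycle lives can span a wide range; the payoff is that each experiment can be stopped early once the feature is available.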

By reducing both the length and the number of trials, the researchers cut the testing process from almost two years to 16 days. Although the group applied their method only to battery-charging speed, they said it can be applied to numerous other aspects of battery development, and even to non-energy technologies.
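As a rough check on the headline figure, taking "almost two years" as about 700 days (an assumption), the fractional reduction in testing time is:

$$1 - \frac{16\ \text{days}}{700\ \text{days}} \approx 1 - 0.023 = 0.977 \approx 98\%$$

This is consistent with the "up to 98%" reduction cited earlier.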

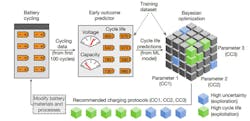

The team used ML to efficiently search the parameter space that specifies the current and voltage profiles of six-step, ten-minute fast-charging protocols, with the goal of maximizing battery cycle life. They combined two key elements to reduce what is called “optimization cost” (Fig. 1):

- An early-prediction model that reduced the time per experiment by predicting the final cycle life from data gathered in the first few cycles.

- A Bayesian-optimization algorithm that reduced the number of experiments by balancing exploration (testing protocols whose performance is still uncertain) and exploitation (focusing on protocols predicted to perform well) to efficiently probe the parameter space of charging protocols.
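The exploration-exploitation trade-off in the second element can be illustrated with a classic upper-confidence-bound (UCB) rule for multi-armed bandits. This is a simpler, swapped-in technique, not the team's Bayesian-optimization algorithm (which is described in their paper). Each candidate protocol is treated as an "arm," and each simulated experiment returns a noisy lifetime score; `run_experiment` and the toy lifetimes are hypothetical.

```python
import math
import random

def ucb_select(means, counts, t, beta):
    """Return the arm with the highest upper confidence bound; untested arms go first."""
    best_arm, best_score = 0, -math.inf
    for arm, (mean, n) in enumerate(zip(means, counts)):
        if n == 0:
            return arm  # pure exploration: every protocol is tried at least once
        # Exploitation term (mean) plus an exploration bonus that shrinks with more tests.
        score = mean + beta * math.sqrt(math.log(t) / n)
        if score > best_score:
            best_arm, best_score = arm, score
    return best_arm

def optimize(run_experiment, n_arms, budget, beta=2.0):
    """Spend `budget` experiments, then recommend the arm with the best estimated mean."""
    means = [0.0] * n_arms
    counts = [0] * n_arms
    for t in range(1, budget + 1):
        arm = ucb_select(means, counts, t, beta)
        y = run_experiment(arm)
        counts[arm] += 1
        means[arm] += (y - means[arm]) / counts[arm]  # incremental running average
    best = max(range(n_arms), key=lambda a: means[a])
    return best, means, counts

# Toy setup (hypothetical): normalized true lifetimes of 10 candidate protocols.
true_life = [0.8, 0.9, 1.0, 1.1, 1.2, 0.95, 0.85, 0.7, 1.05, 0.9]
rng = random.Random(0)

def run_experiment(arm):
    """Hypothetical noisy experiment: true lifetime plus Gaussian measurement noise."""
    return true_life[arm] + rng.gauss(0.0, 0.02)

best, means, counts = optimize(run_experiment, n_arms=len(true_life), budget=200)
print("recommended protocol:", best, "tests per protocol:", counts)
```

A larger `beta` spreads tests more evenly across protocols (more exploration), while a smaller one concentrates tests on the current front-runners (more exploitation).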

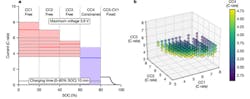

The team reported that, using their approach, they were able to rapidly identify high-cycle-life charging protocols among 224 candidates in 16 days (Fig. 2). In contrast, an exhaustive search without early prediction would have taken over 500 days.
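Purely as a hypothetical illustration of how a fixed charging time constrains a multi-step design space, the sketch below assumes that four constant-current steps each add 20% state of charge and must together take exactly 10 minutes, so the fourth current is fixed by the first three. The remaining steps of a six-step protocol are not modeled, and the grid of C-rates and the current cap are invented; the resulting count is not meant to reproduce the team's 224 candidates.

```python
from itertools import product

SOC_PER_STEP = 0.20     # assumption: each of four steps adds 20% state of charge
TIME_BUDGET_MIN = 10.0  # ten-minute charging constraint from the article

def step_minutes(c_rate):
    """Minutes to add SOC_PER_STEP of capacity at a constant C-rate (1C = full charge in 60 min)."""
    return SOC_PER_STEP * 60.0 / c_rate

def last_step_current(cc1, cc2, cc3):
    """Solve for the fourth C-rate so the four steps take exactly TIME_BUDGET_MIN.
    Returns None if the first three steps already use up the time budget."""
    remaining = TIME_BUDGET_MIN - sum(step_minutes(c) for c in (cc1, cc2, cc3))
    if remaining <= 0:
        return None
    return SOC_PER_STEP * 60.0 / remaining

def enumerate_protocols(levels, max_c_rate):
    """List every (cc1, cc2, cc3, cc4) on the grid whose derived cc4 is within the cap."""
    protocols = []
    for cc1, cc2, cc3 in product(levels, repeat=3):
        cc4 = last_step_current(cc1, cc2, cc3)
        if cc4 is not None and cc4 <= max_c_rate:
            protocols.append((cc1, cc2, cc3, round(cc4, 2)))
    return protocols

# Hypothetical grid of C-rates and current cap, chosen for illustration only.
levels = [4.0, 5.0, 6.0, 7.0, 8.0]
candidates = enumerate_protocols(levels, max_c_rate=4.8)
print(len(candidates), "candidate protocols")
```

Because the time budget ties the steps together, choosing high currents early forces a lower current later, which is why such protocols form a constrained space rather than a free grid.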

Their closed-loop methodology automatically incorporates feedback from past experiments to improve future decisions. Equally important, they were able to validate the accuracy and efficiency of their approach. They further assert that their approach can be generalized to other applications in battery design, and even applied to other scientific domains that involve time-intensive experiments and multi-dimensional design spaces.

In addition to dramatically speeding up the testing process, the computer’s solution was also better and more unconventional than what a battery scientist would likely have devised, said Stanford Professor Stefano Ermon, a team co-leader. “It gave us this surprisingly simple charging protocol – something we didn’t expect.” Instead of applying the highest current at the beginning of the charge, the algorithm’s solution applies it in the middle of the charge. Ermon added, “That’s the difference between a human and a machine. The machine is not biased by human intuition, which is powerful but sometimes misleading.”

The full results of this research can’t be summarized by a few numbers, words, or metrics, but they are available in the detailed paper “Closed-loop optimization of fast-charging protocols for batteries with machine learning,” published in Nature. Beyond the main paper, the Supplementary Information provides additional graphs and charts, a discussion of the machine-learning algorithm, perspectives on the results, and further details of the experimental setup. This work was supported by Stanford University, the Toyota Research Institute, the National Science Foundation, the U.S. Department of Energy, and Microsoft Corp.