Virtual Switch Offloading Maximizes Data-Center Utilization

What you’ll learn:

- Saving costs in the data center.

- Using a vSwitch to increase ROI.

- How offloading the vSwitch to a SmartNIC frees up cores to improve efficiency.

Data-center operators and service providers are increasingly deploying compute resources at the network edge, spurred by use cases that rely on low latency, such as virtual RAN, IoT, secure access service edge (SASE), and multi-access edge computing (MEC). These resources face common constraints in terms of hardware footprint and power consumption, whether they're located in converged cable access platforms (CCAPs), purpose-built micro data centers, or traditional telco points of presence (PoPs). Concurrently, operators are under pressure to ensure their data centers are as cost-effective as possible given that thousands of edge data centers are being deployed.

One way to meet these challenges of power, footprint, and cost in edge data centers is to offload virtual switching from server CPUs onto programmable smart network interface cards (SmartNICs).

What’s a vSwitch?

In a standard data center, virtual switches (vSwitches) connect virtual machines (VMs) with both virtual and physical networks. In many use cases, a vSwitch also is required to connect “East-West” traffic between the VMs themselves, supporting applications such as advanced security, video processing, or CDN.

Various vSwitch implementations are available. OVS-DPDK is probably the most widely used, due to the optimized performance that it achieves by using Data Plane Development Kit (DPDK) software libraries, as well as its availability as a standard open-source project.

The vSwitch, as a software-based function, must run on the same server CPUs as the VMs, which is a challenge for solution architects. But it’s only the VMs running applications and services that ultimately generate revenue for the operator—no one gets paid for just switching network traffic. Thus, there’s a strong business incentive to minimize the number of cores consumed by the vSwitch in order to maximize the number of cores available for VMs.

This isn’t difficult for low-bandwidth use cases: The most recent versions of OVS-DPDK can switch approximately 8 million packets per second (Mpps) of bidirectional network traffic per core, assuming 64-byte packets. So, if a VM only requires 1 Mpps, then a single vSwitch core can switch enough traffic to support eight VM cores, and the switching overhead is modest. On a 16-core CPU with one core dedicated to management functions, two vSwitch cores are able to support 13 VM cores.

However, should each VM need 10 Mpps, more than one vSwitch core is needed to support each VM core, and switching consumes more cores than the VMs do. In the 16-core scenario, eight cores must be configured to run the vSwitch while only six (consuming 60 Mpps) are running VMs. With one core dedicated to management, the remaining core sits idle, because a seventh VM would push the load to 70 Mpps and require a ninth vSwitch core. And the problem worsens as the bandwidth requirements increase for each VM.
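The core-count arithmetic in the two scenarios above can be reproduced with a few lines of Python. This is a minimal sketch, not a sizing tool: the function name `allocate_cores` and its parameters are illustrative, and it assumes the figures quoted in the text (roughly 8 Mpps per vSwitch core, one management core) along with a simplifying assumption that throughput scales linearly with the number of vSwitch cores.

```python
import math

def allocate_cores(total_cores, vm_mpps, vswitch_mpps_per_core=8.0, mgmt_cores=1):
    """Return (vm_cores, vswitch_cores, idle_cores) for the largest VM count that fits.

    Assumes each VM core needs vm_mpps of switching capacity and that
    vSwitch throughput scales linearly with core count (a simplification).
    """
    usable = total_cores - mgmt_cores
    best = (0, 0, usable)
    for vm_cores in range(1, usable + 1):
        # Whole vSwitch cores needed to carry the aggregate VM traffic.
        vswitch_cores = math.ceil(vm_cores * vm_mpps / vswitch_mpps_per_core)
        if vm_cores + vswitch_cores > usable:
            break  # Adding another VM no longer fits on this CPU.
        best = (vm_cores, vswitch_cores, usable - vm_cores - vswitch_cores)
    return best

print(allocate_cores(16, vm_mpps=1.0))   # (13, 2, 0)
print(allocate_cores(16, vm_mpps=10.0))  # (6, 8, 1)
```

At 1 Mpps per VM the sketch yields 13 VM cores and two vSwitch cores; at 10 Mpps it yields six VM cores, eight vSwitch cores, and one idle core, matching the numbers in the text.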

When a data center uses a high percentage of CPU cores to run virtual switching, more servers are required to support a given number of subscribers, or, conversely, the number of subscribers supported by a given data-center footprint is unnecessarily constrained. Power also is being consumed running functions that generate no revenue. As a result, there’s strong incentive to minimize the number of CPU cores required for virtual switching, especially for high-throughput use cases.

SmartNIC Solution

By offloading the OVS-DPDK fast path, programmable SmartNICs can address that problem. Only a single CPU core is consumed to support this offload, performing the “slow path” classification of the initial packet in a flow before the remaining packets are processed in the SmartNIC. This frees up the remaining CPU cores to run VMs, which significantly improves the VM density (the number of VM cores per CPU).
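The split between slow path and fast path can be illustrated with a toy model. The sketch below is purely conceptual and does not use any OVS, DPDK, or SmartNIC API: the class `OffloadModel`, its flow table, and the forwarding policy in `classify` are all hypothetical stand-ins chosen for illustration.

```python
from collections import Counter

class OffloadModel:
    """Toy model: the first packet of a flow takes the CPU slow path,
    and later packets of that flow hit the offloaded flow table."""

    def __init__(self):
        self.flow_table = {}    # flow key -> action; stands in for the SmartNIC table
        self.stats = Counter()  # counts packets handled on each path

    def classify(self, flow_key):
        # Hypothetical slow-path policy: forward toward the destination.
        _src, dst = flow_key
        return f"forward-to:{dst}"

    def handle_packet(self, flow_key):
        action = self.flow_table.get(flow_key)
        if action is None:
            # Flow not seen before: classify on the CPU, then install the rule.
            action = self.classify(flow_key)
            self.flow_table[flow_key] = action
            self.stats["slow_path"] += 1
        else:
            # Known flow: handled without touching the CPU in this model.
            self.stats["fast_path"] += 1
        return action

model = OffloadModel()
for _ in range(5):
    model.handle_packet(("vm1", "vm2"))
model.handle_packet(("vm1", "vm3"))
print(dict(model.stats))  # {'slow_path': 2, 'fast_path': 4}
```

In this model only one packet per flow ever reaches the CPU, which is why a single core can be enough for the slow path when flows are long-lived.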

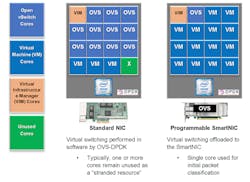

One use case involves a 16-core CPU connected to a standard NIC, where 11 vSwitch cores are required to switch the traffic consumed by three VM cores (see figure). In this situation, one CPU core remains unused as a stranded resource because there aren’t enough vSwitch cores available to switch the traffic to a fourth VM core. This waste is typical for use cases where the bandwidth required for each VM is high.

Offloading the vSwitch function to a programmable SmartNIC frees up 10 additional cores that are now available to run VMs. Thus, the VM density in this case increases from three to 10, an improvement of 3.3X. In both the standard NIC and SmartNIC examples, a single CPU core is reserved to run the Virtual Infrastructure Manager (VIM), which represents a typical configuration for virtualized use cases.

Data-center operators can now calculate the improvement achievable in VM density by offloading the vSwitch to a programmable SmartNIC. It’s then straightforward to estimate the resulting cost savings over whatever timeframe is interesting to your CFO. All you need to do is start with the total number of VMs that need to be hosted in the data center, and subsequently factor in some basic assumptions about cost and power for the servers and the NICs, OPEX-vs.-CAPEX metrics, data-center power usage effectiveness (PUE), and server refresh cycles.
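A back-of-the-envelope version of that estimate might look like the following sketch. Only the VM densities (three and 10 VM cores per server) come from the example above. Every cost, wattage, PUE, and electricity-price figure is a placeholder assumption, the function names are illustrative, and server refresh cycles are not modeled.

```python
import math

def servers_needed(total_vm_cores, vm_cores_per_server):
    """Whole servers required to host the given number of VM cores."""
    return math.ceil(total_vm_cores / vm_cores_per_server)

def estimate_cost(total_vm_cores, vm_cores_per_server, server_cost, nic_cost,
                  server_watts, nic_watts, pue, dollars_per_kwh, years):
    """Return (servers, total_cost) as CAPEX plus energy OPEX over `years`."""
    servers = servers_needed(total_vm_cores, vm_cores_per_server)
    capex = servers * (server_cost + nic_cost)
    # PUE scales IT power up to total facility power.
    kwh = servers * (server_watts + nic_watts) / 1000 * pue * 24 * 365 * years
    return servers, capex + kwh * dollars_per_kwh

# Placeholder inputs (assumptions, not vendor data).
common = dict(total_vm_cores=3000, server_cost=10_000, server_watts=400,
              pue=1.5, dollars_per_kwh=0.10, years=3)
standard = estimate_cost(vm_cores_per_server=3, nic_cost=500, nic_watts=20, **common)
smartnic = estimate_cost(vm_cores_per_server=10, nic_cost=2_000, nic_watts=75, **common)

print("VM density gain:", round(10 / 3, 1))  # 3.3
print("Servers:", standard[0], "vs", smartnic[0])  # 1000 vs 300
print("Estimated savings: $", round(standard[1] - smartnic[1]))
```

With these placeholder inputs, the higher per-NIC cost and power draw are outweighed by the drop in server count, but the outcome depends entirely on the values you plug in.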

One of the most significant costs in an edge data center is the server CPUs. Such data centers will increase in number to provide a foundation for innovative new services and applications. Therefore, it’s crucial to optimize usage of the CPUs to get the best return on investment (ROI). Offloading the vSwitch to a programmable SmartNIC can yield substantial cost savings and efficiency improvements, so take time to assess your needs, calculate your ROI, and see how much you could save with this strategy.

About the Author

Charlie Ashton

Senior Director of Business Development, Napatech

Charlie Ashton is senior director of business development at Napatech. He has held leadership roles in both engineering and marketing at software, semiconductor, and systems companies, including 6WIND, Green Hills Software, Timesys, Motorola (now Freescale Semiconductor), AMCC (now AppliedMicro), AMD, Dell, and Wind River.