A Test-Driven Approach to Developing Embedded Software

The growing complexity of embedded software development requires a new unified, test-driven approach, one that adopts early developer testing practices and implements automated software verification to prevent and detect more defects sooner. The objective is to have all developers and integrators create tests that can be reused and automated throughout the development cycle. This strategy maximizes automated testing to produce a more effective, scalable software process that can handle the increasing complexities of today's embedded software efforts.

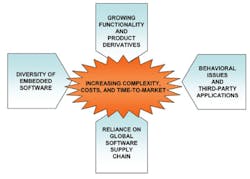

In the past 10 years, semiconductor technology and processes have continually improved to produce more densely integrated and feature-packed silicon. In response, embedded software development has increased rapidly to support all of the new functionality. The seemingly exponential growth of embedded software has introduced additional complexities and challenges to software engineering organizations (Figure 1):

● Development teams now often include hundreds of engineers creating and delivering countless software components.
● Software now is sourced from a global ecosystem of distributed engineers, outsourcers, chipset partners, and third-party technology suppliers. Even the open-source community introduces challenges of managing or mitigating different time zones, cultures, software practices, release schedules, and tools.
● Companies often are concurrently developing and delivering multiple product lines or product derivatives. These challenges involve managing software changes across the product variants, ensuring that modifications are effectively verified on all hardware-software combinations.
● The diverse nature of embedded software requires a variety of testing methods. The way engineers approach validating a protocol stack differs from how they might test device drivers or kernel-level operating system code or an embedded database. Some components are real-time sensitive, others might be data-centric, and still other functionality may require graphical verification.
● Embedded applications execute in complex multithreaded environments, and developers often contend with concurrency and timing issues. For this reason, engineers must verify the behavior of components and features across various permutations.
● Today's embedded systems often are not merely a fixed-function environment but rich, sophisticated software platforms for creating and adding third-party applications. API-level testing becomes critical, and engineering organizations cannot simply rely on black-box testing.

The Impact on Software Development

These new complexities have caught the industry off guard. Companies have not had the opportunity to adequately revamp their software processes and methods, throwing the industry into an embedded software crisis. Some telltale symptoms and patterns have emerged, highlighting the seriousness of the problem.

Disparate Testing

Different functional teams create their own test tools or approaches that are tailored for specific components or functionality. The resulting plethora of tools serves only the needs of the individual teams and has very limited value elsewhere.

Too Many Late Defects

At many companies, integration testing consists of manually executing a rudimentary set of tests. Even when integration testing is more extensive, the test coverage is limited by the time-consuming nature of manual testing. For this reason, QA or product testing often is the first time all embedded software components are extensively tested as an integrated whole. As a result, QA engineers usually uncover large volumes of defects. Because these defects are caught late, they are fixed when they're the most difficult, time-consuming, and expensive to resolve.

Unpredictable Delivery

Development teams generally have no metrics or visibility into the health of their software until late, during the integration or QA phases. With the large numbers of defects, especially those found during product testing, software managers inevitably not only miss their delivery schedule, but also find it difficult to predict new delivery dates.

Major New Verification Strategy Objectives

The embedded industry is overdue for a more scalable and effective software testing and integration strategy. Taking into account today's complexities and challenges, any efforts to evolve or improve software integration and verification should address four key objectives.

First, manual testing by developers or integrators must be minimized. Manual testing is tedious, time-consuming, and error-prone, and it is a poor use of valuable engineering time. Also, at companies building multiple products concurrently from the same code base, engineers do not have access to all hardware-software permutations.

Second, achieving phase containment will prevent or catch defects early, before QA. Important metrics of a new process will be significantly fewer defects escaping into QA and a reduction in the time and resources required in product testing.

Third, processes and infrastructure must be truly scalable, capable of supporting integration and testing of many components for multiple product lines concurrently.

Fourth, improving the predictability of releases and providing earlier visibility into the software health of releases are important. This will enable managers to pinpoint trouble spots and make decisions based on real data or metrics.

A Unified Test-Driven Strategy

Automation is the key to achieving these major objectives. The strategy is to unify all software developers and integrators in delivering reusable, automated tests for a common test framework. Then, you can reuse and automate the tests whenever code has changed or at various integration points throughout the development cycle. This strategy sounds conceptually simple, but it nonetheless requires a process change: adopting new methods, implementing automated infrastructure, and changing the mentality regarding the importance of developer testing.

An Embedded Software Verification Platform

Due to the complexity of today's embedded software, developers need to actively participate in the verification of their code. As the creators and architects of software components, only developers truly understand the inner workings of their code.

Following a best practice from test-driven development (TDD), ideally developers would create tests upfront, before implementing code. Developing tests in advance fleshes out the design and especially aids in designing for testability. By thinking about testing upfront, you'll take into account and implement facilities to access internal APIs, data structures, or other information that aid in testing.
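As a rough illustration of the test-first idea, the sketch below uses Python's standard unittest module on the host. The RingBuffer component, its push/pop interface, and the debug_state() test hook are all hypothetical names invented for this example; the point is only that writing the tests first tends to surface the need for such a hook.

```python
import unittest


class RingBuffer:
    """Hypothetical fixed-capacity FIFO, written only after the tests below."""

    def __init__(self, capacity):
        self._items = [None] * capacity
        self._head = 0   # index of the oldest item
        self._count = 0  # number of stored items

    def push(self, item):
        """Store an item; return False (and drop it) when the buffer is full."""
        if self._count == len(self._items):
            return False
        tail = (self._head + self._count) % len(self._items)
        self._items[tail] = item
        self._count += 1
        return True

    def pop(self):
        """Remove and return the oldest item, or None when empty."""
        if self._count == 0:
            return None
        item = self._items[self._head]
        self._head = (self._head + 1) % len(self._items)
        self._count -= 1
        return item

    def debug_state(self):
        """Test hook (assumed): expose internal indices for wrap-around checks."""
        return {"head": self._head, "count": self._count}


class RingBufferTest(unittest.TestCase):
    # Notionally written before RingBuffer existed; these cases drove the
    # design, including the debug_state() hook.

    def test_push_reports_overflow(self):
        buf = RingBuffer(capacity=2)
        self.assertTrue(buf.push(1))
        self.assertTrue(buf.push(2))
        self.assertFalse(buf.push(3))

    def test_head_wraps_around(self):
        buf = RingBuffer(capacity=2)
        buf.push("a")
        buf.push("b")
        self.assertEqual(buf.pop(), "a")
        self.assertEqual(buf.pop(), "b")
        self.assertEqual(buf.debug_state(), {"head": 0, "count": 0})
```

Saved as a module, the cases can be run with `python -m unittest`. In a real project the tests would fail first and the implementation would be filled in until they pass.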

The first role of an embedded software verification platform is to facilitate creation of reusable, automated tests quickly and easily by providing specialized tools and techniques. For example, the verification platform might enable developers to quickly break dependencies by simulating missing code with a GUI, simple scripts, or C/C++ code. Or perhaps the verification platform would support recording and playback to automate a series of manual test operations.

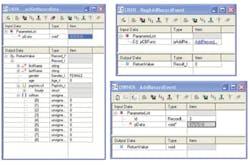

An embedded software verification platform also provides value at this stage by enabling developers to validate their tests before code is available. For API-level testing, a software verification platform like STRIDE™ can execute and verify tests by simulating or modeling APIs through scripts, C/C++ code, or a GUI.

This provides developers with very simple, quick means to execute tests and feed them canned responses to validate them. For example, if code-under-test depends on the return values of another application interface, the developer can dynamically mock-up the desired return values.
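The idea of feeding canned responses to code under test can be sketched with Python's standard unittest.mock module. This is not how any particular verification platform does it; battery_status, read_adc_millivolts, and the voltage thresholds are hypothetical and chosen only for illustration.

```python
from unittest.mock import Mock


def battery_status(read_adc_millivolts):
    """Code under test: classify battery level from an ADC reading.

    `read_adc_millivolts` stands in for a driver API that may not exist yet;
    the name and the thresholds are assumptions made for this sketch.
    """
    millivolts = read_adc_millivolts()
    if millivolts < 3300:
        return "critical"
    if millivolts < 3600:
        return "low"
    return "ok"


# Simulate the missing driver and feed it canned responses, one per call.
fake_adc = Mock(side_effect=[3200, 3500, 4100])

statuses = [battery_status(fake_adc) for _ in range(3)]
print(statuses)  # ['critical', 'low', 'ok']
```

Passing the dependency in as a parameter is one simple way to make it replaceable; the same effect could be achieved by patching a module-level function.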

Code-Test-Refactor

Following another TDD practice, developers would exercise or test their code as they implement it rather than testing it after completion. This incremental code-test-refactor approach allows developers to make frequent corrections and implementation adjustments along the way. Even for developers who do not practice TDD, there are occasions when they would like to quickly test or exercise code under development.

To exercise the software, an engineer might quickly implement some ad hoc code to drive the component. If a developer already has implemented tests, then he often would need to compile, link, and invoke them.

There are several potential impediments in this process. For example, the developer might resort to writing throwaway test code just to exercise some code under development. Long compile times also can become a hindrance, especially when builds can take anywhere from 30 minutes to a few hours. Long build times can be very frustrating and a deterrent for developers to test frequently or extensively.

Another barrier can be unavailable or missing code for which there are dependencies. To get around this, the developer would need to implement stub code for the unavailable services or module.

In this scenario, an embedded software verification platform can significantly improve productivity by optimizing the code-test-refactor cycle. For example, the verification platform could enable developers to exercise code through a GUI on the host without writing any test code, as depicted in Figure 2. In place of writing stub code, developers would use the verification platform to quickly simulate missing dependencies via a GUI, C/C++ code, or scripts.

Unifying Verification

This new approach succeeds only if developers and integrators create and deliver compatible, reusable automated tests. This requires that all developers and integrators be unified in key aspects of test development.

The first key is the software verification platform, which serves as the common test framework that supports, manages, and automates tests from all of the developers and integrators. With the diversity of embedded software components, the test framework should be flexible enough to support various testing strategies.

Hard real-time code may require tests written in native code to be directly built into the target. Soft real-time applications can be exercised remotely from the host, possibly using a scripting language. Network protocols might have internal state machines that should be verified through white-box techniques. Data-centric APIs may require facilities to efficiently enter complex data.
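To make the white-box case concrete, the following sketch checks a protocol's internal states rather than only its external outputs. The LinkStateMachine class, its states, and its events are hypothetical; a real protocol stack would expose its state through whatever test facility the target provides.

```python
class LinkStateMachine:
    """Hypothetical three-state link protocol used only for illustration."""

    TRANSITIONS = {
        ("closed", "open"): "connecting",
        ("connecting", "ack"): "established",
        ("connecting", "timeout"): "closed",
        ("established", "close"): "closed",
    }

    def __init__(self):
        self.state = "closed"

    def handle(self, event):
        # Events with no defined transition leave the state unchanged.
        self.state = self.TRANSITIONS.get((self.state, event), self.state)
        return self.state


def trace(events):
    """Feed events to a fresh machine and record every internal state."""
    machine = LinkStateMachine()
    return [machine.handle(event) for event in events]


print(trace(["open", "ack", "close"]))    # ['connecting', 'established', 'closed']
print(trace(["open", "timeout", "ack"]))  # ['connecting', 'closed', 'closed']
```

A black-box test would see only whether the link came up. The state trace also shows that a late "ack" after a timeout is ignored.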

Second, the development team must conform to a level of uniformity when it creates tests. For example, guidelines might require that tests be self-contained, that is, not dependent on the preceding execution of other tests. Standard entry and exit criteria would guarantee that tests enter and leave the target in a consistent, known state, enabling tests to be executed in any sequence.

All tests would leverage the same error-handling and recovery mechanisms. Internal policies and conventions would establish naming conventions, archiving and maintenance policies, and the standard languages for implementing tests.
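One way to enforce standard entry and exit criteria is a shared base class that every test inherits. The sketch below uses unittest's setUp and tearDown hooks; FakeTarget, its reset() method, and the "idle" state are hypothetical stand-ins for a real target connection.

```python
import unittest


class FakeTarget:
    """Hypothetical stand-in for a connection to the device under test."""

    def __init__(self):
        self.state = "unknown"
        self.volume = None

    def reset(self):
        # Bring the target to the agreed-upon known state.
        self.state = "idle"
        self.volume = 5


class TargetTestCase(unittest.TestCase):
    """Shared base class that enforces the entry and exit criteria."""

    target = FakeTarget()  # one target shared by every test

    def setUp(self):
        # Entry criterion: every test starts from the known state.
        self.target.reset()

    def tearDown(self):
        # Exit criterion: leave the target in the known state, pass or fail.
        self.target.reset()


class VolumeTests(TargetTestCase):
    def test_volume_up(self):
        self.target.volume += 1
        self.assertEqual(self.target.volume, 6)

    def test_volume_down(self):
        self.target.volume -= 1
        self.assertEqual(self.target.volume, 4)
```

Because each test resets the shared target on the way in and on the way out, the two cases give the same result in either order.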

Continually Leveraging Automated Testing

There are many opportunities to leverage the automated tests:

● Developers can perform regression testing of their own code whenever they modify the software.
● Tests can be re-executed at integration points or whenever developers integrate their code with other software components.
● Developers' tests can be aggregated and automatically executed with an automated complete target build at regularly scheduled intervals. This practice is referred to as continuous integration and is very effective for achieving and maintaining software stability because defects are uncovered earlier and more frequently, providing continual visibility into and metrics relating to the software's overall health.
● The collection of developers' and integrators' tests also can be executed by the QA team to complement black-box testing.

In these cases, the software verification platform provides management, report generation, and automation by aggregating, organizing, controlling, and executing tests, and then collecting, analyzing, and displaying results.

Other Infrastructure and Considerations

To implement and integrate this unified, automated verification strategy into a development process, there are a few other important requirements to consider:

Component-Based Architecture

Software functionality must be cleanly partitioned into manageable, well-defined components or modules; otherwise, it is very difficult to test, integrate, and maintain.

Scalable, Automated Build Infrastructure

For companies that concurrently develop multiple products from the same code base, a robust automated software build environment is important. As new code is submitted for integration, an automated build system is integral to creating all of the different product software derivatives to test the new code. Optionally, a testing infrastructure would automate loading the different software builds onto various target hardware.

Often these processes can be effectively managed through software tools.
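As a small sketch of what such tooling has to enumerate, the following builds the list of product and hardware combinations that each code submission would need to be built and tested against. The product names, board revisions, and the unsupported pairing are hypothetical.

```python
from itertools import product

# Hypothetical product lines and hardware revisions sharing one code base.
PRODUCTS = ["handset", "tablet"]
BOARDS = ["rev_a", "rev_b"]
UNSUPPORTED = {("tablet", "rev_a")}  # combinations that are never shipped


def build_matrix(products, boards, unsupported):
    """Return every product/board pair that needs a build and a test run."""
    return [
        combo for combo in product(products, boards)
        if combo not in unsupported
    ]


for prod, board in build_matrix(PRODUCTS, BOARDS, UNSUPPORTED):
    # A real system would invoke the build tool and load the image here.
    print(f"build {prod} for {board}, then run the automated tests")
```

The matrix grows multiplicatively with each new product or board, which is why manual coverage of every combination quickly becomes impractical.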

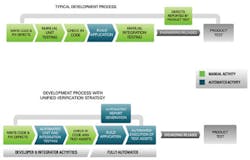

A Transformed Development Process

Implementing this unified verification approach will indeed transform the software development process (Figure 3):

● Tests from all developers will be managed and automated from a single common framework.
● Anyone can reuse and execute any test for any component at any time.
● A growing portfolio of developers' tests can be automated for regression or verification at various integration points or whenever code is modified.
● Metrics for software health and completeness can be collected much earlier in the development cycle.

Figure 3. An Embedded Software Development Process With and Without Automated Tests

Even if this strategy is applied incrementally or selectively to certain development teams, the impact can be dramatic: a greater volume of defects caught and prevented before product test, shorter cycles and fewer resources required in product test, increased visibility into software health, more predictable delivery schedules, and higher-quality products delivered on time. These are all tangible benefits that can transform a company's embedded software engineering into a true competitive advantage.

About the Author

Mark Underseth is CEO, president, and founder of S2 Technologies. He has more than 20 years of experience in the design of embedded communications devices, including mobile satellite, automotive, and digital cellular products. Mr. Underseth received a B.A. and an M.S. in computer science and has been awarded three patents and has five patents pending in the area of embedded systems. S2 Technologies, 2037 San Elijo Ave., Cardiff, CA 92007-1726, 760-635-2345

For more information on an embedded software verification platform: www.rsleads.com/704ee-176