Big data analytics becomes strategic test tool

Applications ranging from semiconductor test to large cyberphysical system monitoring are generating huge amounts of data. Indeed, we are living with a Cambrian explosion of data, according to Dave Wilson, vice president of product marketing, academic, National Instruments. Speaking at NIDays Boston last November, he said NI and its customers have generated 22 exabytes of data over the past three decades.

At the same event, Rahul Gadkari, NI principal regional marketing manager, U.S. East, said that unfortunately, companies analyze only 5% of the critical data they are capturing. Frost & Sullivan confirms that figure but adds that companies are beginning to see big data analytics as a strategic tool to improve efficiency.

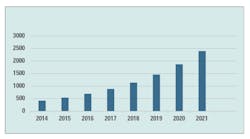

Source: Frost & Sullivan

In a new report titled Global Big Data Analytics Market for Test and Measurement, the market research firm projects market revenues for big data analytics in the test-and-measurement space to grow from $421.0 million in 2014 to $2,401.7 million in 2021 at a compound annual growth rate of 28.24%. The company said automotive OEMs are looking to utilize big data analytics to optimize products in the production line and keep track of maintenance and repair requirements while the aerospace industry is employing big-data analytics for component manufacturing, preventive maintenance services, and real-time structural health monitoring.
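As a quick sanity check on the growth figures above, the short sketch below recomputes the compound annual growth rate from the two revenue endpoints. It assumes the standard CAGR formula and seven compounding years (2014 to 2021); the function name `cagr` is illustrative and does not come from the Frost & Sullivan report.

```python
def cagr(start_value: float, end_value: float, years: int) -> float:
    """Compound annual growth rate as a fraction (0.10 means 10% per year)."""
    return (end_value / start_value) ** (1 / years) - 1


# Revenue endpoints quoted in the article, in millions of U.S. dollars.
revenue_2014 = 421.0
revenue_2021 = 2401.7

# Assumption: seven compounding periods between the 2014 and 2021 figures.
rate = cagr(revenue_2014, revenue_2021, 2021 - 2014)
print(f"Implied CAGR: {rate:.2%}")
```

Under those assumptions the result agrees with the 28.24% rate quoted in the report.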

“Research and development, risk management, and asset management are the key applications in test and measurement where big data analytics is gaining traction,” said Frost & Sullivan Manufacturing 4.0 research analyst Apoorva Ravikrishnan in a press release. “Real-time monitoring and preventive maintenance, too, are racing to the top of investment priorities across industries.”

Moving beyond 5%

Outperforming the 5% figure cited by Gadkari and Frost & Sullivan was the powertrain controller R&D group at Jaguar Land Rover. Speaking at NIWeek last August, Pablo Abad, powertrain simulation and tools research leader at the company, said the group was analyzing about 10% of the 500 GB of data per day it was capturing. That wasn’t enough, though, and the group embarked on a year-long automation project using NI DIAdem and NI DataFinder Server Edition to help analyze 95% of the group’s data and avoid repeating tests—thereby saving costs. In addition, the automated approach proved to be 20 times faster than the previous manual approach.

DIAdem is software specifically designed to help engineers and scientists quickly locate, inspect, analyze, and report on measurement data using one software tool. DIAdem 2015 is NI’s 64-bit release, enabling users to load and analyze more data within the DIAdem environment. The 2015 version also offers improved search capabilities to globally find specific data sets for analysis, and it includes new data-visualization and analysis functions.

The NI DataFinder Server Edition has several features and capabilities that make it a suitable data-management tool for large groups in which multiple engineers need to access large amounts of data possibly stored in multiple locations.

Despite the inroads big data analytics is making into the test and measurement space, Frost & Sullivan reported that high initial costs will slow large-scale adoption to some extent. Some firms prefer in-house systems managed by their IT departments, and some OEMs remain skeptical about the reliability of data analytics.

“Transitioning from rigid analog systems to digitized, smart, and automated technologies will be the need of the hour for big data analytic vendors striving to strengthen test and measurement capabilities,” said Ravikrishnan. “Further, acquiring industry-specific expertise will differentiate analytic providers from the competition and quicken their rise to the top of the global big data analytics market for test and measurement.”

Big data for IC test

The semiconductor industry has accepted the need for data analytics, based on the experiences of Optimal+ (which changed its name from OptimalTest in 2014). At successive International Test Conferences extending back to at least 2011, the company has invited customers including AMD, NVIDIA, Broadcom, and Qualcomm to describe their experiences with Optimal+ tools to ITC attendees.

The presenter for the 2015 event, held last October in Anaheim, was Marc Jacobs, vice president of operations at Marvell Semiconductor. Marvell ships about 1 billion chips per year, he said, with a supply chain extending from wafer manufacturing to IC assembly and test, with test providers including ASE Group, STATS ChipPAC, KYEC, SPIL, and Marvell itself. Systems in place in 2013 were working but labor-intensive, and the Marvell operations team wanted faster access to manufacturing data, the capability to drill down across product and test domains, and automated data analytics with alerts. In addition, the team wanted better data-driven internal communications across company sites and external communications with subcons.

Marvell implemented Optimal+ in 2013, Jacobs said, achieving improvement in yield, throughput, and capacity. In addition, consistent data could be communicated internally and externally among engineering, operations, planners, finance, and management.

Optimal+ last June announced the availability of Release 6.0 of its Semiconductor Operations Platform. This latest release includes a new tool called EXACT (EXtreme Analytics and CharacTerization). Responding to growing demand for greater data collection and analytic performance, EXACT delivers big-data performance to semiconductor manufacturing operations. It leverages the HPE Vertica Analytics Platform (called HP Vertica before the recent split of HP and Hewlett Packard Enterprise) so that customers can take advantage of all of the data generated across their globally distributed supply chain, including new product introductions and high-volume manufacturing.

And Optimal+ is looking to expand. In September, KKR, the investment firm, announced it is leading a $42 million growth equity investment in Optimal+. KKR made its investment alongside the existing lead investors Carmel Ventures and Pitango, two Israel-based venture capital funds. KKR will support the global expansion plans of Optimal+ with primary capital as well as access to its global network of companies and technology experts.

Expanding the cloud

As this article went to press, ADLINK Technology announced availability of the latest version of its remote management middleware tool, SEMA 3.0 (for Smart Embedded Management Agent), which is able to monitor and collect system performance and status information from distributed devices. SEMA 3.0 is supported on a spectrum of hardware platforms; most of ADLINK’s computer-on-modules, single-board computers, and embedded computer systems are SEMA-enabled.

In addition, ADLINK introduced SEMA Cloud, a new platform-as-a-service application enablement platform that offers a comprehensive industrial IoT cloud solution from a single source. SEMA Cloud comprises a cloud server architecture hosting the SEMA Cloud IoT Service, which can be managed and administered from the web-based SEMA Cloud Management Portal. The cloud solution includes gateway software with an IoT stack on top of intelligent SEMA middleware, enabling embedded devices to connect securely to the cloud using state-of-the-art encryption technologies without additional design requirements. SEMA Cloud is a flexible platform that is application-ready or open for further development of customer-specific applications.

As cloud computing and big-data analytics technologies expand in applications ranging from electronics test to medical diagnostics (see “Boston Children’s Hospital to leverage IBM Watson”), many details will need to be worked out. As Karim Arabi, vice president of engineering at Qualcomm, put it during an ITC keynote address last October, big data and abundant computing power are tending to push computation into the cloud, but with the deployment of the Internet of Things, we can’t afford to send all the data into the cloud. Consequently, demand for processing horsepower at the edge is increasing. And for efficiency, we need the right machine for the right task.

Wilson at the NIDays event made a similar point: Cloud-based solutions can lack context and can’t handle every application. A robot, for example, doesn’t have time to consult the cloud to avoid stepping on your foot, he noted. Computation must be in the loop with interaction at the edge device—in this case, the robot’s limb. It will take innovations on the hardware side as well as software side to achieve optimum results.

Boston Children’s Hospital to leverage IBM Watson

Courtesy of Boston Children’s Hospital

IBM has over the past year been pursuing cloud computing for medical applications, having launched IBM Watson Health and the Watson Health Cloud platform last April. IBM said the average person is likely to generate more than a million gigabytes of health-related data over a lifetime.

In a more recent initiative, IBM and Boston Children’s Hospital announced in November that they intend to collaborate to apply IBM’s Watson cognitive platform to help clinicians identify possible options for the diagnosis and treatment of rare pediatric diseases.

In an initial project focused on kidney disease, Watson will analyze the massive volumes of scientific literature and clinical databases on the Watson Health Cloud to match genetic mutations to diseases and help uncover insights that could help clinicians identify treatment options.

The goal is to create a cognitive system that can help clinicians interpret a child’s genome sequencing data, compare this with medical literature, and quickly identify anomalies that may be responsible for the unexplained symptoms.

“Coping with an undiagnosed illness is a tremendous challenge for many of the children and families we see,” said Christopher Walsh, M.D., Ph.D., director of the Division of Genetics and Genomics at Boston Children’s Hospital, in a press release. “Watson can help us ensure we’ve left no stone unturned in our search to diagnose and cure these rare diseases so we can uncover all relevant insights from the patient’s clinical history, DNA data, supporting evidence, and population health data.”

“One of Watson’s talents is quickly finding hidden insights and connecting patterns in massive volumes of data,” added Deborah DiSanzo, general manager, IBM Watson Health. “Rare disease diagnosis is a fitting application for cognitive technology that can assimilate different types and sources of data to help doctors solve medical mysteries. For the kids and their families suffering without a diagnosis, our goal is to team with the world’s leading experts to create a cognitive tool that will make it easier for doctors to find the needle in the haystack, uncovering all relevant medical advances to support effective care for the child.”

Boston Children’s Hospital is part of the Undiagnosed Diseases Network, an NIH program that aims to solve medical mysteries by integrating genetics, genomics, and rare disease expertise.