San Francisco, CA. BrainChip Holdings Ltd., a neuromorphic computing company, has announced the availability of the Akida Development Environment—a machine learning framework for the creation, training, and testing of spiking neural networks (SNNs), supporting the development of systems for edge and enterprise products on the company’s Akida Neuromorphic System-on-Chip (NSoC).

Akida is the flagship product in BrainChip’s mission to become the leading neuromorphic computing company that solves complex problems to make worldwide industry more productive and improve the human condition, the company reported. Applications that benefit from the Akida solution include public safety, transportation, agricultural productivity, financial security, cybersecurity, and healthcare. These large growth markets represent a $4.5 billion opportunity by 2025.

“This development environment is the first phase in the commercialization of neuromorphic computing based on BrainChip’s ground-breaking Akida neuron design,” said Bob Beachler, SVP of marketing and business development. “It provides everything a user needs to develop, train, and run inference for spiking neural networks. Akida is targeted at high-growth markets that provide a multi-billion-dollar opportunity, and we are already engaged with leading companies in major market segments.”

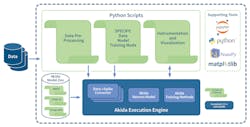

The Akida Execution Engine is at the center of the framework and contains a software simulation of the Akida neuron, synapses, and the multiple supported training methodologies. The engine is easily accessed through API calls in a Python script, so users can specify their neural network topologies, training method, and datasets for execution. Based on the structure of the Akida neuron, the execution engine supports multiple training methods, including unsupervised training and unsupervised training with a labelled final layer.

Spiking neural networks operate on spike patterns. The development environment natively accepts spiking data created by dynamic vision sensors (DVS), but many other types of data can also be used with SNNs. Embedded in the Akida Execution Engine are data-to-spike converters, which convert common data formats such as image information (pixels) into the spikes required for an SNN. The development environment will initially ship with a pixel-to-spike data converter, to be followed by converters for audio and for big-data requirements in cybersecurity, financial information, and Internet-of-Things data. Users can also create their own proprietary data-to-spike converters for use within the development environment.
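To make the idea of a pixel-to-spike converter concrete, the following is a minimal sketch of one common encoding scheme, rate coding, in which brighter pixels emit spikes more often. It is not BrainChip's converter and does not use the Akida API; the function name `pixels_to_spikes` and its parameters are hypothetical, chosen only for illustration, and the announcement does not say which encoding the shipped converter uses.

```python
import random


def pixels_to_spikes(pixels, num_steps=20, max_rate=1.0, seed=0):
    """Rate-code 8-bit pixel intensities into binary spike trains.

    Hypothetical illustration only; not the Akida converter.
    At every time step each pixel fires with probability
    max_rate * intensity / 255. Returns a list of num_steps frames,
    each frame a list of 0/1 values with one entry per pixel.
    """
    if any(p < 0 or p > 255 for p in pixels):
        raise ValueError("pixel intensities must be in the range 0..255")
    rng = random.Random(seed)  # seeded so repeated runs give the same spikes
    probs = [max_rate * (p / 255.0) for p in pixels]
    return [
        [1 if rng.random() < q else 0 for q in probs]
        for _ in range(num_steps)
    ]


if __name__ == "__main__":
    image = [0, 64, 128, 255]  # a tiny flattened "image"
    spikes = pixels_to_spikes(image, num_steps=1000)
    # Count how often each pixel fired across all time steps.
    counts = [sum(frame[i] for frame in spikes) for i in range(len(image))]
    print(counts)
```

In this sketch a black pixel (0) never fires and a fully saturated pixel (255) fires at every step, with intermediate intensities firing at roughly proportional rates. Other encodings, such as latency coding where brighter pixels fire earlier, are equally plausible choices for a converter of this kind.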

The Akida Development Environment includes pre-created SNN models. Currently available models include a multilayer perceptron implementation for MNIST in DVS format, a 7-layer network optimized for the CIFAR-10 dataset, and a 22-layer network optimized for the ImageNet dataset. Users can modify these models or use them as the basis for their own custom SNN models.

“Akida is the Greek word for ‘spike.’ The Akida NSoC is the culmination of over a decade of development and is the first spiking neural network acceleration device for production environments,” said Peter van der Made, founder and CTO of BrainChip. Additional information on the Akida NSoC will be made available in the third quarter of 2018.

The Akida Development Environment is currently available through an early-access program to approved customers. For more information or to request the Akida Development Environment, visit https://www.brainchipinc.com/contact.