Interview: Dr. Raik Brinkmann Comments On EDA Verification Trends

As the number of transistors on a chip continues to grow the importance of correct designs and verification of those designs becomes of paramount importance. EDA verification continues to rapidly evolve but formal verification is here now.

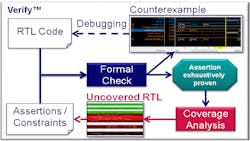

I recently asked Dr. Raik Brinkmann, a co-founder of OneSpin Solutions, about what he thinks the current state of affairs is in the verification space as well as what trends he sees. OneSpin Solutions provides a range of verification products like 360 DV-Verify (Fig. 1) and “It brings years of formal verification technology development and application service experience to enable design teams to avoid costly redesigns and respins, while dramatically cutting their verification effort, costs and time-to-market pressures.”

Wong: What trends are you seeing in verification?

Brinkmann: As ever, verification continues to rapidly evolve. Coverage analysis (checking that the design has been fully tested or “covered”) is becoming more and more important as we move toward environments where coverage will drive the verification process. Formal verification is being leveraged more and more, both directly in flows and indirectly as part of other tools and solutions. We are seeing standards applied, such as Universal Verification Methodology (UVM) and SystemVerilog, and engineers are working to understand to what level they need to be leveraged to provide value in their design solutions. Simulation-only solutions are not scaling to suit modern design sizes and complexities, so environments are now using formal verification and emulation technologies to supplement basic simulation.

Related Articles

- Surveying The Verification Landscape

- Interview: Forte's John Sanguinetti Tackles Trends In SoC Synthesis

- Generate An Interface Rule For Low-Power Consumer Devices

- Development Tools Move To The Cloud

Wong: What are today’s verification challenges?

Brinkmann: Well, there are the obvious challenges, which have been with us for a while. For example, there never seems to be enough time or resources to perform as much verification as some managers would like.

However, other challenges have become more pronounced, given modern design flows. They include understanding if enough verification has been performed to provide an acceptable level of risk of a bug remaining in the design, namely producing verification coverage metrics. Drawing together coverage information from a variety of different sources to produce an overall image of verification closure is another challenge.

Producing tests that find complicated, corner-case style bugs and exercising every state the design can reach in practice using simulation and efficiently debugging apparent faults are two more challenges. Two final challenges are generating simulation stimulus from the design specification that may be easily reused, and creating assertions efficiently enough to mimic a design specification, given the state of the assertion language standards.

Formal verification can help address many of these issues.

Wong: What is formal verification, and how does it fit in today’s simulation-based methodologies?

Brinkmann: Every simulation-based solution attempts to drive the design under verification into as many usage scenarios and states as possible, observe the design behavior, and judge whether or not this behavior matches the expectation. Formal verification takes the opposite approach. Instead of thinking about how to drive the design into specific states to observe some relevant behavior, formal verification lets the engineer directly or indirectly specify what they want to observe. The formal verification tool determines whether or not the design will always meet this expectation, or if it can reach states where the expectation is not met.

The description of the expectation can take several different forms. For example, the engineer may want a design to behave the same way as another design (formal equivalence), or they want it to conform to some specification written as a set of formal properties (assertions). In some cases, the engineer needs to exclude certain input scenarios that are unrealistic in practice, by specifying constraints. If the design conforms to the expectation according to the formal verification tool, the engineer can be sure this is holds under all circumstances, a fact that can not be established using simulation-based technology. We call this the exhaustive nature of formal verification.

Wong: Why is formal verification becoming important and what is OneSpin’s role?

Brinkmann: Digital technology is changing all our lives as computing devices are becoming ubiquitous. We all trust that these devices work properly. However, the logic involved to implement each such device is mind bogglingly complex. OneSpin Solutions provides software tools that help engineers manage this complexity and verify that their logic is correct. Our software frees the engineer from the impossible task of thinking through all the possible scenarios under which the logic must operate upfront and then write tests for them. Instead, the engineer simply states the intention and our software proves that these intentions are met under all conditions. This is achieved by using formal verification technology. We believe that formal verification technology will fundamentally change the way digital computing devices are designed and verified. Every engineer creating them, every business selling them, and ultimately everyone using them will benefit from formal technology, as these devices become Verified Beyond Doubt!

Wong: Why would a verification engineer want to use formal verification, and what are its big advantages?

Brinkmann: The big advantage is the exhaustive nature of formal verification. If a property (equivalence or assertion) is applied to a formal verification tool with no constraints applied, the property will be absolutely proved or disproved, given every state the design can possibly get into. With simulation, the states that a design can reach have to be exercised using stimulus and it is easy to miss some. With formal verification, no stimulus is required and every state is automatically included, although states unnecessary to check may be constrained out. This means that a verification engineer does not have to think through every scenario that requires testing; the tool does it for them. Formal verification results are far more reliable than simulation, and the technology does not require hours spent creating simulation stimulus files. In many cases, it is more convenient and productive to state the expectation than thinking of all relevant scenarios to consider, as the number of such scenarios quickly becomes huge for current SoC designs.

Wong: Why has the technology not caught on sooner and what is different now?

Brinkmann: The number of states the formal verification tool has to consider is huge and are impossible to consider in a plain form. Therefore, formal verification tools employ clever mathematical proof techniques to compute the set of states in some symbolic way, allowing a huge number of states to be evaluated without ever considering them individually. However, in early formal verification tools, the capacity and performance limitations were significant and the tools gained a reputation that they could only handle small designs. In addition, creating properties for the tools was complex, which made them hard to use.

Today, many enhancements have been made, improving the ability to consider larger state spaces, leading to a new generation of formal verification tools that are far more efficient both in terms of performance and capacity. Standards such as IEEE’s Property Specification Language (PSL) and SystemVerilog Assertions have emerged that make it easier to create assertions for the formal verification tools. In addition, a focus of OneSpin Solutions has been to provide automated solutions, including assertion synthesis and pre-packaged methods, which allows formal verification technologies to be applied in a powerful manner without the need to manually create any assertions. Formal verification is both easier and more efficient to use today and this has propelled the technology into modern flows.

Wong: What has prevented formal verification from more widespread use?

Brinkmann: Primarily, the difficult use model of the original formal verifications tools prevented their general adoption. Today, a large proportion of the formal verification tools in use are leveraged by verification engineers, usually in larger companies, who have mastered assertion creation. We are now seeing this change, as more companies employ automated methods to create and apply assertions, as well as go through the assertion learning curve. Formal verification technology is far easier to use and provides an effective mechanism standalone and when combined with simulation for advanced verification. We are witnessing accelerated adoption and expect 2014 to be seen as the year of formal verification!

Wong: For what applications is formal verification used for today?

Brinkmann: It is surprising to see how many applications make use of formal verification technologies. Formal has been applied as a direct property evaluation mechanism used to check the equivalence of two versions of a design and as a mechanism to test for issues that lead to X-propagation. It’s been used to test for connectivity and register mapping, advanced linting and design static analysis, clock domain crossing (CDC), power domain analysis, and various coverage techniques. This makes the technology pervasive across modern design flows and this will expand over the next year and beyond.

Wong: What advice would you give Electronic Design readers who want to adopt Formal Verification?

Brinkmann: You can do it! Formal verification has arrived and the time is now to explore how it can accelerate your verification flows. Waiting now delays the value that the technology can provide in your flow, while your competitors may be jumping on the solutions. It’s easy to start without an extensive learning curve, working with automated assertions to expand an existing simulation-based verification environment and receive immediate value with little effort. You can balance the value you receive versus the investment you want to make, and the tools are designed to suit many different environments. 2014 will be the year of formal verification.

Why not be part of it?

About the Author

Raik Brinkmann

CEO

Dr. Raik Brinkmann, a co-founder of OneSpin Solutions, was named president and CEO in 2012, with overall responsibility for leading the company and its business growth worldwide. He brings to this role more than 15 years of broad expertise in management, verification and industrial R&D, having served at companies in both Europe and the U.S. Dr. Brinkmann holds a Diplom Informatiker from the Clausthal Technical University, Germany and a Dr.-Ing. from the Department of Electrical Engineering at the University of Kaiserslautern, Germany.

William G. Wong

Senior Content Director - Electronic Design and Microwaves & RF

I am Editor of Electronic Design focusing on embedded, software, and systems. As Senior Content Director, I also manage Microwaves & RF and I work with a great team of editors to provide engineers, programmers, developers and technical managers with interesting and useful articles and videos on a regular basis. Check out our free newsletters to see the latest content.

You can send press releases for new products for possible coverage on the website. I am also interested in receiving contributed articles for publishing on our website. Use our template and send to me along with a signed release form.

Check out my blog, AltEmbedded on Electronic Design, as well as his latest articles on this site that are listed below.

You can visit my social media via these links:

- AltEmbedded on Electronic Design

- Bill Wong on Facebook

- @AltEmbedded on Twitter

- Bill Wong on LinkedIn

I earned a Bachelor of Electrical Engineering at the Georgia Institute of Technology and a Masters in Computer Science from Rutgers University. I still do a bit of programming using everything from C and C++ to Rust and Ada/SPARK. I do a bit of PHP programming for Drupal websites. I have posted a few Drupal modules.

I still get a hand on software and electronic hardware. Some of this can be found on our Kit Close-Up video series. You can also see me on many of our TechXchange Talk videos. I am interested in a range of projects from robotics to artificial intelligence.