Ray tracing has evolved significantly since it was first introduced more than 30 years ago. Until recently, the term "ray tracing" described a broad class of computational, graphics, and rendering methods based on the physics of light, whose features and performance have continually evolved and improved for cross-industry and personal uses. Of course, when these techniques are fully utilized, the result is amazingly realistic digital images!

However, as ray-tracing technologies gain market presence, I see a perception being perpetuated that this inherent breadth can be delivered effectively by a single, narrowly focused, "one size fits all" spot solution.

That's not to say that adding targeted support to better accelerate parts of fundamental ray-tracing techniques is a bad thing. But today, that support is only a small piece of the much larger puzzle needed to deliver the stunningly real, high-fidelity graphics that ray-tracing methods such as photoreal path tracing offer. The bottom line is that "one size does NOT fit all" when it comes to ray tracing.

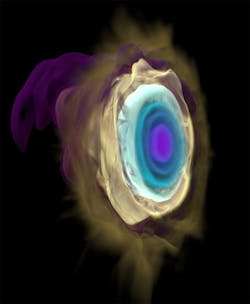

For example, researchers at the University of California, Santa Barbara, and Argonne National Laboratory, with my team's help, used a ray-tracing method called volumetric path tracing to visualize the magnetism, heat, radiation, and other phenomena of stars (Fig. 1). The data they generated is almost 7 terabytes (TB) in size, with many thousands of individual 3D timesteps (frames) that show the evolution of the radiated elements from the star's core.

Using open-source software and several connected servers, each with more than 3 TB of combined RAM and persistent memory, they were able to load this data and interact with it (zoom, pan, tilt) at more than 20 frames/s to better understand it. Matching the memory footprint of just one of those servers would take 125 GPUs with 24 GB of memory each, not to mention the performance limitations of transferring the 3D data over the PCIe bus.
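The GPU comparison above is a simple capacity division. The sketch below shows that arithmetic; `gpus_to_match` is a hypothetical helper, and it assumes decimal units (3 TB = 3,000 GB) and counts raw capacity only. It ignores any need to replicate scene data on each GPU as well as the PCIe transfer cost mentioned above, so it is a rough illustration rather than a sizing tool.

```python
import math


def gpus_to_match(host_memory_gb: float, gpu_memory_gb: float) -> int:
    """Hypothetical helper: smallest number of GPUs whose combined memory
    is at least the host's memory (raw capacity only, no replication)."""
    if gpu_memory_gb <= 0:
        raise ValueError("gpu_memory_gb must be positive")
    return math.ceil(host_memory_gb / gpu_memory_gb)


# Decimal units, as assumed above: one server with 3 TB = 3,000 GB,
# compared against GPUs with 24 GB each.
print(gpus_to_match(3_000, 24))  # 125
```

Rounding up with `math.ceil` reflects that a partial GPU is not an option: any capacity beyond an exact multiple of 24 GB requires one more device.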

Taking a Platform Approach

I admit the use case above is a relatively extreme example, but it is a real-world one, and it shows why ray-tracing solutions need a platform view. Ray-tracing methods are quite varied and continue to expand and evolve rapidly. Thus, a combination of technologies working in concert best addresses both the data size and scene complexity that drive image fidelity and the performance required to deliver the best user experience. Delivering just one piece of the solution will satisfy only a very limited set of user demands, hence the need for a platform approach that includes:

- Proven, powerful, and feature-rich processors: With added software-coordinated ray-tracing and AI acceleration, they deliver maximum benefit across many uses and viewers.

- A large memory footprint/persistent memory: Offers better data control and reduces restart times, crash-related data loss, and downtime. Minimizing slow device I/O yields leaps in performance, and large accessible memory can hold the ever-growing amount of geometric and volumetric data required to render animated and motion effects interactively and in real time for movies, games, product design, scientific data, and more.

- Denoising solution capability: While denoising is technically not part of ray tracing as a technique, denoising solutions (both AI-based and traditional) can be added to the rendering pipeline to shorten the time to a "good" picture, often enabling real-time frame rates on both GPUs and CPUs.

With a complete and effective ray-tracing solution in place, you should be able to use the available processing elements (e.g., CPUs, GPUs, and specialty accelerators) and the platform's collective volatile and persistent-memory capabilities in concert, maximizing users' and viewers' experiences on their selected platform. The experience, visual fidelity, and level of interactivity should be controllable automatically, by the application, or by the user to match the user's needs.

Across the broad computing market, from smartphones to cloud data centers, be aware that ray tracing continues to evolve rapidly, from relatively simple effects like shadows to photoreal imagery (Fig. 2). Unless you have money to burn, don't base your decision on a single factor. As ray-tracing-based solutions deliver a growing number of improved visual capabilities in the coming months and years, my recommendation is this: don't look at just one component, one benchmark, or one application workflow; look at the total picture.

Key Factors to Consider

Here are some key considerations when looking for a robust ray-tracing platform:

Which features and primitives, and how many, will the ray-tracing hardware and software support?

- Triangles only?

- Triangle meshes, quad meshes?

- Curves, hair, fur?

- Motion blur? (linear, time-segmented objects, quaternion)

- Volume rendering? (explosions, fog, clouds)

- Volume formats? (structured, unstructured, VDB, AMR)

- Path tracing, ambient occlusion?

Can the platform deliver what you need now and in the future?

- Typical memory sizes needed for your usage (tens of GBs, hundreds of GBs, or TBs)? For example, animated, multi-frame sequences significantly increase memory needs for interactive and real-time uses (n GB per frame × y frames).

- CPU and GPU functional capabilities? Are you striking the right balance in the platform?

- Number of applications supporting the type and quality of ray tracing you need?

- What underlying ray-tracing technology is used? Is it tried and true, and is it actively improving?

- Need to mix standard 3D graphics with ray-traced graphics?
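The memory-sizing question in the list above (n GB per frame × y frames) lends itself to a quick back-of-the-envelope check. The sketch below is a minimal illustration under stated assumptions: the entire sequence must stay resident in memory for interactive playback, units are decimal GB, and the per-frame size and frame count are hypothetical. The helper names `sequence_memory_gb` and `fits_in_memory` are illustrative only. Real renderers may stream, compress, or instance data, so treat the result as a rough estimate.

```python
def sequence_memory_gb(gb_per_frame: float, frames: int) -> float:
    """Memory to keep a whole animated sequence resident: n GB/frame * y frames."""
    if gb_per_frame < 0 or frames < 0:
        raise ValueError("gb_per_frame and frames must be non-negative")
    return gb_per_frame * frames


def fits_in_memory(gb_per_frame: float, frames: int, capacity_gb: float) -> bool:
    """True if the resident sequence fits within the given memory capacity."""
    return sequence_memory_gb(gb_per_frame, frames) <= capacity_gb


# Hypothetical workload: 2 GB per frame, 500 frames.
print(sequence_memory_gb(2.0, 500))       # 1000.0
print(fits_in_memory(2.0, 500, 24))       # False: exceeds a single 24 GB device
print(fits_in_memory(2.0, 500, 3_000))    # True: fits in a 3 TB host
```

Because the requirement scales linearly with frame count, doubling the length of an animated sequence doubles the memory needed to interact with all of it at once.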

It's my hope, and even a career goal, that ray tracing will someday soon be available and expected, in all its forms and capabilities, on every computing platform without much thought. However, we're still in the midst of that journey. As a user interested in ray tracing's visual benefits, remember to do your homework so that you're as thrilled with ray tracing's visual impact as those who are creating the solutions for you. For now, at least, "one size does NOT fit all."

Jim Jeffers is Sr. Principal Engineer and Sr. Director of the Advanced Rendering and Visualization team at Intel.

About the Author


Jim Jeffers, Sr. Principal Engineer and Sr. Director of Intel's Advanced Rendering and Visualization team, leads the design and development of the open-source rendering library family known as the Intel oneAPI Rendering Toolkit (Render Kit). Render Kit is used for generating animated movies, film special effects, game development, automobile design, and scientific visualization. Jim's experience includes software design and technical leadership in high-performance computing, graphics, digital television, and data communications.