Why Are NVDIMMs Suddenly Hot?


It seems like nonvolatile dual-inline memory modules (NVDIMMs) have suddenly lurched into the limelight after several years of being relegated to small niches. This article, excerpted from a new report by Objective Analysis, will cover the basics of NVDIMMs, reveal why they’ve spiked in popularity of late, and show how they will change the face of computer storage.

An NVDIMM Primer

If you’re already knee-deep in NVDIMMs, you can skip this part. However, we find that many members of the computing community, no matter what their technical level, have never come across NVDIMM technology, or don’t understand either how an NVDIMM is made or why it’s used. We’ll provide answers to those questions.

The NVDIMM exists to take advantage of the speed of the memory channel (the DRAM bus), which is the fastest bus in the system. It’s the bus that the memory modules (DIMMs) plug into, and so far it’s been used exclusively for the processor to communicate with DRAM. But DRAM loses its contents when power is removed, and that’s why computers need storage: storage is where the computer keeps its bits in the event of a power loss.

The conventional way to add nonvolatile (or “persistent”¹) memory to a system is to use the hard-drive interface, either for a hard-disk drive (HDD) or, more recently, for a solid-state drive (SSD). Both the hardware and the software that communicate through this path are slow, since they were designed to operate at speeds that are sufficient for hard drives. Although a lot of work has been done over the past 10 years to improve the speed of the I/O channel, it still holds back flash memory, and it promises to be even more of a bottleneck for tomorrow’s high-speed nonvolatile memories.

Fortunately, some creative people devised ways to add new memory technologies to the memory channel that maintain their contents even when power is lost for long periods of time. Because of their nonvolatile nature, these DIMMs have been given the name “NVDIMMs.”

One straightforward way to make a DIMM persistent is to keep the DIMM’s data in DRAM, just like any standard DIMM, but then add a NAND flash chip to copy the contents of the DRAM when power fails. This architecture is used by today’s most prevalent type of NVDIMM, which is manufactured by a number of companies, including Cypress’ AgigA, Micron Technology, SMART Modular, and Viking Technology. A microcontroller is added to the DIMM to copy the data from the DRAM into the NAND flash when power is lost, and to move the NAND chip’s contents back into the DRAM when power is restored.

Since this data migration occurs after the system’s power has failed, the NVDIMM must have a source of power to support the backup process. This type of NVDIMM design includes its own small backup power supply, which is nothing more than a small bank of supercapacitors that are often bolted inside the server’s chassis and connected to the NVDIMM with a wire. Finally, the NVDIMM must isolate itself from the dying server’s memory bus when the data is being backed up, so buffers are added between the memory channel and the DRAM chips.

All of these additional chips and supercapacitors add significant cost to the NVDIMM. Still, many users are willing to pay for these more expensive DIMMs because a system that uses them reboots extremely quickly once power is restored.
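The backup and restore sequence described above can be summarized in a toy model. This is a minimal Python sketch under stated assumptions, not any vendor’s controller firmware: the class and method names are hypothetical, and a real NVDIMM-N moves data in hardware, powered by its supercapacitors, rather than by copying byte arrays.

```python
class NvdimmN:
    """Toy model of an NVDIMM-N (hypothetical names, for illustration only)."""

    def __init__(self, size: int) -> None:
        self.dram = bytearray(size)  # volatile working copy the host reads and writes
        self.nand = bytes(size)      # persistent backup image held in NAND flash
        self.on_bus = True           # True while the buffers connect DRAM to the memory channel

    def host_write(self, addr: int, data: bytes) -> None:
        if not self.on_bus:
            raise RuntimeError("module is isolated from the memory bus")
        if addr < 0 or addr + len(data) > len(self.dram):
            raise IndexError("write outside module capacity")
        self.dram[addr:addr + len(data)] = data

    def host_read(self, addr: int, length: int) -> bytes:
        if not self.on_bus:
            raise RuntimeError("module is isolated from the memory bus")
        return bytes(self.dram[addr:addr + length])

    def power_fail(self) -> None:
        # 1. The buffers isolate the DRAM from the dying server's memory bus.
        self.on_bus = False
        # 2. The controller, running on supercapacitor power, copies DRAM into NAND.
        self.nand = bytes(self.dram)
        # 3. When backup power runs out, the DRAM contents are lost.
        self.dram = bytearray(len(self.dram))

    def power_restore(self) -> None:
        # The controller copies the NAND image back into DRAM, then the host reconnects.
        self.dram[:] = self.nand
        self.on_bus = True


module = NvdimmN(64)
module.host_write(0, b"ledger")
module.power_fail()
module.power_restore()
print(module.host_read(0, 6))  # b'ledger'
```

The point the model makes is that the host never sees the NAND directly: after power returns, the data is simply back in DRAM, which is why these systems come back up so quickly.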

A second kind of NVDIMM simply ties multiple SSDs to the memory channel through a bridge, much as PCIe RAID cards or host bus adapters (HBAs) have been used to tie a number of SSDs or HDDs to the PCIe bus. The memory bus provides the system architect with persistence at lower latency and higher bandwidth than is possible with SATA or PCIe SSDs, but the lack of an interrupt pin on the memory channel slows the processor’s communications with the NVDIMM, and appears to have limited this configuration’s success. This kind of NVDIMM was sold by SanDisk and IBM a few years ago under the names ULLtraDIMM and eXFlash DIMM, based on a bridge chip designed by Diablo Technology.

Yet another kind of NVDIMM is scheduled to be introduced by Intel in late 2018. Intel’s “Optane DIMM” is based on the Intel-Micron 3D XPoint Memory, which is persistent and is said to perform at near-DRAM speeds.

The definitions above describe three very different NVDIMM configurations, and the differences between them could cause confusion. Consequently, the JEDEC standards body, an organization that standardizes interfaces for DIMMs and DRAM chips, took the time to create a name for each of these kinds of NVDIMMs. JEDEC’s name for the first kind of NVDIMM described in this article is NVDIMM-N, and the second has been named an NVDIMM-F. The third may be called an NVDIMM-P, but Intel and JEDEC haven’t yet been able to agree on a common standard, so there may be some other definition for this upcoming product.

JEDEC’s current NVDIMM-P definition was created to support multiple vendors rather than Intel alone, and to support other emerging memories besides the Intel/Micron 3D XPoint (including FRAM, RRAM, MRAM, and others). Since Intel isn’t yet ready to disclose its Optane DIMM interface, the NVDIMM-P may be defined in a way that doesn’t support Intel’s Optane DIMM. The table provides the current JEDEC NVDIMM taxonomy.

The NVDIMM-N is available today from a number of sources. The NVDIMM-F, which raised a lot of interest two years ago, has fallen out of favor and is no longer available.

Intel has promised to ship its Optane DIMM late in 2018. Everspin currently supplies a low-density (1 GB) MRAM NVDIMM, which may or may not comply with the upcoming JEDEC NVDIMM-P definition.

Understanding Today’s Sudden NVDIMM Popularity

The report this article is excerpted from details the markets that have already embraced the use of NVDIMM-Ns. These are applications that are so adversely impacted by power outages that an NVDIMM’s fast recovery makes it worth more than twice the price of a standard DRAM DIMM. For the sake of brevity, we won’t detail these markets here, but suffice it to say that the bulk of current sales are into slow-growing sectors, with some very exciting prospects in new markets in the near term.

NVDIMM-Ns are also being used to develop applications software for tomorrow’s NVDIMM-Ps. Although the software-development market doesn’t demand very high volume, it’s a wonderful way for developers to use currently available technology to develop software that will be ready for Intel’s Optane DIMM or other NVDIMM-P products once these technologies become generally available.

But the key reason for today’s big interest in NVDIMM-Ns is that NAND-flash chip densities have grown to 16 to 32 times the density of DRAM chips, so a single NAND-flash chip can back up eight or more DRAMs’ worth of data. This lets an NVDIMM fit easily within a DIMM’s mechanical form factor without obstructing other DIMM slots, and no special packaging is needed to accomplish this.
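The density argument comes down to simple arithmetic. The sketch below assumes, purely for illustration, 8 Gb DRAM chips, NAND chips 16 or 32 times as dense, and DIMMs built from 9 or 18 DRAM chips (typical ECC configurations); the function name is hypothetical and the figures are not taken from any specific product.

```python
def nand_chips_needed(dram_chips: int, dram_gbit: int, nand_gbit: int) -> int:
    """Number of NAND chips required to hold a full copy of a DIMM's DRAM."""
    total_dram_gbit = dram_chips * dram_gbit
    return -(-total_dram_gbit // nand_gbit)  # ceiling division on integers


DRAM_GBIT = 8  # assumed DRAM chip density, in gigabits
for ratio in (16, 32):
    nand_gbit = DRAM_GBIT * ratio
    counts = [nand_chips_needed(n, DRAM_GBIT, nand_gbit) for n in (9, 18)]
    print(f"NAND {ratio}x denser: 9-chip DIMM needs {counts[0]}, 18-chip DIMM needs {counts[1]}")
# NAND 16x denser: 9-chip DIMM needs 1, 18-chip DIMM needs 2
# NAND 32x denser: 9-chip DIMM needs 1, 18-chip DIMM needs 1
```

With only one or two NAND packages needed per module, the backup memory takes up little board area, which is what allows it to fit on a standard DIMM.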

Software Changes to Support NVDIMMs

Over the past 40+ years, very clear delineations have been drawn between memory and storage. NVDIMMs blur this line.

Memory has always been fast and storage slow, but storage has always been persistent, a feature that memory simply didn’t offer. Memory has also always been orders of magnitude more expensive than storage, and memory’s power consumption is significantly higher than that of storage. An NVDIMM changes this by making memory both fast and persistent, while storage remains slow and persistent. Memory’s power consumption is still high, and the cost difference between memory and storage remains significant. But now that persistent memory has been introduced, system architectures will soon undergo radical changes!

Today’s application programs can’t reap the full benefit of fast storage because of legacy operating-system I/O stacks. The operating system’s I/O stack has always been configured to support very slow storage, and although it has recently been redesigned to take advantage of the higher speed of flash SSDs, it’s still a burden to storage that runs at memory speeds.

Before the rise of SSDs, programmers and their management just didn’t care about the speed of the storage stack, since the HDD was always considerably slower than this part of the system software. SSDs suddenly revealed the speed limitations of legacy storage stacks, and NVDIMMs make the problem even more acute: a storage stack dramatically impedes memory-speed performance.
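A back-of-the-envelope calculation shows why the same software overhead that was invisible behind an HDD dominates once the device runs at memory speed. All latencies below are assumed, order-of-magnitude figures chosen for illustration, not measurements: a fixed 10 µs per I/O spent in the storage stack, and device access times of 5 ms (HDD), 100 µs (SSD), and 0.1 µs (NVDIMM).

```python
# Assumed, order-of-magnitude latencies in microseconds (illustrative only).
STACK_OVERHEAD_US = 10.0  # software time per I/O spent in the OS storage stack
DEVICE_LATENCY_US = {"HDD": 5000.0, "SSD": 100.0, "NVDIMM": 0.1}


def stack_share(device_us: float, stack_us: float = STACK_OVERHEAD_US) -> float:
    """Fraction of each access spent in software rather than in the device."""
    return stack_us / (stack_us + device_us)


for name, device_us in DEVICE_LATENCY_US.items():
    print(f"{name}: {stack_share(device_us):.1%} of access time is stack overhead")
# HDD: 0.2% of access time is stack overhead
# SSD: 9.1% of access time is stack overhead
# NVDIMM: 99.0% of access time is stack overhead
```

The exact numbers matter less than the trend: as device latency shrinks, a fixed software cost goes from negligible to nearly the whole access time.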

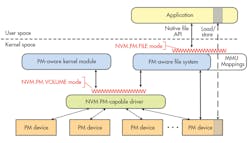

Shown is the SNIA Persistent Memory Programming Model, which targets operating systems and applications programs. (Source: SNIA, Used with Permission)

To address this issue, the Storage Networking Industry Association (SNIA) developed and promotes a new programming model for operating systems and applications programs that’s optimized for persistent memory. The SNIA Persistent Memory Programming Model appears in the figure.

This programming model’s finer details are explained both in the Objective Analysis NVDIMM report and on SNIA’s website. Look for the persistent memory page.

The SNIA Persistent Memory Programming Model allows an application program to bypass the I/O stack when accessing persistent memory, but the program can also access persistent memory through a more conventional storage stack if speed isn’t an issue. The model provides enough abstraction that the application can automatically adapt to whatever amount of persistent memory exists in the system (if any is present at all), at whatever address and in whichever DIMM slot it’s installed. The operating system manages all of this automatically.
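From an application’s point of view, the two access paths in the model can be sketched with ordinary POSIX-style calls. This is a minimal Python sketch assuming a Linux-like system: on a file system mounted with direct access (DAX) over persistent memory, `mmap` maps the media itself into the address space, so updates become plain memory stores; on an ordinary file system the same code still runs but goes through the page cache. The function names are hypothetical, and production code would more likely use a native persistent-memory library than Python’s `mmap`.

```python
import mmap
import os


def write_via_storage_stack(path: str, offset: int, data: bytes) -> None:
    """Conventional path: a system call per update, then fsync() for durability."""
    fd = os.open(path, os.O_RDWR)
    try:
        os.pwrite(fd, data, offset)  # passes through the file system and block layers
        os.fsync(fd)                 # wait until the device reports the data is durable
    finally:
        os.close(fd)


def write_via_mapping(path: str, offset: int, data: bytes) -> None:
    """Direct-access path: ordinary stores into a mapped region, then a flush."""
    with open(path, "r+b") as f, mmap.mmap(f.fileno(), 0) as mm:
        mm[offset:offset + len(data)] = data  # plain memory stores, no write() call
        mm.flush()                            # msync(): ask the OS to make the range durable
```

In a real application the region would be mapped once and kept open for the life of the program; the sketch maps it per call only to keep the two functions side by side.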

In the near term, NVDIMMs will mainly be used to accelerate in-memory databases, since they let the database bypass most of the intricate routines currently used to ensure that writes persist. With an NVDIMM, the program will simply need to perform a single write to a persistent memory address, resulting in a dramatic speed improvement.
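In the simplest case, “a single write” means storing a record at a mapped persistent address and flushing it. Because power can fail between any two stores, persistent-memory code usually also orders its updates so that a half-written record is never mistaken for a complete one. The Python sketch below shows one common pattern, a valid flag that is set only after the payload is durable. The slot layout and function names are hypothetical, and `mmap.flush()` (which calls `msync()`) stands in for the cache-flush instructions a native persistent-memory library would issue.

```python
import mmap
import struct
from typing import Optional

SLOT_SIZE = 256                # hypothetical fixed-size record slot
HEADER = struct.Struct("<BI")  # 1-byte valid flag, 4-byte payload length


def put(mm: mmap.mmap, slot: int, payload: bytes) -> None:
    """Update a record in place, publishing it only after its payload is durable."""
    if HEADER.size + len(payload) > SLOT_SIZE:
        raise ValueError("payload does not fit in a slot")
    base = slot * SLOT_SIZE
    mm[base] = 0                                 # 1. invalidate the old record...
    mm.flush()                                   #    ...and make that durable first
    HEADER.pack_into(mm, base, 0, len(payload))  # 2. write length and payload
    start = base + HEADER.size
    mm[start:start + len(payload)] = payload
    mm.flush()
    mm[base] = 1                                 # 3. publish the record
    mm.flush()


def get(mm: mmap.mmap, slot: int) -> Optional[bytes]:
    """Return the record in a slot, or None if it was never completed."""
    base = slot * SLOT_SIZE
    valid, length = HEADER.unpack_from(mm, base)
    if not valid:
        return None
    start = base + HEADER.size
    return bytes(mm[start:start + length])
```

If power fails mid-update, this sketch leaves the slot empty rather than corrupt; a production design would typically also keep the previous version until the new one is published, for example by alternating between two slots.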

How NVDIMMs Will Change Storage Architecture

Now that storage will no longer play the role it always has, and persistent data will be stored in memory, will SSDs and HDDs become obsolete?

Not in the slightest! HDD and SSD pricing will always be significantly lower than memory pricing, and these technologies will continue to be used for bulk storage. Hot data will be moved into the costliest, fastest tier, just as it is with today’s caching software. The next-lower tier will still consist of SSDs, which are faster but more costly than HDDs, making them a good choice for “warm” data.

This storage hierarchy is similar to the memory hierarchy, in which the first-, second-, and third-level CPU caches are fast but costly places to hold data supplied by the cheapest and slowest tier: the DRAM main memory. Storage is also now moving to a multi-level hierarchy that can range from tape to capacity HDDs, then SSDs, and now NVDIMMs. These levels don’t compete against one another; they will be used together to give systems better cost/performance ratios than systems with fewer levels in their storage hierarchies.
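The tiered placement described in the two paragraphs above can be sketched as a simple rule: rank data by how often it is accessed and fill the fastest, costliest tier first. This is a hypothetical Python illustration with made-up capacities and access counts, not how any particular caching product works; real tiering software typically also weighs recency, migration cost, and block size.

```python
from dataclasses import dataclass
from typing import Dict, List


@dataclass
class Tier:
    name: str
    capacity: int  # how many blocks this tier can hold


def place_by_heat(access_counts: Dict[str, int], tiers: List[Tier]) -> Dict[str, str]:
    """Fill the fastest tier with the hottest blocks, then spill to slower tiers.

    `tiers` must be ordered fastest (most costly) first.
    """
    if sum(t.capacity for t in tiers) < len(access_counts):
        raise ValueError("not enough total capacity for all blocks")
    hottest_first = sorted(access_counts, key=access_counts.get, reverse=True)
    placement: Dict[str, str] = {}
    start = 0
    for tier in tiers:
        for block in hottest_first[start:start + tier.capacity]:
            placement[block] = tier.name
        start += tier.capacity
    return placement


# Hypothetical capacities and access counts, for illustration only.
tiers = [Tier("NVDIMM", 1), Tier("SSD", 2), Tier("HDD", 10)]
counts = {"a": 100, "b": 50, "c": 5, "d": 1, "e": 0}
print(place_by_heat(counts, tiers))
# {'a': 'NVDIMM', 'b': 'SSD', 'c': 'SSD', 'd': 'HDD', 'e': 'HDD'}
```

Each tier holds only what it is best suited for, which is the sense in which the levels cooperate rather than compete.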

What surprises many industry observers is that the addition of a new level in the storage hierarchy does more to displace a portion of the next-more-costly technology than it does to displace the cheaper, slower technology. Objective Analysis detailed this phenomenon with hundreds of benchmarks in our report titled "How PC NAND Will Undermine DRAM."

Objective Analysis’ NVDIMM Report

If you would like a deeper understanding of the NVDIMM market, you may want to consider reading our report "Profiting from the NVDIMM Market," which details the what, how, why, and when of NVDIMMs.

The report illustrates how the market for NVDIMMs should grow at an average annual rate of 105% to nearly 12 million units by 2021, and includes a forecast of the different markets for NVDIMMs. It explains the technology and its market in depth using 42 figures and 14 tables to clearly define the product and its applications. Different sections outline NVDIMM technology, its use, and its future, and detail how and why it’s suddenly becoming a force in the market.

This in-depth report analyzes all leading NVDIMM types and forecasts their unit and revenue shipments through 2021. Its 80 pages explain the technology, applications, and standards, while highlighting the software upgrades being developed in support of NVDIMMs. In addition, it defines the different varieties of NVDIMM being produced and developed, and profiles 13 key NVDIMM suppliers.

The study is based on rigorous research into NVDIMM technology, including the basis for its development, in addition to user and manufacturer interviews and participation in standards bodies. The report’s forecasts are based on Objective Analysis’ unique forecasting methodology, which produces high-accuracy results.

The report can be purchased for immediate download at http://Objective-Analysis.com/Reports.html#NVDIMM.

Reference:

1. The Storage Networking Industry Association (SNIA) has determined that the term “nonvolatile” is confusing to many prospective NVDIMM users, and now encourages the use of the term “persistent,” which is in common use in the storage business. I’ll use “persistent” for the remainder of this article.

About the Author