How PCIe Fabrics and RAID Can Unlock the Full Potential of GPUDirect Storage

What you'll learn:

- What is GPUDirect Storage?

- Adding RAID to bolster data redundancy and fault tolerance.

- How adding a PCIe fabric switch helps minimize the complexity of multi-host multi-switch configurations.

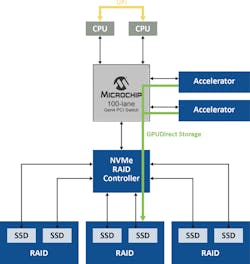

With faster GPUs capable of having significantly higher computational capacity, the bottleneck in the data path between storage and GPU memory has become untenable to realize optimum application performance. On that front, NVIDIA’s Magnum IO GPUDirect Storage solution goes a long way toward solving this problem by enabling a direct path between storage devices and GPU memory.

However, it is equally important to optimize its abilities with a fault-tolerant system to ensure critical data is backed up in case of a catastrophic failure. The solution is to connect logical RAID (redundant array of inexpensive disks) volumes over PCIe fabrics, which can increase data rates up to 26 GB/s under the PCIe 4.0 specification.

To understand how these benefits can be achieved, it’s first necessary to examine the solution’s key components and how they work together to deliver their results.

Magnum IO GPUDirect Storage

The key benefit of Magnum IO GPUDirect Storage is its ability to remove one of the major bottlenecks to performance: It doesn’t use the system memory in the CPU to load data from storage into GPUs for processing. Data is normally moved into the host’s memory and transferred out to the GPU, relying on an area in CPU system memory called a bounce buffer, where multiple copies of the data are created before being transferred to the GPU.

However, the considerable amount of data movement in that route introduces latency, reduces GPU performance, and uses many CPU cycles in the host. Magnum IO GPUDirect Storage removes the need to access the CPU and eliminates inefficiencies in the bounce buffer (Fig. 1).

Performance increases in step with the amount of data to be transferred, which grows exponentially with the large distributed datasets required by artificial intelligence (AI), machine learning (ML), deep learning (DL), and other data-intensive applications. These benefits can be achieved when the data is stored locally or remotely, allowing access to petabytes of remote storage faster than even the page cache in CPU memory.

Optimizing RAID Performance

The next element in the solution is to include RAID capabilities to maintain data redundancy and fault tolerance. Although software RAID can provide data redundancy, the underlying software RAID engine still utilizes the Reduced Instruction Set Computer (RISC) architecture for operations such as parity calculations.

When comparing write I/O latency for advanced RAID levels such as RAID 5 and 6, hardware RAID is still significantly faster than software RAID because a dedicated processor is available for these operations and write-back caching. In streaming applications, software RAID’s long I/O response times pile up data in cache. Hardware RAID solutions don’t exhibit cache data pile-up and have dedicated battery backup to prevent data loss when catastrophic system power failures occur.

Standard hardware RAID relieves the parity-management burden from the host, but a lot of data must still pass through the RAID controller before it’s sent to the NVMe drive, resulting in more complex data paths. The solution to this problem is NVMe-optimized hardware RAID, which provides a streamlined data path unencumbered by firmware or the RAID-on-chip controller. It also makes it possible to maintain hardware-based protection and encryption services.

PCIe Fabrics in the Mix

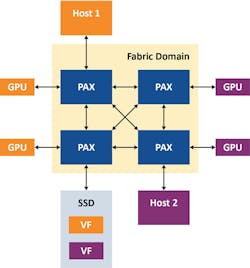

PCIe Gen 4 is now the fundamental system interconnect within storage subsystems, but a standard PCIe switch has the same basic tree-based hierarchy as in earlier generations. This means that host-to-host communications require non-transparent bridging (NTB) to cross partitions, making it complex, especially in multi-host multi-switch configurations.

Solutions such as Microchip’s PAX PCIe advanced fabric switch help overcome those limitations. They support redundant paths and loops, which is impossible using traditional PCIe.

A fabric switch has two discrete domains, the host virtual domain dedicated to each physical host, and the fabric domain that contains all endpoints and fabric links. Transactions from the host domains are translated into ID and addresses in the fabric domain and vice versa, with the non-hierarchical routing of the traffic in the fabric domain. This allows the fabric links connecting to the switches and endpoints to be shared by all hosts in the system.

Fabric firmware running on an embedded CPU virtualizes the PCIe-compliant switch with a configurable number of downstream ports. Consequently, the switch always appears as a standard single-layer PCIe device with direct-attached endpoints regardless of the location of the endpoints in the fabric. It can achieve this because the fabric switch intercepts all configuration plane traffic from the host, including the PCIe enumeration process, and chooses the best path. As a result, endpoints such as GPUs can bind to any host within the domain (Fig. 2).

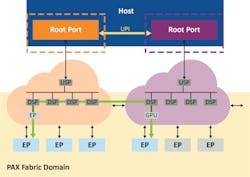

In the following example (Fig. 3), we present a two-host PCIe fabric engine setup. Here, we can see that fabric virtualization allows each host to see a transparent PCIe topology with one upstream port, three downstream ports, and three endpoints connected to them, and enumerate them properly.

One interesting point in Figure 3 is that we have a single root I/O virtualization (SR-IOV) SSD with two virtual functions. With Microchip’s PCIe advanced fabric switch, the virtual functions of the same drive can be shared to different hosts.

This PAX fabric switch solution also supports cross-domain peer-to-peer transfers directly across the fabric. Therefore, root port congestion is decreased, and CPU performance bottlenecks can be further mitigated (Fig. 4).

Performance Optimization

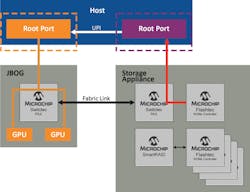

Having explored all of the components involved in optimizing the performance of data transfers between NVMe drives and GPUs, they can now be combined to achieve the desired result. The best way to illustrate this stage is to demonstrate the various steps that show the host CPUs and their root ports and various configurations that lead to the best result (Fig. 5).

Even with a high-performance NVMe controller, the maximum data rate of PCI Gen 4 × 4 (4.5 Gb/s) is limited to 3.5 Gb/s because of overhead through the root ports (Fig. 5, top). However, by simultaneously aggregating multiple drives via RAID (logical volumes) (Fig. 5, bottom right), a SmartRAID controller creates two RAID volumes each for four NVMe drives and conventional PCIe peer-to-peer routing through the root ports. This increases the data rate to 9.5 Gb/s.

However, by employing cross-domain peer-to-peer (Fig. 5, bottom left), traffic is routed through the fabric link instead of the root port, making it possible to achieve 26 Gb/s, the highest speed possible using the SmartROC 3200 RAID controller. In the last scenario, the switch supplies a straight data path that’s not burdened by firmware and still maintains the hardware-based protection and encryption services of RAID while exploiting the full potential of GPUDirect Storage.

Summary

High-performance PCIe fabric switches such as Microchip’s PAX allow for multi-host sharing of drives that support SR-IOV, as well as dynamically partitioning a pool of GPUs and NVMe SSDs that can be shared among multiple hosts. Microchip’s PAX fabric switch can dynamically reallocate endpoint resources to any host that needs them.

This solution also eliminates the need for custom drivers because it uses SmartPQI drivers supported by the SmartROC 3200 RAID controller family. According to Microchip, the SmartROC 3200 is currently the only controller with the ability to deliver the highest possible transfer rate, 26 GB/s. It has very low latency, offers up to 16 lanes of PCIe Gen 4 to the host, and is backward compatible to PCIe Gen 2.

Together with Microchip’s Flashtec family based NVMe SSDs, the full potential of PCIe and Magnum IO GPUDirect Storage is attainable in a multi-host system. Collectively, they make it possible to build a formidable system that can meet the demands of AI, ML, DL, and other high-performance computing applications in real-time.

About the Author

Tam Do

Staff Technical Marketing, Microchip Technology

Tam Do is a member of the technical product marketing team within the Data Center Solutions business unit at Microchip, where he is responsible for PCIe-related products. Previously, he worked on video codec and high-speed interface products for the consumer and multimedia markets. Tam earned a Bachelor of Science in Electrical Engineering from the University of Nevada-Reno and an MBA from the University of Phoenix.