
The eye has often been thought of as the window into the human soul, and the iris—the ring of colored fibrous tissue controlling the aperture (pupil) at the front of the eye—seen as an external indicator of one’s hidden depths.

Since ancient Egyptian times, observation of human iris patterns has been used to divine all kinds of things, a practice that persists today in the “new age” discipline of iridology. However, the path that led to today’s biometric iris recognition started with Frenchman Alphonse Bertillon, often dubbed the father of forensic science. Dissatisfied with the ad hoc methods then used to identify criminals, he set about developing a complete system of anthropometric measurements. Amongst other things, in 1893 he reported on nuances he found in the human iris. Much of his other work also bears on today’s biometric identification techniques.

Later, in 1949, British ophthalmologist J.H. Doggart published observations about human iris variation, and these were cited in the 1953 textbook Physiology of the Eye (still a foundation of ophthalmic study today). In it, American ophthalmologist F.H. Adler wrote: "In fact, the markings of the iris are so distinctive that it has been proposed to use photographs as a means of identification, instead of fingerprints."

Indeed, the fibrous texture of the human iris develops randomly in the womb and is stable within a few months of birth. This high degree of randomness is what gives iris recognition its potential discriminating power: while eye color is genetically determined, the fine structure of the iris is not.

The idea was taken up by two American ophthalmologists, Leonard Flom and Aran Safir, who in 1986 patented the concept of using a digital image of the eye as a human identifier. The first algorithms were developed in the early 1990s by John Daugman, a computer scientist at the University of Cambridge.

Today’s Iris Biometric Technology

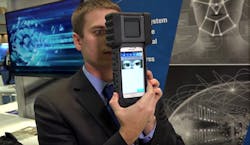

Fast forward to the current decade, and many companies now offer iris biometric products. FotoNation owns and offers MIRLIN technology, based on algorithms originally developed by Smart Sensors Limited. It’s used in products such as Northrop Grumman’s BioSled multimodal biometric capture device (see figure).

Northrop Grumman’s BioSled is a rugged, multimodal, biometric capture device designed to obtain images, fingerprints, and iris scans.

Because iris biometrics offers the strongest form of biometric identification, it’s beginning to find favor in applications such as authenticating financial transactions and enterprise log-in. Now that many of the earlier concerns about the technology’s ease of use have been addressed, the most important considerations in such applications are automated identity verification and strong credentials.

In essence, the concept of iris biometric recognition is straightforward: Take a digital photograph of the eye; detect and separate out which part comprises the iris; use image analytic methods on this image portion to extract features and create a binary template; and then match this template against a previously stored template from the same eye. If the templates show a close match, they’re from the same person with a high degree of confidence. If they don’t match, there’s a high degree of confidence that the template wasn’t created from the same eye.
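The four steps can be sketched in a few dozen lines of Python. This is a toy under loud assumptions: segmentation is skipped (the pupil and iris circles are passed in as if a hypothetical detector had already found them), the iris ring is unwrapped onto a fixed polar grid in the spirit of Daugman’s “rubber sheet” normalization, and the feature extractor is a deliberately crude stand-in (one bit per sample, set when intensity rises toward the next angular sample) rather than the Gabor-phase encoding real systems use. The function names, grid size, and 0.32 decision threshold are illustrative assumptions.

```python
import math
import random

def unwrap_iris(image, cx, cy, r_pupil, r_iris, n_radial=8, n_angular=64):
    """Sample the ring between the pupil and iris boundaries onto a fixed
    n_radial x n_angular grid (nearest-pixel sampling), so every eye yields
    a template of the same size regardless of pupil size or distance."""
    strip = []
    for i in range(n_radial):
        # Radial position as a fraction between pupil edge (0) and iris edge (1).
        t = (i + 0.5) / n_radial
        r = r_pupil + t * (r_iris - r_pupil)
        row = []
        for j in range(n_angular):
            theta = 2 * math.pi * j / n_angular
            x = int(round(cx + r * math.cos(theta)))
            y = int(round(cy + r * math.sin(theta)))
            row.append(image[y][x])
        strip.append(row)
    return strip

def encode(strip):
    """Toy feature extraction (hypothetical stand-in for Gabor phase bits):
    1 if intensity increases toward the next angular sample, else 0."""
    bits = []
    for row in strip:
        n = len(row)
        for j in range(n):
            bits.append(1 if row[(j + 1) % n] > row[j] else 0)
    return bits

def fraction_disagreeing(a, b):
    """Fraction of template bits that differ between two equal-length templates."""
    return sum(x != y for x, y in zip(a, b)) / len(a)

def verify(enrolled, probe, threshold=0.32):
    """Declare a match when the templates disagree on few enough bits."""
    return fraction_disagreeing(enrolled, probe) <= threshold

# Usage with synthetic 100 x 100 "images" (random texture, fixed seed).
rng = random.Random(42)

def random_eye():
    return [[rng.randint(0, 255) for _ in range(100)] for _ in range(100)]

eye = random_eye()
# The same eye re-imaged with a little sensor noise.
same_eye_again = [[min(255, max(0, p + rng.randint(-3, 3))) for p in row] for row in eye]
other_eye = random_eye()

circles = dict(cx=50, cy=50, r_pupil=15, r_iris=40)  # assumed segmentation output
enrolled = encode(unwrap_iris(eye, **circles))
print(verify(enrolled, encode(unwrap_iris(same_eye_again, **circles))))  # expected: True
print(verify(enrolled, encode(unwrap_iris(other_eye, **circles))))       # expected: False
```

Noise flips only the few bits where neighboring samples were nearly equal, so the re-imaged eye disagrees on a small fraction of bits, while an unrelated eye disagrees on roughly half of them.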

Because human iris texture is random, templates from two different eyes behave like statistically independent bit strings. A match can therefore be declared with great confidence when a simple test of statistical independence between the two templates fails. The most common technique is to calculate the Hamming distance, the fraction of bits that disagree, which is computed with an exclusive-OR (XOR) comparison of the binary digits of the two templates.
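A minimal sketch of that XOR comparison, assuming templates are packed into Python integers of `n` bits. It follows the masked, fractional form of the Hamming distance that Daugman published, in which bits flagged as unusable (eyelids, eyelashes, reflections) are excluded, and it tries a few circular shifts of the probe to absorb head tilt. Shifting the whole bit string is a simplification (real codes are shifted by angular columns), and the function names and shift range are illustrative assumptions.

```python
import random

def popcount(x: int) -> int:
    """Number of set bits (int.bit_count() does the same on Python 3.10+)."""
    return bin(x).count("1")

def rotate(bits: int, k: int, n: int) -> int:
    """Circularly rotate an n-bit integer left by k (negative k rotates right)."""
    k %= n
    full = (1 << n) - 1
    return ((bits << k) | (bits >> (n - k))) & full

def fractional_hd(code_a: int, mask_a: int, code_b: int, mask_b: int) -> float:
    """Disagreeing bits divided by the number of bits valid in both templates."""
    valid = mask_a & mask_b
    n_valid = popcount(valid)
    if n_valid == 0:
        raise ValueError("templates share no valid bits")
    return popcount((code_a ^ code_b) & valid) / n_valid

def best_hd(code_a, mask_a, code_b, mask_b, n, max_shift=8):
    """Smallest fractional HD over circular shifts of the probe (code_b, mask_b)."""
    return min(
        fractional_hd(code_a, mask_a, rotate(code_b, k, n), rotate(mask_b, k, n))
        for k in range(-max_shift, max_shift + 1)
    )

# Usage: a 2048-bit code compared with a copy rotated by 3 bits (a "tilted head").
n = 2048
rng = random.Random(1)
code = rng.getrandbits(n)
all_valid = (1 << n) - 1
tilted = rotate(code, 3, n)
print(fractional_hd(code, all_valid, tilted, all_valid))  # about 0.5: unaligned, looks unrelated
print(best_hd(code, all_valid, tilted, all_valid, n))     # 0.0 once the shift is undone
```

Taking the minimum over shifts slightly lowers impostor scores too, which is one reason a system’s decision threshold is chosen with the shift range in mind.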

The entropy of variation in human iris texture offers potentially more than 250 degrees of freedom.
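To see why the degrees of freedom matter, one can use a simplification Daugman applied in his own analyses: treat the comparison of two different eyes as N independent fair coin tosses, so the number of disagreeing bits is binomial. The probability that an impostor’s fractional Hamming distance falls at or below a threshold t is then the sum over k from 0 to floor(tN) of C(N, k) / 2^N. The sketch below uses N = 250 to match the figure above; both N and the thresholds are assumptions for illustration.

```python
from math import comb, floor

def impostor_match_probability(threshold: float, dof: int = 250) -> float:
    """P(fractional HD <= threshold) for two unrelated irises, modeled as
    Binomial(dof, 0.5): the chance that at most floor(threshold * dof)
    of dof independent bits disagree."""
    k_max = floor(threshold * dof)
    return sum(comb(dof, k) for k in range(k_max + 1)) / 2 ** dof

for t in (0.25, 0.32, 0.40):
    print(f"threshold {t:.2f}: impostor match probability {impostor_match_probability(t):.2e}")
```

Because this tail shrinks roughly exponentially as N grows, a few hundred degrees of freedom are enough to make chance matches between different eyes vanishingly rare at a moderate threshold.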

So, What Are These Myths?

Iris-recognition technology has featured in several films with science fiction elements, in particular Minority Report, Angels and Demons, and, more recently, I Origins. As often happens with technology in the public “eye,” myths and misperceptions are prone to grow up around it:

1. For iris recognition to work, you have to “scan” the eye with a laser.

In movies, iris-recognition technology is often depicted as a thin, sharp line of light, or a laser, scanned across the eye. This simply doesn’t happen in the real thing. The technique is to take a photo of the front of the eye in near-infrared (NIR) light, which is more or less invisible to the user or seen merely as a dim red glow.

The light is not scanned in any way. However, it may be pulsed, though at a frequency too high for the user to notice or be affected by it.

2. Iris recognition intrusively images the back of the eye.

Much publicity, including some produced by people who should know better, refers to iris recognition as retinal recognition. The iris is not the retina!

Inside the eye, on the back surface, lies the retina, the sensitive tissue that detects light coming in through the pupil and translates it into neural impulses.

Some early identification techniques did indeed use the retina, whose rich pattern of blood vessels is also a source of unique information in humans. Retinal imaging is quite difficult, however, and needs close proximity to the subject. Most of us who have regular eye tests will have experienced retinal photography, in which the vessel structure can also reveal a lot about the health of the individual.

3. Iris recognition uses lasers that could be harmful to the eye.

Iris cameras illuminate the eyes for a very short time with eye-safe LEDs emitting NIR light just beyond the red end of the visible band, typically at 750-900 nm. Lasers aren’t used in iris imaging.

Other than the depiction of laser light scanning the eye in movies, I’m not sure where this myth comes from. It’s true that many years ago, LEDs were classified along with lasers for the purposes of international eye-safety standards; they now have their own classification under IEC 62471, first published in 2006.

4. The unique characteristics of the iris are genetically determined.

Physiological studies have shown that iris texture develops randomly, which makes it very likely that every iris in the world’s population is unique and that the iris patterns of any two eyes are statistically different.

In India’s Unique Identification (UID, Aadhaar) scheme, where iris data from over 1 billion individuals has now been collected, the iris has proven to be the single strongest means of ensuring that enrollments into the UID database are unique rather than duplicates. Technology isn’t perfect, of course, and poor image quality and human error can still interfere.
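Ensuring uniqueness means comparing each new enrollment against everyone already enrolled (a 1:N search) rather than against a single claimed identity. A minimal sketch follows, assuming unmasked integer templates and an illustrative 0.32 threshold; the function names are hypothetical and nothing here reflects how the Aadhaar system is actually built. The arithmetic at the end shows why such searches demand an extremely low per-comparison false match rate: with a billion people enrolled, the number of distinct pairs is about 5 × 10^17.

```python
import random
from typing import Sequence

def hd(a: int, b: int, n: int) -> float:
    """Fractional Hamming distance between two n-bit templates (no masks)."""
    return bin(a ^ b).count("1") / n

def is_duplicate(candidate: int, gallery: Sequence[int], n: int, threshold: float = 0.32) -> bool:
    """1:N de-duplication: flag the enrollment if any stored template is close enough."""
    return any(hd(candidate, stored, n) <= threshold for stored in gallery)

n = 2048
rng = random.Random(7)
gallery = [rng.getrandbits(n) for _ in range(1000)]

# Re-enrollment attempt: stored template 3 with 5% of its bits flipped by imaging noise.
noisy = gallery[3]
for i in rng.sample(range(n), k=n // 20):
    noisy ^= 1 << i

print(is_duplicate(noisy, gallery, n))               # expected: True
print(is_duplicate(rng.getrandbits(n), gallery, n))  # expected: False

# Cross-comparisons needed to de-duplicate N enrollments against each other.
N = 1_000_000_000
pairs = N * (N - 1) // 2
print(f"{pairs:.2e} pairwise comparisons")
```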

5. Identical twins have identical irises.

Actually, not at all. The iris can be used to differentiate between identical twins, whose DNA is the same, and tell them apart with very high confidence. The left and right eyes of the same person also have completely different iris patterns.

6. The iris can reveal or help diagnose medical conditions.

There’s no proven link between medical conditions and the patterned structure of the iris. Studies have shown that the iris structure is stable within a few months of birth, although the colors develop more slowly. Iridology, an alternative-health practice that claims to recognize illness from the iris, hasn’t been validated by any medical study, and moreover relates to the iris color. For iris recognition, a monochrome grayscale image is used.

7. Someone could gouge my eye out and thus steal my ID.

This is the premise of Minority Report and Angels and Demons. However, as soon as the eye is cut off from its blood supply, the iris muscles relax and the pupil opens, leading to a severe restriction in the amount of iris texture available for identification. This means that any attempted recognition would have a very low confidence factor. It doesn’t take very long after that for the corneal surface to cloud over.

For those of a morbid disposition, there was a recent study published by academics in Poland who investigated how long an iris might be recognizable after death. Their conclusions were that at best, it would be a few minutes with a low degree of confidence.

8. Taking eye-dilation drops can let me register with a different identity.

When the United Arab Emirates, one of the largest users of iris recognition for border control, implemented a watch-list system to exclude illegal workers, an unexpected effect came to light. Some individuals found that if they took dilation drops before passing through border control, they would be let in, because the iris texture visible with the pupil heavily dilated was an insufficient match to the template of their “normal” eye. This was countered by checking the ratio of pupil diameter to iris diameter.
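The countermeasure amounts to a quality gate applied before matching. A minimal sketch, assuming the pupil and iris diameters come from the segmentation step; the 0.2 lower and 0.6 upper bounds are hypothetical values chosen for illustration, not the limits used in the UAE system.

```python
def pupil_iris_ratio(pupil_diameter_px: float, iris_diameter_px: float) -> float:
    """Ratio of pupil diameter to iris diameter (near 0 = pinpoint pupil, 1 = no iris visible)."""
    if iris_diameter_px <= 0:
        raise ValueError("iris diameter must be positive")
    return pupil_diameter_px / iris_diameter_px

def acceptable_dilation(pupil_diameter_px, iris_diameter_px, lo=0.2, hi=0.6):
    """Reject captures whose pupil is abnormally dilated (or constricted),
    since too little iris texture remains for a reliable comparison."""
    return lo <= pupil_iris_ratio(pupil_diameter_px, iris_diameter_px) <= hi

print(acceptable_dilation(80, 200))   # ratio 0.40: expected True
print(acceptable_dilation(150, 200))  # ratio 0.75, drug-dilated pupil: expected False
```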

9. Lenses and sensors needed for iris recognition are too big to make it viable in mobile devices.

This myth stems from the large lenses and sensors used in commercially available iris-recognition cameras for airports and access control. Cutting-edge developments in micro lenses and sensor performance mean that, even with the small apertures and form factors mandated by mobile devices, adequate optical-system performance can be obtained for accurate iris recognition.
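A back-of-the-envelope calculation shows what the optics have to achieve. Under a thin-lens approximation, an object of size D at distance d from a lens of focal length f forms an image of size D·f/(d - f); dividing that by the sensor’s pixel pitch gives the number of pixels across the iris. All numbers below are assumptions for illustration: a 12 mm iris, a 4 mm focal-length phone-camera lens, a 1.12 µm pixel pitch, and a target of 200 pixels across the iris. The sketch covers geometric sampling only; a real design must also account for lens sharpness, depth of field, and NIR sensitivity.

```python
def pixels_across_iris(iris_mm, focal_mm, distance_mm, pixel_pitch_um):
    """Thin-lens estimate of how many sensor pixels span the iris image."""
    magnification = focal_mm / (distance_mm - focal_mm)
    image_size_um = iris_mm * magnification * 1000.0
    return image_size_um / pixel_pitch_um

def max_distance_for(target_pixels, iris_mm, focal_mm, pixel_pitch_um):
    """Largest subject distance (mm) that still puts target_pixels across the iris
    (pixels_across_iris solved for distance_mm)."""
    pitch_mm = pixel_pitch_um / 1000.0
    return focal_mm + iris_mm * focal_mm / (target_pixels * pitch_mm)

# Hypothetical phone-camera parameters.
IRIS_MM, FOCAL_MM, PITCH_UM = 12.0, 4.0, 1.12
print(f"{pixels_across_iris(IRIS_MM, FOCAL_MM, 300.0, PITCH_UM):.0f} px at 30 cm")
print(f"{max_distance_for(200, IRIS_MM, FOCAL_MM, PITCH_UM):.0f} mm max distance for 200 px")
```

With these assumed parameters, the phone would need to be held within roughly 22 cm of the eye to reach the target, which illustrates why improvements in sensor resolution and lens design matter for mobile iris recognition.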

10. The accuracy of iris recognition changes depending on eye color and age.

NIR illumination is used so that iris recognition works accurately on all eye colors. The melanin pigment protecting the iris tissue in darker-colored eyes strongly absorbs light in the visible spectrum, hiding the underlying texture, whereas NIR light is far less absorbed and reveals that texture even in dark-brown eyes. As for aging effects, the iris is protected by the cornea, so, unlike fingerprints, it doesn’t wear.

Physiological studies have shown the iris to be remarkably stable. Although some recent studies have reported small changes in iris-recognition performance over several years, a large combined study by NIST in the U.S., drawing on evidence from several systems used over more than a decade, showed the effect on true-match recognition performance to be minimal. Furthermore, the small variations observed in Hamming distance scores could be accounted for by environmental and equipment effects.

11. The CPU and memory needed to run the complex algorithms used in iris recognition make it too power-hungry or compute-intensive to work, even in today’s smartphones.

Not true: Mobile computing power has come a long way and is still evolving. What is critical is reducing power demand and reworking the imaging algorithms to fit within small CPU and memory budgets.

About the Author

Martin George

Biometrics Consultant

Martin George is currently Biometrics Consultant at FotoNation Limited, and an entrepreneur and pioneer in identification technologies and systems. His original company, Smart Sensors, was a research spin-out from the University of Bath, and its MIRLIN iris biometrics technology is deployed worldwide in applications ranging from physical access control to military force protection, gun control in law enforcement, and automated border gates.

Now part of FotoNation Limited, a Tessera company, the biometrics team is working on products and security strategies for the smartphone world. The goal is to provide easy-to-use “payer present” authentication in m-commerce, a key issue for fraud reduction, while protecting privacy of the user.

George holds a Master’s in Engineering from the University of Cambridge (UK), and has worked in a range of technical and business development roles involving the incubation and licensing of intellectual property.