“Sicherheit” is the German word for “safety,” but it also means “security.” When you think about protecting your valuables, you may consider buying a “safe” where you can keep your things secure, but are they also safe (i.e., protected from fire, flood, radiation, and so on)?

As products in both general and healthcare-related technologies have evolved, the way we assess their safety has become much more complex. Over just the last few decades, the focus of medical-device risk management has shifted. Initially, it involved semi-prescriptively addressing hazard domains such as energy hazards, biological hazards, environmental hazards, hazards related to the use of a product, and hazards arising from functional failure, maintenance, and aging.

Now the focus is on recognizing that product lifecycles and data lifecycles (the data associated with a product may have a lifecycle independent of the product’s own) need to be vetted through a structured risk-management process. That process must address nuanced issues that have moved beyond “reasonably foreseeable misuse” into domains such as “malicious use,” where the problem has shifted from being an individual “product” problem to being a “network” problem. For example, a malicious user could enter a network through a “low risk” (i.e., less secure) target and pivot to a higher-value asset on that same network, or even on a “hidden” network that has no direct public access and can be reached only indirectly through the targeted “low risk” device.
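The pivot scenario is, at bottom, a reachability question on a network graph. The following minimal Python sketch assumes a hypothetical four-node topology (the device names are invented for illustration and do not come from any real deployment): a breadth-first search from the public Internet lists every asset an attacker could reach by pivoting, including one on a “hidden” segment that never faces the Internet directly.

```python
from collections import deque

# Hypothetical topology: an edge A -> B means a foothold on A lets an attacker reach B.
NETWORK = {
    "internet": ["smart_thermostat"],           # only the "low risk" device is publicly exposed
    "smart_thermostat": ["clinical_lan"],
    "clinical_lan": ["infusion_pump_gateway"],  # "hidden" segment, reachable only via the LAN
    "infusion_pump_gateway": [],
}


def reachable_from(graph: dict[str, list[str]], start: str) -> list[str]:
    """Return every node reachable from `start` in breadth-first order (start excluded)."""
    seen = {start}
    order = []
    queue = deque([start])
    while queue:
        node = queue.popleft()
        for neighbor in graph.get(node, []):
            if neighbor not in seen:
                seen.add(neighbor)
                order.append(neighbor)
                queue.append(neighbor)
    return order


if __name__ == "__main__":
    # The high-value asset has no direct public access, yet it is reachable by pivoting.
    print(reachable_from(NETWORK, "internet"))
```

In a sketch like this, hardening the “low risk” thermostat (removing its outgoing edge) is what takes the gateway off the attacker’s reachable set, which is why a seemingly minor device matters to the security of the whole network.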

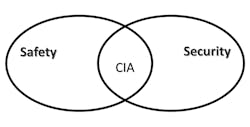

In today’s world of botnets commandeering the Internet of Things (IoT) for nefarious purposes such as cryptomining to finance terrorist activities, we have come to better appreciate the precarious position of our critical infrastructure. Where once we mainly had to focus on “integrity” (I) and “availability” (A) to assure safety, we now have the added burden of focusing on “confidentiality” (C) as well, bringing together the worlds of “safety” and “security” through the well-known “CIA” security triad (Fig. 1).

1. Safety and security are linked through the CIA security triad.

How Do Safety and Security Relate?

It’s this fundamental relationship between “safety” and “security” that we will now examine. To better understand it, let’s first look at some tools that have been at our disposal for many years, beginning with Hazard Based Safety Engineering (HBSE). The basic premise of HBSE can be represented very simply with a three-block model (Fig. 2).

2. This is the Hazard Based Safety Engineering model.

The hazardous source is typically energy, such as electricity or radiation, or a substance, such as a toxic or caustic chemical. The susceptible part is typically a human anatomical structure such as the heart, skin, or eye. The transfer mechanism is the process or sequence of events by which the hazardous source negatively impacts the susceptible part (e.g., by disrupting normal physiological processes).

Thinking about “safety” from this perspective, we can see that there’s a direct corollary in security, as illustrated in Figure 3.

3. This is the security corollary for the HBSE model.

Here we can see that data, like energy or chemicals, can be harmful: it could be used to compromise an “asset,” the item whose confidentiality, integrity, and/or availability must be maintained. From a product-design perspective, then, we have a few options:

- We can remove the hazardous source (or data) or reduce it to a level that minimizes or negates the impact on the susceptible part.

- We can reduce the susceptibility of the susceptible part, such as by using personal protective equipment (or minimizing open ports/services).

- We can control (e.g., block) the transfer mechanism (such as by using intrusion detection and prevention systems [IDS/IPS]).
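The three options share one logic: harm requires all three blocks of the model at once, so removing any one of them breaks the chain. The minimal Python sketch below makes that explicit under a deliberately simplified boolean model; the `HazardChain` fields and the numeric threshold are hypothetical illustrations, not part of HBSE itself.

```python
from dataclasses import dataclass, replace


@dataclass(frozen=True)
class HazardChain:
    """Hypothetical model of one source -> transfer mechanism -> susceptible part chain."""
    source_level: float      # magnitude of the hazardous source (energy, or attacker-supplied data)
    harm_threshold: float    # source level at or above which the susceptible part can be harmed
    transfer_blocked: bool   # True if, e.g., an enclosure or an IDS/IPS interrupts the transfer
    susceptible: bool        # False if, e.g., PPE is worn or the port/service is closed


def harm_possible(chain: HazardChain) -> bool:
    """Harm needs all three blocks at once: enough source, an open transfer path, a susceptible part."""
    return (
        chain.source_level >= chain.harm_threshold
        and not chain.transfer_blocked
        and chain.susceptible
    )


baseline = HazardChain(source_level=10.0, harm_threshold=1.0, transfer_blocked=False, susceptible=True)

# Each design option from the list removes exactly one block of the chain.
options = {
    "reduce the source": replace(baseline, source_level=0.5),
    "reduce susceptibility": replace(baseline, susceptible=False),
    "block the transfer mechanism": replace(baseline, transfer_blocked=True),
}

if __name__ == "__main__":
    print("baseline:", harm_possible(baseline))
    for name, chain in options.items():
        print(f"{name}: {harm_possible(chain)}")
```

Real hazards are rarely this binary (a reduced source may lower, rather than eliminate, the likelihood of harm), but the conjunction captures why any single block is a valid point of control.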

Most often, controlling the transfer mechanism is the approach most directly within the purview of a medical IoT (mIoT) product developer, so we will focus primarily on that aspect of protection. To better understand the transfer mechanism, we can use tools with which many product developers are already very familiar. By diligently combining a “top-down” analysis technique such as fault tree analysis (FTA) with a “bottom-up” technique such as failure mode and effects analysis (FMEA), we can gain significant insight into approaches for controlling the transfer mechanism.

Analysis from Top to Bottom

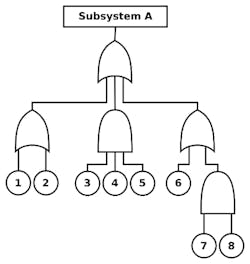

When conducting a “top-down” analysis such as FTA in the security domain, it’s particularly important to bear in mind that an mIoT product is a “subsystem” that will operate within the context of a larger “system.” It is therefore important to understand how root causes of failure or security compromise (designated 1-8 in Figure 4) could impact other subsystems within the system through their “top level” impact on Subsystem A.

4. This fault tree analysis shows how subsystems within the system impact the “top level,” Subsystem A.
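To make the top-down propagation concrete, here is a minimal Python sketch of quantitative fault tree evaluation. It assumes independent basic events that each appear only once in the tree (trees with shared events need cut-set methods instead), and every event name and probability is hypothetical. An AND gate multiplies its input probabilities; an OR gate takes one minus the product of the complements. The last tree shows the point made above: Subsystem A’s top event becomes an input to a hazard in another subsystem.

```python
from dataclasses import dataclass
from math import prod
from typing import Union


@dataclass
class BasicEvent:
    """A root cause (leaf of the tree) with an assumed probability of occurring."""
    name: str
    probability: float


@dataclass
class Gate:
    """An AND/OR gate combining lower-level events."""
    kind: str                 # "AND" or "OR"
    children: list["Node"]


Node = Union[BasicEvent, Gate]


def top_event_probability(node: Node) -> float:
    """Propagate probabilities upward, assuming independent events that appear only once."""
    if isinstance(node, BasicEvent):
        return node.probability
    child_ps = [top_event_probability(child) for child in node.children]
    if node.kind == "AND":
        return prod(child_ps)                          # every input must occur
    if node.kind == "OR":
        return 1.0 - prod(1.0 - p for p in child_ps)   # at least one input occurs
    raise ValueError(f"unknown gate kind: {node.kind!r}")


# Hypothetical top event for Subsystem A: "device compromised".
subsystem_a = Gate("OR", [
    Gate("AND", [
        BasicEvent("default credentials left enabled", 0.1),
        BasicEvent("management service exposed", 0.5),
    ]),
    BasicEvent("unpatched third-party library exploited", 0.02),
])

# Subsystem A's top event feeds a hazard in another subsystem on the same network.
subsystem_b = Gate("AND", [
    subsystem_a,
    BasicEvent("Subsystem B trusts commands from Subsystem A", 0.3),
])

if __name__ == "__main__":
    print(f"P(Subsystem A compromised) = {top_event_probability(subsystem_a):.4f}")
    print(f"P(Subsystem B hazard)      = {top_event_probability(subsystem_b):.4f}")
```

With these made-up numbers, Subsystem A’s top event comes to 1 − (0.95 × 0.98) = 0.069, and the downstream hazard in Subsystem B to 0.069 × 0.3 = 0.0207.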

One way to ensure that these considerations carry through is to model them in a “bottom-up” analysis technique such as FMEA (Fig. 5).

5. FMEA approach for considering connected device impacts.
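One way to carry connected-device impacts into a bottom-up worksheet is sketched below in Python. It uses the conventional risk priority number (RPN = severity × occurrence × detection, each rated 1 to 10) and assumes a hypothetical extra column recording the severity of each failure mode’s effect on connected subsystems, with overall severity taken as the worst effect. The rows and ratings are invented for illustration and are not taken from Figure 5.

```python
from dataclasses import dataclass, field


@dataclass
class FailureMode:
    item: str
    mode: str
    occurrence: int        # 1 (remote) .. 10 (very frequent)
    detection: int         # 1 (almost certainly detected) .. 10 (undetectable)
    local_severity: int    # 1 .. 10, effect on the device itself
    # Hypothetical extra column: severity of the effect on each connected subsystem.
    connected_severity: dict[str, int] = field(default_factory=dict)

    @property
    def severity(self) -> int:
        """Rate severity by the worst effect, local or on any connected subsystem."""
        return max([self.local_severity, *self.connected_severity.values()])

    @property
    def rpn(self) -> int:
        """Conventional risk priority number: severity x occurrence x detection."""
        return self.severity * self.occurrence * self.detection


worksheet = [
    FailureMode("Display", "backlight fails", occurrence=3, detection=2, local_severity=4),
    FailureMode("Wi-Fi module", "default credentials accepted", occurrence=4, detection=6,
                local_severity=3, connected_severity={"infusion pump": 9}),
    FailureMode("Firmware updater", "image signature not verified", occurrence=2, detection=7,
                local_severity=8),
]

if __name__ == "__main__":
    for fm in sorted(worksheet, key=lambda f: f.rpn, reverse=True):
        print(f"{fm.rpn:4d}  S={fm.severity}  {fm.item}: {fm.mode}")
```

Without the connected-device column, the Wi-Fi row would score 3 × 4 × 6 = 72 and rank below the firmware updater (112); with it, the row scores 9 × 4 × 6 = 216 and rises to the top. RPN ranking is only one convention; some FMEA practices prioritize by severity first.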

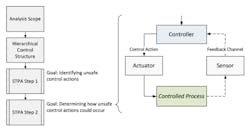

The benefits of combining these two approaches have been recognized more recently, and techniques such as system theoretic process analysis (STPA) now formally unify them (Fig. 6).

6. System Theoretic Process Analysis (from “Hazard and Risk Analysis,” Swiss Universities)
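A core STPA step is to examine each control action in the control structure for four ways it can be unsafe: not provided when needed, provided when it causes a hazard, provided too early, too late, or out of order, and stopped too soon or applied too long. The minimal Python sketch below only enumerates those candidates as a worksheet for analysts to judge against the system’s hazards; the controller and control actions are hypothetical examples.

```python
from itertools import product

# The four general categories of unsafe control action (UCA) examined in STPA.
UCA_TYPES = (
    "not provided when needed",
    "provided when it causes a hazard",
    "provided too early, too late, or out of order",
    "stopped too soon or applied too long",
)


def candidate_ucas(controller: str, actions: list[str]) -> list[str]:
    """Enumerate candidate UCAs (action x category) for analyst review; nothing is judged here."""
    return [f"{controller}: '{action}' {uca}" for action, uca in product(actions, UCA_TYPES)]


if __name__ == "__main__":
    # Hypothetical controller and control actions in a connected infusion system.
    for line in candidate_ucas("Remote monitoring app", ["set infusion rate", "pause infusion"]):
        print(line)
```

Deciding which candidates are actually hazardous, and tracing their causal scenarios through the control loop, remains analyst work that no enumeration replaces.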

At the end of our analyses, the key is to demonstrate, through the use of objective evidence, that yet another meaning of “Sicherheit” has been addressed. This aspect is “assurance.”

While it is relatively easy to conduct an analysis and claim that your product is safe and secure, it may be much more difficult to convince stakeholders such as regulators and customers that these claims are true. Fortunately, there now exist U.S. national standards such as UL 2900-1, Standard for Safety, Software Cybersecurity for Network-Connectable Products, Part 1: General Requirements, and UL 2900-2-1, Standard for Safety, Software Cybersecurity for Network-Connectable Products, Part 2-1: Particular Requirements for Network Connectable Components of Healthcare and Wellness Systems, which are also Recognized Consensus Standards of the U.S. Food and Drug Administration (FDA).

These standards focus on providing objective evidence of “Sicherheit” by reviewing the processes that support product development, such as Quality Management, Risk Management, and Software Lifecycle Processes (including post-market processes). They then use repeatable and reproducible testing as a foundation to determine the composition of the software (i.e., a software bill of materials), identify any known vulnerabilities and exposures in the software, identify common software weaknesses that could be exploited, and verify through structured penetration testing that the security controls intended to protect against these threats are properly implemented. In addition, because there’s always some residual risk associated with “unknown unknowns,” malformed input testing (a.k.a. “fuzz” testing) is conducted to further stress the communication interfaces.
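The idea behind malformed input testing can be shown with a toy mutation-based fuzzer. In the minimal Python sketch below, `parse_tlv` is a hypothetical device message parser (1-byte type, 1-byte length, then the value) with a deliberately planted bug; the fuzzer randomly flips bits, truncates, or extends a valid seed message and flags any exception other than the `ValueError` a robust parser should raise for malformed input. Certification-grade fuzzing uses protocol-aware tools and far larger campaigns; this only illustrates the principle.

```python
import random


def parse_tlv(data: bytes) -> tuple[int, bytes]:
    """Hypothetical parser: 1-byte type, 1-byte length, then `length` value bytes.
    Malformed input should raise ValueError; any other exception is a robustness bug."""
    if not data:
        raise ValueError("empty message")
    msg_type = data[0]
    length = data[1]          # deliberate bug: no check that a length byte is present
    value = data[2:2 + length]
    if len(value) != length:
        raise ValueError("truncated value")
    return msg_type, value


def mutate(seed: bytes, rng: random.Random) -> bytes:
    """Apply one random mutation: flip a bit, truncate, or append random bytes."""
    data = bytearray(seed)
    choice = rng.randrange(3)
    if choice == 0 and data:
        i = rng.randrange(len(data))
        data[i] ^= 1 << rng.randrange(8)
    elif choice == 1:
        data = data[:rng.randrange(len(data) + 1)]
    else:
        data += bytes(rng.randrange(256) for _ in range(rng.randrange(1, 4)))
    return bytes(data)


def fuzz(seed: bytes, iterations: int = 2000, rng_seed: int = 0) -> list[tuple[bytes, str]]:
    """Feed mutated inputs to the parser; record inputs that raise anything but ValueError."""
    rng = random.Random(rng_seed)
    findings = []
    for _ in range(iterations):
        case = mutate(seed, rng)
        try:
            parse_tlv(case)
        except ValueError:
            pass                      # graceful rejection: expected behavior
        except Exception as exc:      # unexpected crash: a finding
            findings.append((case, type(exc).__name__))
    return findings


SEED = bytes([0x01, 0x03, 0x10, 0x20, 0x30])  # type 1, length 3, three value bytes

if __name__ == "__main__":
    findings = fuzz(SEED)
    print(f"{len(findings)} crashing inputs, e.g. {findings[0] if findings else None}")
```

Here the fuzzer quickly finds one-byte messages that raise `IndexError`; checking that at least two bytes are present before reading the length field would turn those crashes into graceful rejections.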

This kind of testing can result in product certification, such as under UL’s Cybersecurity Assurance Program, which was part of the U.S. Cybersecurity National Action Plan (CNAP) from its inception. The information generated through such testing can also help formulate an assurance case, such as the one shown in the illustration from US-CERT (Fig. 7).

7. US-CERT Security Assurance Case Pattern (from “Arguing Security—Creating Security Assurance Cases”)
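At its simplest, an assurance case is a tree of claims in which every leaf claim must rest on objective evidence. The minimal Python sketch below checks a claim tree for leaf claims that still lack evidence. It is a simplification: formal notations also include argument or strategy nodes, context, and assumptions. The claims and evidence names are hypothetical, loosely mirroring the testing activities described above.

```python
from dataclasses import dataclass, field


@dataclass
class Claim:
    text: str
    evidence: list[str] = field(default_factory=list)     # e.g., test reports, analyses
    subclaims: list["Claim"] = field(default_factory=list)


def unsupported_claims(claim: Claim) -> list[str]:
    """Return leaf claims with no evidence; a parent claim is argued through its subclaims."""
    if not claim.subclaims:
        return [] if claim.evidence else [claim.text]
    gaps = []
    for sub in claim.subclaims:
        gaps.extend(unsupported_claims(sub))
    return gaps


# Hypothetical claim tree for a connected medical device.
top = Claim("Device is acceptably secure", subclaims=[
    Claim("Software composition is known", evidence=["SBOM report"]),
    Claim("No known vulnerabilities are exposed", evidence=["vulnerability scan report"]),
    Claim("Security controls are correctly implemented", evidence=["penetration test report"]),
    Claim("Interfaces tolerate malformed input"),          # evidence not yet attached
])

if __name__ == "__main__":
    print(unsupported_claims(top))
```

Running the check before submission surfaces the gap (here, the missing fuzz-testing evidence) while it can still be closed, rather than during stakeholder review.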

So now we see how analysis tools, some of which product developers have used for many decades, can be coupled with newer tools and standards to help improve the cybersecurity posture of our critical infrastructure, such as the medical Internet of Things. The term “Sicherheit” fully embodies the key principles for achieving this: Safety, Security, and Assurance.

Anura Fernando is Chief Innovation Architect at Underwriters Laboratories (UL).

About the Author


Anura Fernando is UL’s Chief Innovation Architect for Medical Systems Interoperability & Security. His multidisciplinary background includes degrees in electrical engineering, software engineering, biology, and chemistry, as well as practical experience in clinical medicine. His more than 20-year career at UL has taken him from product-safety testing to research and predictive modeling of the safety of products that use software, lasers, nanotechnology, traditional and alternative energy sources, energy-storage technologies, and alternative fuels.

More recently, he has been involved in translating his many years of experience in root-cause analysis of software-based system failures into UL’s Cybersecurity Assurance Program (CAP), based on the UL 2900 family of standards, with applications in data analytics, cloud computing, edge computing (IoT), machine learning, and artificial intelligence. He has global responsibility for all UL medical-device software-related certification programs and is a member of numerous domestic and international standardization committees and government advisory committees, such as MDICC, FDASIA, HHS HCIC Task Force, and NIH QMDI.