Large Language Models in the Car

What you’ll learn:

- How generative AI and large language models can be used in a car.

- How Ambarella’s CV3 family handles multi-sensor perception, fusion, and path-planning support.

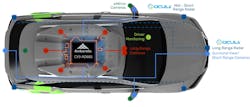

Technology Editor Cabe Atwell and I got a chance to ride with Ambarella’s General Manager, Alberto Broggi (watch the video above), to check out the company’s latest software running on its CV3 family of systems-on-chip (see figure). The car was the same one we rode in at the 2024 Consumer Electronics Show (CES); see “Advanced AI SoC Powers Self-Driving Car.”

This time around, we got to see generative AI in action with a vision language model (VLM), a form of large language model (LLM), running on a single CV3 chip. The VLM was tied into the car’s cameras and sensors. The CV3 also provided multi-sensor perception, fusion, and path-planning support for the advanced driver-assistance system (ADAS).
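To make the idea of sensor fusion concrete, here is a minimal sketch of one textbook approach: inverse-variance weighting of two independent distance estimates to the same object. This is not Ambarella’s method, which the demo did not detail. The `RangeEstimate` type, the `fuse` function, and the numbers are all assumptions made for illustration.

```python
from dataclasses import dataclass


@dataclass
class RangeEstimate:
    """One sensor's distance estimate to an object (hypothetical type)."""
    meters: float
    variance: float  # assumed sensor noise variance in m^2; must be > 0


def fuse(a: RangeEstimate, b: RangeEstimate) -> RangeEstimate:
    """Inverse-variance weighted fusion of two independent estimates."""
    w_a = 1.0 / a.variance
    w_b = 1.0 / b.variance
    fused_meters = (w_a * a.meters + w_b * b.meters) / (w_a + w_b)
    fused_variance = 1.0 / (w_a + w_b)
    return RangeEstimate(fused_meters, fused_variance)


# Illustrative numbers only: a noisier camera estimate and a tighter radar one.
camera = RangeEstimate(meters=42.0, variance=4.0)
radar = RangeEstimate(meters=40.0, variance=1.0)
fused = fuse(camera, radar)
```

The fused estimate lands closer to the less noisy sensor, and its variance is smaller than either input, which is the basic payoff of combining sensors.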

In the demonstration, the VLM identifies objects and their state, such as a red light, and reports the details in text like a conventional LLM. Typically, this information would be passed to the path-planning support to generate feedback for the driver.
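As a rough sketch of how text from a VLM might be turned into something path-planning software can act on, the snippet below parses a simple `object: state` line and maps it to a driver message. The text format, the `Observation` type, and both functions are hypothetical; the actual interface between the VLM and the ADAS stack was not described in the demo.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class Observation:
    """Structured form of one VLM text report (hypothetical type)."""
    obj: str
    state: str


def parse_description(line: str) -> Optional[Observation]:
    """Parse an assumed 'object: state' line; return None if it is malformed."""
    obj, sep, state = line.partition(":")
    if not sep or not obj.strip() or not state.strip():
        return None
    return Observation(obj.strip().lower(), state.strip().lower())


def driver_feedback(obs: Observation) -> Optional[str]:
    """Map an observation to a driver-facing message (illustrative rule only)."""
    if obs.obj == "traffic light" and obs.state == "red":
        return "Red light ahead: prepare to stop"
    return None


report = parse_description("Traffic light: RED")
message = driver_feedback(report) if report else None
```

Free-form model output is harder to parse than this, so a production system would more likely constrain the model to a fixed schema or consume its output in a non-text form.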

The CV3 includes a dozen Arm Cortex-A78AE cores and six Cortex-R52 cores; the latter operate in lockstep pairs. The chip integrates an advanced image signal processor (ISP), designed to handle low-light conditions, along with a dense stereo and optical flow engine to help with sensor fusion. An onboard GPU lets the CV3 drive displays directly. The CVflow AI neural vector processor (NVP) engine handles the VLM support.
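Lockstep means two cores execute the same work and their results are compared so that a fault in one core is detected rather than silently propagated. Real lockstep is done in hardware, cycle by cycle; the sketch below is only a software analogy, and `run_in_lockstep` and `LockstepMismatch` are hypothetical names. It also shows the arithmetic implied by the core count: six cores in pairs yield three redundant units.

```python
from typing import Callable, TypeVar

T = TypeVar("T")

R52_CORES = 6                     # core count from the article
LOCKSTEP_PAIRS = R52_CORES // 2   # six cores paired up give three redundant units


class LockstepMismatch(Exception):
    """Raised when the two redundant executions disagree."""


def run_in_lockstep(
    primary: Callable[[int], T],
    checker: Callable[[int], T],
    value: int,
) -> T:
    """Run the same computation twice and compare the results.

    Software analogy only: hardware lockstep compares core outputs
    every cycle rather than once per function call.
    """
    a = primary(value)
    b = checker(value)
    if a != b:
        raise LockstepMismatch(f"primary={a!r} checker={b!r}")
    return a


def double(x: int) -> int:
    return x * 2


result = run_in_lockstep(double, double, 21)
```

The trade-off is that half of the cores do no additional useful work; they exist to catch errors, which is why lockstep is typically reserved for safety-critical tasks.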

About the Author

William G. Wong

Senior Content Director - Electronic Design and Microwaves & RF

I am Editor of Electronic Design focusing on embedded, software, and systems. As Senior Content Director, I also manage Microwaves & RF and I work with a great team of editors to provide engineers, programmers, developers and technical managers with interesting and useful articles and videos on a regular basis. Check out our free newsletters to see the latest content.

Check out my blog, AltEmbedded on Electronic Design, as well as my latest articles on this site.

You can visit my social media via these links:

- AltEmbedded on Electronic Design

- Bill Wong on Facebook

- @AltEmbedded on Twitter

- Bill Wong on LinkedIn

I earned a Bachelor of Electrical Engineering at the Georgia Institute of Technology and a Master’s in Computer Science from Rutgers University. I still do a bit of programming using everything from C and C++ to Rust and Ada/SPARK. I also do a bit of PHP programming for Drupal websites and have posted a few Drupal modules.

I still get hands-on with software and electronic hardware. Some of this can be found in our Kit Close-Up video series. You can also see me in many of our TechXchange Talk videos. I am interested in a range of projects from robotics to artificial intelligence.