Packing Large AI into Small Embedded Systems

What you’ll learn:

- What Femtosense’s SPU-001 AI accelerator is.

- How sparsity and small-footprint accelerators reduce space and power requirements.

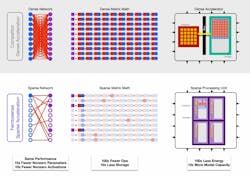

Not every microcontroller can handle artificial-intelligence and machine-learning (AI/ML) chores. Simplifying the models is one way to squeeze algorithms into a more compact embedded compute engine. Another way is to pair the microcontroller with an AI accelerator like Femtosense’s Sparse Processing Unit (SPU), the SPU-001, and take advantage of sparsity in AI/ML models (see figure).

In this episode, I get the facts from Sam Fok, CEO of Femtosense, about AI/ML on the edge, the company’s dual-sparsity design, and how the small, low-power SPU-001 can augment a host processor.
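To get a feel for why sparsity cuts both memory and compute, here is a minimal Python sketch. It is a generic illustration, not Femtosense’s implementation. It assumes "dual sparsity" means skipping both zero-valued weights and zero-valued activations, and the function names and the tiny example matrix are hypothetical.

```python
def dense_matvec(weights, x):
    """Dense matrix-vector product. Returns (result, multiply-accumulate count)."""
    macs = 0
    out = []
    for row in weights:
        acc = 0.0
        for w, xi in zip(row, x):
            acc += w * xi
            macs += 1
        out.append(acc)
    return out, macs


def compress_rows(weights):
    """Weight sparsity: store only nonzero weights as (column, value) pairs."""
    return [[(j, w) for j, w in enumerate(row) if w != 0.0] for row in weights]


def dual_sparse_matvec(sparse_rows, x):
    """Same product, but zero weights are never stored and
    zero activations are skipped at run time (assumed meaning of dual sparsity)."""
    macs = 0
    out = []
    for row in sparse_rows:
        acc = 0.0
        for j, w in row:
            if x[j] == 0.0:  # activation sparsity: nothing to compute
                continue
            acc += w * x[j]
            macs += 1
        out.append(acc)
    return out, macs


# Hypothetical layer: 4 of 12 weights are nonzero, 2 of 4 activations are nonzero.
W = [
    [0.0, 2.0, 0.0, 0.0],
    [1.0, 0.0, 0.0, -1.0],
    [0.0, 0.0, 0.0, 0.5],
]
x = [3.0, 0.0, 0.0, 4.0]

dense_out, dense_macs = dense_matvec(W, x)
sparse_rows = compress_rows(W)
sparse_out, sparse_macs = dual_sparse_matvec(sparse_rows, x)
stored_weights = sum(len(row) for row in sparse_rows)

print(dense_out, dense_macs)                    # [0.0, -1.0, 2.0] 12
print(sparse_out, sparse_macs, stored_weights)  # [0.0, -1.0, 2.0] 3 4
```

In this toy case, the sparse path stores a third of the weights and performs a quarter of the multiply-accumulates while producing the same result. Real savings depend on how sparse a given model and its inputs actually are, and on the overhead of storing the column indices.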

SHOW NOTES

01:26 – SPU-001 NPU

05:17 – Model Support

08:26 – Memory

09:55 – Audio Inputs

10:55 – ADAM-100

13:37 – Audio Applications

15:04 – Future of Functionality


About the Author

William G. Wong

Senior Content Director - Electronic Design and Microwaves & RF

I am Editor of Electronic Design focusing on embedded, software, and systems. As Senior Content Director, I also manage Microwaves & RF and I work with a great team of editors to provide engineers, programmers, developers and technical managers with interesting and useful articles and videos on a regular basis. Check out our free newsletters to see the latest content.

You can send press releases for new products for possible coverage on the website. I am also interested in receiving contributed articles for publishing on our website. Use our template and send to me along with a signed release form.

Check out my blog, AltEmbedded on Electronic Design, as well as my latest articles on this site.

You can visit my social media via these links:

- AltEmbedded on Electronic Design

- Bill Wong on Facebook

- @AltEmbedded on Twitter

- Bill Wong on LinkedIn

I earned a Bachelor of Electrical Engineering at the Georgia Institute of Technology and a Master’s in Computer Science from Rutgers University. I still do a bit of programming using everything from C and C++ to Rust and Ada/SPARK. I also do some PHP programming for Drupal websites and have posted a few Drupal modules.

I still get hands-on with software and electronic hardware. Some of this can be found on our Kit Close-Up video series. You can also see me on many of our TechXchange Talk videos. I am interested in a range of projects from robotics to artificial intelligence.

Marie Darty

Group Multimedia Director, Engineering & Manufacturing

Marie Darty is a digital media professional currently serving as the Group Multimedia Director for the Manufacturing & Engineering Group at Endeavor Business Media. A graduate of Jacksonville State University, she earned her Bachelor of Arts in Digital Communication with a concentration in Digital Journalism in December 2016. In her current role, she leads the strategy and production of multimedia content, overseeing video series planning and editing. Additionally, she oversees podcast production and marketing of multimedia content.