Achieving Safety Through Sensing


What you’ll learn:

- Why there is a pressing need to improve road safety.

- Resulting evolution of ADAS through the SAE levels.

- The pros and cons of various sensor technologies.

- Considerations for underlying sensing system architecture.

Every year, approximately 1.2 million people are killed due to road traffic accidents and countless others suffer life-changing injuries. With this in mind, the World Health Organization and the United Nations General Assembly collaborated to create a plan called the Decade of Action for Road Safety. The plan seeks to reduce road traffic deaths and injuries by at least 50% by 2030.

But lawmakers have their work cut out. Studies cited in a recent New York Times article note that during the pandemic, the number of crashes in the U.S. increased 16%, and road fatalities in 2021 were at their highest in 15 years at over 42,000. And while the picture varies globally, this reversal of earlier U.S. road safety gains unfortunately appears to be continuing today.

Legislation and enforcement will play a key role in achieving the goal of halving fatalities and injuries. But additional impact will come from automakers implementing advanced driver-assistance systems (ADAS) and increasing levels of driver automation.

While autonomous vehicles and the oft-cited SAE levels of driving automation have received lots of industry coverage and commentary, the reality is that ADAS solutions are already saving lives. They do so by enabling features such as automatic emergency braking (AEB), lane departure warning and forward collision warning (LDW and FCW, respectively), blind spot detection (BSD), and driver and occupant monitoring (DMS/OMS). Accelerating the deployment of semiconductor-enabled, cost-effective, and high-performance ADAS solutions must therefore be an industry priority.

Different Sensor Technologies and Their Implementation in ADAS

To reach these levels of automation and autonomy, ADAS uses multiple sensing modalities, including:

- Cameras, which deploy vision-based sensing.

- Radars, which leverage radio-frequency-based sensing.

- LiDARs, which deploy infrared-light-based sensing.

Cameras capture images of a vehicle’s surroundings and enable human-vision- and computer-vision-based sensing use cases such as surround view, LDW, AEB, BSD, and driver and occupant monitoring.

Camera-based sensing requires a processing pipeline that comprises an image sensor, image signal processing (for color recovery, noise reduction, auto exposure, etc.), and software perception algorithms (both classical and AI-based) that identify objects such as other vehicles, traffic lights, road signs, and pedestrians in the camera data. However, cameras have limited depth perception and perform poorly in inclement weather.

Conversely, radar performs well in low-light and poor weather conditions and can detect objects at both short (~1 m) and long (~200 m) distances. Radar has been extensively deployed for FCW, BSD, and adaptive cruise control (ACC).

Early automotive radar deployments used pulse-based Incoherent sensing, similar to the “pinged” radar so often depicted in the movies. A radio pulse is emitted, and the time the pulse takes to reflect back from an object determines the object's distance (known as time-of-flight, or ToF, detection).
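The ToF principle reduces to one relation: the pulse travels to the object and back, so the distance is half the round-trip path, d = c·t/2. The sketch below illustrates only that arithmetic; it assumes ideal free-space propagation, and the function names are hypothetical rather than any sensor's API.

```python
# Sketch of pulsed time-of-flight (ToF) ranging. Assumes ideal free-space
# propagation at the speed of light; real sensors add timing offsets,
# detection thresholds, and noise handling that are omitted here.
C = 299_792_458.0  # speed of light in vacuum, m/s


def tof_distance_m(round_trip_s: float) -> float:
    """Distance to the target from the measured round-trip delay.

    The pulse travels out and back, so the one-way distance is half of
    the total path length c * t.
    """
    if round_trip_s < 0:
        raise ValueError("round-trip time must be non-negative")
    return C * round_trip_s / 2.0


def expected_round_trip_s(distance_m: float) -> float:
    """Inverse relation: the echo delay expected from a target at distance_m."""
    return 2.0 * distance_m / C


delay = expected_round_trip_s(200.0)
# A 200 m target returns its echo after roughly 1.33 microseconds.
print(f"echo delay {delay * 1e6:.3f} us -> {tof_distance_m(delay):.1f} m")
```

The nanosecond-scale delays involved are one reason precise timing electronics matter even for this simplest form of ranging.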

As semiconductor integration and processing capability evolved, automakers quickly switched from Incoherent to Coherent radar detection because of its longer range, interference resilience, lower transmit peak power, and ability to extract instantaneous per-point velocity information.

Frequency-modulated continuous-wave (FMCW) detection is the most popular Coherent technique deployed in today’s automotive radar solutions. It’s also the approach adopted by telecommunications some 20+ years ago due to its proven underlying benefits.
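In FMCW, the transmitter sweeps (chirps) its frequency linearly; mixing the echo with the live chirp yields a "beat" tone whose frequency is proportional to range, while the Doppler shift encodes radial velocity. The sketch below shows these textbook relations under simplifying assumptions: a single target, an ideal linear chirp, and no range-Doppler coupling. In practice, radar velocity is usually estimated from phase changes across multiple chirps. The chirp parameters are hypothetical and not taken from any product.

```python
# Sketch of basic FMCW relations for a single linear chirp.
# Simplifications (assumed for illustration): one target, ideal linear
# chirp, no range-Doppler coupling. Chirp parameters are hypothetical.
C = 299_792_458.0  # speed of light, m/s


def chirp_slope_hz_per_s(bandwidth_hz: float, chirp_s: float) -> float:
    return bandwidth_hz / chirp_s


def beat_frequency_hz(range_m: float, bandwidth_hz: float, chirp_s: float) -> float:
    # The echo is the transmitted chirp delayed by 2R/c; mixing it with the
    # live chirp produces a tone at (slope * delay).
    return chirp_slope_hz_per_s(bandwidth_hz, chirp_s) * 2.0 * range_m / C


def range_from_beat_m(beat_hz: float, bandwidth_hz: float, chirp_s: float) -> float:
    # Invert the relation above: R = c * f_b / (2 * slope).
    return C * beat_hz / (2.0 * chirp_slope_hz_per_s(bandwidth_hz, chirp_s))


def range_resolution_m(bandwidth_hz: float) -> float:
    # Two targets are separable when their ranges differ by at least c / (2B).
    return C / (2.0 * bandwidth_hz)


def radial_velocity_mps(doppler_hz: float, carrier_hz: float) -> float:
    # Doppler shift f_d = 2v / wavelength, so v = wavelength * f_d / 2.
    wavelength_m = C / carrier_hz
    return wavelength_m * doppler_hz / 2.0


# Hypothetical 77-GHz chirp: 1 GHz swept over 50 microseconds.
B, T_CHIRP, F_CARRIER = 1e9, 50e-6, 77e9
fb = beat_frequency_hz(100.0, B, T_CHIRP)
print(f"100 m target -> beat tone {fb / 1e6:.2f} MHz")
print(f"range resolution {range_resolution_m(B) * 100:.1f} cm")
print(f"5 kHz Doppler -> {radial_velocity_mps(5e3, F_CARRIER):.2f} m/s")
```

Because the beat tone sits in the MHz range rather than requiring GHz-rate sampling of the echo, FMCW processing can run on comparatively modest ADCs, which is part of its appeal for integrated radar SoCs.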

In addition to radar, light detection and ranging (LiDAR) is now deployed in vehicles for long-range (~200 m) detection. At its most basic level, a LiDAR system emits photons from a light source and measures properties of the returned signal to determine object distance. Operating in the infrared spectrum, LiDAR uses far shorter wavelengths than radar: roughly 2,500 times shorter when comparing a ~193-THz (1550-nm wavelength) LiDAR with a 77-GHz (~3.9-mm wavelength) radar. This gives LiDAR a large sensing-resolution advantage over radar, which is why the two are deployed as complementary sensors.
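The wavelength comparison follows from λ = c/f. The sketch below reproduces the roughly 2,500x ratio and, as an illustration, applies the Rayleigh criterion (θ ≈ 1.22·λ/D) to show why a shorter wavelength gives finer angular resolution for the same aperture. The 10-cm aperture is a hypothetical value chosen only to make the scaling visible; real sensors differ in optics, antenna arrays, and scanning.

```python
# Compares LiDAR and radar wavelengths using lambda = c / f. The 10-cm
# aperture below is hypothetical, used only to show how diffraction-limited
# angular resolution scales with wavelength.
import math

C = 299_792_458.0  # speed of light, m/s


def wavelength_m(freq_hz: float) -> float:
    return C / freq_hz


def diffraction_limit_rad(wavelength: float, aperture_m: float) -> float:
    # Rayleigh criterion for a circular aperture: theta ~ 1.22 * lambda / D.
    return 1.22 * wavelength / aperture_m


lidar_wavelength = 1550e-9              # 1550-nm LiDAR
lidar_freq = C / lidar_wavelength       # about 193 THz
radar_wavelength = wavelength_m(77e9)   # about 3.9 mm at 77 GHz
ratio = radar_wavelength / lidar_wavelength

print(f"LiDAR: {lidar_freq / 1e12:.1f} THz, radar: {radar_wavelength * 1e3:.2f} mm")
print(f"wavelength ratio: {ratio:.0f}x")

APERTURE_M = 0.10  # hypothetical aperture, identical for both sensors
for name, wl in (("radar", radar_wavelength), ("LiDAR", lidar_wavelength)):
    theta_deg = math.degrees(diffraction_limit_rad(wl, APERTURE_M))
    print(f"{name}: diffraction limit {theta_deg:.5f} deg")
```

Since the diffraction limit is linear in wavelength, the same ~2,500x factor carries straight through to the resolution bound for equal apertures.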

Consequently, automakers are looking at ways to use LiDAR not only to detect lost cargo or a tire (~15-cm height) at long range (on a highway, say), but also to accurately detect small obstacles (including pets or children) in short-range (<10 m) automated parking applications.
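To see why resolution matters for the tire example, it helps to compute the angle a 15-cm object subtends at the sensor. The sketch below does that geometry and then counts how many uniformly spaced scan lines are guaranteed to land on the object. The angular resolutions tried are hypothetical illustration values, not specifications of any sensor.

```python
import math


def subtended_angle_deg(height_m: float, range_m: float) -> float:
    """Angle that an object of the given height subtends at the sensor."""
    return math.degrees(2.0 * math.atan(height_m / (2.0 * range_m)))


def samples_on_target(angle_deg: float, resolution_deg: float) -> int:
    """Minimum number of uniformly spaced scan lines guaranteed to fall on
    the object, regardless of how the scan grid is aligned with it."""
    return math.floor(angle_deg / resolution_deg)


TIRE_HEIGHT_M = 0.15
for range_m in (200.0, 10.0):
    angle = subtended_angle_deg(TIRE_HEIGHT_M, range_m)
    print(f"{range_m:>5.0f} m: {angle:.3f} deg subtended")

# Hypothetical vertical resolutions, for illustration only.
far_angle = subtended_angle_deg(TIRE_HEIGHT_M, 200.0)
for res in (0.1, 0.05, 0.01):
    print(f"{res} deg resolution -> {samples_on_target(far_angle, res)} line(s) on target")
```

At 200 m the tire subtends only about 0.04°, so a sensor must resolve finer than that to guarantee even a single return, whereas the same object at parking distances subtends close to a degree.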

As with radar, early deployments of LiDAR sensing in vehicles have been ToF-based. But Coherent LiDAR detection (especially FMCW-based) is now gaining the attention of automakers because of its key advantages over ToF detection, such as interference resilience and direct per-point velocity measurement.

Coherent detection’s amenability to silicon photonics integration of the optical front-end, together with the growing availability of dedicated LiDAR processor systems-on-chips (SoCs) such as indie Semiconductor’s iND83301, will help drive down system cost, size, and power consumption while meeting automakers’ high-performance requirements.

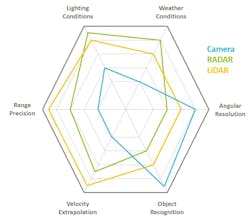

Figure 1 shows the respective strengths of each sensing modality. Automakers typically deploy multiple modalities, both to maximize the ability of ADAS functions to complement a driver’s perception of the environment and to improve system redundancy.

Sensors Integration into Advanced Vehicle Architecture

The data generated by the growing multitude of ADAS sensors must be transported and processed to build a perception of the vehicle’s environment. That perception can then be used to warn the driver of potential danger, or even to take automated corrective action such as emergency braking or steering actuation. But ADAS sensors sit within the framework of a vehicle’s electrical and electronic (E/E) architecture, so the ADAS architecture must be designed within this wider context.

The increase in automotive semiconductor integration and processing performance has accelerated the three key automotive megatrends: ADAS, enhanced in-cabin user experience, and drivetrain electrification. As a result, the number of semiconductor-enabled electronic control units (ECUs) within a vehicle has exploded from tens to over one hundred today.

For automakers, this has created challenges in terms of E/E architecture design complexity, cost and weight of ECU wiring and power distribution, power and thermal constraints, vehicle software management, and supply-chain logistics.

To enable the functional and processing needs of the automotive megatrends, the legacy single-function ECU architectures are being replaced by higher levels of multifunction ECU consolidation and greater processing centralization through Domain (function specific), Zone (physically co-located functions), and Central Compute (a central “brain”) E/E architectures (Fig. 2). The exact definitions of these E/E architectures vary between industry stakeholders, but the broad trajectory to higher levels of ECU functional integration is consistent.

This evolution toward greater E/E architecture centralization poses fundamental questions for ADAS sensing architectures:

- Should the sensor data be fully processed and perceived at the vehicle’s edge, with each sensor’s perception “result” passed downstream to Domain, Zone, or Central Compute ECUs to trigger any mitigating safety action?

- Should raw sensor data be transported to the Central Compute for processing, perception, and actuation?

- Should some processing be performed at or near the vehicle’s edge, with further processing and perception performed in Domains, Zones, and Central Compute ahead of any needed actuation?

The somewhat unsatisfying answer, unfortunately, is that “it depends.”

Each automaker has different, often legacy, vehicle platforms it must support across vehicle classes, from entry and mid-size to premium and luxury models. The premise of a one-size-fits-all Central Compute “brain” E/E architecture is appealing in principle and may suit select single-platform automakers (such as luxury-only makers or “clean-sheet” EV makers with no legacy platforms). Yet the reality is that most automakers need to support several E/E architectures to address their commercial requirements.

Of course, automaker ECU consolidation and reuse of software across models will be crucial to maximize development and cost efficiencies. However, a central-brain-only architecture isn’t a panacea, nor will it be commercially practical for most automakers.

Leveraging Distributed Intelligence in ADAS Systems

As a result, E/E and ADAS architectures that support distributed intelligence will exist for the foreseeable future, with data processing and perception spanning the vehicle’s sensor edge as well as its Domain, Zone, and Central Compute ECUs. Such distributed architectures don’t fully solve software complexity and management, but they do ease data transport, ECU wiring, and power-distribution challenges. They also let automakers offer ECUs and ADAS sensors as options to meet the price points of different vehicle models.

In the case of ADAS sensing, a distributed-intelligence architecture means that some data processing and perception is implemented at or near the sensor. The resulting perception output (for example, scene segmentation, object classification, or object detection) is then passed to the Domain, Zone, or Central Compute ECUs, where it’s combined with the perception outputs of other sensors for final perception and safety-response actuation.

Such a perception architecture is often referred to as late fusion because each sensor has already performed some degree of perception. Late fusion contrasts with early fusion associated with Central Compute-only architectures where raw, unprocessed data from multiple sensor modalities is transported across a vehicle for aggregate processing in a central “brain” ECU.
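One way to see why late fusion eases data transport is a back-of-envelope comparison of what each approach ships across the vehicle for a single camera. Every parameter in the sketch below (resolution, bit depth, frame rate, object count, bytes per object) is a hypothetical example, not a figure from any specific camera, link, or ECU. The trade-off it does not capture is that early fusion gives the central ECU access to all of the raw information, whereas late fusion discards some of it at the edge.

```python
# Back-of-envelope comparison of per-camera data volume for early vs. late
# fusion. All parameters are hypothetical examples for illustration.


def raw_camera_bps(width: int, height: int, bits_per_pixel: int, fps: int) -> int:
    # Early fusion: every raw pixel of every frame goes to the central ECU.
    return width * height * bits_per_pixel * fps


def object_list_bps(max_objects: int, bytes_per_object: int, rate_hz: int) -> int:
    # Late fusion: only a per-frame list of detected objects is sent.
    return max_objects * bytes_per_object * 8 * rate_hz


raw = raw_camera_bps(1920, 1080, 12, 30)   # ~2-MP camera, 12-bit raw, 30 fps
objs = object_list_bps(64, 32, 30)         # up to 64 objects of 32 bytes each
print(f"raw stream:  {raw / 1e6:.1f} Mbit/s")
print(f"object list: {objs / 1e6:.2f} Mbit/s")
print(f"reduction:   {raw / objs:.0f}x")
```

Under these assumed numbers, the object list is three orders of magnitude smaller than the raw stream, which is the kind of saving that relaxes in-vehicle network and wiring requirements.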

One potential use case for the distributed-intelligence sensing architecture is the protection of vulnerable road users (VRUs), such as pedestrians and cyclists. Recent changes to new car assessment programs, such as Euro NCAP, have introduced additional VRU tests, including in back-up and blind-spot scenarios.

Blind-spot radars and surround-view cameras are often physically co-located. As a result, automakers are looking for single SoC solutions that can process both radar and camera data and perform fusion of each pipeline’s decisions (i.e., labeled 2D points in the case of radar, and bounding boxes in the case of camera). This will help drive down ADAS sensing costs and enable low-latency edge decision-making.
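The decision-level fusion described above, combining labeled radar points with camera bounding boxes, can be sketched roughly as follows. This is an illustrative example under stated assumptions, not indie’s or any vendor’s implementation: it assumes the radar points have already been projected into the camera image using a calibration (not shown), and the label-matching and nearest-point rules, class names, and sign convention are all hypothetical choices. Production systems typically add gating, tracking, and confidence weighting.

```python
from dataclasses import dataclass
from typing import List, Optional


@dataclass
class RadarPoint:
    # Assumes the detection was already projected into the camera image
    # via a (hypothetical) radar-to-camera calibration.
    u: float             # image column, pixels
    v: float             # image row, pixels
    range_m: float
    velocity_mps: float  # radial velocity (assumed: negative = approaching)
    label: str


@dataclass
class BoundingBox:
    x_min: float
    y_min: float
    x_max: float
    y_max: float
    label: str


@dataclass
class FusedObject:
    label: str
    box: BoundingBox
    range_m: Optional[float]       # None if no radar point supports the box
    velocity_mps: Optional[float]


def inside(box: BoundingBox, p: RadarPoint) -> bool:
    return box.x_min <= p.u <= box.x_max and box.y_min <= p.v <= box.y_max


def fuse(boxes: List[BoundingBox], points: List[RadarPoint]) -> List[FusedObject]:
    """Attach radar range/velocity to camera boxes (late fusion sketch).

    A radar point supports a box if it lies inside the box and carries the
    same class label; the nearest supporting point is kept.
    """
    fused = []
    for box in boxes:
        hits = [p for p in points if p.label == box.label and inside(box, p)]
        if hits:
            nearest = min(hits, key=lambda p: p.range_m)
            fused.append(FusedObject(box.label, box, nearest.range_m, nearest.velocity_mps))
        else:
            fused.append(FusedObject(box.label, box, None, None))
    return fused


boxes = [
    BoundingBox(100, 200, 160, 360, "pedestrian"),
    BoundingBox(400, 220, 600, 380, "vehicle"),
    BoundingBox(700, 250, 760, 330, "cyclist"),
]
points = [
    RadarPoint(130, 300, 12.5, -1.2, "pedestrian"),
    RadarPoint(500, 300, 25.0, 3.0, "vehicle"),
    RadarPoint(520, 310, 40.0, 0.0, "vehicle"),
]
for obj in fuse(boxes, points):
    print(obj.label, obj.range_m, obj.velocity_mps)
```

Each modality contributes what it measures best: the camera supplies the class and extent of the object, and the radar supplies range and velocity. Only the small fused list then needs to leave the edge SoC.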

The distributed-intelligence concept of sensor fusion at or near the edge can also extend to other ADAS use cases and sensor modalities, helping to bring the benefits of ADAS to the widest market.

The Ultimate Goal of ADAS: Road Safety

ADAS plays a crucial role in the automotive industry’s efforts to reduce road fatalities and injuries. Because the capabilities of different sensor modalities complement each other, automakers are increasingly deploying multi-modal sensing to enhance a driver’s environmental perception and a vehicle’s safety response actions.

Sensor innovation and distributed intelligence in the context of evolving vehicle E/E architectures can help to proliferate ADAS technology across all vehicle classes. In the end, our roads will be safer for all road users.


About the Author

Chet Babla

SVP Strategic Marketing, indie Semiconductor

Chet Babla is indie Semiconductor’s Senior Vice President of Strategic Marketing, responsible for expanding indie’s Tier-1 and automotive OEM customer base as well as supporting product roadmap development.

Mr. Babla has worked in the technology industry for over 25 years in a variety of technical and commercial roles, starting his career as an analog chip designer. He most recently served as Vice President of Arm’s Automotive Line of Business, where he led a team focused on delivering the processing technology required for automotive applications including powertrain, digital cockpit, ADAS, and autonomous driving. Prior to Arm, he held multiple senior roles in the semiconductor industry and advised the UK government on its ICT trade and investment strategy.

He holds a first-class degree in Electrical & Electronic Engineering from The University of Huddersfield, UK, and has completed multiple executive leadership programs, including at the Haas School of Business, University of California, Berkeley.