TD-LTE And LTE-Advanced Networks Need Correct Time And Phase Data

Market research firm Infonetics forecasts the LTE equipment market will reach $17.5 billion in 2016. This explosive growth will cause growing pains as cellular carriers struggle to transform the largely circuit-switched network created for voice into a very fast, packet-switched Internet Protocol (IP) network capable of carrying video to millions of subscribers simultaneously.

According to 4G Americas, 266 LTE networks in 99 countries were operational as of February 2014. The initial LTE deployment wave was exclusively based on frequency division duplexing (FDD), where two different (“paired”) spectrum bands are needed: one for the transmission from the cell site to the mobile device, and the other for the transmission from the mobile device back to the cell site.

Going forward, more and more LTE networks will use time division duplexing (TDD) LTE technology, where the same spectrum band is used for both upstream and downstream transmission. LTE TDD is more flexible in allocating downstream and upstream bandwidth, but the biggest advantage is that it only needs one spectrum band instead of two.

Different spectrum bands have been made available in different countries for LTE FDD and LTE TDD. As of December 2013, 25 LTE TDD networks were in operation according to the Global Mobile Suppliers Association (GSA). China Mobile, the largest mobile operator in the world with around 650 million subscribers, has started to roll out its own LTE TDD network. Today, chipsets for mobile devices that support both FDD and TDD LTE versions are widely available, ensuring worldwide portability and roaming coverage.

LTE does not stop there. LTE-Advanced (LTE-A) is already in a testing phase and even in early deployments with some mobile operators in Korea. It boosts the speed available to mobile devices using techniques such as carrier aggregation (CA), which combines multiple spectrum bands to look like a single wider, higher-capacity band, and coordinated multi-point (CoMP) and multiple-input multiple-output (MIMO) transmission, which allows multiple antennas on the same or adjacent cell sites to simultaneously transmit data to mobile devices.

There are two important considerations for cell sites and their LTE backhaul networks. The first is the need for picocells in addition to the traditional macro cells (often also called basestations or cell towers). The second involves the different synchronization needs for LTE, particularly LTE TDD and LTE-A.

Today’s Requirements

LTE implementations require picocells to deliver far more bandwidth capacity to mobile devices than 3G networks can provide. Although spectrum allocations for LTE are typically much wider (on the order of 20 MHz) than those for 3G, there still is not enough capacity for all users to receive the advertised data speeds, particularly in densely populated urban areas.

The solution is to deploy more but smaller cell sites, typically called small cells or picocells, that allocate the same spectrum to a smaller coverage area, delivering more capacity to each user. Outdoor picocells are small, low-cost devices placed on top of lampposts and traffic signals, as well as on the sides of office buildings. Also, picocells increasingly will be used for indoor coverage in large office buildings, inside malls and shopping centers, and inside sports and other event venues.

The most important difference from a network perspective for LTE TDD and LTE-A is the need for accurate phase and time synchronization of each cell site as well as between neighboring cell sites. Older (pre-LTE) wireless technologies only required an accurate frequency reference to each cell site, which could be delivered using the legacy SONET/SDH TDM-based backhaul network that was used for 2G and 3G wireless technologies.

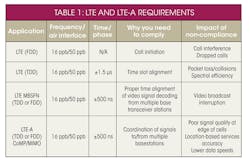

LTE, however, is based entirely on packet-based network technologies that do not natively provide synchronization. Furthermore, LTE TDD and LTE-A require time and phase synchronization in addition to an accurate frequency reference, and those requirements are very stringent. Some LTE-A modes like CoMP/MIMO and multicast-broadcast single-frequency network (MBSFN) require time synchronization down to ±500 ns (Table 1).

In the absence of a packet-based network synchronization technology, most mobile basestations that need time and phase synchronization today rely on GPS/GNSS satellite receivers to acquire an all-important signal known as 1 pps (one pulse per second). This signal is used to make the time-of-day (ToD) calculation for next-second rollover and to synthesize the fundamental source radio frequencies, as well as the time and phase references.

While GPS/GNSS can deliver both frequency and time to cell sites, it comes with its own set of problems. GPS antennas are vulnerable to damage from storms and lightning, leading to daylong outages until a technician can be dispatched to repair them. Many of the new (pico) cell sites required for providing coverage and network capacity for LTE and LTE-A will be located at street level or even indoors, where GPS reception is poor or non-existent.

There is also an increased awareness about the susceptibility of GPS antennas to jamming and spoofing. The Alliance for Telecommunications Industry Solutions (ATIS) in March 2014 filed a public policy letter with the Department of Homeland Security (DHS) highlighting increased GPS system vulnerability vis-à-vis providing accurate timing to mobile networks. ATIS is recommending further vulnerability and mitigation studies (see http://www.atis.org/legal/publicpolicy.asp).

1588’s Role

The solution to all of these problems is to recreate the reliable network-based synchronization that was available for 2G/3G TDM-based backhaul networks and further extend it from frequency to frequency and time/phase synchronization, but this time over today’s efficient, high-capacity packet-based IP/Ethernet network. The IEEE 1588 Precision Time Protocol, also often just called 1588 or PTP, can accomplish this.

Originally created to enable precision timing for the test and measurement community and within industrial automation systems, 1588 can supply real-time applications with precise ToD information, time-stamped inputs, and scheduled and/or synchronized outputs for many systems, ranging from industrial process control to Smart Grid energy distribution, mobile networks, audio-video networks, and even automotive and transportation machine-to-machine (M2M) networking.

The 1588 protocol relies on time-stamped frames that are exchanged between a timing master clock and a timing slave clock, with intermediate boundary and/or transparent clocks (TCs) to maintain the time accuracy as the 1588 packets traverse the packet network (see “What’s Behind The IEEE 1588 Protocol?”). With all the different systems and applications for 1588 timing and their distinct requirements, the actual accuracy requirements are not covered by the 1588 standard itself, but by so-called “profiles” defined by industry-specific standards organizations.
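As a rough illustration of that timestamp exchange, the sketch below applies the standard delay request-response arithmetic to one Sync / Delay_Req round trip. The class name, function names, and sample numbers are illustrative assumptions, not taken from any particular PTP stack, and the calculation assumes the path delay is the same in both directions.

```python
from dataclasses import dataclass


@dataclass
class PtpExchange:
    """Four timestamps from one Sync / Delay_Req exchange, in nanoseconds."""
    t1: int  # master sends Sync (read on the master clock)
    t2: int  # slave receives Sync (read on the slave clock)
    t3: int  # slave sends Delay_Req (read on the slave clock)
    t4: int  # master receives Delay_Req (read on the master clock)


def mean_path_delay(x: PtpExchange) -> float:
    """One-way delay estimate, assuming both directions take equally long."""
    return ((x.t2 - x.t1) + (x.t4 - x.t3)) / 2


def offset_from_master(x: PtpExchange) -> float:
    """How far the slave clock is ahead of the master (negative if behind)."""
    return ((x.t2 - x.t1) - (x.t4 - x.t3)) / 2


# Made-up numbers: the slave runs 250 ns ahead and each direction takes 1,000 ns.
example = PtpExchange(t1=0, t2=1_250, t3=5_250, t4=6_000)
print(mean_path_delay(example), offset_from_master(example))
```

The slave would subtract the computed offset from its own clock. Any error in the four timestamps, or any difference between the two path directions, feeds straight into that correction, which is why time-stamping accuracy in every network element matters.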

The International Telecommunication Union (ITU), specifically ITU-T Study Group 15, is defining the “1588 telecom profile” for wireless networks. While the telecom profile for frequency delivery using 1588 packets has been defined for quite some time, the corresponding profile for phase and time delivery is still being developed. The first of those specs, ratified in March 2014, are part of the ITU-T G.8271.1 and G.8273.2 phase and time clock specifications.

The G.8273.2 spec in particular identifies the maximum static and dynamic time error that a boundary clock in each network element in the backhaul network can introduce, so the overall network can deliver the accurate time synchronization to each cell site required for LTE TDD. G.8273.2 specifies two accuracy classes: a Class A that may not introduce more than 50-ns time error, and a more stringent Class B that has to minimize time errors to less than 20 ns.

Time errors in a boundary clock are introduced by a combination of time-stamping resolution, inaccuracy of the time-stamping points, local oscillator noise, the accuracy of the 1-pps signal delivered to the time-stamping points, and uncorrected system asymmetries. Frequency masks have been defined for both dynamic and static time errors, with any errors below 0.1 Hz adding linearly to the total time error. Maximum time error specifications for other clocks like the transparent clock and the slave clock in the basestation itself are still under study. The ITU is expected to ratify them soon.

Specifically, the ITU defined these new timing accuracy classes on a per network element basis so operators can easily mix and match equipment from different vendors and still be certain that timing synchronization budgets will be met before they install and commission their networks.

All network elements used in wireless networks including cell site gateways, switches, routers, and even microwave and millimeter-wave equipment will have to meet one of these timing accuracy classes. Otherwise, they will not meet the ITU-T requirements for delivering time and phase synchronization for wireless networks.

The entire timing budget can’t be allocated to the network equipment, though. The primary reference time clock (PRTC) itself has been allocated a 100-ns time error budget. Basestations also will introduce a time error that has not been defined yet, though we can assume that to be on the order of 50 to 100 ns as well.

Furthermore, it will be hard for installers to cut upstream and downstream fibers to exactly the same length. Fiber splices usually include loops a few feet long for ease of installation. Those loops by themselves may introduce asymmetries of 5 to 10 ns for each hop.

While the 1588 protocol theoretically could correct these static asymmetries, we would assume that measuring the exact fiber asymmetries at installation and working the results into the offset of the 1588 protocol for each link would be too cumbersome and costly. Therefore, these ~10-ns per link asymmetries must be built into the overall time error budget.
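To get a feel for where a per-link figure of this size comes from, the following sketch converts a fiber length mismatch into a one-way delay difference. The group index of 1.468 is an assumed typical value for single-mode fiber, not a number from this article, and the function name is illustrative.

```python
SPEED_OF_LIGHT_M_PER_S = 299_792_458
GROUP_INDEX = 1.468        # assumption: typical single-mode fiber
METERS_PER_FOOT = 0.3048


def delay_difference_ns(length_mismatch_ft: float) -> float:
    """Extra one-way delay when one fiber is longer than the other."""
    meters = length_mismatch_ft * METERS_PER_FOOT
    return meters * GROUP_INDEX / SPEED_OF_LIGHT_M_PER_S * 1e9


# A mismatch of a few feet between the upstream and downstream fibers.
for feet in (3, 6):
    print(f"{feet} ft mismatch -> {delay_difference_ns(feet):.1f} ns")
```

Under these assumptions, light in fiber covers roughly one foot every 1.5 ns, so a mismatch of a few feet lands in the single-digit-nanosecond range discussed above.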

To see how operators can use the new timing classes to fit their network synchronization needs, consider a practical example. Take a network of 10 Class A routers between the PRTC and an LTE eNodeB basestation. The PRTC and the eNodeB basestation each contribute 100 ns to the time error budget, while each link contributes 60 ns (50 ns from the Class A router and 10 ns from the fiber asymmetry budget).

So, the maximum total time error the network may exhibit will be 100 + 100 + (10 x 60) = 800 ns. The operator with such a network then will be able to meet the 1.5-μs LTE TDD synchronization requirement, but not the stricter 500-ns requirements for LTE-A. To meet the LTE-A requirements, this operator would have to use Class B routers instead, resulting in a maximum time error of 100 + 100 + (10 x 30) = 500 ns (see the figure).

These simple examples also illustrate why the ITU-T may define even more accurate timing classes beyond Class B in the future. China Mobile, for example, is pushing hard in the ITU to support 20 or even more hops between PRTC and the basestation, which would require a 10-ns class as well as only a 5-ns fiber asymmetry budget and possibly a tighter 50-ns specification at the eNodeB to achieve 100 + 50 + (20 x 15) = 450 ns maximum time error.
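The budget arithmetic in these examples can be reproduced with a short sketch. The class and method names are illustrative, the eNodeB contribution is the assumed figure used in the text rather than a ratified value, and the 10-ns class is the hypothetical future class mentioned above.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class TimingBudget:
    """Worst-case time error contributions, all in nanoseconds."""
    prtc_ns: int           # primary reference time clock
    basestation_ns: int    # eNodeB (assumed; not yet specified)
    per_hop_clock_ns: int  # boundary clock limit (50 = Class A, 20 = Class B)
    per_hop_fiber_ns: int  # uncorrected fiber asymmetry per link

    def per_hop_ns(self) -> int:
        return self.per_hop_clock_ns + self.per_hop_fiber_ns

    def total_ns(self, hops: int) -> int:
        """Linear worst-case sum over the whole chain."""
        return self.prtc_ns + self.basestation_ns + hops * self.per_hop_ns()

    def max_hops(self, limit_ns: int) -> int:
        """Largest hop count whose worst-case total stays within limit_ns."""
        remaining = limit_ns - self.prtc_ns - self.basestation_ns
        return max(remaining // self.per_hop_ns(), 0)


CLASS_A = TimingBudget(100, 100, 50, 10)
CLASS_B = TimingBudget(100, 100, 20, 10)
PROPOSED = TimingBudget(100, 50, 10, 5)  # hypothetical 10-ns class

LTE_TDD_LIMIT_NS = 1_500
LTE_A_LIMIT_NS = 500

print(CLASS_A.total_ns(10))   # 800
print(CLASS_B.total_ns(10))   # 500
print(PROPOSED.total_ns(20))  # 450
print(CLASS_A.max_hops(LTE_TDD_LIMIT_NS), CLASS_B.max_hops(LTE_A_LIMIT_NS))
```

Solving for the hop count rather than the total shows how little headroom remains: with these figures, a Class B chain reaches the 500-ns LTE-A limit at exactly 10 hops.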

Once the ITU fully defines the telecom profile for phase and time delivery, wireless operators will have a powerful technology available inside their networks to provide the nanosecond-accurate time synchronization required for LTE TDD and later LTE-A networks. For traditional macro cell sites, most mobile operators are planning to use GPS as the primary time reference, with 1588 as a backup.

Outside the U.S., many operators are even planning to make 1588 their primary synchronization mechanism, with GPS only as backup. For LTE indoor and outdoor picocells that are largely greenfield deployments, most operators are planning to use 1588 exclusively, not least because most picocells are deployed either at street level in urban canyons where GPS coverage is poor or inside large office buildings, malls, and venues for indoor coverage and capacity where there is no GPS coverage at all.

The Vitesse Way

Vitesse provides unique solutions to solve packet synchronization for wireless and many other networks with its VeriTime technology. VeriTime combines a best-in-class hardware-based architecture for 1588 frame generation, detection, and single-digit-nanosecond time-stamp accuracy with a PTP software suite for 1588 grand master clocks, slave clocks, and boundary and transparent clocks.

VeriTime technology is integrated into Vitesse SynchroPHY 10/100/1000Base-T/X Gigabit physical layers (PHYs), 1/10G Optical 10-Gigabit PHYs, 10G optical transport network/forward error correction (OTN/FEC) PHYs, Vitesse ViSAA Ethernet switches for carrier, enterprise, and industrial applications, Vitesse CEServices software packages, and Vitesse carrier-grade turnkey original design manufacturer (ODM) systems solutions.

VeriTime is the only solution in the market today that helps systems vendors design switches, routers, gateways, wireless basestations, indoor and outdoor picocells, and microwave, millimeter-wave, and passive optical network equipment that meet the stringent synchronization requirements of next-generation Time-Division LTE (TD-LTE) and LTE-A mobile networks. Most importantly, systems designed with Vitesse VeriTime fully meet the new timing accuracy class requirements currently being standardized by the ITU-T.

VeriTime borrows from a large toolbox of patented techniques, patent-pending techniques, and trade secrets, such as:

• Time-stamping engine with single-digit-nanosecond accuracy and exceptional long-term stability

• Independent timing domains for 1588 and Synchronous Ethernet to avoid drift in holdover situations

• Sophisticated multi-protocol classification engines for 1588 frames in all relevant network protocol implementations, including multiprotocol label switching (MPLS)

• Distributed time-stamping support in Vitesse PHYs and PTP software to enable time stamping directly at the port level, while seamlessly interworking with existing timing cards

• Efficient in-band collection and distribution of timestamps to eliminate CPU overhead or dedicated timestamp collection channels

• Distributed time-stamping support in Vitesse switches, PHYs, and PTP software to improve 1588 accuracy across microwave and millimeter-wave links as well as over passive optical networks

• Predictor algorithms to compensate for packet delay variations (PDVs) introduced by RF modems, OTN/FEC framers, and encryption engines

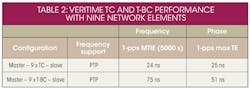

To demonstrate the capabilities of Vitesse VeriTime technology, we put together a network of nine switch/routers connecting a grand master clock sending out 1588 frames and an ordinary clock recovering timing (both frequency and time) from the network. These results have been published in multiple contributions to the ITU. Table 2 shows results for maximum time interval error (MTIE), relevant for frequency accuracy and stability, as well as maximum time error (relevant for time/phase synchronization) at the slave clock with respect to the grand master clock.

As illustrated, the maximum time error after going through all nine switch/routers is no more than 25 ns for transparent clocks and no more than 51 ns for boundary clocks using VeriTime PHYs or switches, pointing to per network element error of no more than 3 ns for transparent clock mode and no more than 7 ns for boundary clock mode. More details and test results are reported in the Vitesse whitepaper, “Precise Timing for Base Stations in the Evolution to LTE.”

In summary, mobile network operators are now asking for all network equipment as well as macrocells and picocells to support IEEE 1588 boundary and transparent clock modes, with both adhering to maximum time errors of either 50 ns or 20 ns. The ITU-T has ratified these time error classes as part of the telecom profile for time and phase delivery, G.827x.

With these new specs, operators will be able to design networks with packet-based synchronization that can back up GPS/GNSS synchronization schemes for macrocells. They also will be able to completely eliminate GPS for indoor and outdoor picocell deployments. Vitesse’s VeriTime total hardware and software timing solution is the only solution today that can guarantee that network equipment vendors will be able to meet these new requirements with minimal incremental equipment cost.

Martin Nuss is the CTO and vice president, technology and strategy, of Vitesse Semiconductor. He also serves on the board of directors for the Alliance for Telecommunications Industry Solutions (ATIS). He is a fellow of the Optical Society of America and a member of IEEE as well. He has a doctorate in applied physics from the Technical University in Munich, Germany.
