3-nm Optical DSP Meets AI's Need for Speed in Data Centers

In data centers, pluggable optical transceivers are critical for managing the massive flow of data required by AI, converting electrical signals into photons, slinging them between server racks, and translating them back into electricity.

At the heart of each of these optical transceivers is a high-performance digital signal processor (DSP). As AI training and inferencing grow increasingly bandwidth- and power-hungry, chipmakers are upgrading these optical DSPs to run faster and more efficiently.

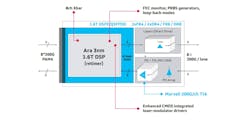

Marvell Technology introduced a PAM4 DSP that could reduce power by more than 20% for 1.6-Tb/s optical transceivers, due in part to the use of a more advanced 3-nm manufacturing process. The Ara chip integrates eight 200-Gb/s electrical lanes and eight 200-Gb/s optical channels, enabling up to 1.6 Tb/s of throughput in a compact, standard module form factor. According to the company, it also integrates the laser modulator drivers to reduce PCB design complexity, power consumption, and cost.
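The lane arithmetic is simple, and a short Python sketch makes it concrete. It assumes each 200-Gb/s lane uses PAM4 (four amplitude levels, 2 bits per symbol) with the Gray-coded level mapping common in Ethernet. It also ignores FEC and framing overhead, so the symbol rate it reports is a lower bound rather than Ara's actual line rate. The function names are illustrative.

```python
# Minimal sketch: how eight 200-Gb/s PAM4 lanes add up to 1.6 Tb/s.
# Assumptions: PAM4 carries 2 bits per symbol using the Gray mapping common
# in Ethernet (00->0, 01->1, 11->2, 10->3), and the rates are net rates that
# ignore FEC and framing overhead, so the real rate on the wire is higher.

LANES = 8
LANE_RATE_GBPS = 200
BITS_PER_PAM4_SYMBOL = 2

# Gray-coded mapping: adjacent amplitude levels differ by exactly one bit,
# so the most likely slicer error (one level off) flips only one bit.
GRAY_PAM4 = {(0, 0): 0, (0, 1): 1, (1, 1): 2, (1, 0): 3}


def aggregate_throughput_gbps(lanes: int, lane_rate_gbps: float) -> float:
    """Total module throughput if every lane runs at the same rate."""
    return lanes * lane_rate_gbps


def pam4_symbol_rate_gbaud(lane_rate_gbps: float) -> float:
    """Symbol rate needed on one lane (net rate, no FEC overhead)."""
    return lane_rate_gbps / BITS_PER_PAM4_SYMBOL


def encode_pam4(bits: list[int]) -> list[int]:
    """Map an even-length bit stream onto PAM4 levels 0..3."""
    if len(bits) % 2:
        raise ValueError("PAM4 needs an even number of bits")
    return [GRAY_PAM4[(bits[i], bits[i + 1])] for i in range(0, len(bits), 2)]


if __name__ == "__main__":
    print(aggregate_throughput_gbps(LANES, LANE_RATE_GBPS))  # 1600
    print(pam4_symbol_rate_gbaud(LANE_RATE_GBPS))            # 100.0
    print(encode_pam4([0, 0, 0, 1, 1, 1, 1, 0]))             # [0, 1, 2, 3]
```

Doubling bits per symbol is what lets PAM4 reach 200 Gb/s per lane at roughly half the symbol rate that simple on-off signaling would need.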

Marvell said Ara, which is based on its 200-Gb/s SerDes technology, is designed for octal small-form-factor pluggable (OSFP) and quad small-form-factor pluggable double density (QSFP-DD) modules, which are widely used in Ethernet, InfiniBand, and other AI compute fabrics.

“Ara sets a new industry standard by leveraging advanced 3-nm technology to deliver significant power reduction, driving the volume adoption of 1.6-Tb/s connectivity for AI infrastructure,” explained Xi Wang, Marvell's VP of product marketing for optical connectivity.

Stay Connected: The Role of Pluggable Optics in AI Data Centers

Marvell introduced the Ara chip as AI drives up demand for high-bandwidth connectivity in data centers.

To train the most advanced large language models (LLMs), hyperscalers and other technology giants are building clusters of thousands of graphics processing units (GPUs) or other AI chips, along with massive amounts of memory. As a result, these companies need faster and denser forms of connectivity to move the huge amounts of data used by generative AI, both within server racks and between them.

But slinging terabits of data per second between these racks of servers over electrical interconnects is impractical because of the power required to push signals down several meters of copper cable. And because electrical links consume more power the farther they must carry data, keeping everything cool would also be expensive. As a result, optical interconnects are widely used for rack-to-rack and server-to-switch connectivity in data centers, using pluggable transceivers that convert electrical signals into light, according to Marvell.

While moving data over light is more efficient than using electricity, the DSPs at the heart of these pluggable modules require a relatively large amount of power to run at high bandwidths. For instance, NVIDIA estimates that all optical transceivers in a data center containing 400,000 GPUs will burn through as much as 40 MW of power. Dissipating all of that heat before it takes a toll on the system's performance is not a trivial challenge.

By upgrading to the 3-nm process node and integrating the laser drivers directly on the chip, Marvell said the Ara DSP can deliver more than 20% power savings per pluggable module compared to its previous generation of optical DSPs.
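To put the two figures side by side, here is a back-of-envelope calculation. It treats NVIDIA's 40-MW estimate as the baseline and applies a flat 20% saving to every module. That is a simplifying assumption: Marvell's comparison is against its own previous-generation DSPs, and real deployments mix module types and reaches.

```python
# Back-of-envelope sketch of the transceiver power figures quoted above.
# Assumptions (illustrative only): NVIDIA's 40-MW estimate for a
# 400,000-GPU data center, and a flat 20% saving applied to every module.

TRANSCEIVER_POWER_MW = 40.0   # NVIDIA estimate for all optical transceivers
GPU_COUNT = 400_000
SAVINGS_FRACTION = 0.20       # "more than 20%" treated as exactly 20%


def watts_per_gpu(total_mw: float, gpus: int) -> float:
    """Average transceiver power attributable to each GPU, in watts."""
    return total_mw * 1e6 / gpus


def power_after_savings_mw(total_mw: float, savings_fraction: float) -> float:
    """Total transceiver power if every module saves the same fraction."""
    return total_mw * (1.0 - savings_fraction)


if __name__ == "__main__":
    after = power_after_savings_mw(TRANSCEIVER_POWER_MW, SAVINGS_FRACTION)
    print(watts_per_gpu(TRANSCEIVER_POWER_MW, GPU_COUNT))  # 100.0
    print(after)                                            # 32.0
    print(TRANSCEIVER_POWER_MW - after)                     # 8.0
```

Under these assumptions, the optics average about 100 W per GPU, and a 20% cut would free up roughly 8 MW. Because Marvell quotes savings of more than 20%, that figure is a floor for this idealized case rather than a prediction.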

Marvell said these improvements reduce thermal issues and system-level cooling costs, which are becoming a more significant technical challenge given that these modules must fit into a form factor roughly the size of a USB stick. Ara is designed to drive 800-Gb/s and 1.6-Tb/s ports on the front or "faceplate" of networking switches using standard PCB trace lengths, without requiring special arrangements for cooling or PCB redesigns to preserve signal integrity.

The pluggable transceiver market is growing fast, according to Dell’Oro Group. The market research firm expects shipments of 800-Gb/s and 1.6-Tb/s transceivers to grow at a pace of more than 35% per year through 2028.
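Compounded, a 35% annual growth rate adds up quickly. The sketch below assumes a 2024 starting point (four years of growth through 2028), which the forecast as quoted does not specify.

```python
# Sketch: what "more than 35% per year through 2028" compounds to.
# Assumption: growth is measured from a 2024 baseline, i.e., four years;
# the forecast as quoted does not state the base year.


def growth_multiple(annual_rate: float, years: int) -> float:
    """Factor by which a quantity grows at a constant annual rate."""
    return (1.0 + annual_rate) ** years


if __name__ == "__main__":
    print(round(growth_multiple(0.35, 4), 2))  # 3.32
```

Under that assumption, annual shipments would grow by a factor of roughly 3.3 by 2028, and by more if growth exceeds 35%.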

Ara: Addressing the Need for High-Bandwidth AI Connectivity

Marvell said the energy efficiency of the Ara DSP gives system designers the ability to obtain high-bandwidth connectivity while remaining within tight power envelopes critical to today’s data centers.

The chip is designed to support high-density 200-Gb/s I/O interfaces within networking switches, network interface cards (NICs), and processors, while ensuring backward compatibility with prior generations. The PAM4 optical DSP supports a wide range of optical module formats, including 1.6T DR8, DR4.2, 2xFR4, FR8, and LR8, making it adaptable for many different data center topologies and AI compute node interconnects.

Ara features concatenated forward error correction (FEC) that meets the latest Ethernet and InfiniBand standards, improving bit-error-rate (BER) tolerance under harsh link conditions. It also supports 8 × 8 any-to-any crossbar switching for routing flexibility across its high-speed I/Os. Moreover, Marvell said it co-designed a transimpedance amplifier (TIA) chip to complement the Ara DSP, delivering better signal-to-noise ratio and linearity for optical lanes carrying data at up to 200 Gb/s.
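Conceptually, an any-to-any crossbar lets any input lane be steered to any output lane. The Python model below is hypothetical: the `select` table and the function names are illustrative and do not reflect Marvell's actual configuration interface.

```python
# Hypothetical model of an 8 x 8 any-to-any lane crossbar.
# Assumption: each output lane can be fed by any input lane, configured by
# a table where select[out] = in. This illustrates the concept only; it is
# not Marvell's configuration interface.

N_LANES = 8


def route(inputs: list[str], select: list[int]) -> list[str]:
    """Return what each output lane carries for a given select table."""
    if len(inputs) != N_LANES or len(select) != N_LANES:
        raise ValueError(f"expected {N_LANES} inputs and {N_LANES} selects")
    if any(not 0 <= s < N_LANES for s in select):
        raise ValueError("lane index out of range")
    return [inputs[s] for s in select]


def is_one_to_one(select: list[int]) -> bool:
    """True if every input lands on exactly one output (a permutation)."""
    return sorted(select) == list(range(N_LANES))


if __name__ == "__main__":
    host_lanes = [f"h{i}" for i in range(N_LANES)]
    # Reverse the lane order, e.g., to undo a lane swap in the PCB layout.
    reversed_select = list(range(N_LANES - 1, -1, -1))
    print(route(host_lanes, reversed_select))
    # ['h7', 'h6', 'h5', 'h4', 'h3', 'h2', 'h1', 'h0']
    print(is_one_to_one(reversed_select))  # True
```

A reversed mapping like this is the kind of lane swap that can spare a board designer from crossing traces on the PCB. The `is_one_to_one` check distinguishes a pure lane permutation from a table that fans one input out to several outputs.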

The Ara processor also features real-time diagnostics, including signal-to-noise ratio (SNR) monitoring, eye diagram analysis, and loopback capabilities for both the host and line-side interfaces.
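Loopback testing typically works by sending a known pseudo-random pattern through the link, looping it back, and counting mismatched bits. The sketch below assumes a PRBS7 pattern generated by a 7-bit linear-feedback shift register (LFSR). Marvell has not said which test patterns Ara uses, and the injected errors only mimic a noisy link.

```python
# Sketch of a loopback bit-error check.
# Assumptions: the test pattern is PRBS7 (polynomial x^7 + x^6 + 1), a common
# choice for link testing, and the "received" bits stand in for data returned
# by a loopback. Neither detail is specified for Ara.


def prbs7(n_bits: int, seed: int = 0x7F) -> list[int]:
    """Generate n_bits of PRBS7 from a 7-bit Fibonacci LFSR."""
    state = seed & 0x7F
    if state == 0:
        raise ValueError("seed must be nonzero or the LFSR locks up")
    bits = []
    for _ in range(n_bits):
        new_bit = ((state >> 6) ^ (state >> 5)) & 1  # taps at stages 7 and 6
        state = ((state << 1) | new_bit) & 0x7F
        bits.append(new_bit)
    return bits


def bit_error_rate(sent: list[int], received: list[int]) -> float:
    """Fraction of positions where the looped-back bit differs."""
    if len(sent) != len(received) or not sent:
        raise ValueError("sequences must be nonempty and equal length")
    errors = sum(a != b for a, b in zip(sent, received))
    return errors / len(sent)


if __name__ == "__main__":
    tx = prbs7(10_000)
    rx = list(tx)
    rx[10] ^= 1   # inject two single-bit errors to mimic a noisy link
    rx[500] ^= 1
    print(bit_error_rate(tx, rx))  # 0.0002
```

In a real link, the measured BER would be compared against the threshold the FEC can correct; here the pattern repeats every 127 bits, which keeps it easy to resynchronize on the receiving side.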

Bob Wheeler, analyst-at-large for market research firm LightCounting, estimates that shipments of the PAM4 DSPs at the heart of these optical interconnects will triple by 2029, reaching 127 million units per year. He said these optical DSPs will “remain the primary optical technology for connecting assets inside data centers for the foreseeable future.” He added that Marvell’s Ara chip indicates that “PAM4 technology continues to evolve to meet the challenges of AI infrastructure.”
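The LightCounting estimate also implies a starting volume and a growth rate. Assuming a 2024 baseline (the base year is not stated in the estimate as quoted), a quick calculation gives both.

```python
# Sketch: unpacking "triple by 2029, reaching 127 million units per year".
# Assumption: the baseline year is 2024, i.e., five years of growth to 2029;
# the estimate as quoted does not name the base year.


def implied_baseline(final_units: float, multiple: float) -> float:
    """Starting volume implied by a final volume and a growth multiple."""
    return final_units / multiple


def implied_cagr(multiple: float, years: int) -> float:
    """Constant annual growth rate that yields the multiple over the period."""
    return multiple ** (1.0 / years) - 1.0


if __name__ == "__main__":
    print(round(implied_baseline(127e6, 3) / 1e6, 1))  # 42.3 (million units)
    print(round(100 * implied_cagr(3, 5), 1))           # 24.6 (% per year)
```

Under that assumption, the forecast starts from roughly 42 million units and grows about 25% per year. That is slower than the more-than-35% pace Dell'Oro projects for 800-Gb/s and 1.6-Tb/s transceivers, although the two forecasts count different things.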

Given the rising demand for high-bandwidth connectivity in data centers, Ara has the potential to be a key building block of the AI boom. But other innovations in optical connectivity are also in the pipeline.

Marvell and many of its rivals across the semiconductor industry are also developing “co-packaged optics” technology that can be integrated on the same substrate as their networking switch chips, eliminating the need for pluggable optics.

The company also plans to co-package silicon photonics chiplets with GPUs and other AI chips, leveraging high-speed SerDes, die-to-die interfaces, and 2.5D and 3D advanced packaging technologies.

By placing optics directly inside the package, GPUs and other AI chips can connect to each other at higher bandwidths and over longer distances. As a result, AI chips in different server racks could communicate with each other with optimized latency and power dissipation compared with the passive copper cables that link chips in the same rack and the copper traces that connect them on the PCB.

Samples of the Ara PAM4 optical DSP are available to select customers, with full production expected later in 2025.

About the Author

James Morra

Senior Editor

James Morra is the senior editor for Electronic Design, covering the semiconductor industry and new technology trends, with a focus on power electronics and power management. He also reports on the business behind electrical engineering, including the electronics supply chain. He joined Electronic Design in 2015 and is based in Chicago, Illinois.