11 Myths About Using Formal Verification


What you’ll learn:

- How formal verification is able to find bugs before signoff.

- How formal verification mathematically and exhaustively proves that a chip design meets a set of assertions.

- Why formal techniques are central to the successful design, verification, and implementation of today’s chips.

The advantages of formal verification are well-known and accepted in semiconductor development. This wasn’t always the case; a few decades ago, formal technology was widely regarded as an exotic technique requiring “magic” to be used successfully on a real project. Over this span, many success stories of truly scary bugs found before signoff have helped to raise awareness of—and confidence in—formal verification.

The ability to mathematically prove exhaustively that a chip design meets a set of assertions is a clear contrast with simulation, which can’t come up with proof of bug absence. If proof can’t be achieved due to legal design scenarios that violate the assertions, the formal tool presents these as counterexamples and provides information to help designers debug them. Users provide constraints that keep the formal analysis within legal bounds, ensuring that counterexamples are real failure scenarios that could occur in post-silicon chip usage.
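To make the roles of proofs, counterexamples, and constraints concrete, here is a minimal sketch in Python. It is not how a commercial formal tool works internally. The toy FIFO occupancy counter, its depth, and the names `step`, `legal`, and `check` are all assumptions made up for illustration. The search visits every reachable state, so a `None` result plays the role of a proof and a returned input sequence plays the role of a counterexample.

```python
from collections import deque
from itertools import product

DEPTH = 2  # hypothetical FIFO depth

def step(count, push, pop):
    """Next-state function of a toy 2-bit occupancy counter (wraps at 4)."""
    return (count + push - pop) % 4

def assertion(count):
    """Property to prove: occupancy never exceeds the FIFO depth."""
    return count <= DEPTH

def legal(count, push, pop):
    """Environment constraint: no push when full, no pop when empty."""
    return not (push and count == DEPTH) and not (pop and count == 0)

def check(constraint):
    """Breadth-first search over every reachable state.

    Returns None if the assertion holds in all reachable states,
    otherwise the shortest (push, pop) sequence reaching a violation.
    """
    start = 0
    trace_to = {start: []}
    queue = deque([start])
    while queue:
        state = queue.popleft()
        if not assertion(state):
            return trace_to[state]
        for push, pop in product((0, 1), repeat=2):
            if not constraint(state, push, pop):
                continue  # stimulus outside the legal bounds
            nxt = step(state, push, pop)
            if nxt not in trace_to:
                trace_to[nxt] = trace_to[state] + [(push, pop)]
                queue.append(nxt)
    return None

proof = check(legal)                                  # constrained run
spurious = check(lambda count, push, pop: True)       # no constraints
print("constrained:", proof)
print("unconstrained:", spurious)
```

With the constraint in place the search finds no violation. Without it, the search reports a pop from an empty FIFO, a scenario the environment would never produce, which is why accurate constraints matter.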

This all sounds great, so why isn’t everyone running formal verification? It’s used successfully every day by thousands of individuals at hundreds of chip and system companies, but some designers and verification engineers are still reluctant. This may be partly due to some persistent myths about formal technology that make it seem too hard or too expensive. This article examines these myths and explains why they should not be cause for concern.

1. You need a PhD to use formal verification.

This myth was arguably true for first-generation formal tools, which were designed for academic purposes. They required learning an obscure mathematical notation to specify assertions and constraints. The tools needed lots of manual guidance, so most users were, in fact, professors and PhD students specializing in formal technology.

Consider the load value axiom for the RISC-V weak memory model. It says that for threads i, j, and k, if thread i performs a STORE, followed by thread j performing another STORE, followed by thread k performing a LOAD, all to the same address, then the value the LOAD retrieves from memory is the one written by the most recent STORE. This can be expressed in mathematically precise notation, as shown in Figure 1. However, the average design or verification engineer may struggle to read such notation, which is friendly to formal-methods PhDs but to few others.

Much has changed in recent years. Assertions and constraints are usually specified with SystemVerilog Assertions (SVA), a subset of the SystemVerilog language that designers and verification engineers already know and use. Formal tools have become smarter and more independent, and less reliant on user expertise. Many now offer visualization and better hints for debugging counterexamples or helping achieve full proofs. No PhD is required.

There’s also the whole category of formal applications (apps), which generally don’t require users to write any assertions at all. For example, a clock-domain-crossing (CDC) tool can automatically determine where crossings occur in a chip and what assertions must be proven to guarantee correct operation. The user only needs to provide some information on clocks, most of which is already available in the constraint files used by synthesis and layout tools.
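The first step of such an app can be sketched in a few lines of Python. This is only an illustration under stated assumptions: the flop names, clock names, and the flattened "netlist" below are hypothetical, and a real CDC tool also performs structural and formal checks on each crossing that this sketch omits.

```python
# Hypothetical flattened netlist: flop name -> clock domain.
FLOP_CLOCK = {
    "tx_data": "clk_a",
    "tx_valid": "clk_a",
    "sync_ff1": "clk_b",
    "sync_ff2": "clk_b",
    "rx_data": "clk_b",
}

# Hypothetical flop-to-flop connections as (driver, receiver) pairs.
PATHS = [
    ("tx_valid", "sync_ff1"),
    ("sync_ff1", "sync_ff2"),
    ("tx_data", "rx_data"),
]

def find_crossings(flop_clock, paths):
    """Return every path whose driver and receiver use different clocks."""
    return [(src, dst) for src, dst in paths
            if flop_clock[src] != flop_clock[dst]]

crossings = find_crossings(FLOP_CLOCK, PATHS)
for src, dst in crossings:
    print(f"crossing: {src} ({FLOP_CLOCK[src]}) -> {dst} ({FLOP_CLOCK[dst]})")
```

Each reported crossing is a place where the tool would go on to generate and prove assertions. The user supplied only the clock information.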

2. Formal verification is hard because you need specifications exclusive to formal.

Specifications aren’t exclusive to formal; other forms of verification, such as simulation and emulation, need them too. In SoC emulation, the firmware and driver stack already provides the environment that drives stimulus into the chip, and checkers rely on requirements to establish what must happen when the tests run. Without specifications, verification engineers can’t write directed tests for simulation, build Universal Verification Methodology (UVM) testbenches, or define functional coverage.

Formal methods are noticeably more sensitive to specifications because the effects of poorly defined requirements are felt more severely. Formal tests, specified as assertions, constraints, and covers, can produce unexpected results because formal tools drive all possible combinations of stimulus patterns. If the constraints captured from the requirements aren’t accurate, the tool may drive spurious stimuli.

In many cases, the act of deriving requirements for formal verification from specifications can expose bugs. In fact, a good specification is a hidden bargain for successful formal verification (Fig. 2).

3. You can’t scale formal techniques to large designs.

This is another myth that was true for earlier generations of formal technology; users were limited to analyzing small design blocks. Today’s formal tools have far more capacity, and many are able to run in distributed mode on a server farm or the cloud. Formal techniques and methodologies have scaled up as well.

Designers and verification engineers routinely apply formal verification to large, complex subsystems, including verifying entire multi-threaded 64-bit processors end-to-end with formal. Figure 3 shows an example of a bug caught by the Axiomise abstraction-based solution in a highly parameterized network on chip (NoC) with over 1 billion gates (338 million flip-flops).

Formal apps may have even more capacity since they’re focused on a single task. CDC analysis, for example, is always run on the full chip to check the entire clock network.

4. Formal proofs take a long time to converge.

This may happen in some cases, especially when the formal testbenches weren’t designed with performance in mind. However, in most cases, formal properties converge very quickly.

Naturally, the run-time of formal tools depends on design size, design complexity, and the number of assertions and constraints. There are several ways to manage the formal process to keep run-times reasonable. Running incrementally as the design grows and running in a distributed mode both help.

The Axiomise formal-verification training shows users how to apply a range of powerful abstractions to make the analysis mathematically simpler. An exhaustive analysis is, by its very nature, a big task, but users can do a lot to improve efficiency.
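A back-of-the-envelope calculation shows why abstraction pays off. The sketch below is a generic illustration of data abstraction, not the specific abstractions taught by Axiomise. It assumes a hypothetical FIFO whose control logic never inspects the data values, so that the data width can be shrunk for the proof. The function name and parameters are invented for this example.

```python
def fifo_states(depth, width):
    """Count the distinct contents of a FIFO with up to `depth` entries,
    each entry holding a `width`-bit value."""
    values = 2 ** width
    return sum(values ** k for k in range(depth + 1))

full = fifo_states(depth=4, width=8)        # data modeled at full width
abstracted = fifo_states(depth=4, width=1)  # data abstracted to a single bit

print("full-width states:", full)
print("abstracted states:", abstracted)
```

Under these assumptions the abstraction cuts the state count from billions to a few dozen. Whether such a reduction is sound depends on the design, which is where methodology and training come in.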

5. Formal techniques are only useful for building proofs.

This myth also derives from academic formal tools, where the focus was entirely on achieving full proofs. While full proofs provide the utmost confidence in design correctness, formal verification also adds value by finding tough, corner-case bugs such as the example in Figure 4.

The waveform in Figure 5 shows a bug caught in the Ibex RISC-V core using the Axiomise formal-verification solution. The bug appears only if the debug request arrives in the same clock cycle in which the controller FSM is in the DECODE state; it doesn’t show up in any other state. This precise timing makes the bug very hard to catch with dynamic simulation, where controllability of stimulus and exhaustive coverage would be a major challenge.

6. If you have run the simulation with 100% coverage, you don’t need formal techniques.

As noted earlier, formal verification is great at finding corner-case bugs missed by simulation or emulation. In addition, this myth rather overstates the value of coverage metrics. They are extremely valuable in identifying parts of the design not yet exercised, since there’s no chance of finding all bugs while parts of the design remain untested.

However, also as noted earlier, simulation can’t establish exhaustive mathematical proofs. Even 100% functional coverage doesn’t guarantee no bug escapes—it just confirms the exercise of the parts of the design covered by the selected metrics. The formal analysis will consider all possible behavior and is highly likely to find additional bugs.
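A small Python sketch illustrates the gap between coverage and correctness. Everything in it is hypothetical: a toy 4-bit saturating adder with a deliberately planted off-by-one bug, two directed tests, and a reference model. It uses statement and branch coverage as the metric, which is an assumption made for this example.

```python
from itertools import product

WIDTH = 4
MAX = (1 << WIDTH) - 1  # largest 4-bit value, 15

def sat_add(a, b):
    """Toy 4-bit saturating adder with a planted off-by-one bug."""
    total = a + b
    if total > MAX + 1:  # bug: the comparison should be `total > MAX`
        return MAX
    return total & MAX

def reference(a, b):
    """Specification: add, then clamp to the 4-bit maximum."""
    return min(a + b, MAX)

# These two directed tests execute every statement and both branch
# outcomes of sat_add, and both of them pass.
directed_tests = [(1, 2), (15, 15)]
tests_pass = all(sat_add(a, b) == reference(a, b) for a, b in directed_tests)

# Exhaustive comparison over all 256 input pairs.
failures = [(a, b) for a, b in product(range(MAX + 1), repeat=2)
            if sat_add(a, b) != reference(a, b)]

print("directed tests pass:", tests_pass)
print("number of failing input pairs:", len(failures))
```

The directed tests reach full coverage of this function and still miss every input pair that sums to exactly 16. The exhaustive comparison, which is closer in spirit to what a formal tool does, finds all of them.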

7. Formal techniques are only useful for finding corner-case bugs.

Many formal users swear by formal for bug hunting, sometimes to such an extent that their management believes that formal is only good for bug hunting. However, one of the greatest benefits of formal is in establishing that bugs do not exist in the design with respect to the formally proven requirements.

Consider RISC-V, for example. Many processors previously verified with simulation turn out to have bug escapes that are then caught by formal. Once the bug fixes have been applied, formal can prove that no bugs remain with respect to the verified properties, because those properties are proven on all reachable states of the design (Fig. 6).

It’s certainly true that there’s no better demonstration of formal power than finding a deep, scary bug that would have required a chip turn. A verification engineer saying “we never would have found that in simulation” quickly turns skeptics into believers in formal.

But formal verification can find all sorts of bugs, including those typically uncovered in simulation, more rapidly. For this reason, today’s chip projects often contain multiple blocks, some of them quite large, verified formally without any block-level simulation.

8. Once you have applied formal techniques, you don’t need to simulate.

Typically, every formal-verification environment uses constraints to describe the interfaces. These constraints need to be validated in simulation to check if they were modeled and interpreted correctly for formal verification.
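One common way to do this is to reuse each formal constraint as a checker in simulation. The Python sketch below illustrates the idea under stated assumptions: the valid/ready handshake rule, the function names, and the two traces are hypothetical, and in a real flow the rule would typically be an SVA property rather than Python.

```python
def valid_held_until_ready(prev, curr):
    """Assumed interface rule: if valid was high without ready in the
    previous cycle, valid must still be high in the current cycle."""
    prev_valid, prev_ready = prev
    curr_valid, _ = curr
    return bool(not (prev_valid and not prev_ready) or curr_valid)

def check_trace(trace, rule):
    """Return the cycle indices at which the rule is violated."""
    return [i for i in range(1, len(trace))
            if not rule(trace[i - 1], trace[i])]

# Hypothetical (valid, ready) samples captured from two simulation runs.
good_trace = [(0, 0), (1, 0), (1, 0), (1, 1), (0, 0)]
bad_trace = [(0, 0), (1, 0), (0, 0), (1, 1)]

print("good trace violations:", check_trace(good_trace, valid_held_until_ready))
print("bad trace violations:", check_trace(bad_trace, valid_held_until_ready))
```

A violation in simulation means the neighboring block does not behave as the formal environment assumed, so any proofs that relied on that constraint need to be revisited.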

Also, formal is usually applied early in the flow to get the maximum value from shifting verification left. By the time the design has matured and more blocks have been coded, some of the interface constraints may no longer be valid, so they must be re-validated in simulation.

Furthermore, simulation and emulation are valuable in finding bugs related to hardware-software interactions, which occur only when the software is running on embedded or host processors. Similarly, bugs along the analog-digital interface may only be found when running mixed-signal simulations.

9. Formal techniques don’t offer any coverage metrics, so it’s hard to know if you have done enough.

This is manifestly untrue since proofs provide one form of coverage metric. Knowing that 100% of the assertions in a design can never be violated is clearly a strong statement.

Moreover, modern formal tools now produce a code-coverage view tied to the assertions proven in formal (Fig. 7). It shows which lines of design code were activated during the formal proof.

Previously, formal tools were used to evaluate code coverage without any checkers in place. Even then, they could provide insights into unreachable and dead code, possibly resulting from conflicting design code or configurations. Formal tools are also used extensively on code-coverage holes in UVM environments, either proving that a hole is truly unreachable or exposing a coverage gap in the UVM testbench.
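Unreachability analysis can be illustrated with a short Python sketch. The controller FSM below, including its state and input names, is hypothetical, and a real tool works on the RTL rather than on a hand-written transition table.

```python
from collections import deque

# Hypothetical controller FSM: state -> {input: next_state}.
TRANSITIONS = {
    "IDLE":    {"start": "FETCH", "none": "IDLE"},
    "FETCH":   {"none": "DECODE"},
    "DECODE":  {"none": "EXECUTE"},
    "EXECUTE": {"none": "IDLE"},
    "FLUSH":   {"none": "IDLE"},  # no transition ever enters FLUSH
}

def reachable(start):
    """Collect every state reachable from `start` by breadth-first search."""
    seen = {start}
    queue = deque([start])
    while queue:
        state = queue.popleft()
        for nxt in TRANSITIONS[state].values():
            if nxt not in seen:
                seen.add(nxt)
                queue.append(nxt)
    return seen

dead_states = sorted(set(TRANSITIONS) - reachable("IDLE"))
print("unreachable states:", dead_states)
```

Because the search is exhaustive, a state it never visits can be excluded from the coverage goal with confidence. A state that simulation missed but the search reaches is a genuine coverage gap.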

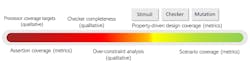

The six-dimensional coverage flow developed by Axiomise describes how coverage for formal can be computed both qualitatively and quantitatively (Fig. 8).

10. Simulation and formal verification can’t be combined.

As discussed earlier, the two verification approaches are complementary. Each can find certain types of bugs that the other likely will not. No chip project runs one without the other. Think of this as making interface assumptions to guarantee bug absence for blocks in formal verification, and then validating those assumptions in simulation to close the loop.

In addition, using formal to establish coverage gaps in simulation is a great example of combining the two technologies. Many project-management tools that track coverage results over time gather metrics from both simulation and formal verification to present a unified view of verification progress. This helps to convince the boss that the team is meeting the requirements of metrics-driven verification.

11. Formal techniques are only useful for functional verification.

The general concepts of assertions, constraints, exhaustive mathematical analysis, proofs, and counterexamples show up in areas of chip development beyond checking functional correctness.

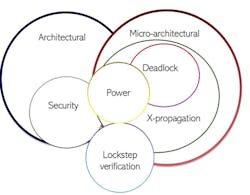

Today, formal tools are extensively deployed for verifying architectural requirements, CDCs, connectivity, power, deadlock, micro-architectural functional requirements, safety, security, and X-propagation (Fig. 9).

A recent example presented at DAC 2021 showed how formal verification could be used to find security vulnerabilities (confidentiality, integrity, and availability) in RISC-V cores and rank them with a vulnerability score. The greatest challenge with security is dealing with unknown attack scenarios. This is where formal really shines as it introduces all sorts of input stimuli in an attempt to be exhaustive, finding scenarios that designers would normally never consider.
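As a rough illustration of how exhaustive stimulus helps with confidentiality, the Python sketch below compares two copies of a design that receive the same public input but different secrets. This is a generic technique and not a reconstruction of the DAC 2021 work. The two toy designs, the 3-bit value range, and all names are assumptions made for this example.

```python
from itertools import product

BITS = 3
VALUES = range(1 << BITS)

def safe_design(public, secret):
    """Output depends on the public input only."""
    return (public + 1) % (1 << BITS)

def leaky_design(public, secret):
    """Planted flaw: for one public value, the low bit of the secret
    reaches the output."""
    if public == 5:
        return secret & 1
    return (public + 1) % (1 << BITS)

def find_leak(design):
    """Search all (public, secret_a, secret_b) triples for a case where
    changing only the secret changes the observable output."""
    for public, secret_a, secret_b in product(VALUES, repeat=3):
        if design(public, secret_a) != design(public, secret_b):
            return public, secret_a, secret_b
    return None

print("safe design leak:", find_leak(safe_design))
print("leaky design leak:", find_leak(leaky_design))
```

Nobody had to guess that the public value 5 was special. The exhaustive search found the attack scenario on its own, which is the property that makes formal attractive for security work.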

The act of deploying formal forces designers and architects to think of exploiting vulnerabilities during early stages of architecture development, avoiding any ugly surprises downstream.

Formal techniques are central to the successful design, verification, and implementation of today’s chips. With the 11 myths dispelled, no one should hesitate for a second about embracing formal verification and other formal technology.