Today’s extremely fine process technologies make it possible to integrate entire systems on a single chip, the so-called system-on-a-chip (SoC). However, the complexity of these processes makes mask sets extraordinarily expensive: New developments like multiple patterning require several masks for a layer that once needed only one.

It’s long been said that 70% of the effort in bringing a chip to market goes into verification. That share will likely climb as single chips become capable of hosting a complete computing environment that runs software, all of which must be checked out before committing to a mask set.

Simulation has always played a central role in verification, but it serves best when proving out the cycle-by-cycle behavior of pieces of the circuit over a limited number of cycles. Exercising a complete SoC, with hundreds of millions or even billions of gates, for the tens of billions of cycles needed to cover a broad range of software and data scenarios far outpaces what simulation can handle in a reasonable time. Once individual blocks and some aspects of the full SoC have been verified through simulation, some other means is needed to verify the entire system as a whole before committing to mask sets and building first silicon.

Emulation is an increasingly important tool to address verification workloads that require extremely long tests—full-chip SoC simulations, software driver verification, booting Linux, etc. Many of these tests require the SoC to interact with a complex external environment.

Traditionally, in-circuit emulation (ICE) has been used to create an environment that exposes a design under test (DUT) to realistic stimulus. But ICE has drawbacks, and these have given rise to transaction-based emulation (TBE) as a more effective and flexible way to create powerful emulation environments for advanced SoC verification.


Traditional Emulation

Traditional ICE-based emulation involves two key elements: hardware implementation of the DUT on an emulator and physical connections to external data sources and devices. By implementing the design in actual hardware on an emulator, execution of the DUT is dramatically faster than through simulation. While simulators often execute large designs at rates as low as a few cycles per second, the latest emulators can run the design in the multi-megahertz range—a million times faster.
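To put those rates in perspective, the short calculation below estimates wall-clock time for a 10-billion-cycle workload, the low end of the "tens of billions" of cycles mentioned earlier. The 5-cycles/s simulation rate and 5-MHz emulation rate are assumed, illustrative values for "a few cycles per second" and "multi-megahertz," not measurements of any particular tool.

```python
# Wall-clock time for a long SoC workload at assumed execution rates.
# Both rates are illustrative assumptions, not benchmarks of real tools.
SECONDS_PER_MINUTE = 60
SECONDS_PER_YEAR = 365 * 24 * 3600

def run_time_seconds(cycles: int, cycles_per_second: float) -> float:
    """Time needed to execute `cycles` clock cycles at a fixed rate."""
    return cycles / cycles_per_second

CYCLES = 10_000_000_000  # 10 billion cycles, e.g. an OS boot plus test software
SIM_RATE = 5             # assumed simulator rate: a few cycles per second
EMU_RATE = 5_000_000     # assumed emulator rate: 5 MHz

sim_s = run_time_seconds(CYCLES, SIM_RATE)
emu_s = run_time_seconds(CYCLES, EMU_RATE)

print(f"simulation: {sim_s / SECONDS_PER_YEAR:.1f} years")
print(f"emulation:  {emu_s / SECONDS_PER_MINUTE:.1f} minutes")
print(f"speedup:    {sim_s / emu_s:,.0f}x")
```

Under these assumptions, the run takes about 63 years in simulation and about 33 minutes in emulation, the million-fold difference described above.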

For a storage design that must handle SATA data, for example, an actual SATA device running real-world data can be plugged into the emulator. The idea is that far more realistic data scenarios can play out with this device as a data source than a specific test-vector set in simulation could cover.

A major challenge, however, is that 6-Gbit/s SATA data flows far faster than the emulated design can accept. Speed adaptors are therefore needed to buffer the incoming data and “slow it down” so that it’s synchronized with the emulation speed. These ICE speed adaptors are typically individual boxes that sit on a lab bench near the emulator, and a well-stocked verification lab needs a speed adaptor and cabling for each protocol that might be required in testing.
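The buffering role of a speed adaptor can be pictured as a bounded FIFO between a fast producer (the real device) and a slow consumer (the emulated DUT). When the FIFO fills, the adaptor must pause the fast side; real adaptors do this through the protocol’s own flow-control mechanisms. The sketch below is a toy model under that assumption: the class, the FIFO depth, and the per-tick rates are hypothetical, and link-layer details are ignored.

```python
from collections import deque

class SpeedAdaptor:
    """Toy model of an ICE speed adaptor: a bounded FIFO between a fast
    real-world link and the slow emulated DUT (hypothetical, for illustration)."""

    def __init__(self, depth: int):
        self.fifo = deque()
        self.depth = depth

    def backpressure(self) -> bool:
        """True when the adaptor must tell the fast side to pause."""
        return len(self.fifo) >= self.depth

    def accept(self, word) -> bool:
        """Fast side offers one word; it is refused while backpressure is asserted."""
        if self.backpressure():
            return False
        self.fifo.append(word)
        return True

    def emulator_clock(self):
        """Slow side: the DUT consumes at most one word per emulated clock."""
        return self.fifo.popleft() if self.fifo else None


def run(link_words_per_tick: int, ticks: int, depth: int = 8):
    """The link tries to send many words for each DUT clock; count the stalls."""
    adaptor = SpeedAdaptor(depth)
    next_word, delivered, stalls = 0, [], 0
    for _ in range(ticks):
        for _ in range(link_words_per_tick):
            if adaptor.accept(next_word):
                next_word += 1
            else:
                stalls += 1  # the real device would have to be paused here
        word = adaptor.emulator_clock()
        if word is not None:
            delivered.append(word)
    return delivered, stalls

delivered, stalls = run(link_words_per_tick=100, ticks=5)
print(delivered, stalls)
```

Here the DUT receives words 0 through 4 in order while the link is stalled 488 times: no data is lost, but the real device spends nearly all its time waiting on the emulator.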

While this setup works, it doesn’t scale well, since every additional protocol means another adaptor and more cabling. In addition, someone must be physically present to configure the appropriate speed adaptors and cables for each design being verified. This limits remote use of the emulator and makes it harder to share the emulator among several designs being tested simultaneously. If anything goes awry during execution and a reset is needed, someone has to be there to perform it.

Debug can become a real challenge with these real-world sources. The data used for testing may not be generated deterministically, so the test scenario may not be reproducible. If some data causes a problem, it becomes extraordinarily difficult to isolate the condition that triggered it. Nor are the DUT and the external environment connected through the speed adaptors controlled in synchrony: If execution in the DUT stops at a breakpoint, the external environment keeps running. Consequently, reproducing the test scenario becomes difficult, if not impossible.

While ICE remains an appropriate approach in some applications, these and other challenges are driving TBE’s rapidly growing adoption rate.

Transactions to the Rescue

TBE uses software-based, virtualized test environments on the emulator’s host computer for each data source and protocol being verified. With TBE, the test environment communicates with the DUT in the emulator, and its operation can be fully synchronized with the DUT. In essence, data sources are virtualized and moved into the host, eliminating the speed adaptors. This step solves two of the challenges associated with ICE: the need for physical access to the emulator and associated speed adaptors, and the non-reproducibility of test scenarios during debug.

These virtualized data sources need a communication path between the host-based test environment and the DUT in the emulator. Data for test cases is sent from the host to the DUT, and results are sent back from the DUT to the host. Handled naively, however, this exchange risks overwhelming the bandwidth of the communication channel.

The DUT in an emulator behaves cycle-for-cycle like the final design, because it implements the RTL that defines the design. That means it’s exercised by specific pins changing state. If a PCI interface is built into the design, for example, sending a data set into the DUT over PCI involves many cycles and a broad range of control and data pins. If the host test environment must specify the state of every pin on every clock cycle, the sheer volume of traffic can bog down the communication.
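A rough count shows why per-pin, per-cycle traffic is a problem. The sketch assumes a hypothetical parallel bus with 72 signals (32 address, 32 data, 8 control) on which a single write takes six clock cycles of address, data, and handshake phases. Driving the pins from the host means one message per clock cycle, while a transaction needs one message per operation. All parameters are illustrative, not taken from any real protocol.

```python
import math

# Hypothetical bus parameters, chosen only for illustration.
PINS = 72              # 32 address + 32 data + 8 control signals
CYCLES_PER_WRITE = 6   # address, data and handshake phases
TXN_BYTES = 1 + 4 + 4  # opcode + 32-bit address + 32-bit data

def pin_level_traffic(writes: int):
    """Host sends the value of every pin on every clock cycle."""
    messages = writes * CYCLES_PER_WRITE
    bytes_per_msg = math.ceil(PINS / 8)
    return messages, messages * bytes_per_msg

def transaction_traffic(writes: int):
    """Host sends one compact 'write' transaction per operation."""
    return writes, writes * TXN_BYTES

writes = 1_000_000
print("pin level   (messages, bytes):", pin_level_traffic(writes))
print("transaction (messages, bytes):", transaction_traffic(writes))
```

With these numbers, the transaction form needs one-sixth of the messages and bytes. Because every host-emulator exchange also carries a fixed latency and synchronization cost, reducing the number of exchanges is often as important as reducing the byte count.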

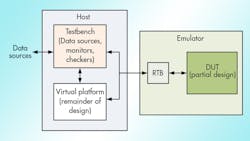

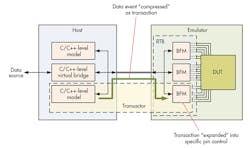

This is where the emulation transactor comes into play. An emulation transactor is verification IP (VIP) that abstracts the detailed behavior of a protocol (Fig. 1). If the goal is, for example, to write the data FFFFFFFF into the DUT, it’s far more efficient to communicate “Write FFFFFFFF” than to spell out the specific pin changes needed to implement the write operation. So on the virtualized test-environment side of its communication link, a transactor deals in high-level transactions, ignoring the details of which pins must do what on which cycle.
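On the host side, a transaction can be as simple as a small record. The sketch packs “Write FFFFFFFF” into a 9-byte message: one opcode byte, a 32-bit address, and 32-bit data. The message layout, the opcode values, and the address 0x1000 are all hypothetical; real transactors define their own message formats and C/C++ APIs.

```python
import struct
from dataclasses import dataclass

# Hypothetical message layout: opcode (1 byte), address (4 bytes), data (4 bytes),
# little-endian with no padding.
OPCODES = {"WRITE": 0x01, "READ": 0x02}
KINDS = {code: kind for kind, code in OPCODES.items()}
MSG_FORMAT = "<BII"

@dataclass(frozen=True)
class Transaction:
    kind: str
    address: int
    data: int = 0

    def encode(self) -> bytes:
        """Serialize the transaction for the host-to-emulator channel."""
        return struct.pack(MSG_FORMAT, OPCODES[self.kind], self.address, self.data)

    @staticmethod
    def decode(msg: bytes) -> "Transaction":
        """Inverse of encode(): what the emulator-side BFM would receive."""
        code, address, data = struct.unpack(MSG_FORMAT, msg)
        return Transaction(KINDS[code], address, data)

write = Transaction("WRITE", address=0x1000, data=0xFFFFFFFF)
msg = write.encode()
print(len(msg), msg.hex())
```

The whole write crosses the channel as nine bytes, regardless of how many pins and cycles the protocol needs to carry it out in the DUT.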

This solves the problem of sending pin data from the host to the DUT on a clock-cycle-by-clock-cycle basis. Still, the DUT itself doesn’t understand transactions—it’s built to have explicitly controlled pins. So, after the transaction arrives, there’s a piece of the transactor—a bus-functional model (BFM) or full protocol IP—within the emulator that translates the high-level transaction into the low-level pin control implementing the transaction on the DUT. A transaction can be thought of as a compressed form of the command; at the very end, where the DUT starts, the command needs to be uncompressed into the pin-level data and timing that can drive the DUT. BFMs are often very complex RTL designs themselves. For example, BFMs provided with Synopsys ZeBu Server transactors are often based on Synopsys DesignWare IP cores.
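The emulator-side BFM performs the “uncompression” step: it turns one transaction into cycle-by-cycle pin values. The sketch models this in Python for a hypothetical valid/ready write interface purely to show the expansion; a real BFM would be synthesizable RTL, and the signal names, handshake, and timeout are assumptions.

```python
from typing import Callable, Dict, List

Pins = Dict[str, int]

def bfm_write(address: int, data: int,
              dut_ready: Callable[[int], bool],
              timeout: int = 1000) -> List[Pins]:
    """Expand one write transaction into per-cycle pin values on a
    hypothetical valid/ready interface. The BFM drives valid, we, addr and
    wdata; dut_ready(cycle) models the DUT's 'ready' output on that cycle."""
    trace: List[Pins] = []
    for cycle in range(timeout):
        # Hold the request stable until the DUT accepts it.
        trace.append({"valid": 1, "we": 1, "addr": address, "wdata": data})
        if dut_ready(cycle):
            # Handshake complete: return the bus to idle on the next cycle.
            trace.append({"valid": 0, "we": 0, "addr": 0, "wdata": 0})
            return trace
    raise TimeoutError(f"DUT did not assert ready within {timeout} cycles")

# A DUT that inserts two wait states before accepting the write.
trace = bfm_write(0x1000, 0xFFFFFFFF, dut_ready=lambda cycle: cycle >= 2)
for cycle, pins in enumerate(trace):
    print(cycle, pins)
```

Here one transaction becomes four cycles of pin activity: three with the request held (two wait states plus the accepting cycle) and one idle cycle. None of that detail has to cross the host-emulator link.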

The transactor’s host-side component can be created in any way desired, since it’s completely handled in software. The BFM portion, by contrast, must be synthesizable (typically synthesizable SystemVerilog), since it will be implemented in hardware within the emulator. Some emulators use a single pool of logic, shared between the DUT and the BFMs. Others, like the ZeBu Server emulator, reserve a dedicated set of logic for the transactors in a Reconfigurable Testbench (RTB), making it possible to easily update the transaction-based environment without recompiling the entire design.

Transactors have protocol-specific APIs that make it possible to link or create virtual test environments. Because the transactor APIs are written in C/C++, sophisticated test scenarios and data-generation models can be built that closely reflect the traffic likely to occur on a real data link. APIs can also link to host-based virtual devices, such as a model of a rotating-disk storage device or a virtual HDMI display, minimizing the need for, and complexity of, the testbench itself. Additionally, because this traffic is explicitly generated, it can be replicated when a problem occurs to help narrow down the search for the issue. If random data is actually desired, the test environment can create it and still capture the test stimulus for use in debugging.
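Because stimulus is generated in software, randomness and reproducibility need not conflict. One common approach, sketched here under stated assumptions, is to drive a pseudo-random generator from a recorded seed: the same seed regenerates exactly the same traffic for debug. The packet format, port count, and length range are hypothetical.

```python
import random
from typing import List, Tuple

Packet = Tuple[int, bytes]  # (destination port, payload): a hypothetical format

def generate_traffic(seed: int, count: int) -> List[Packet]:
    """Random stimulus that is fully determined by the seed."""
    rng = random.Random(seed)  # private generator, unaffected by other code
    packets = []
    for _ in range(count):
        dest = rng.randrange(4)         # one of four hypothetical ports
        length = rng.randint(64, 1500)  # payload length in bytes
        payload = bytes(rng.getrandbits(8) for _ in range(length))
        packets.append((dest, payload))
    return packets

seed = random.SystemRandom().randrange(2**32)  # fresh seed for each regression run
stimulus = generate_traffic(seed, count=100)
print(f"seed={seed}")  # record the seed alongside the test results

# After a failure, the identical stimulus can be regenerated for debug.
assert generate_traffic(seed, count=100) == stimulus
```

Logging only the seed is enough to replay the run; capturing the generated packets as well guards against later changes to the generator code.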

Transactors aren’t just about generating data; data must also flow in the opposite direction. VIP on the host can verify that the low-level pin activity generated by the DUT constitutes legitimate protocol interactions. In this case, the BFM “compresses” the pin-level results into a transaction and sends it back to a monitor or checker.
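In the reverse direction, the BFM watches the pins and emits a transaction only when a handshake completes. The sketch assumes a hypothetical valid/ready write interface on which a write is complete on any cycle where valid, we, and ready are all high; the signal names and the trace are illustrative.

```python
from typing import Dict, Iterable, List, Tuple

def collect_writes(trace: Iterable[Dict[str, int]]) -> List[Tuple[int, int]]:
    """Compress a cycle-by-cycle pin trace into (address, data) write
    transactions. A write is reported once, on the cycle where valid, we
    and ready are all high; wait-state and idle cycles produce nothing."""
    writes = []
    for pins in trace:
        if pins["valid"] and pins["we"] and pins["ready"]:
            writes.append((pins["addr"], pins["wdata"]))
    return writes

trace = [
    {"valid": 1, "we": 1, "ready": 0, "addr": 0x1000, "wdata": 0xFFFFFFFF},  # wait
    {"valid": 1, "we": 1, "ready": 1, "addr": 0x1000, "wdata": 0xFFFFFFFF},  # accept
    {"valid": 0, "we": 0, "ready": 0, "addr": 0, "wdata": 0},                # idle
    {"valid": 1, "we": 1, "ready": 1, "addr": 0x1004, "wdata": 0x12345678},  # accept
]
print(collect_writes(trace))
```

Four cycles of pin activity reduce to two transactions, which is all the host-side monitor or checker needs to see.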

Monitors simply record selected activity for later inspection. Checkers go one step further and validate correctness, flagging errors as they occur. Since checkers are written for a specific protocol, messages and debug views can be created that are specific to the protocol, too.
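The distinction between the two can be sketched in a few lines. Here a monitor only logs transactions, while a checker also compares each read against a simple reference model of a memory-like DUT and records a mismatch the moment it is observed. The transaction tuple format and both classes are hypothetical; reads from addresses that were never written are not checked.

```python
from typing import Dict, List, Tuple

Txn = Tuple[str, int, int]  # (kind, address, data): a hypothetical format

class Monitor:
    """Records observed transactions for later inspection; passes no judgment."""
    def __init__(self):
        self.log: List[Txn] = []

    def observe(self, txn: Txn) -> None:
        self.log.append(txn)

class MemoryChecker(Monitor):
    """Also validates: every read must return the last value written to
    that address, according to a simple reference model."""
    def __init__(self):
        super().__init__()
        self.model: Dict[int, int] = {}
        self.errors: List[str] = []

    def observe(self, txn: Txn) -> None:
        super().observe(txn)
        kind, addr, data = txn
        if kind == "WRITE":
            self.model[addr] = data
        elif kind == "READ":
            expected = self.model.get(addr)
            if expected is not None and data != expected:
                # Flag the error immediately, in protocol terms.
                self.errors.append(
                    f"read 0x{addr:08x}: got 0x{data:08x}, expected 0x{expected:08x}")

checker = MemoryChecker()
for txn in [("WRITE", 0x10, 0xFFFFFFFF), ("READ", 0x10, 0xFFFFFFFF),
            ("READ", 0x10, 0x0000FFFF)]:
    checker.observe(txn)
print(checker.errors)
```

The third transaction produces one error message that names the address and both values, which is far easier to act on than a raw waveform.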

For instance, with IPv4 networking data, a stream of bits can be identified and presented as individual packets, with headers separated from payload. A transactor can validate each packet for protocol compliance. By capturing input and output packet information through the monitor, the correctness of the DUT’s data processing can also be validated. Presenting data and issues in the context of the protocol makes design errors easier and quicker to diagnose and fix.
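A protocol-aware check for IPv4 might split each packet into header and payload and apply a few RFC 791 rules: version 4, a valid header-length (IHL) field, a total-length field that matches the bytes received, and a correct header checksum (computed as described in RFC 1071). This is a minimal sketch, not a complete compliance suite; the function names are hypothetical, and the test packet uses documentation addresses and the experimental protocol number 253.

```python
import struct
from typing import List, Optional, Tuple

def ipv4_checksum(header: bytes) -> int:
    """Complemented ones'-complement sum of big-endian 16-bit words (RFC 1071).
    Over a header whose checksum field is filled in, a valid header yields 0."""
    total = 0
    for i in range(0, len(header), 2):
        total += (header[i] << 8) | header[i + 1]
    while total >> 16:  # fold carries back into 16 bits
        total = (total & 0xFFFF) + (total >> 16)
    return ~total & 0xFFFF

def check_ipv4(packet: bytes) -> Tuple[Optional[bytes], Optional[bytes], List[str]]:
    """Split a packet into header and payload and list protocol violations."""
    if len(packet) < 20:
        return None, None, ["shorter than the 20-byte minimum header"]
    version, ihl = packet[0] >> 4, packet[0] & 0x0F
    header_len = ihl * 4
    errors = []
    if version != 4:
        errors.append(f"version {version}, expected 4")
    if ihl < 5 or header_len > len(packet):
        errors.append(f"invalid header length field (IHL={ihl})")
        return None, None, errors
    (total_len,) = struct.unpack("!H", packet[2:4])
    if total_len != len(packet):
        errors.append(f"total length field {total_len} != {len(packet)} bytes received")
    header, payload = packet[:header_len], packet[header_len:]
    if ipv4_checksum(header) != 0:
        errors.append("header checksum mismatch")
    return header, payload, errors

def build_packet(payload: bytes) -> bytes:
    """Build a minimal valid IPv4 packet (192.0.2.1 -> 198.51.100.7, protocol 253)."""
    header = struct.pack("!BBHHHBBH4s4s", 0x45, 0, 20 + len(payload), 0, 0,
                         64, 253, 0, bytes([192, 0, 2, 1]), bytes([198, 51, 100, 7]))
    checksum = ipv4_checksum(header)  # computed with the checksum field zeroed
    return header[:10] + struct.pack("!H", checksum) + header[12:] + payload

pkt = build_packet(b"hello")
print(check_ipv4(pkt)[1:])  # payload and the (empty) error list
corrupted = pkt[:8] + bytes([pkt[8] ^ 0xFF]) + pkt[9:]  # flip the TTL bits
print(check_ipv4(corrupted)[2])
```

The error messages are phrased in IPv4 terms (header length, total length, checksum), which is what makes protocol-specific checkers faster to debug with than raw bit streams.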

Finally, with test control, data sources, and data monitors/checkers all implemented as software within a host computer (plus the BFMs in the emulator itself), the entire setup can be networked for remote access. There are no more cables to plug and unplug, and speed adaptors needn’t be pulled out or returned to the cabinet. A single emulator can be reconfigured for a completely different design and test environment in a matter of minutes.

Combining Emulation with Simulation

TBE enables a single, unified environment for emulation. Other verification techniques can also be incorporated and operated in lock-step with the emulator. In a UVM environment, for example, cycle-accurate simulation and emulation can run together, with the SystemVerilog Direct Programming Interface (DPI) managing communication and synchronization between the emulator and the simulator. In this model, the simulator and its testbench load set the pace of execution, since the simulator runs much more slowly than the emulator.

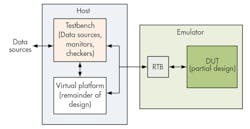

Virtual prototypes, by contrast, can serve as high-level, protocol-accurate simulators. When the emulator is verifying specific portions of a circuit, other parts of the design can be modeled in the virtual prototype (Fig. 2). This works on the assumption that only the part of the circuit in the emulator will be tested on a cycle-accurate basis. Everything else is there only to complete the system and provide the data sources and sinks the DUT will see in the final chip. That data must arrive at and depart from the DUT on the appropriate cycle, but what happens before it arrives or after it leaves can be completely abstracted, which makes execution much faster.

This allows for focused testing of the design blocks in question without getting bogged down in the rest of the chip’s details. The verification process can proceed more quickly, performing cycle-accurate testing only for the portion of the circuit in the DUT.

This model can be particularly useful for software-driven verification. A well-verified processor can run in the virtual prototype, executing software quickly, while other parts of the design are verified on a cycle-accurate basis in the emulator.

Requirements for Successful TBE

As noted, TBE makes it easier and faster to assemble and run a sophisticated, high-performance verification environment for a complete billion-gate SoC. Several requirements must be met, though, for it to succeed:

• The most obvious requirement is a high-performance emulator designed for TBE, with support for high-bandwidth, low-latency communication between host and emulator.

• There must be a broad selection of transactors, virtual devices, and virtual bridges available for the chosen emulator. Not all transactors work on all emulators without manual intervention.

• A transactor’s synthesizable BFM is often a very complex RTL design in its own right, and it must be well-proven so that debugging focuses on design issues, not test-infrastructure issues.

• At times, no transactor will be commercially available. This scenario requires a customized transactor; emulator vendors should provide tools that enable efficient creation of new, high-performance transactors.

• The test environment should be configurable using protocol-specific APIs that reflect the context of the protocol.

• The available monitors and checkers should display debug data and messages in a way that makes sense for the specific protocols they abstract.

• The emulator should be easy to configure to work with an external simulator, either a cycle-accurate simulator or a virtual prototype.

It’s worth asking specific questions when researching the best approach to TBE environment assembly. Emulators and their associated models can represent a serious investment, so time spent thoroughly researching the options will pay off at the onset of circuit verification.

Summary

As SoCs targeted at advanced process nodes grow dramatically in size and complexity, so does the verification job (not to mention the cost of incomplete verification). Functions that once resided in separate chips, each verified independently, must now be shown to work together before any of them can be committed to silicon. It’s all or nothing: A partially working circuit is not a usable circuit.

At the same time, product schedules and market pressures aren’t relenting, even though the job is becoming harder. If anything, more-compressed schedules mean more work in less time.

Older ICE-based emulation methodologies still have a place for verifying some designs, but they can become challenging to deploy for modern, complex SoCs with aggressive development timelines. Another option—transaction-based emulation—provides a scalable, virtualized verification methodology consistent with large design projects managed by geographically dispersed teams. Access to hosts and emulators can be handled over the network; no one need be physically present in the same room as an emulator to assemble a complete verification environment.

With mask sets at advanced process nodes running in the millions of dollars and the cost of schedule delay potentially even higher, there’s no room for mistakes. Just as the difficulty factor for verification jobs has risen, so too have the costs of failure. While everyone retains a healthy fear of a mask re-spin, TBE gives more complete verification more quickly, reducing the risk of errors. Properly applied, it can give a project manager much more confidence that the design will work as intended when cutting the masks.

Tom Borgstrom, director of marketing at Synopsys, is responsible for the ZeBu emulation solution. Before assuming his current role, he was director of strategic program management in Synopsys’ Verification Group. Prior to joining Synopsys, he held a variety of senior sales, marketing, and applications roles at TransEDA, Exemplar Logic, and CrossCheck Technology and worked as an ASIC design engineer at Matsushita. He received bachelor’s and master’s degrees in electrical engineering from Ohio State University.
