Nintendo 64: Breakthrough Design, Genuine Disruption

>> Electronic Design Resources

.. >> Library: Article Series

.. .. >> Series: The Graphics Chip Chronicles

.. .. .. >> Introduction to this Series

.. .. .. << The History of the Integrated Graphics Controller

Silicon Graphics (SGI) was a leading, highly respected workstation developer that rose to fame and fortune after it introduced a VLSI geometry processor in 1981. In the following years, SGI developed leading high-end graphics technologies. At that time, an ultra-high-performance workstation could cost more than $100,000.

Therefore, the idea of adapting such state-of-the-art technology to a consumer product like a video game console that sold for a few hundred dollars was considered bold, challenging, and crazy. Nonetheless, in 1992 and 1993, SGI founder and CEO Jim Clark met with Nintendo CEO Hiroshi Yamauchi to discuss just that—squeezing an SGI graphics system into a console. And thus, the idea of the Nintendo 64 was born.

Even the number 64, referring to the number of bits, seemed outrageous. Most consoles at the time were struggling to shift from 8 bits to 32 bits. In the video game industry, 64 bits was more or less considered science fiction.

But against all odds, they did it. On November 24, 1995, at Nintendo’s annual Shoshinkai trade show, the company revealed the Nintendo 64 console. Then, in May 1996, at the E3 conference in Los Angeles, it showed the Nintendo 64 and announced it would be available in the United States starting in September.

It was an amazing amount of technology crammed into a small package and at an extremely low price of $250 (roughly $420 today).

Other than its clock speeds, this little gaming supercomputer would still be considered feature-rich and competitive today.

Nintendo 64 features:

- 64-bit custom MIPS R4300 CPU, clock speed of 93.75 MHz

- Rambus DRAM (4 Mbytes) with a maximum bandwidth of 4,500 Mbps

- Sound and graphics, and pixel drawing coprocessors at a clock speed of 62.5 MHz

- Resolutions in the range of 256 × 224 to 640 × 480

- The normal resolution is 320 × 240, 24 bpp

- 32-bit RGBA frame buffer, with 21-bit color video output

- The graphics processor (RCP) includes:

  - Z-buffer

  - Anti-aliasing

  - Texture mapping: trilinear mipmap interpolation, environment mapping, and perspective correction

- Size: 10.23 inches by 7.48 inches by 2.87 inches

- Weight: 2.42 pounds

- The system comes with a multifunction 2D and 3D game controller, including digital and analog joysticks, and lots of different buttons.

- The MIPS CPU and the RCP were manufactured by NEC on a 0.35-µm process for Nintendo.

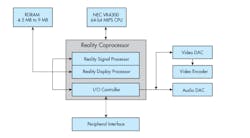

The architecture of the system consisted of two major chips: the main CPU and the Reality Coprocessor (RCP) designed by SGI. The following diagram shows the overall arrangement.

The main VR4300 CPU was a 64-bit microprocessor that ran at 93.75 MHz with 64-bit registers, data paths, and buffers to handle high-speed data movement within the chip. The wide data paths were particularly important for operations such as bit-stream decoding and matrix manipulation, which are core to video and graphics processing. The VR4300 also supported double-precision floating-point computations for high-performance graphics. Large on-chip caches (16 KB instruction and 8 KB data) delivered high performance for interactive applications by reducing the need for frequent memory accesses. It was built using NEC’s 0.35-µm process technology.

Within the RCP were two major sub-systems, the Reality Signal Processor (RSP) and the Reality Display Processor (RDP).

The Reality Signal Processor (RSP) contained:

- The Scalar Unit: A MIPS R4000-based CPU that implemented a subset of the R4000 instruction set.

- The Vector Unit: A co-processor that performed vector operations with thirty-two 128-bit registers. Each register was sliced into eight parts so that eight 16-bit values could be processed at once (just like SIMD instructions on conventional CPUs).

- The System Control: Another co-processor that provided DMA functionality and controlled the neighboring display processor module.
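The register slicing described for the Vector Unit can be sketched in a few lines. This is only an illustration of the lane idea, not the RSP’s actual instruction behavior: the function names `pack`, `unpack`, and `vector_add` are made up for this example, and wrap-around on overflow is an assumption chosen for simplicity.

```python
LANES = 8          # eight slices per register, as described above
LANE_BITS = 16     # each slice is 16 bits wide
LANE_MASK = (1 << LANE_BITS) - 1


def pack(lanes):
    """Pack eight 16-bit values into one 128-bit integer (lane 0 in the low bits)."""
    if len(lanes) != LANES:
        raise ValueError("expected exactly eight lanes")
    register = 0
    for index, value in enumerate(lanes):
        register |= (value & LANE_MASK) << (index * LANE_BITS)
    return register


def unpack(register):
    """Split a 128-bit integer back into its eight 16-bit lanes."""
    return [(register >> (index * LANE_BITS)) & LANE_MASK for index in range(LANES)]


def vector_add(a, b):
    """Add two registers lane by lane; each lane wraps at 16 bits (an assumption)."""
    return pack([(x + y) & LANE_MASK for x, y in zip(unpack(a), unpack(b))])
```

A single call to `vector_add` updates all eight lanes, which is the point of the design: one instruction does the work of eight scalar additions.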

To operate the RSP, the CPU stored in RAM a series of commands called a display list along with the data that would be manipulated. The RSP would read the list and apply the required operations on it. The available features included:

- Geometry transformations.

- Clipping and culling (removing unnecessary and unseen polygons).
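The display-list flow described above can be sketched as a small interpreter. Everything here is hypothetical and simplified for illustration: the opcode names, the `Command` class, the 3×3 matrix, and the counter-clockwise front-face convention are assumptions, not the real RSP microcode commands.

```python
from dataclasses import dataclass

# Hypothetical opcodes for illustration only; not the real RSP commands.
SET_MATRIX = "set_matrix"
DRAW_TRIANGLE = "draw_triangle"


@dataclass
class Command:
    opcode: str
    payload: object


def transform(matrix, vertex):
    """Apply a 3x3 matrix (list of rows) to an (x, y, z) vertex."""
    return tuple(sum(row[i] * vertex[i] for i in range(3)) for row in matrix)


def is_back_facing(triangle):
    """Cull test on the x/y projection; assumes counter-clockwise front faces."""
    (x0, y0, _), (x1, y1, _), (x2, y2, _) = triangle
    return (x1 - x0) * (y2 - y0) - (x2 - x0) * (y1 - y0) <= 0


def run_display_list(display_list):
    """Walk the list in order and return the triangles that survive culling."""
    matrix = [[1, 0, 0], [0, 1, 0], [0, 0, 1]]  # identity until SET_MATRIX arrives
    visible = []
    for command in display_list:
        if command.opcode == SET_MATRIX:
            matrix = command.payload
        elif command.opcode == DRAW_TRIANGLE:
            triangle = [transform(matrix, v) for v in command.payload]
            if not is_back_facing(triangle):
                visible.append(triangle)
        else:
            raise ValueError(f"unknown opcode: {command.opcode}")
    return visible
```

In this sketch the CPU’s only job is to build the list; the transformation and culling work happens when the list is walked, which mirrors the division of labor between the CPU and the RSP.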

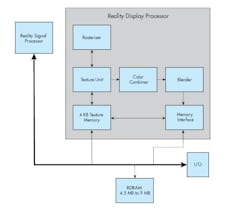

The RSP fed the Reality Display Processor (RDP), which is illustrated in the following block diagram.

After the RSP finished processing polygon data, it sent rasterization commands to the RDP. These commands were sent either over a dedicated bus called XBUS or through main RAM.

The RDP was a fixed-function processor that contained multiple engines used to apply textures over polygons and project them onto a 2D bitmap. It could process either triangles or rectangles as primitives. Rectangles were useful for drawing sprites. The RDP’s rasterization pipeline contained the following blocks:

- A rasterizer that allocated the initial bitmap that served as a frame-buffer.

- A texture unit that applied textures to polygons using 4 KB of dedicated memory (called TMEM), allowing up to eight tiles to be used for texturing. It could also perform the following operations:

  - 4-to-1 bilinear filtering for smoothing out textures.

  - Perspective correction to improve the coordinate precision of the textures.

- A color combiner that mixed and interpolated multiple layers of colors (for instance, to apply shading).

- A blender that mixed pixels against the current frame-buffer in order to apply translucency, anti-aliasing, fog, dithering, and z-buffering. That last feature was critical to efficiently cull unseen polygons from the camera viewpoint (replacing software-based polygon sorting methods which could drain a lot of CPU resources).

- A memory interface used by multiple blocks to read and write the current frame-buffer in RAM and/or fill the TMEM.
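The 4-to-1 filtering mentioned in the texture unit can be illustrated with a textbook bilinear sample, which blends the four texels surrounding a fractional coordinate. This is a generic sketch under stated assumptions (a grayscale texture stored as a list of rows, clamped edges, floating-point math); it is not a model of the RDP’s exact filter hardware, and `bilinear_sample` is a name chosen for this example.

```python
def bilinear_sample(texture, u, v):
    """Sample a grayscale texture (list of rows) at fractional texel coordinates."""
    height, width = len(texture), len(texture[0])
    # Clamp so the 2x2 neighbourhood stays inside the texture.
    u = min(max(u, 0.0), width - 1.0)
    v = min(max(v, 0.0), height - 1.0)
    x0, y0 = int(u), int(v)
    x1, y1 = min(x0 + 1, width - 1), min(y0 + 1, height - 1)
    fx, fy = u - x0, v - y0
    # Blend horizontally on the two rows, then vertically between them.
    top = texture[y0][x0] * (1 - fx) + texture[y0][x1] * fx
    bottom = texture[y1][x0] * (1 - fx) + texture[y1][x1] * fx
    return top * (1 - fy) + bottom * fy
```

Sampling halfway between texels returns an average of the neighbors, which is what smooths out the blocky look of a magnified texture.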

The RDP provided four modes of functioning, and each mode combined these blocks differently in order to optimize specific operations.

The RDP supported 16.8 million colors. The system could display resolutions from 320 × 240 up to 640 × 480 pixels. Most games tapping into the system’s high-resolution 640 × 480 mode required use of the Expansion Pak RAM upgrade.
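A rough calculation shows why the high-resolution mode put pressure on memory. The sketch below computes the size of a single color buffer against the 4 Mbytes of base RAM listed earlier. The 16-bpp case is included only for comparison and is an assumption of this example, as is the helper name `framebuffer_bytes`; real games also needed room for a second buffer, a z-buffer, code, and assets.

```python
def framebuffer_bytes(width, height, bits_per_pixel):
    """Bytes needed for one color buffer at the given resolution and depth."""
    return width * height * bits_per_pixel // 8


BASE_RAM_BYTES = 4 * 1024 * 1024  # the 4 Mbytes of RDRAM from the feature list

for width, height in [(320, 240), (640, 480)]:
    for bpp in (16, 32):  # 16 bpp is an assumed comparison point
        size = framebuffer_bytes(width, height, bpp)
        share = 100 * size / BASE_RAM_BYTES
        print(f"{width}x{height} @ {bpp} bpp: {size:,} bytes ({share:.1f}% of base RAM)")
```

Quadrupling the pixel count quadruples the buffer size, so a 640 × 480 buffer at 32 bpp takes four times the memory of a 320 × 240 buffer at the same depth.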

The system had several advanced, high-end graphics capabilities, including:

- Real-time anti-aliasing—removes jagged edges from the objects, creating a smooth and realistic view as the player moves through a scene.

- Advanced texture mapping techniques—generate high-quality textures and retain the natural texture of every object in the scene, independent of how close the player is to the object.

- Real-time depth buffering—removes hidden surfaces during the real-time rendering process of a scene, allowing game developers to efficiently create 3D environments.

- Automatic load management—enables the objects in the scene to move smoothly and realistically, by automatically tuning the graphics processing.
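The depth-buffering idea in the list above comes down to one comparison per pixel. The following is a minimal software sketch, assuming smaller z means closer to the camera and using plain Python lists; the names `make_buffers` and `plot` are illustrative and do not correspond to any N64 API.

```python
def make_buffers(width, height, background=0):
    """Create a color buffer and a depth buffer initialised to 'infinitely far'."""
    color = [[background] * width for _ in range(height)]
    depth = [[float("inf")] * width for _ in range(height)]
    return color, depth


def plot(color, depth, x, y, z, value):
    """Write a pixel only if it is closer (smaller z) than what is already stored."""
    if z < depth[y][x]:
        depth[y][x] = z
        color[y][x] = value
        return True
    return False
```

Because the test is made per pixel, polygons can be drawn in any order and the nearest surface still wins, which is what removes the need to sort polygons in software.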

The console came with a new three-grip controller that allowed 360-degree precision movement. A “3D stick” enabled players to select any angle in 360 degrees, as well as control the speed of a character’s movement. Other new additions included the “C Buttons,” which could be used to change a player’s perspective, and a “Z Trigger,” for shooting games.

In addition, the controller accepted a memory pack accessory, a special memory card that let players save gameplay information on their controller. This enabled players to take their gameplay data with them and play on other Nintendo 64 systems. More than 350,000 Nintendo 64 consoles were sold within days of the system’s release.

Console Wars

The console market was highly contested then, as it is now. New companies were entering the market as older ones were being driven out. As a result, suppliers started a price war that almost ruined them all.

In August 1996, Nintendo announced plans to drop the price of the Nintendo 64 to under $200 before it launched in the US. The company was looking to match 32-bit systems from Sony and Sega head-to-head on pricing. Sony reacted by reducing prices on many of its video games to $39.99. Sega, on the other hand, refused to reduce the price of its console.

Then, in May 1997, Nintendo announced a new, lower price of $149.95 for the Nintendo 64. At the time, the company attributed the price drop to production efficiencies resulting from a planned uptick in global sales and to favorable foreign exchange rates. With Sony, Sega, and Nintendo all offering consoles for less than $150, it was not unreasonable to expect a sub-$100 sales bonanza for Christmas 1997.

By May 1998, there were only two players in the console market, the Sony PlayStation and the Nintendo 64. The Saturn system was still around—but not for long.

Then, in August 1999, Nintendo dropped the price of the Nintendo 64 to under $100 as the holiday market heated up.

Conclusion

Many of the advanced techniques and solutions incorporated in the Nintendo 64 have become the basis for modern 3D gaming. Here are some of the hardware and software features developers experimented with that paved the way for modern game engines:

- Trilinear mipmapping, the one most often touted.

- Edge-based anti-aliasing, which survives today in techniques such as FXAA and MLAA.

- Basic real-time lighting (which implies the N64’s GPU was a hardware T&L GPU).

- Programmability. What stands out in my mind when it comes to the Reality Coprocessor (RCP) is that it was probably one of the first, if not the first, fully programmable GPUs. The processor ran on microcode that developers could tweak to fit their requirements. The problem was Nintendo didn’t release developer tools until late in the N64’s lifespan. But once they did, several companies (notably Rare and Factor 5) pushed the system to its limits.

Other features that were leveraged by game studios included:

- Smart use of clipping. Nintendo favored the use of cartridges for their speed. With clipping, sections of the game world that remained out of the player’s sight were not rendered until the player got very close to them.

- Real-time memory defragmentation. The game Banjo-Kazooie introduced a new way to push out large textures for detailed environments. One of the challenges was that it caused memory fragmentation: even though enough total memory was technically free, there was not enough contiguous memory to store a given item. To solve the problem, the game ran a real-time memory defragmenter.

- Texture streaming. This was introduced by developer Factor 5 for the game Indiana Jones and the Infernal Machine. This technology allowed them to stream textures as they were being rendered, thus overcoming the 4 KB texture memory limit.

- Frame-buffer effects. These were used for effects like motion blur, shadow mapping, “cloaking,” and a technique that still amazes me: render to texture. With it, textures are created and updated at runtime.

- Level of detail (LoD). If a model is sufficiently far away, this technique is used to swap it out for a low-poly model.
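The level-of-detail swap at the end of this list can be sketched as a simple distance lookup. The thresholds, the model names, and the function `select_lod` are all hypothetical values chosen for illustration; actual games tuned these per object and often used other criteria such as on-screen size.

```python
import math


def select_lod(camera, position, models):
    """Pick the first model whose distance limit covers the object.

    `models` is a list of (max_distance, model_name) pairs sorted by distance;
    the last entry is used for anything farther away.
    """
    distance = math.dist(camera, position)
    for max_distance, name in models:
        if distance <= max_distance:
            return name
    return models[-1][1]


# Hypothetical thresholds and model names, for illustration only.
MODELS = [(10.0, "high_poly"), (50.0, "medium_poly"), (float("inf"), "low_poly")]
```

Calling `select_lod((0, 0, 0), (0, 30, 0), MODELS)` would pick the medium model under these assumed thresholds, trading detail the player cannot see for polygons the hardware does not have to draw.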

The Nintendo 64 was truly ahead of its time, but unlike other pioneers the company did not suffer for bringing out such an advanced system. The Nintendo 64 did very well and drove the industry forward toward more realistic and high-performance computer graphics.

Epilogue

Nintendo may have spurred the development of the PlayStation. In the early 1990s, Nintendo partnered with Sony to develop a new CD-ROM console and attachment for the Super Nintendo system, resulting in a prototype that fans called the Nintendo PlayStation.

However, Sony’s deal with Nintendo fell through. Sony ultimately decided to ditch Nintendo and launch the PlayStation on its own, a decision that would completely change the course of the video game industry. That ultimately led to the birth of Sony’s massive PlayStation brand, and a major and long-term competitor to Nintendo.

In May 1999, Nintendo decided to use IBM’s 400-MHz, 128-bit PowerPC chip called Gekko in Nintendo’s new Dolphin game console.

Nintendo also said that the new system would use a new graphics chip designed by ArtX, which was founded in 1998 by ex-SGI/MIPS employees who developed the Nintendo 64 graphics processor.

Then, in May 2001, Nintendo announced the GameCube scheduled for launch later the same year. The GameCube featured the Flipper Chip from ATI (through ATI’s acquisition of ArtX). The integrated processor incorporated 2D and 3D graphics engines and a DSP for audio processing from Macronix. It also integrated all the system I/O functions, including CPU, system memory, joystick, optical disk, flash card, modem and video interfaces, and an on-chip high bandwidth frame buffer. IBM supplied the 485-MHz Gekko microprocessor.


About the Author

Jon Peddie

President

Dr. Jon Peddie heads up Tiburon, Calif.-based Jon Peddie Research. Peddie lectures at numerous conferences on topics pertaining to graphics technology and the emerging trends in digital media technology. He is the former president of Siggraph Pioneers, and is also the author of several books. Peddie was recently honored by the CAD Society with a lifetime achievement award. Peddie is a senior and lifetime member of IEEE (joined in 1963), and a former chair of the IEEE Super Computer Committee.