Engineering Simulation Exploits GPUs

What you'll learn:

- Why leading CAE software vendors are turning to GPUs.

- Trends in transitioning to GPUs for CAE workloads.

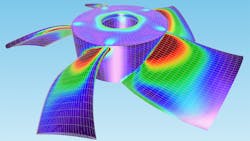

Once the domain of specialists with high-powered computers, computer-aided engineering (CAE) is becoming a workstation application for designers. Earlier this year, Jon Peddie Research conducted a series of interviews with leading CAE software vendors such as Altair, Ansys, Dassault Systèmes, Hexagon, and Siemens Digital Industries Software. JPR also worked with NVIDIA to understand how the industry is changing now that GPU acceleration is available in many CAE applications and workflows. The results of those interviews are available in an eBook titled Accelerating and Advancing CAE.

The transition hasn’t been a quick one. GPUs were introduced at the end of 1999 in response to strong demand from gamers who were eagerly embracing 3D games. The first GPUs were designed expressly for games, and game developers could simply write to the GPU’s built-in functions.

As application programming interfaces (APIs) evolved, those functions multiplied. The effect was immediate: the number of games written for GPUs increased rapidly, games ran faster, and they looked better.

With the benefit of hindsight, we now know the same transformation was on the way for engineering and scientific calculations, but a lot had to happen before the design industry was ready. All of the software had been built for CPUs, and customers depended on the power of their systems’ CPUs to run complex, resource-heavy simulation and analysis software. They were also accustomed to analysis software being complex and taking a very long time to run.

Everything Goes Faster

As the pace of innovation accelerates, each successive generation of GPU gains new features that are useful for CAE, including hardware acceleration for matrix math and AI. Each generation also brings faster memory and higher memory bandwidth.

Moreover, software tools for programming GPUs are proliferating. Introduced in 2007, NVIDIA’s CUDA enabled the development of specialized libraries for applications across the computing universe. AMD has also been pursuing open software approaches with its ROCm platform, as has Intel with oneAPI; Intel is also introducing new high-performance GPUs to complement its CPUs.

In our interviews with the CAE companies, we were told that GPUs are outperforming CPUs by many times, depending on the specific task. Despite this obvious advantage, we were also told that some engineers worry that GPU-accelerated applications pay for their speed with reduced accuracy. That hasn’t proved to be the case. Instead, developers and their customers are finding the results from GPU-accelerated calculations to be as accurate as those from CPU-based solvers.

GPU acceleration is enabling workstations to do jobs that were previously performed by high-performance computing (HPC) systems. As a result, those jobs can cost less in both energy and money. Being able to run more iterations at lower cost and with less energy lets more designers use simulation earlier in the design process and have confidence in the results.

Industries don’t transition overnight, and the CAE industry is a particularly good example. Some of the products are based on very old code originally written for CPUs in the 1960s and 1970s, so how each company takes advantage of GPUs varies.

Making the Transition

The companies we interviewed told us that they’re in the early stages of working with GPUs for CAE. They’ve been aware of the benefits of GPUs for a long time, but they’re even more aware of the pitfalls of moving too fast. Developers must consider the hardware already installed at their customers’ sites and the kinds of problems those customers are trying to solve.

Since their introduction, GPUs have been evolving to support all digital industries. They have incorporated more transistors, more memory, and higher bandwidth. Their arrays of programmable processing units, such as NVIDIA’s CUDA cores and AMD’s stream processors, have grown steadily, and Tensor cores have been added to accelerate AI/ML workloads. Real-time ray-tracing cores have also been introduced, along with a wealth of developer software tools and libraries.

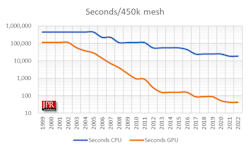

The net result is that the GPU has leapt ahead of the CPU in reducing the time needed to process simulation meshes (see figure).

Some independent software vendors (ISVs) are sticking with CPUs, while some newer companies and new programs run entirely on GPUs. In our opinion, the most sensible approach is a hybrid one that lets users take advantage of whatever GPU capabilities they have.

There are several clear takeaways from this project. One is the growing use of GPU acceleration in established CAE products. We’re also seeing new products written from the ground up to take advantage of GPUs. Sustainability has become an important consideration for developers and customers. And, finally, CAE has the potential to become a more integrated part of the design process, leading to better designs and more sustainable products.

JPR’s Accelerating and Advancing CAE eBook is available at GraphicSpeak.com.

About the Author

Jon Peddie

President

Dr. Jon Peddie heads up Tiburon, Calif.-based Jon Peddie Research. Peddie lectures at numerous conferences on topics pertaining to graphics technology and emerging trends in digital media technology. He is the former president of SIGGRAPH Pioneers and the author of several books. Peddie was recently honored by the CAD Society with a lifetime achievement award. Peddie is a senior and lifetime member of IEEE (joined in 1963) and a former chair of the IEEE Super Computer Committee.