AMD Announces Next-Gen GPUs and Software for AI

What you’ll learn:

- What advances were made with the fourth-gen AMD Instinct MI350?

- What’s new with ROCm 7?

- How AMD fares against the competition.

AMD’s latest press conference highlighted a family of new GPUs and software that target artificial-intelligence (AI) applications. These tools compete with NVIDIA and Intel as well as other AI-focused hardware vendors.

AMD’s Instinct MI350 GPGPU

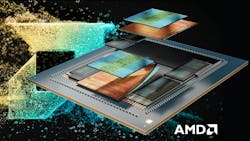

The AMD Instinct MI350 is the company’s fourth-generation Instinct architecture using a 3-nm process node and CoWoS-S chiplet packaging architecture (Fig. 1). The system interconnect is based on the AMD Infinity Fabric AP Interconnect.

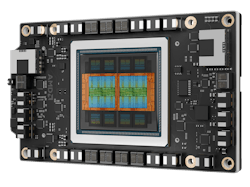

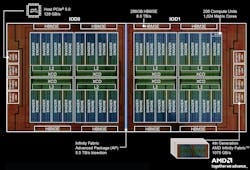

The chip packs in 185 billion transistors and incorporates eight stacks of high-bandwidth memory, HBM3E (Fig. 2). The architecture employs N3P accelerator complex dies (XCDs) stacked on two N6 I/O base dies (IODs). There are eight XCDs, each with 32 AMD CDNA 4 compute units. In addition, the GPU can be divided into one to eight partitions with SR-IOV support.

The chip also adds support for smaller, AI-friendly floating-point formats, including FP4 and FP6. The more compact formats reduce large-language-model (LLM) size and increase performance of the models because smaller values can be manipulated more efficiently.
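To make the trade-off concrete, here is a minimal sketch of rounding values to a 4-bit float. It assumes the E2M1 layout (one sign bit, two exponent bits, one mantissa bit) used by the OCP Microscaling formats; the article does not say which FP4 encoding the MI350 implements. The function name `quantize_fp4` is made up for illustration, and the shared per-block scale that real low-precision formats typically apply is left out.

```python
# Magnitudes representable by an E2M1 4-bit float (assumed layout, not confirmed by the article).
FP4_E2M1_MAGNITUDES = (0.0, 0.5, 1.0, 1.5, 2.0, 3.0, 4.0, 6.0)

# Full grid: negative magnitudes, then zero and the positive magnitudes.
FP4_GRID = [-m for m in reversed(FP4_E2M1_MAGNITUDES[1:])] + list(FP4_E2M1_MAGNITUDES)


def quantize_fp4(x: float) -> float:
    """Round x to the nearest value on the FP4 grid, saturating at +/-6 (hypothetical helper)."""
    return min(FP4_GRID, key=lambda v: abs(x - v))


weights = [0.07, -0.9, 2.4, 2.6, 11.0]
print([quantize_fp4(w) for w in weights])  # prints [0.0, -1.0, 2.0, 3.0, 6.0]
```

With only 15 distinct values available, each weight costs four bits instead of 16 or 32, which is where the size and throughput gains come from. The price is coarse rounding and a narrow range, as the saturated last value shows.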

If models utilize the smaller floating-point values, then performance can be improved by a factor of four. The 288 GB of HBM3E can support LLMs with up to 520 billion parameters.
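The 520-billion-parameter figure can be sanity-checked with simple arithmetic. The sketch below counts weights only and uses decimal gigabytes; it ignores activations, KV cache, and any per-block scaling metadata, so it is an assumption-laden lower bound rather than AMD's sizing method. The helper name `weight_footprint_gb` is illustrative.

```python
HBM_CAPACITY_GB = 288   # per-GPU HBM3E capacity quoted in the article
PARAMS = 520e9          # largest model size quoted in the article

BITS_PER_PARAM = {"FP16": 16, "FP8": 8, "FP6": 6, "FP4": 4}


def weight_footprint_gb(num_params: float, bits: int) -> float:
    """Weights-only footprint in decimal gigabytes (1 GB = 1e9 bytes)."""
    return num_params * bits / 8 / 1e9


for fmt, bits in BITS_PER_PARAM.items():
    gb = weight_footprint_gb(PARAMS, bits)
    verdict = "fits" if gb <= HBM_CAPACITY_GB else "does not fit"
    print(f"{fmt}: {gb:.0f} GB -> {verdict} in {HBM_CAPACITY_GB} GB")
```

Under these assumptions, 520 billion parameters need 260 GB at FP4, which fits in 288 GB, while FP6 (390 GB) and wider formats do not fit on a single GPU.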

As part of the announcement, AMD is supporting the UBB8 industry-standard GPU node in both air-cooled and direct liquid-cooled versions (Fig. 3). Each board carries eight AMD Instinct chips and targets large data centers. Total HBM3E memory in the system is 2.3 TB with a bandwidth of 64 TB/s. Floating-point performance scales from 161 PF for FP4 to 0.63 PF for FP64 values.
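The node-level numbers follow from the per-chip figures. This short sketch reproduces the arithmetic, assuming memory, bandwidth, and compute are split evenly across the eight GPUs; the variable names are illustrative and the per-GPU values are derived here, not quoted from AMD.

```python
GPUS_PER_NODE = 8
HBM_PER_GPU_GB = 288        # per-GPU capacity quoted in the article
NODE_BANDWIDTH_TBPS = 64    # aggregate HBM3E bandwidth quoted in the article
NODE_FP4_PFLOPS = 161       # node-level FP4 figure quoted in the article
NODE_FP64_PFLOPS = 0.63     # node-level FP64 figure quoted in the article

total_hbm_tb = GPUS_PER_NODE * HBM_PER_GPU_GB / 1000      # decimal terabytes
per_gpu_bandwidth = NODE_BANDWIDTH_TBPS / GPUS_PER_NODE   # assumes an even split
per_gpu_fp4 = NODE_FP4_PFLOPS / GPUS_PER_NODE             # assumes an even split
fp4_to_fp64 = NODE_FP4_PFLOPS / NODE_FP64_PFLOPS

print(f"Total HBM3E:        {total_hbm_tb:.1f} TB")
print(f"Bandwidth per GPU:  {per_gpu_bandwidth:.0f} TB/s")
print(f"FP4 per GPU:        {per_gpu_fp4:.1f} PF")
print(f"FP4 / FP64 ratio:   {fp4_to_fp64:.0f}x")
```

Eight chips at 288 GB each give 2,304 GB, which rounds to the quoted 2.3 TB, and the quoted FP4 rate is roughly 256 times the FP64 rate.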

The eight chips are linked via 153-GB/s AMD Infinity Fabric. The 128-GB/s PCI Express (PCIe) Gen 5 links provide outside communication.

ROCm 7.0 Improvements

ROCm provides the underlying software support for AMD’s hardware (Fig. 4). It’s comparable to NVIDIA’s CUDA. One major difference is that ROCm is open source. It enables software to be written so that it can target different hardware platforms like CPUs, GPUs, DPUs, and compute clusters.
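One practical consequence of the CUDA comparison shows up in frameworks. The sketch below uses PyTorch purely as an illustration (the article does not mention it): ROCm builds of PyTorch expose AMD GPUs through the same `torch.cuda` namespace that CUDA builds use, and `torch.version.hip` is set only on ROCm builds. The function name `detect_gpu_backend` is hypothetical.

```python
def detect_gpu_backend() -> str:
    """Report which GPU backend the installed PyTorch build is using, if any."""
    try:
        import torch  # optional dependency; the sketch degrades gracefully without it
    except ImportError:
        return "pytorch-not-installed"

    # ROCm builds reuse the torch.cuda API, so this check covers AMD and NVIDIA GPUs.
    if not torch.cuda.is_available():
        return "cpu-only"

    # torch.version.hip is a version string on ROCm builds and None otherwise.
    if getattr(torch.version, "hip", None):
        return "rocm"
    return "cuda"


print(detect_gpu_backend())
```

Because the device API is shared, code written against `torch.cuda` typically runs on Instinct hardware without source changes, assuming the ROCm build supports the operators involved.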

AMD ROCm Enterprise AI builds on ROCm 7, adding support for Kubernetes and Slurm as well as cluster provisioning and system telemetry.

AMD’s AI Environment

AMD’s Instinct and ROCm take on a wide range of solutions, from embedded applications that have a single CPU or GPU through cloud computing with disaggregated compute and storage. The three main components in higher-end systems are the company’s x86 EPYC “Turin” CPU, the Instinct MI350 Series GPU, and the AMD Pollara 400 NIC. The NIC is designed to support low-latency, 400G Ethernet connectivity. Its P4-based architecture supports ATS and RDMA as well as the new P4DMA.

AMD has large-scale data center solutions built on the latest GPUs (Fig. 6), designed to deliver dense compute. Looking ahead, the company talked about the “Helios” AI-optimized rack solutions slated for 2026 delivery. They will use the next-generation CPU, GPU, and NIC.

ROCm 7 works with the AMD Developer Cloud, which provides a zero-setup environment using Jupyter Notebooks and integration with GitHub. Preinstalled Docker containers support the latest AI tools.

About the Author

William G. Wong

Senior Content Director - Electronic Design and Microwaves & RF

I am Editor of Electronic Design focusing on embedded, software, and systems. As Senior Content Director, I also manage Microwaves & RF and I work with a great team of editors to provide engineers, programmers, developers and technical managers with interesting and useful articles and videos on a regular basis. Check out our free newsletters to see the latest content.

You can send press releases for new products for possible coverage on the website. I am also interested in receiving contributed articles for publishing on our website. Use our template and send to me along with a signed release form.

Check out my blog, AltEmbedded on Electronic Design, as well as my latest articles on this site.

I earned a Bachelor of Electrical Engineering at the Georgia Institute of Technology and a Master’s in Computer Science from Rutgers University. I still do a bit of programming using everything from C and C++ to Rust and Ada/SPARK. I also do a bit of PHP programming for Drupal websites and have posted a few Drupal modules.

I still get hands-on with software and electronic hardware. Some of this can be found in our Kit Close-Up video series. You can also see me in many of our TechXchange Talk videos. I am interested in a range of projects from robotics to artificial intelligence.