Mixing Analog and Digital Spiking Neural Networks for Low-Power Sensors

What you’ll learn:

- What’s the difference between analog and digital spiking neural networks?

- Why mix analog and digital SNNs on one chip?

- Why SNNs are key to always-on machine-learning operation.

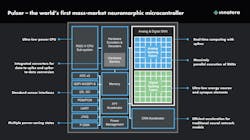

The Pulsar system-on-chip (SoC) developed by Innatera integrates multiple low-power artificial-intelligence/machine-learning (AI/ML) spiking-neural-network (SNN) accelerators that target sensor-based solutions (Fig. 1). I spoke with Innatera’s CEO, Sumeet Kumar, about how this SoC can provide always-on (AON) neural-network operation in battery-powered or energy-harvesting applications.

AON operation and the ability to process all of the data locally offer significant advantages in latency, privacy, and performance compared to cloud-based AI/ML support and more power-hungry, non-SNN, edge-based solutions.

What’s the Difference Between Analog and Digital Spiking Neural Networks?

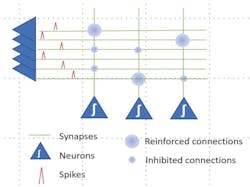

Spiking neural networks, a form of neuromorphic computing, are more closely related to biological neurons than conventional, digital deep neural networks (DNNs) like convolutional neural networks (CNNs). SNNs use time-based “spikes” as input, causing “neurons” to generate additional spikes depending on weights associated with the inputs (Fig. 2). This mimics the way real neurons operate, in contrast to CNNs, which process all of the inputs in parallel.
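To make the spike-and-weight behavior concrete, here is a minimal sketch of a single leaky integrate-and-fire (LIF) neuron, a common textbook SNN model. It is an illustration only: the function name, the `leak` and `threshold` values, and the discrete time steps are assumptions chosen for the example, not a description of how the Pulsar's accelerators are built.

```python
def lif_neuron(input_spikes, weights, leak=0.5, threshold=0.7):
    """Simulate one leaky integrate-and-fire neuron over discrete time steps.

    input_spikes: list of time steps, each a list of 0/1 values (one per input).
    weights: one weight per input.
    Returns a list of 0/1 output spikes, one per time step.
    All parameter values here are illustrative assumptions.
    """
    potential = 0.0
    output = []
    for step in input_spikes:
        # Leak: the stored potential decays a little every time step.
        potential *= leak
        # Integrate: each incoming spike adds the weight of its input.
        potential += sum(w * s for w, s in zip(weights, step))
        if potential >= threshold:
            output.append(1)   # fire an output spike
            potential = 0.0    # reset after firing
        else:
            output.append(0)
    return output


# Two inputs observed over five time steps (1 = spike, 0 = no spike).
spikes_in = [
    [1, 0],
    [1, 0],
    [0, 1],
    [0, 0],
    [1, 1],
]
spikes_out = lif_neuron(spikes_in, weights=[0.5, 0.25])
print(spikes_out)  # [0, 1, 0, 0, 1]
```

The neuron only produces output when enough weighted spikes arrive close together in time. When the inputs are quiet, nothing fires, which is the property that makes spiking models attractive for sparse sensor data.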

SNNs can be implemented as analog or digital circuits. The main difference is how they’re implemented rather than their logical operation. Both utilize weights and trigger neurons through a multi-level array. The analog approach has the advantage of continuous operation and integration as well as very-low-power operation. The digital approach is more flexible and more suitable for some models.

Innatera’s Pulsar includes both analog and digital SNN acceleration, enabling developers to choose which will be used for a particular AI/ML SNN model.

Why Mix Analog and Digital SNNs on One Chip?

The company mixes multiple accelerators on the Pulsar. These include the analog and digital SNN accelerators along with a CNN accelerator and a fast Fourier transform (FFT) accelerator. Each has its own advantages, allowing developers to choose among them depending on the application. The accelerators are optimized for particular aspects of an application; they may or may not be active at a particular time. For example, the very-low-power analog SNN can be used to monitor the sensor and start up the rest of the hardware when it detects certain conditions.

An example of how a mix of models might be used is a smart doorbell that determines when a person is in view. The sensor might be video, infrared, or radar. An SNN is proficient with this type of identification.
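The wake-up pattern described above, where a low-power stage watches the sensor and only starts the rest of the hardware when something interesting appears, can be sketched as a simple two-stage cascade. Everything in this sketch is hypothetical: the function names, the thresholds, and the use of a single number per sensor sample are stand-ins for illustration and do not reflect Innatera's software or hardware interfaces.

```python
def always_on_detector(sample, threshold=0.5):
    """Stand-in for the low-power stage: flags samples worth a closer look."""
    return sample >= threshold


def heavy_classifier(sample, threshold=0.8):
    """Stand-in for a larger model that is powered up only when woken."""
    return sample >= threshold


def process_stream(samples):
    """Run the cascade; return (number of wake-ups, indices of confirmed events)."""
    wakeups = 0
    events = []
    for index, sample in enumerate(samples):
        if not always_on_detector(sample):
            continue  # the later stage stays asleep for this sample
        wakeups += 1
        if heavy_classifier(sample):
            events.append(index)
    return wakeups, events


# Hypothetical activity levels from a doorbell sensor, one value per sample.
samples = [0.1, 0.2, 0.6, 0.9, 0.3, 0.85, 0.4]
wakeups, events = process_stream(samples)
print(f"second stage ran on {wakeups} of {len(samples)} samples; events at {events}")
```

In this toy run the second stage is exercised for only three of the seven samples. The design intent is the same as in the text: the more expensive stage spends most of its time idle.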

Why SNNs Are Key to Always-On Machine-Learning Operation

The Pulsar’s SNN efficiency is about two orders of magnitude better than implementing a similar model in a conventional, digital DNN. This reduction in power requirements radically changes how

The AI/ML accelerators are controlled by a 32-bit RISC-V processor with floating-point support, comparable to an Arm Cortex-M4F. It can handle AI/ML chores, but typically it manages data, communication, and system operation. The processor can sleep while the SNN is operational.

The Pulsar is available in a 2.8- × 2.6-mm wafer-level chip-scale package (WLCSP). Software support comes by way of Innatera’s Talamo suite, which is integrated with PyTorch. Developers can create and test models in PyTorch for implementation on the Pulsar. A simulator provides full-function emulation of the chip, enabling developers to test models on the analog and digital SNN accelerators as well as the CNN accelerator.

About the Author

William G. Wong

Senior Content Director - Electronic Design and Microwaves & RF

I am Editor of Electronic Design focusing on embedded, software, and systems. As Senior Content Director, I also manage Microwaves & RF and I work with a great team of editors to provide engineers, programmers, developers and technical managers with interesting and useful articles and videos on a regular basis. Check out our free newsletters to see the latest content.

You can send press releases for new products for possible coverage on the website. I am also interested in receiving contributed articles for publishing on our website. Use our template and send to me along with a signed release form.

Check out my blog, AltEmbedded on Electronic Design, as well as my latest articles on this site that are listed below.

I earned a Bachelor of Electrical Engineering at the Georgia Institute of Technology and a Master's in Computer Science from Rutgers University. I still do a bit of programming using everything from C and C++ to Rust and Ada/SPARK. I also do a bit of PHP programming for Drupal websites and have posted a few Drupal modules.

I still get hands-on with software and electronic hardware. Some of this can be found on our Kit Close-Up video series. You can also see me on many of our TechXchange Talk videos. I am interested in a range of projects from robotics to artificial intelligence.