Demand for high-quality semiconductors in popular consumer products like smartphones and safety-critical components such as automotive anti-lock braking systems is at an all-time high. This unrelenting need for semiconductors of all types presents a challenge for leading integrated device manufacturers and fabless semiconductor companies to maintain high product yield levels without compromising quality. Incurring costly return material authorizations (RMAs) due to quality issues can have a long-term negative impact on customer relationships. If too many RMAs occur, product manufacturers may decide to design the problematic semiconductor chips out of future products and seek out other manufacturers.

The most effective way to increase quality and limit manufacturing-related RMAs is to reduce the number of escapes: devices that pass the testing phases but are in fact defective or, at best, marginal. Until the advent of real-time big data analytics, it was very difficult to reliably identify and correct escapes before a device was sent to another location for packaging or shipped to a customer. Test data from the manufacturing floor either was not available in time or provided too little information to determine clearly whether a part should be rejected.

Optimal+ Escape Prevention algorithms can correlate historical test data (Figure 1 left) to known RMAs (Figure 1 right) to identify outliers that can be prevented from being shipped into the supply chain in future production runs.

The various tests, from wafer sort to final test, must be diligently monitored because automatic test equipment (ATE) is not 100% accurate. In addition, for many semiconductor companies, testing can occur at many different geographic locations, depending on the size and scale of their manufacturing operations. It is not unusual for wafer manufacturing to occur at a foundry in one country, wafer sort test in a different country, and final test or system-level test in yet a third country, which fragments data across the manufacturing landscape and creates numerous opportunities for escapes. Big data analytics can be tailored to significantly reduce escape occurrences by tracking performance at the level of a chip’s DNA across all ATE, regardless of location, to make a real-time assessment: Is good really good?

Historical perspective on semiconductor quality and escapes

Traditionally, when semiconductor chips failed, companies assumed the problems occurred at the assembly stage. With limited data available to them, most global semiconductor companies would defend their testing capabilities and assert that the ATE produced consistent, fail-proof results. Simply put, semiconductor companies widely agreed that test results were flawless and not to be challenged.

Facing rising demand for higher quality devices with defective parts per million (DPPM) numbers close to zero, semiconductor companies were motivated to take a closer look at quality issues and minimize the number of defective devices. With the arrival of big data analytics, companies turned to these powerful tools to gather more actionable information earlier in the cycle to assess the likelihood of escapes and identify the chief culprits throughout the manufacturing process and across geographies.

These tools also collect data from the individual chip level up to the worldwide manufacturing process, creating a wealth of data to analyze and offering a holistic approach to problem-solving.

According to a customer study, nearly one-third of all failed devices are typically designated No Fault Found: the problem could not be reproduced, and the device seems OK. The other two-thirds of device failures could be traced to the manufacturing process, that is, to escapes: chips that were actually faulty but passed test. Using big data analytics, these escapes can be flagged and prevented before they make it into the supply chain, reducing costly RMAs and maximizing customer satisfaction.

Whether the problem is in the fab process or the test program or simply a test-equipment malfunction, many test escapes can be prevented by tracking and analyzing the DNA of each chip on every wafer from wafer sort to final test.

The same study also indicated that test-equipment malfunction is a significant contributor to chip escapes. Previously, semiconductor operations managers presumed, for lack of contradictory data, that testers were fail-proof. A deeper analysis of Standard Test Data Format (STDF) data showed that ATE could freeze, have dust in the prober, or fail for myriad other reasons, all of which affect the results. Big data analytics also determined that excessive probing resulted in more false-good devices and more escapes.

Semiconductor companies large and small have recognized these weaknesses in their testing process and the opportunity to improve it with big data analytics. They have invested capital in real-time big data analytics solutions to analyze companywide test data, including data from outsourced foundries, to ensure quality and reduce escapes.

Why big data helps assess, detect, and minimize escapes

With big data solutions, terabytes of fragmented parametric test data now can be tracked, monitored, and analyzed 24/7.

The DNA of every chip processed across a global manufacturing supply chain can be collected and analyzed in one central location, enabling more effective decision-making. With this amount of knowledge in hand, semiconductor operations can begin to improve product quality based on more than just a single ATE result.

Many escape-causing issues occur very sporadically and are easily missed when using classic methods to detect them, such as statistical bin limits. Various issues (environmental, equipment-related, test program, or OS bugs) can cause escapes that are difficult to catch in time to take corrective action.

Today, semiconductor companies can assess why bad dice can slip through manufacturing test and land in consumer products. Big data solutions have identified many areas where bad dice managed to slip through testing operations. Here are just a few.

Too many touchdowns

Data analysis enables semiconductor manufacturers to determine how many times each die has been probed, that is, its number of touchdowns. Excessive touchdowns are a significant contributor to device failure because foundries may probe repeatedly to keep yield numbers high without considering the effect that too many touchdowns have on the long-term quality of the chips. Using Optimal+ Escape Prevention, customers can monitor the number of times a good die has been probed and quarantine any die that has been probed more than n times, which prevents potentially damaged devices from being shipped to customers (Figure 2).
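To make the touchdown rule concrete, here is a minimal sketch in Python. It is not the Optimal+ implementation. The function name `dice_to_quarantine`, the `(wafer_id, x, y)` die key, and the probe-log layout are all assumptions made for illustration.

```python
from collections import Counter


def dice_to_quarantine(touchdowns, passing_dice, max_touchdowns):
    """Return passing dice that were probed more than max_touchdowns times.

    touchdowns: iterable of (wafer_id, x, y) tuples, one entry per probe contact
                (hypothetical log format).
    passing_dice: set of (wafer_id, x, y) dice whose final bin was "good".
    """
    counts = Counter(touchdowns)  # touchdowns per die
    return sorted(die for die in passing_dice if counts[die] > max_touchdowns)


# Hypothetical probe log: die (W1, 3, 4) was probed four times.
probe_log = [("W1", 3, 4)] * 4 + [("W1", 3, 5)] * 2 + [("W1", 4, 4)]
good_dice = {("W1", 3, 4), ("W1", 3, 5), ("W1", 4, 4)}

print(dice_to_quarantine(probe_log, good_dice, max_touchdowns=3))
# → [('W1', 3, 4)]
```

The threshold corresponds to the n in the text. Its value would have to come from the product's own reliability data, not from this sketch.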

Human error

Human error is one of the main contributors to test escapes and RMAs. For example, an engineer may forget to adjust test limits, so less stringent tests are applied to the current wafers and many more questionable dice pass inspection. Big data solutions catch these errors by delving into the test results and determining which chips were tested properly.
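One way such a check might work is to compare the limits actually applied in a test run against an approved reference set. The sketch below assumes limits are available as simple `(low, high)` pairs keyed by test name. The function name `loosened_limits` and the test names are hypothetical and do not come from any particular tool.

```python
def loosened_limits(approved, applied):
    """Return names of tests whose applied limits are looser than approved.

    Both arguments map test name -> (low_limit, high_limit).
    A test that is missing from the applied set is also flagged.
    """
    flagged = []
    for name, (ref_lo, ref_hi) in approved.items():
        if name not in applied:
            flagged.append(name)  # test was dropped from the run entirely
            continue
        lo, hi = applied[name]
        if lo < ref_lo or hi > ref_hi:  # window is wider than approved
            flagged.append(name)
    return flagged


# Hypothetical limits: the leakage upper limit was left at a looser value.
approved = {"vdd_leakage_uA": (0.0, 5.0), "vref_V": (1.18, 1.22)}
applied = {"vdd_leakage_uA": (0.0, 8.0), "vref_V": (1.18, 1.22)}

print(loosened_limits(approved, applied))
# → ['vdd_leakage_uA']
```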

ATE freeze

ATE freeze occurs when measurements return the same or similar test results across several consecutive parts. This sporadic and hard-to-catch event can be caused by parts getting stuck in the tester or by tester hardware and software malfunctions. As a result, test results from previous chips are recorded for subsequent parts until the ATE resets itself. In one instance, a semiconductor production line allowed 2,200 units to go untested before detecting a freeze.
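A freeze of this kind shows up as a run of consecutive parts with identical, or nearly identical, readings for the same parametric test. The following sketch illustrates the idea. The function name `find_freezes`, the `min_run` threshold, and the `tolerance` parameter are assumptions chosen for illustration, not values from the study.

```python
def find_freezes(readings, min_run=5, tolerance=0.0):
    """Return (start_index, end_index) pairs for suspected freezes.

    A suspected freeze is a run of at least min_run consecutive readings
    that each differ from the first reading of the run by at most tolerance.
    readings: measurements of one parametric test, in test order.
    """
    freezes = []
    start = 0
    for i in range(1, len(readings) + 1):
        run_ended = (
            i == len(readings)
            or abs(readings[i] - readings[start]) > tolerance
        )
        if run_ended:
            if i - start >= min_run:
                freezes.append((start, i - 1))
            start = i  # next run begins at the reading that broke this one
    return freezes


# Hypothetical readings: parts 3 through 8 all report exactly 1.00.
readings = [1.01, 0.98, 1.03, 1.00, 1.00, 1.00, 1.00, 1.00, 1.00, 0.97, 1.02]

print(find_freezes(readings, min_run=5))
# → [(3, 8)]
```

Because real analog measurements rarely repeat exactly, a long run of equal values is suspicious in itself. A nonzero `tolerance` would also catch the "similar" results mentioned above, at the cost of more false alarms on very stable parameters.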

Partial test program execution

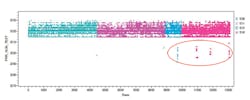

Occasionally, a tester may skip critical tests, so a part is marked as good even though only part of the test program was actually executed. There are many potential causes of this problem, including complex test program flows, tester resource issues, and tester OS bugs. The data analysis in Figure 3 shows dice initially labeled as good but with a low SPEC PRR (part results record) value detected by Optimal+, meaning that the test program executed fewer than the minimum number of required tests on them and they were incorrectly considered to be good devices.
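The under-testing check can be sketched as a comparison between the number of tests executed on each part and a required minimum. The record layout below is hypothetical. In practice, the executed-test count might come from a field such as `NUM_TEST` in the STDF part results record, but this sketch only assumes that some such count is available.

```python
def undertested_good_parts(parts, min_tests):
    """Return IDs of parts binned as good with fewer than min_tests executed.

    parts: list of dicts with keys "part_id", "bin", and "tests_executed"
           (hypothetical record layout).
    """
    return [
        p["part_id"]
        for p in parts
        if p["bin"] == "good" and p["tests_executed"] < min_tests
    ]


# Hypothetical results: the full program runs 120 tests.
parts = [
    {"part_id": "A1", "bin": "good", "tests_executed": 120},
    {"part_id": "A2", "bin": "good", "tests_executed": 87},   # skipped tests
    {"part_id": "A3", "bin": "fail", "tests_executed": 40},   # stopped on fail
]

print(undertested_good_parts(parts, min_tests=120))
# → ['A2']
```

Failing parts are deliberately ignored here, since a test program that stops at the first failure legitimately executes fewer tests.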

Using rules to minimize test time and escapes

By instituting automated algorithmic rules that check for the problems listed above and many more, semiconductor companies can reduce RMAs by 50%, with negligible impact on product yield or overall test time.

In the absence of a big data solution, it’s nearly impossible to positively impact quality without significantly increasing manpower. While engineers can diligently monitor ATE and test results for issues, big data solutions can automate the process by instituting rules that run unattended 24/7. These algorithmic rules can quickly identify test issues such as ATE freezes, excessive probing, and loose testing rules while there still is time to take meaningful action to prevent test escapes.

Many issues such as environmental, equipment-related, test program, or OS bugs can cause a tester to skip critical tests, enabling a part to be marked as good even though only part of the test program actually was executed. With big data analytics, semiconductor companies now can create an automated rule to raise an alarm or even rebin if the chip is under-tested.
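As an illustration of how such automated rules might be organized, the sketch below applies a list of rules to each passing part and either raises an alarm or rebins the part. All class names, field names, and thresholds are hypothetical. It shows the general pattern only and does not describe any vendor's rule engine.

```python
from dataclasses import dataclass, replace
from typing import Callable


@dataclass(frozen=True)
class Part:
    part_id: str
    bin_name: str        # "good", "fail", ...
    tests_executed: int
    touchdowns: int


@dataclass(frozen=True)
class Rule:
    name: str
    violated: Callable[[Part], bool]
    action: str          # "alarm" or "rebin"


def apply_rules(parts, rules, reject_bin="quarantine"):
    """Check every part currently binned "good" against each rule.

    Returns (updated_parts, alarms), where alarms is a list of
    (part_id, rule_name) pairs. A "rebin" rule also moves the part
    to reject_bin, after which no further rules apply to it.
    """
    updated, alarms = [], []
    for part in parts:
        for rule in rules:
            if part.bin_name == "good" and rule.violated(part):
                alarms.append((part.part_id, rule.name))
                if rule.action == "rebin":
                    part = replace(part, bin_name=reject_bin)
        updated.append(part)
    return updated, alarms


# Hypothetical thresholds: 120 required tests, at most 3 touchdowns.
rules = [
    Rule("under-tested", lambda p: p.tests_executed < 120, "rebin"),
    Rule("excess touchdowns", lambda p: p.touchdowns > 3, "alarm"),
]
parts = [
    Part("A1", "good", 120, 1),
    Part("A2", "good", 87, 1),
    Part("A3", "good", 120, 5),
    Part("A4", "fail", 40, 1),
]

updated, alarms = apply_rules(parts, rules)
print(alarms)
# → [('A2', 'under-tested'), ('A3', 'excess touchdowns')]
print([p.bin_name for p in updated])
# → ['good', 'quarantine', 'good', 'fail']
```

Keeping each rule as a small predicate with an attached action makes it straightforward to add further checks, such as freeze detection, without changing the loop that runs them.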

Conclusion: the real cost of escapes

What does an RMA cost a company? On average, RMAs cost semiconductor companies hundreds of thousands to millions of dollars each year. Beyond lost revenue, too many RMAs can damage a semiconductor supplier’s relationship with its customer, leading to loss of business to another company or to unfavorable contract terms for the next product revision. The impact can be enormous: customers may ultimately design a part out of their product if low-quality or defective parts cause too many problems. In an era of multiple-source supplier relationships, reducing RMAs is more important than ever. OEMs cannot tolerate low-quality suppliers and will move to one that offers superior product quality.

In the end, semiconductor companies must carefully assess their quality because their customers are demanding strict DPPM numbers previously reserved for the likes of automotive, safety-critical, and medical device companies. Today’s semiconductor customers expect their devices to be error-free, and they expect accountability from their semiconductor suppliers. Real-time big data solutions on the global manufacturing floor can address these important issues and keep semiconductor suppliers, customers, and end users happy.

About the author

Michael Schuldenfrei has more than 25 years of software and information technology experience and currently is the chief technology officer of Optimal+. During his nine-year tenure at the company, he served as vice president of R&D and chief architect before becoming CTO in 2012.