Computational Storage Device Verification: A Challenging Task

The path to success of the solid-state drive (SSD) as the ultimate replacement for the hard-disk drive (HDD) has been littered with technical obstacles that have daunted the engineering community for several years. And the challenges are not over.

Three bottlenecks continue to slow the progress of SSD adoption. First, the NAND flash storage media has inherent quirks of its own. They include finite life expectancy, the need for wear leveling and garbage collection, performance degradation over time, finicky reliability, random latency, and others that had to be addressed in the SSD controller via ingenious solutions.

Second, the bandwidth and latency of the host computer interface have never met what the SSD requires to deliver its full potential. From PCIe 4 to PCIe 5, non-volatile memory express (NVMe), GPUDirect, and CXL, the interface bandwidth and latency improvements, while remarkable, have always lagged behind the curve. Figure 1 charts improvements of compute engines, degradations in bandwidth per core, and increase in latency over time. The trends will continue for the foreseeable future.

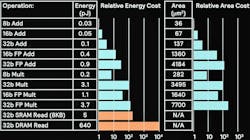

Third, the physics of data movement has constantly interfered with performance and power consumption targets. It may come as a surprise to realize that the movement of data between storage and compute engines consumes orders of magnitude more energy than the computation itself.

The table below enumerates the energy consumption of different types of computations and memory accesses. It shows that energy consumption is dominated by data movement. For example, an 8-bit integer addition consumes 0.03 picojoules (pJ), while the same operation in 8-bit floating point burns 10 times more energy. Reading from an 8-kB SRAM, the consumption jumps to 5 pJ, roughly another order of magnitude, and, far worse, reading from a large DRAM, energy consumption swells by a further two orders of magnitude to 640 pJ.
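The ratios implied by these figures can be checked with a few lines of Python. This is only a sketch built from the numbers quoted in the text; the floating-point value is not stated directly and is derived here from the "10 times" statement, so treat it as an assumption.

```python
# Energy per operation in picojoules, as quoted in the text above.
# The floating-point entry is an assumption derived from "10 times more".
ENERGY_PJ = {
    "8-bit integer add": 0.03,
    "8-bit floating-point add": 0.03 * 10,
    "8-kB SRAM read": 5.0,
    "DRAM read": 640.0,
}


def relative_cost(baseline="8-bit integer add"):
    """Return each operation's energy as a multiple of the baseline."""
    base = ENERGY_PJ[baseline]
    return {op: pj / base for op, pj in ENERGY_PJ.items()}


if __name__ == "__main__":
    for op, ratio in relative_cost().items():
        print(f"{op:26s} {ENERGY_PJ[op]:8.2f} pJ  {ratio:10.0f}x")
```

Measured against the integer addition, a single DRAM read costs on the order of twenty thousand additions, which is why moving data dominates the energy budget.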

Computational Storage Device

To reduce overall energy consumption in an SSD system, data movement between storage and compute engine must be drastically reduced. That’s the driving force behind the computational storage device (CSD) architecture.

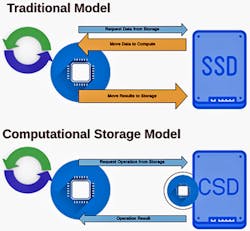

The CSD concept is rather simple: perform computations locally within the SSD and eliminate data transfers with the host computer (Fig. 2).

In the traditional model, the blue line on top shows the data request from the host to storage. Data is read from the SSD and moves across the bus to the host. The host manipulates the data in local RAM or local cache and moves the result back to the SSD. The two orange lines represent massive power consumption, significant performance degradation, and rather slow execution.

In the simplified model, a small processing element sits next to the storage. The request from the host is executed locally by the processing element, the data is manipulated locally, and only the result is sent back to the host. The stored data itself never crosses the bus between storage and host, thus saving power, increasing performance, and accelerating the execution.
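The difference between the two models can be illustrated with a toy Python sketch that counts the bytes crossing the host bus for a simple filter operation. The record size, the `Bus` class, and both function names are hypothetical and chosen only for illustration; real devices and workloads will behave differently.

```python
from dataclasses import dataclass

RECORD_SIZE = 100  # hypothetical size of one stored record, in bytes


@dataclass
class Bus:
    """Counts the bytes that cross the host interface."""
    bytes_moved: int = 0


def host_side_filter(records, predicate, bus):
    """Traditional model: every record crosses the bus, the host filters."""
    bus.bytes_moved += len(records) * RECORD_SIZE
    return [r for r in records if predicate(r)]


def in_storage_filter(records, predicate, bus):
    """CSD model: the drive filters locally, only matches cross the bus."""
    matches = [r for r in records if predicate(r)]
    bus.bytes_moved += len(matches) * RECORD_SIZE
    return matches


if __name__ == "__main__":
    data = list(range(1000))
    wanted = lambda r: r % 100 == 0
    traditional, csd = Bus(), Bus()
    host_side_filter(data, wanted, traditional)
    in_storage_filter(data, wanted, csd)
    print(traditional.bytes_moved, csd.bytes_moved)
```

Both functions return the same result; only the traffic on the bus differs, and the gap widens as the filter becomes more selective.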

Several applications benefit from the CSD, including image recognition, edge computing, AI/ML, real-time analytics, database queries, and others (Fig. 3).

Database queries are particularly interesting because the database can be scanned, and the data processed locally, without using any PCIe bandwidth. For example, on the Transaction Processing Performance Council's TPC-H benchmark, a popular way to evaluate the ability of a storage device to handle database queries, performance acceleration ranges from 100% to 300%.

CSD Implementations

The industry devised two CSD architectures, one making use of a dedicated field-programmable gate array (FPGA) and the other deploying an application processor (AP).

CSD Based on FPGAs

The adoption of an FPGA for local processing in an SSD has been spearheaded by Samsung with its SmartSSD. By attaching an FPGA to an SSD on the same board and configuring the programmable device with compute elements, such as Arm cores or DSPs, data can be processed locally, providing a significant increase in performance.

The scheme can accelerate a plurality of applications from artificial intelligence/machine learning (AI/ML) to compression, database queries, and many others.

Adopting an FPGA to accelerate SSD operations has advantages and drawbacks. The main benefit is improved performance, but it comes with strings attached. On the one hand, tools and software for working with FPGAs are lacking. On the other, FPGA design expertise is uncommon. The FPGA also has to be made aware of the files inside the SSD to be able to retrieve and process them. That is, developers must create a system for file management, including reading, processing, and writing files back to the storage fabric.

CSD Based on Application Processors

A much simpler approach, used by companies like NGD Systems, calls for deploying an AP such as the Arm Cortex-A53, in addition to a real-time processor that manages the host traffic and time-sensitive operations of the SSD. The AP typically includes Arm Cortex-A processors, and multicore configurations are popular. The AP is required to run Linux, lending the name “on-drive Linux” to the approach.

Several advantages stem from running Linux. First, Linux is natively file-aware, and using Linux ensures the consistency of the file system. It also avoids the need to reinvent something that’s readily available. The deployment is straightforward through existing infrastructures. A wide range of development tools are already in place, and many applications and protocols are available. Further, Linux is served by a vast open-source developer community.

A CSD running Linux may appear to the host as a standard NVMe SSD when installed in a PCIe slot. Because it’s running Linux locally, the user can establish a secure-shell (SSH) connection into the drive and view it like any other host on the network. The CSD morphs into a network-attached, headless server running inside an SSD. The range of applications that can directly manipulate files stored on the NAND is limited only by the imagination.
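As a rough illustration of what that SSH access makes possible, the Python sketch below runs a standard `grep` on the drive and brings back only a match count. The target name and file path in the usage note are hypothetical, and the sketch assumes the drive's Linux image provides an SSH server and `grep`, which the text implies but does not spell out.

```python
import shlex
import subprocess


def build_remote_grep(target, pattern, path):
    """Build an ssh command that counts matching lines on the drive itself."""
    # shlex.join quotes each argument so the remote shell sees it intact.
    remote_cmd = shlex.join(["grep", "-c", pattern, path])
    return ["ssh", target, remote_cmd]


def count_matches_on_drive(target, pattern, path):
    """Run the search on the drive; only the count travels back to the host.

    Returns 0 when grep prints nothing, for example if the connection fails.
    """
    result = subprocess.run(
        build_remote_grep(target, pattern, path),
        capture_output=True,
        text=True,
        check=False,  # grep exits with status 1 when there are no matches
    )
    return int(result.stdout.strip() or 0)
```

A call such as `count_matches_on_drive("root@csd0.example", "ERROR", "/mnt/nand/log.txt")` would scan the file where it is stored, so the file contents never travel to the host.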

An early example of CSD, presented at the Flash Memory Summit in 2017, was “In-Situ Processing,” a data-ingestion scenario where a 0.5-PB data set was read into two CPUs. With a standard SSD, the reading took 4.5 hours, but with the multicore AP advantage of two CSDs, it took only 0.25 hours. Both had the same total aggregated bandwidth of 380 GB/s.

Examples are now found in a large range of applications that take advantage of compute “in-situ.”

As storage systems move to the NVMe-over-Fabrics (NVMe-oF) protocol, which is designed to connect hosts to storage across a network fabric using the NVMe protocol, disaggregated storage will be even more important. With CSD, designers can disaggregate and move compute “in situ” to improve performance, lower power usage, and free up precious PCIe bandwidth for the rest of the system. While NVMe-oF reduces some of the storage network bottleneck, only CSD scales the needed compute resources with the storage capacity.

Performance Scalability of CSD

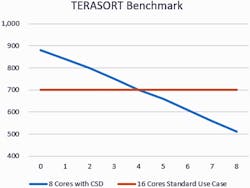

From a data standpoint, CSD scales linearly not only in capacity, but also in performance. Adding SSDs to servers in data centers scales only the amount of storage, not the performance, which quickly hits a plateau. Since every CSD includes a processor, adding CSDs to servers in data centers linearly increases the total number of processors managing data and storage, and scales the performance.
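The core-count argument can be written down as a one-line model. The sketch below is purely illustrative: the host core counts and the four cores per CSD are assumed values, and it counts processors only, saying nothing about how fast any particular benchmark actually runs.

```python
def total_cores(host_cores, devices, cores_per_device):
    """Cores available to process data: host cores plus on-drive cores."""
    return host_cores + devices * cores_per_device


if __name__ == "__main__":
    for n in (1, 2, 4, 8):
        ssd = total_cores(host_cores=16, devices=n, cores_per_device=0)
        csd = total_cores(host_cores=8, devices=n, cores_per_device=4)
        print(f"{n} drives: SSD config {ssd} cores, CSD config {csd} cores")
```

With conventional SSDs the count stays flat no matter how many drives are added, while with CSDs it grows by a fixed amount per drive.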

Figure 4 charts the TERASORT benchmark and compares the deployment of SSDs with 16 cores versus CSDs with eight cores by increasing their numbers from 1 to 8. While the number of SSDs doesn’t change the performance, adding CSDs accelerates the performance. The cross point occurs at four units. If one CSD takes about 850 ms to run a TERASORT benchmark, eight CSDs run the same benchmark in about 500 ms.

CSD Verification

The presence of processors in CSDs greatly increases the complexity of both the hardware and the embedded software sides of designs. CSDs add an entire Linux stack and applications to the already complex firmware, making the verification task a challenging endeavor. In fact, verifying a CSD is similar to testing an entire computer.

If the CSD embodies an FPGA, the verification undertaking requires the use of FPGA tools—and FPGA hardware/software debug is not trivial.

A couple of years ago, traditional SSD verification approaches were derailed when the non-deterministic nature of the storage interfered with the hyperscale data-center requirements. New verification methods had to be conceived in the form of emulation-based virtual verification. The virtualization of the SSD allowed for pre-silicon performance and latency testing within 5% of actual silicon. VirtuaLAB from Mentor, a Siemens Business, is an example of the virtualization methodology.

Building on top of tools and expertise developed to support a networking verification methodology, a new set of tools was created for the verification of CSD designs from block level all the way to system level.

Starting with a PCIe/NVMe standard host interface setup, system-level verification includes six major parts:

1. Virtual NVMe/PCIe host running real-world applications on Quick EMUlator (QEMU) to implement host traffic.

2. Protocol Analyzer for visibility on all interfaces like NVMe, PCIe, and the NAND fabric.

3. Hycon software stack to boot Linux and run applications virtually with the ability to save the state of the system at any point, and restart from there as needed.

4. Emulation platform to emulate the CSD design under test (DUT) in pre-silicon with real-world traffic.

5. Soft models for memory interfaces like NAND, DDR4, and SPI NOR.

6. Codelink to run and debug the full system firmware at offline speeds around 50 MHz.
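The save-and-restart capability mentioned in item 3 is worth a small illustration, because it lets a long Linux boot be paid for once and then reused across many tests. The Python sketch below is a toy model only; the `EmulatedSystem` class, its methods, and the cycle counts are hypothetical and are not the interface of Hycon or of any real emulation tool.

```python
import copy


class EmulatedSystem:
    """Toy stand-in for an emulated CSD; not the API of any real tool."""

    def __init__(self):
        self.state = {"booted": False, "cycles": 0, "log": []}

    def boot_linux(self, cycles=1_000_000):
        """Simulate an expensive boot by charging a large cycle count."""
        self.state["cycles"] += cycles
        self.state["booted"] = True
        self.state["log"].append("boot")

    def run_test(self, name, cycles=10_000):
        """Simulate a short test that requires a booted system."""
        if not self.state["booted"]:
            raise RuntimeError("system must be booted before running tests")
        self.state["cycles"] += cycles
        self.state["log"].append(name)

    def save(self):
        """Return an independent snapshot of the full system state."""
        return copy.deepcopy(self.state)

    def restore(self, snapshot):
        """Roll the system back to a previously saved snapshot."""
        self.state = copy.deepcopy(snapshot)


if __name__ == "__main__":
    dut = EmulatedSystem()
    dut.boot_linux()
    after_boot = dut.save()  # checkpoint taken once, right after boot
    for test in ("test_a", "test_b"):
        dut.restore(after_boot)  # each test starts from the same clean state
        dut.run_test(test)
        print(test, dut.state["cycles"], dut.state["log"])
```

Each test starts from an identical post-boot state, so a failure in one test cannot contaminate the next and the boot cost is not repeated.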

The entire environment provides a seamless hardware and software debug environment, with 100% visibility of all signals and software.

Conclusions

CSD systems are emerging as the must-have peripherals for intensive storage demands required by leading-edge industries such as AI/ML, 5G and self-driving vehicles. Their complexity challenges the traditional pre-silicon verification approach, increasing the risks of missing the critical time-to-market window.

A new verification method based on the virtualization of the testbed to drive a CSD DUT is necessary to meet the challenge. Through virtualization, complete system verification, including full firmware validation, can be carried out at high speed to accelerate time-to-market, and possibly perform architectural explorations to create the optimal solution for a specific task.

Authors’ Note: VirtuaLAB and Veloce are available from Mentor, a Siemens Business. More information can be found at https://bit.ly/3dF6nmC

Dr. Lauro Rizzatti is a verification consultant and industry expert on hardware emulation. Previously, Dr. Rizzatti held positions in management, product marketing, technical marketing and engineering.

Ben Whitehead has been in the storage industry developing verification solutions for nearly 20 years working for LSI Logic, Seagate and, most recently, managing SSD controller teams at Micron. His leadership with verification methodologies in storage technologies led him to his current position as a Storage Product Specialist at Mentor, a Siemens Business.
