Working Toward a Standardized Haptics Coding Format

What you’ll learn:

- What’s the rationale behind standardizing a haptics coding format?

- Key components of the Call for Proposals.

- What are MUSHRA tests?

At its 132nd meeting in October 2020, MPEG (Moving Picture Experts Group) issued a Draft Call for Proposals (CfP) on the Coded Representation of Haptics. In the press release announcing it, MPEG characterized the CfP as a “call for technologies that enable efficient representation and compression of time-dependent haptic signals and are suitable for the coding of timed haptic tracks that can be synchronized with audio and/or video media.”

In other words, MPEG was announcing its intention to standardize a haptics coding format and associated decoder. At the same meeting, the MPEG roadmap was updated to show “haptics” as one of the standards under development. These twin developments represent small but significant steps in the progress toward an MPEG haptics standard.

In this article, we take a closer look at the rationale behind standardizing a haptic coding format and highlight the salient parts of the CfP. Readers are directed to the CfP document [1] itself for all the details.

Why an MPEG Haptics Standard?

Haptics provide an additional layer of entertainment and sensory immersion to the user. Therefore, the user experience and enjoyment of media content, be it in MP4 files or streams such as ATSC 3.0 broadcasts, streaming games, and mobile advertisements, can be significantly enhanced by the judicious addition of haptics to the audio/video content.

To that end, haptics has been proposed as a first-order media type, akin to audio and video, in the ISOBMFF standard (ISO/IEC 14496 Part 12). The ISOBMFF is a foundational MPEG standard that forms the basis of most media files being created and consumed in the world today. My previous article explains the significance and impact of standardizing haptics in the ISOBMFF.

Further, haptics has also been proposed as an addition to the MPEG-DASH standard (ISO/IEC 23009-1:2019) to signal the presence of haptics in the MP4 segments to DASH streaming clients. Lastly, the MPEG-I Phase 2 use cases have been augmented with haptics [2], resulting in a set of haptics-specific requirements for MPEG-I Phase 2 [3]. MPEG-I is the MPEG standard dealing with immersive media, including 360-degree video and XR (VR/AR) media. All of these proposals are in various stages of the MPEG standardization process.

These ongoing haptics standardization efforts highlight the need to abstract playback hardware from content-creation technologies, so that content creators can reliably and meaningfully incorporate haptics into the experiences intended for a broad set of consumers. A standardized coded representation of haptics will help in that regard.

The standard haptics coding format (and associated standardized decoder) will facilitate the incorporation of haptics into the ISOBMFF, MPEG-DASH, and MPEG-I standards. This will incentivize adopters of these standards, such as content creators and media/streaming content providers, to incorporate haptics into their offerings.

Two-Phased Approach

The detailed requirements identified in the MPEG-I Phase 2 Requirements [3] were abstracted into two smaller sets. Doing so simplifies the evaluation of submissions and explicitly separates the near-term, standards-ready requirements (Basic Haptics) from those that represent advanced functionality (Advanced Haptics).

Requirements pertaining to Basic Haptics focus on the coding of time-dependent haptic signals and are suitable for coding of timed-haptic experiences that may be synchronized with audio and/or video media. These requirements are suitable for near-term usage and intended to enable efficient coding of haptics.

Requirements pertaining to Advanced Haptics focus on interactive haptic signals that are useful for coding fully immersive, interactive XR experiences. In general, Advanced Haptics are intended to support user-object interactions common in such experiences.

Basic Haptics are covered by the CfP (Phase 1) that’s the subject of this article. Advanced Haptics will be handled in the CfP (Phase 2) expected sometime in 2021.

CfP Details

Workflow

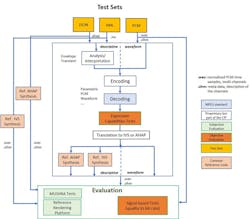

Figure 1 shows the haptics standardization workflow in this CfP from the input test signals to the evaluation. As is the norm with all MPEG standardization, the coded representation and decoder will be standardized. The encoding and rendering implementations are outside the scope of MPEG and best left to vendor differentiation. That said, a reference rendering platform will be needed to test and evaluate the candidate proposals.

Further, the test set will contain a combination of AHAP, IVS, and PCM + OHM haptic files. AHAP is the Apple Haptic and Audio Pattern [4] JSON-based format, and IVS is an Immersion-specific XML-based format. OHM refers to the Object Haptic Metadata format, which carries metadata such as the gain, the location on the body where haptic sensors might be placed (e.g., haptic vests, belts, or gloves) in the case of multi-track haptic systems, and other relevant information that describes the haptic signals in each channel. All of these formats are described in detail in Reference 5.

Respondents to the CfP are free to use any encoding mechanism of their choice, but they must be able to ingest test data in all three of these formats. As shown in Figure 1, respondents proposing a non-PCM coded representation must use a consistent synthesis method to generate PCM data from the decoded representation.
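To make the idea of synthesizing PCM from a descriptive representation more concrete, here is a minimal sketch that renders a tiny AHAP-style JSON pattern into PCM samples. The JSON keys follow Apple's published AHAP layout, but the sample rate, the 150-Hz carrier, the 10-ms transient length, and the linear intensity-to-amplitude mapping are all assumptions made for illustration. This is not the common reference synthesis used in the CfP.

```python
import json
import math

# Hypothetical rendering parameters (not taken from the CfP).
SAMPLE_RATE_HZ = 8000
CARRIER_HZ = 150.0          # assumed actuator drive frequency
TRANSIENT_SECONDS = 0.010   # assumed length of a "transient" event

AHAP_TEXT = """
{
  "Version": 1.0,
  "Pattern": [
    {"Event": {"Time": 0.0, "EventType": "HapticTransient",
               "EventParameters": [
                 {"ParameterID": "HapticIntensity", "ParameterValue": 1.0}]}},
    {"Event": {"Time": 0.1, "EventType": "HapticContinuous",
               "EventDuration": 0.2,
               "EventParameters": [
                 {"ParameterID": "HapticIntensity", "ParameterValue": 0.5}]}}
  ]
}
"""


def event_intensity(event):
    """Return the HapticIntensity of an event, defaulting to 1.0."""
    for param in event.get("EventParameters", []):
        if param["ParameterID"] == "HapticIntensity":
            return float(param["ParameterValue"])
    return 1.0


def synthesize_pcm(ahap_text):
    """Render each event as a sine burst scaled by its intensity."""
    pattern = json.loads(ahap_text)["Pattern"]
    events = [item["Event"] for item in pattern if "Event" in item]
    spans = []
    for event in events:
        duration = event.get("EventDuration", TRANSIENT_SECONDS)
        start = int(round(event["Time"] * SAMPLE_RATE_HZ))
        length = int(round(duration * SAMPLE_RATE_HZ))
        spans.append((start, length, event_intensity(event)))
    total = max(start + length for start, length, _ in spans)
    pcm = [0.0] * total
    for start, length, amplitude in spans:
        for n in range(length):
            phase = 2.0 * math.pi * CARRIER_HZ * n / SAMPLE_RATE_HZ
            pcm[start + n] += amplitude * math.sin(phase)
    return pcm


pcm = synthesize_pcm(AHAP_TEXT)
```

A real synthesizer would also have to account for sharpness, parameter curves, and the response of the target actuator, all of which this sketch ignores.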

Finally, PCM equivalents of all test signals will be used for the objective tests and as references for the subjective tests. The common reference synthesis will be made available to all proponents to ensure a level playing field (Fig. 1, again). All decoded signals will be evaluated using the Expressive Capabilities Tests to ensure that translation doesn’t mask the expressive capabilities of the proponent’s coding.
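As an illustration of how a decoded signal might be compared against its PCM reference, the sketch below computes a plain signal-to-noise ratio. The article does not say which objective metrics the CfP uses, so SNR is an assumption chosen for simplicity, and the function name is hypothetical.

```python
import math


def snr_db(reference, decoded):
    """Signal-to-noise ratio in dB of a decoded signal against its reference."""
    if len(reference) != len(decoded):
        raise ValueError("signals must have the same length")
    signal_power = sum(r * r for r in reference)
    noise_power = sum((r - d) ** 2 for r, d in zip(reference, decoded))
    if noise_power == 0.0:
        return math.inf       # decoded signal is identical to the reference
    if signal_power == 0.0:
        return -math.inf      # silent reference, but the decoder added noise
    return 10.0 * math.log10(signal_power / noise_power)


# A 100-Hz sine sampled at 8 kHz, and a coarsely quantized copy standing in
# for the output of a lossy codec.
reference = [math.sin(2.0 * math.pi * 100.0 * n / 8000.0) for n in range(800)]
quantized = [round(x * 127) / 127 for x in reference]
quantized_snr = snr_db(reference, quantized)
```

Higher values mean less distortion was introduced between the reference and the decoded output.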

This workflow ensures that the final haptics coding standard:

- Supports PCM-based waveform inputs.

- Supports text-based descriptive inputs (e.g., JSON or XML).

- Has a unified architecture to the greatest extent possible.

Test Content

The objective was to include the kinds of haptic effects likely to be encountered by users in MPEG-I application scenarios supported by the requirements. This includes the sensations provided by vibrotactile feedback as well as open-loop directional force feedback (kinesthetic haptics). We will be using test signals extracted from content provided by Immersion, Apple, InterDigital, and Actronika.

Evaluation

Proponent submissions will be subjected to a three-pronged evaluation procedure that includes:

- Objective Signal-based Tests to measure distortion, if any, introduced into the PCM signal by the encoding

- Expressive Capabilities Tests to ensure that the coded representations can satisfy Phase 1 requirements through coded signaling in addition to PCM signaling

- Subjective Tests to enable human test subjects to rate the proponent submissions using a modified MUSHRA [6] test framework on a reference rendering platform

The results of these three types of tests will be used to create a comprehensive, weighted figure of merit to determine the best performing proponent submission.
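One simple way to picture a weighted figure of merit is as a weighted average of the three test results. In the sketch below, the weights, the 0-to-1 normalization of each score, and the proponent names and values are all hypothetical. The article does not state how MPEG combines the results.

```python
from dataclasses import dataclass


@dataclass
class ProponentScores:
    objective: float    # assumed to be normalized to the range 0..1
    expressive: float   # assumed to be normalized to the range 0..1
    subjective: float   # assumed to be normalized to the range 0..1


# Hypothetical weights; the real weighting is not given in the article.
WEIGHTS = {"objective": 0.25, "expressive": 0.25, "subjective": 0.5}


def figure_of_merit(scores, weights=WEIGHTS):
    """Weighted average of the three test results."""
    total_weight = sum(weights.values())
    weighted_sum = (
        weights["objective"] * scores.objective
        + weights["expressive"] * scores.expressive
        + weights["subjective"] * scores.subjective
    )
    return weighted_sum / total_weight


# Made-up scores for two made-up proponents.
submissions = {
    "proponent_a": ProponentScores(objective=0.8, expressive=1.0, subjective=0.7),
    "proponent_b": ProponentScores(objective=0.9, expressive=0.5, subjective=0.8),
}
merits = {name: figure_of_merit(s) for name, s in submissions.items()}
best = max(merits, key=merits.get)
```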

Subjective MUSHRA Tests

While the Objective Signal-based Tests and Expressive Capabilities Tests are essential, human beings are still the ultimate arbiters of whether the coding process has degraded haptic feedback quality. This is where subjective tests are useful, wherein trained test subjects provide a comparative rating of the proponent submissions over several test signals, rendered on a reference test platform.

The MUSHRA (MUltiple Stimuli with Hidden Reference and Anchor) test, defined in ITU-R BS.1534-3 [6], is a well-known methodology for conducting audio-codec listening tests by human experts. It’s been used extensively in MPEG standardization for the subjective evaluation of audio codecs.

A modified version of MUSHRA will be used for the subjective evaluation of haptic-codec proponent submissions. We say “modified” because instead of using loudspeakers (as in the audio-codec tests), we will be using a custom-built mobile handheld device with a single haptic actuator embedded in it.

Test subjects will hold the device in one hand and interact with the test software running on the PC with their other hand. As each piece of test content is “played” on the PC, the subject feels the corresponding haptic effect through the handheld device and rates the experience on a scale of 0 to 100, according to the MUSHRA guidelines. Each trial of the test will contain the uncoded reference signal (both as open and hidden reference) as well as the coded representations of the same test signal from all of the proponents, presented in random order.

Subjects can experience each effect as many times as they want before they score it. Test subjects are effectively comparing the proponent submissions with each other and the reference signal during the test. As per MUSHRA rules, subjects will go through a training session to familiarize themselves with the test software, protocol, and handheld device.
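The trial structure described above can be sketched as follows: an open reference, plus a shuffled set of anonymously labeled stimuli that contains the hidden reference and one coded version per proponent. The function names, the dictionary layout, and the proponent names are hypothetical. Anchor stimuli are left out because the article does not describe how they are handled in the modified test.

```python
import random


def build_trial(test_signal, proponents, rng):
    """Return the open reference plus blind stimuli in random order."""
    stimuli = [{"source": "hidden_reference", "signal": test_signal}]
    stimuli += [{"source": name, "signal": test_signal} for name in proponents]
    rng.shuffle(stimuli)
    # Subjects see only anonymous labels, never the source of a stimulus.
    blind = {f"stimulus_{i + 1}": s for i, s in enumerate(stimuli)}
    return {"open_reference": test_signal, "stimuli": blind}


def validate_score(score):
    """MUSHRA ratings lie on a scale of 0 to 100."""
    if not 0 <= score <= 100:
        raise ValueError("score must be between 0 and 100")
    return score


trial = build_trial("short_effect_01", ["proponent_a", "proponent_b"],
                    random.Random(0))
```

Passing a seeded `random.Random` keeps the presentation order reproducible for later analysis, while different seeds give different orders across trials.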

Proponents will need to provide test signals at three different bitrates of 1 kb/s, 16 kb/s, and 32 kb/s (these bitrates are under active discussion; they could change in the Final CfP). While these bitrates might seem to be quite low compared to high-definition audio and video, they add up when you’re dealing with multi-track haptic systems (like haptic bodysuits) with several haptic channels. Scores are recorded for each proponent for all pieces of test content in each test condition, and the results are analyzed to arrive at a winner for each test condition.
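A quick calculation shows how these per-signal bitrates add up in a multi-track system. The 16-channel bodysuit below is a hypothetical example, and the sketch assumes every channel is coded independently at the same bitrate.

```python
# Draft CfP bitrates from the article, in kb/s.
BITRATES_KBPS = (1, 16, 32)

# Hypothetical channel count for a multi-track haptic bodysuit.
HYPOTHETICAL_BODYSUIT_CHANNELS = 16


def aggregate_bitrate_kbps(num_channels, per_channel_kbps):
    """Total bitrate if each channel is coded independently at the same rate."""
    if num_channels < 1:
        raise ValueError("need at least one channel")
    return num_channels * per_channel_kbps


totals = {
    rate: aggregate_bitrate_kbps(HYPOTHETICAL_BODYSUIT_CHANNELS, rate)
    for rate in BITRATES_KBPS
}
```

Under these assumptions, the highest draft bitrate comes to 512 kb/s for the suit as a whole.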

Test-Signal Categorization for MUSHRA Tests

The following categorization will be used to divide the vibrotactile test signals (from all test content) for the MUSHRA tests:

- Short: A short effect with a simple envelope (e.g., ADSR), where the envelope is the salient characteristic of the effect. Could range anywhere from 20 to 1000 ms.

- Long: A long effect where the placement and envelopes of its subcomponents are the salient characteristics of the effect. Could range anywhere from 1000 to over 5000 ms.

Each of the two vibrotactile categories (Short and Long) will be tested at each of the three bitrates listed above, for a total of six MUSHRA tests for vibrotactile haptics. A third set of MUSHRA tests, for kinesthetic haptics, will likewise have one test for each of the three bitrates, for a grand total of nine MUSHRA tests.
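The test matrix can be enumerated in a few lines. The duration-based `categorize` helper is a simplification: the article defines the categories by their salient characteristics and gives durations only as typical ranges, and treating exactly 1000 ms as Long is an assumption.

```python
from itertools import product

BITRATES_KBPS = (1, 16, 32)
VIBROTACTILE_CATEGORIES = ("short", "long")


def categorize(duration_ms):
    """Rough duration-based split; the 1000-ms boundary choice is assumed."""
    return "short" if duration_ms < 1000 else "long"


def mushra_test_matrix():
    """List every (modality, category, bitrate) combination to be tested."""
    tests = [
        ("vibrotactile", category, bitrate)
        for category, bitrate in product(VIBROTACTILE_CATEGORIES, BITRATES_KBPS)
    ]
    tests += [("kinesthetic", None, bitrate) for bitrate in BITRATES_KBPS]
    return tests


tests = mushra_test_matrix()
```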

Each MUSHRA test session will be limited to 30 minutes to prevent test subject fatigue. This means that some of the nine tests could require multiple sessions, depending on the number of test signals. Logistical details of the test sites, protocols, and test content extraction are all in progress.
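To see how the 30-minute limit translates into session counts, consider the rough estimate below. The number of test signals and the minutes spent per signal are made-up values, and the sketch assumes that the rating of a single test signal is never split across two sessions.

```python
import math

SESSION_LIMIT_MINUTES = 30  # per-session limit stated in the article


def sessions_needed(num_test_signals, minutes_per_signal):
    """Sessions required when each signal is rated within a single session."""
    signals_per_session = math.floor(SESSION_LIMIT_MINUTES / minutes_per_signal)
    if signals_per_session < 1:
        raise ValueError("one test signal does not fit in a single session")
    return math.ceil(num_test_signals / signals_per_session)


# Hypothetical example: 25 test signals at about 2.5 minutes each.
example_sessions = sessions_needed(25, 2.5)
```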

Timeline

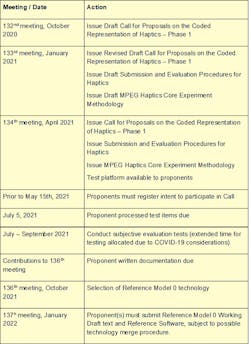

A timetable for Phase 1 of the CfP relative to specific MPEG meetings is given in Figure 2. The selected technology is called the Reference Model 0 (RM0) technology, as per MPEG convention.

Core Experiments

After the technical description and source code of the RM0 technology are available, a collaborative phase called the Core Experiments (CE) phase will start. CEs are MPEG’s way of further improving the technology selected at the end of a competitive evaluation, using the collective expertise of the working group (WG). In this phase, the entire WG works collaboratively to fine-tune the RM0 technology by proposing enhancements and modifications.

After each proposed modification is approved by WG consensus, the system will be subject to the same testing mechanisms outlined above to ensure that the modification hasn’t caused any regression in performance or functionality. Once all CEs are completed, the WG will conduct a formal verification test and generate a report that characterizes the technology's performance. This rigorous process, subject to the consensus of MPEG experts, ensures that the technology is finally ready to progress in the standardization process.

Looking Ahead

At the time of this writing, test platform validation and test signal generation are underway. We expect the Final CfP to be issued at the 134th MPEG meeting in April 2021. We look forward to receiving many proponent submissions and a robust competitive evaluation process. Stay tuned for a future article on the results of the Phase 1 CfP.

References

1. Draft Call for Proposals on the Coded Representation of Haptics – Phase 1

3. Requirements for MPEG-I Phase 2

4. Apple Haptic and Audio Pattern (AHAP) format

5. Draft Encoder Input Format for MPEG Haptics

6. ITU-R Recommendation BS.1534-3, “Method for the Subjective Assessment of Intermediate Sound Quality (MUSHRA),” International Telecommunication Union, Geneva, Switzerland, 2015.

About the Author

Yeshwant Muthusamy

Senior Director, Standards, Immersion Corp.

Yeshwant Muthusamy is a technologist by trade and geek by nature. He has spent the last 26+ years in the fields of speech recognition, natural language processing, and automatic language identification, among other areas of AI, with IoT and AR/VR being more recent areas of interest. Close to 20 years of that has been spent in various international SDOs (like MPEG, ATSC, Khronos, 3GPP, ITU-T, JCP, ETSI) on media and speech standardization efforts. The transition from speech and audio to haptics has been a relatively seamless and natural one (just another one of the human senses), although at times it feels like he is drinking from multiple firehoses as he gets up to speed on the intricacies of the haptic technology stack. Yeshwant is excited to be leading Immersion’s standardization and ecosystem strategy and he looks forward to working with the haptics community.