Build Your Own Sensor-Fusion Solution for Indoor Navigation

What you’ll learn:

- How to build an indoor navigation solution using sensor fusion.

- How the sensor-fusion system’s architecture is organized, with tasks partitioned between the CPU and DSP.

Sensors are most effective when they work together, combining data from multiple sources in what’s called “sensor fusion.”

One application where this holds true is indoor navigation on our cell phones. GPS signals are generally too weak to be reliable indoors, and beacon-based navigation only works in areas with beacon infrastructure. An effective alternative is simultaneous localization and mapping (SLAM). SLAM can work anywhere indoor maps are available, and such maps are likely to exist in many places, such as office buildings and shopping malls.

SLAM is also valuable in augmented and virtual reality (AR/VR), where it adjusts the scene to the user’s location and viewing direction, and in drones and robots for collision avoidance, among many other applications. The SLAM market is expected to grow to $465M by 2023 at a healthy 36% CAGR.

So how should you build an indoor navigation solution using SLAM and sensor fusion?

An Out-of-the-Box Implementation

Building your solution can be as easy as integrating optimized software modules or as customized as you want. I’ll start by describing how to put together and test an out-of-the-box solution based on CEVA’s SensPro sensor hub DSP hardware, together with our SLAM software and our MotionEngine software, which conditions and manages motion input.

We need a camera, an inertial measurement unit (IMU), a host CPU, and a DSP. We’ll use the CPU to host the MotionEngine and the SLAM framework, and the DSP to offload the computationally heavy SLAM tasks.

To simplify the explanation, I’ll start with OrbSLAM, a widely used open-source implementation. It performs three major functions:

- Tracking: Handles visual frame-to-frame registration and localizes a new frame on the current map.

- Mapping: Adds points to the map and optimizes them locally by repeatedly building and solving a large set of linear equations.

- Loop closure: Detects when you return to a place you’ve already visited and corrects the accumulated drift by optimizing globally, which requires solving a very large set of linear equations.
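To make “solving a set of linear equations” concrete, here is a toy sketch, not OrbSLAM’s actual optimizer. Real mapping and loop closure solve nonlinear problems in 3D (OrbSLAM relies on the g2o library for this) and re-solve a linearized system at every iteration. In the hypothetical 1D pose graph below the problem is already linear, so one solve of the normal equations suffices. The function names and the edge format `(i, j, z)`, meaning “pose j minus pose i measured as z”, are illustrative assumptions.

```python
from typing import List, Sequence, Tuple

Edge = Tuple[int, int, float]  # hypothetical format (i, j, z): measured x_j - x_i = z


def solve_linear(a: List[List[float]], b: List[float]) -> List[float]:
    """Solve a @ x = b by Gaussian elimination with partial pivoting."""
    n = len(b)
    m = [row[:] + [rhs] for row, rhs in zip(a, b)]  # augmented matrix [a | b]
    for col in range(n):
        pivot = max(range(col, n), key=lambda r: abs(m[r][col]))
        m[col], m[pivot] = m[pivot], m[col]
        for r in range(col + 1, n):
            f = m[r][col] / m[col][col]
            for c in range(col, n + 1):
                m[r][c] -= f * m[col][c]
    x = [0.0] * n
    for r in range(n - 1, -1, -1):  # back substitution
        s = sum(m[r][c] * x[c] for c in range(r + 1, n))
        x[r] = (m[r][n] - s) / m[r][r]
    return x


def optimize_pose_graph_1d(num_poses: int, edges: Sequence[Edge]) -> List[float]:
    """Least-squares 1D poses; pose 0 is anchored at 0 to fix the gauge."""
    n = num_poses - 1  # unknowns x_1 .. x_{num_poses-1}
    h = [[0.0] * n for _ in range(n)]  # normal-equation matrix J^T J
    g = [0.0] * n                      # right-hand side J^T z
    for i, j, z in edges:
        # One Jacobian row per edge: +1 for x_j, -1 for x_i (anchored x_0 has no column).
        row = [(k - 1, coeff) for k, coeff in ((j, 1.0), (i, -1.0)) if k > 0]
        for p, ap in row:
            g[p] += ap * z
            for q, aq in row:
                h[p][q] += ap * aq
    return [0.0] + solve_linear(h, g)


if __name__ == "__main__":
    # Odometry overestimates each step; the loop-closure edge says pose 3 is 3.0 from pose 0.
    edges = [(0, 1, 1.1), (1, 2, 1.0), (2, 3, 1.1), (0, 3, 3.0)]
    poses = optimize_pose_graph_1d(4, edges)
    print([round(p, 3) for p in poses])  # -> [0.0, 1.05, 2.0, 3.05]
```

Chaining the odometry alone would put pose 3 at 3.2. The loop-closure edge pulls it back, and the least-squares solution spreads the 0.2 discrepancy evenly (0.05) across all four constraints, which is the essence of loop-closure correction.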

Some functions, such as the control and management logic unique to your application, run effectively inside the host app on a CPU core. Others demand so much computational horsepower that they must run on the DSP to be practical or to deliver a competitive advantage.
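One way to picture this partitioning is a placement table that routes each task to the CPU or the DSP. The sketch below assumes hypothetical task names that follow the split described above (application logic, the SLAM framework, and the MotionEngine on the host; feature extraction and the heavy optimizations on the DSP). Both targets are simulated with Python thread pools; it does not use any CEVA API, and a real system would replace the “dsp” executor with the vendor’s host-to-DSP offload mechanism.

```python
from concurrent.futures import Future, ThreadPoolExecutor
from typing import Any, Callable, Dict

# Hypothetical placement table: host-side logic on the CPU, heavy SLAM math on the DSP.
PLACEMENT: Dict[str, str] = {
    "app_control": "cpu",
    "slam_framework": "cpu",
    "motion_engine": "cpu",
    "feature_extraction": "dsp",
    "local_map_optimization": "dsp",
    "loop_closure_optimization": "dsp",
}


class TaskRouter:
    """Routes named tasks to a CPU worker or a (simulated) DSP worker."""

    def __init__(self, placement: Dict[str, str]) -> None:
        self.placement = placement
        # Both targets are thread pools here; only the routing logic is illustrated.
        self.executors = {
            "cpu": ThreadPoolExecutor(max_workers=1, thread_name_prefix="cpu"),
            "dsp": ThreadPoolExecutor(max_workers=1, thread_name_prefix="dsp"),
        }

    def target_of(self, task: str) -> str:
        return self.placement.get(task, "cpu")  # unknown tasks stay on the host CPU

    def submit(self, task: str, fn: Callable[..., Any], *args: Any) -> Future:
        return self.executors[self.target_of(task)].submit(fn, *args)

    def shutdown(self) -> None:
        for executor in self.executors.values():
            executor.shutdown(wait=True)


if __name__ == "__main__":
    router = TaskRouter(PLACEMENT)
    future = router.submit("feature_extraction", sum, [1, 2, 3])
    print(router.target_of("feature_extraction"), future.result())  # -> dsp 6
    router.shutdown()
```

Keeping placement in a table rather than hard-coding it makes it easy to move a stage between CPU and DSP while profiling.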

For example, tracking might manage just one frame per second (fps) on a CPU, where feature extraction alone can consume 40% of the algorithm’s runtime. In contrast, a DSP implementation can achieve 30 fps, a rate that is important for fine-grained correlation between the video and the motion sensor (the IMU).
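The numbers above translate into a simple time budget. This sketch uses only the figures quoted in the text (1 fps on a CPU, 30 fps on a DSP, 40% of runtime in feature extraction) plus one hypothetical value, a 200-Hz IMU rate, to show how many IMU samples fall between consecutive frames. Amdahl’s law then shows that accelerating feature extraction alone cannot reach 30 fps, so the rest of the tracking pipeline must speed up as well.

```python
def frame_budget_ms(fps: float) -> float:
    """Time available to process one frame at a given frame rate."""
    return 1000.0 / fps


def amdahl_speedup(fraction: float, stage_speedup: float) -> float:
    """Overall speedup when only `fraction` of runtime is accelerated by `stage_speedup`."""
    return 1.0 / ((1.0 - fraction) + fraction / stage_speedup)


def imu_samples_per_frame(imu_rate_hz: float, fps: float) -> float:
    """IMU samples that arrive between two consecutive video frames."""
    return imu_rate_hz / fps


if __name__ == "__main__":
    cpu_fps, dsp_fps, fe_share = 1.0, 30.0, 0.40  # figures quoted in the text
    imu_rate_hz = 200.0                           # hypothetical IMU output rate
    print(f"CPU: {frame_budget_ms(cpu_fps):.1f} ms/frame, "
          f"feature extraction ~{fe_share * frame_budget_ms(cpu_fps):.0f} ms")
    print(f"DSP: {frame_budget_ms(dsp_fps):.1f} ms/frame")
    print(f"Required end-to-end speedup: {dsp_fps / cpu_fps:.0f}x")
    print(f"Ceiling if only feature extraction is accelerated: "
          f"{amdahl_speedup(fe_share, float('inf')):.2f}x")
    for fps in (cpu_fps, dsp_fps):
        print(f"{fps:.0f} fps -> {imu_samples_per_frame(imu_rate_hz, fps):.1f} IMU samples/frame")
```

Even infinitely fast feature extraction caps the gain at about 1.67x, far short of the required 30x. At 30 fps, each frame is also bracketed by only a handful of IMU samples instead of hundreds, which is what makes tight video-to-IMU correlation possible.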

The reasons for this advantage are straightforward. A DSP provides very high parallelism along with both fixed- and floating-point support, which are critical in tracking and mapping. A specialized instruction set further accelerates feature extraction. Finally, a simple link between the host and the DSP lets you treat the SensPro DSP hardware as an accelerator and offload intensive computations to it.
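To see why feature extraction parallelizes so well, consider the FAST corner test that ORB features build on (ORB uses the FAST-9 variant). The scalar reference sketch below is not a DSP implementation and says nothing about CEVA’s instruction set. Its point is that each pixel’s test reads only a fixed 16-pixel circle around it and is independent of every other pixel, which is exactly the kind of work vector hardware can spread across many lanes. The threshold `t=20` is an arbitrary illustrative choice.

```python
from typing import List, Sequence, Tuple

# Offsets (dx, dy) of the 16-pixel, radius-3 circle used by FAST, in order around the circle.
CIRCLE: Tuple[Tuple[int, int], ...] = (
    (0, -3), (1, -3), (2, -2), (3, -1), (3, 0), (3, 1), (2, 2), (1, 3),
    (0, 3), (-1, 3), (-2, 2), (-3, 1), (-3, 0), (-3, -1), (-2, -2), (-1, -3),
)


def is_fast_corner(img: Sequence[Sequence[int]], x: int, y: int,
                   t: int = 20, n: int = 9) -> bool:
    """FAST-n segment test: n contiguous circle pixels all brighter or all darker by > t."""
    center = img[y][x]
    labels = []  # +1 brighter, -1 darker, 0 similar
    for dx, dy in CIRCLE:
        v = img[y + dy][x + dx]
        labels.append(1 if v > center + t else -1 if v < center - t else 0)
    run, prev = 0, 0
    for lab in labels + labels:  # doubled list handles wrap-around runs
        if lab != 0 and lab == prev:
            run += 1
        else:
            run = 1 if lab != 0 else 0
        prev = lab
        if run >= n:
            return True
    return False


def detect_fast(img: Sequence[Sequence[int]], t: int = 20, n: int = 9) -> List[Tuple[int, int]]:
    """Run the independent per-pixel test over every pixel whose circle fits in the image."""
    h, w = len(img), len(img[0])
    return [(x, y) for y in range(3, h - 3) for x in range(3, w - 3)
            if is_fast_corner(img, x, y, t, n)]


if __name__ == "__main__":
    image = [[0] * 9 for _ in range(9)]
    image[4][4] = 255  # a single bright dot on a dark background
    print(detect_fast(image))  # -> [(4, 4)]
```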

Fusing IMUs with Vision

In this solution, CEVA provides two important components to handle sensor data: visual SLAM, using the CEVA-SLAM SDK, and the CEVA MotionEngine software, which processes IMU input to solve for three of the six degrees of freedom of motion.

Fusing the IMU and video information then relies on an iterative algorithm that is typically customized to suit the application’s requirements. This final step correlates visual data with motion data to produce refined localization and mapping estimates. CEVA provides proven visual SLAM and the IMU MotionEngine as a solid foundation for developing the fusion algorithm. As mentioned previously, the algorithmically intensive functions that make up most of this computation run fastest on a DSP, such as CEVA’s SensPro2 platform.
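The fusion algorithm itself is application-specific and isn’t given here. Purely as an illustration, the sketch below uses a scalar (1D) Kalman filter, one common choice for this kind of fusion, to blend high-rate IMU dead reckoning with low-rate visual SLAM position fixes. The class name, the rates (200-Hz IMU, 20-Hz vision), the noise values, and the simplification of IMU input to a biased velocity reading are all hypothetical assumptions, not CEVA’s method.

```python
from dataclasses import dataclass
from typing import Tuple


@dataclass
class Fuser1D:
    """Hypothetical scalar Kalman filter fusing IMU prediction with vision correction."""
    x: float = 0.0   # position estimate (m)
    p: float = 1.0   # estimate variance (m^2)
    q: float = 1e-4  # process noise added per IMU step (assumed)
    r: float = 1e-2  # vision measurement variance (assumed)

    def predict(self, imu_velocity: float, dt: float) -> None:
        """High-rate step: propagate the state with an IMU-derived velocity."""
        self.x += imu_velocity * dt
        self.p += self.q

    def update(self, vision_position: float) -> None:
        """Low-rate step: correct with a visual SLAM position fix."""
        k = self.p / (self.p + self.r)  # Kalman gain
        self.x += k * (vision_position - self.x)
        self.p *= 1.0 - k


def simulate(steps: int = 600, dt: float = 0.005, true_v: float = 1.0,
             imu_bias: float = 0.1, vision_every: int = 10) -> Tuple[float, float]:
    """Return (fused error, IMU-only error) after `steps` IMU samples."""
    fused, dead_reckoning = Fuser1D(), 0.0
    for step in range(1, steps + 1):
        imu_v = true_v + imu_bias  # biased IMU-derived velocity
        fused.predict(imu_v, dt)
        dead_reckoning += imu_v * dt
        if step % vision_every == 0:
            fused.update(true_v * step * dt)  # noise-free vision fix, for illustration
    truth = true_v * steps * dt
    return abs(fused.x - truth), abs(dead_reckoning - truth)


if __name__ == "__main__":
    fused_err, imu_only_err = simulate()
    print(f"fused error: {fused_err:.4f} m, IMU-only error: {imu_only_err:.4f} m")
```

With these assumed numbers, the IMU bias alone drifts the position by 0.3 m over 3 seconds, while the periodic visual fixes keep the fused error at roughly a centimeter. Production visual-inertial systems typically use extended Kalman filters or iterative nonlinear optimization in 3D, but the predict/correct structure is the same.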

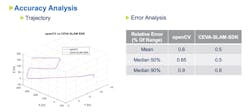

Once you’ve built the prototype platform, it will, of course, need testing. Several SLAM datasets are available to help, such as KITTI and EuRoC. In the figure, we show an accuracy comparison between the OpenCV implementation and our CEVA-SLAM SDK implementation. You will want to run similar analyses on your product.
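Both KITTI and EuRoC provide ground-truth trajectories, and one common accuracy metric is the absolute trajectory error (ATE): the root-mean-square position error between the estimated and ground-truth trajectories. The sketch below assumes the two trajectories are already time-associated (one estimated position per ground-truth position) and applies only a translation alignment. Full evaluation tools usually align with Umeyama’s method (rotation and translation, plus scale for monocular SLAM), and each benchmark defines its own official metrics.

```python
import math
from typing import Sequence, Tuple

Point = Tuple[float, float, float]


def ate_rmse(estimated: Sequence[Point], ground_truth: Sequence[Point],
             align_translation: bool = True) -> float:
    """RMSE of position error between two time-associated trajectories."""
    if not estimated or len(estimated) != len(ground_truth):
        raise ValueError("trajectories must be non-empty and the same length")
    n = len(estimated)
    offset = (0.0, 0.0, 0.0)
    if align_translation:
        # Translation-only alignment: shift the estimate so both centroids coincide.
        offset = tuple(sum(g[k] for g in ground_truth) / n - sum(e[k] for e in estimated) / n
                       for k in range(3))
    squared = 0.0
    for e, g in zip(estimated, ground_truth):
        squared += sum((e[k] + offset[k] - g[k]) ** 2 for k in range(3))
    return math.sqrt(squared / n)


if __name__ == "__main__":
    gt = [(0.0, 0.0, 0.0), (1.0, 0.0, 0.0), (2.0, 0.0, 0.0)]
    est = [(0.0, 0.0, 0.0), (1.0, 0.3, 0.0), (2.0, 0.0, 0.4)]
    print(f"ATE RMSE: {ate_rmse(est, gt, align_translation=False):.4f} m")
```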

As mentioned previously, there are multiple ways to build a SLAM platform. For example, you don’t need to start from OrbSLAM, and you can mix in your own preferred or differentiated algorithms. The SensPro sensor hub DSP readily supports these options.

About the Author

Dan Mackrants

Vision Business Unit, CEVA

Dan Mackrants has been leading the Vision Group within the Vision Business Unit at CEVA since January 2020. Previously, he led the Computer Vision and SLAM teams. He brings more than 10 years of high-tech industry experience in algorithm and embedded development, as well as in managerial roles. Before joining CEVA, Dan was a team leader in computer vision algorithms at a Samsung research center.

Dan holds an M.Sc. in Electrical Engineering and a B.Sc. in Physics and Electrical Engineering from Tel Aviv University in Israel.