
At 6:30 a.m., the alarm on your smartphone tells you that it’s time to start your day. As you get ready for work, you say the magic words, and your phone reads off the day’s meetings and appointments, then starts your coffee maker. When it reminds you that today is your mom’s birthday, you respond with “Call Mom” to connect and wish her a happy birthday. Just like that, your morning routine is complete, and you didn’t have to touch your phone! It was always listening, always processing.

The potential of always-on processing can make your morning routine, and so many other aspects of life, smoother and easier. Mobile systems, wearables, and many other Internet of Things (IoT) applications require this kind of technology, in which some compute resources in the system stay on at all times to process audio, visual, or other sensor data. To extend the usability of these battery-powered devices, designers should use compute resources that are optimized for the always-on tasks, while other resources in the system remain off until they’re really needed.

Indeed, a new generation of products is redefining the man-machine interface, and delivering user experiences that are richer and much more compelling than we would have imagined even five years ago. The challenge for designers is: How can I continue to delight customers while striking the right balance between responsiveness, cost, and energy consumption? In this article, we will discuss how developing an always-on architecture with a methodology called cognitive layering can help designers achieve that optimal balance.

Designing an Energy-Efficient, Always-On Architecture

For a given IoT application, an SoC typically consists of multiple analog components, such as radios, low-noise amplifiers (LNAs), power-management units, and integrated power amplifiers, as well as multiple digital components such as processors, DSPs, on-chip memory, digital baseband hardware blocks, and rich sensor I/O.

Sensors, including microphones, cameras, accelerometers, gyroscopes, and temperature and pressure gauges, are at the core of smart, connected, always-on devices. The data they collect is digitized and then sent to the SoC for analysis and interpretation.

An always-on architecture can be developed with a variety of techniques to reduce energy consumption in the IoT application:

• Power, clock, and data gating

• Sensor fusion algorithms that determine device context and intelligently manage system power based on it

• Optimization for certain “hot” functions, such as:

  – Reducing cycles and lowering the clock frequency (MHz) at the instruction-set level

  – Bypassing the general on-chip bus at the interconnect level

  – Localizing traffic through memory partitioning

  – Accelerating communications standards, voice algorithms, encryption, and the like
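As a rough illustration of the context-based power management item, the C sketch below shows the kind of cheap check an always-on core might run before waking anything else. It is deliberately simplified to a single accelerometer rather than true multi-sensor fusion, and the names `accel_sample_t`, `motion_detected`, and the threshold value are hypothetical: if the variance of the squared acceleration magnitude over a short window stays low, the device is treated as stationary and higher-power blocks stay off.

```c
#include <stdbool.h>
#include <stddef.h>

/* Hypothetical threshold on the variance of the squared acceleration
 * magnitude (units of g^4); a real product would tune this per sensor. */
#define MOTION_VARIANCE_THRESHOLD 0.01f

typedef struct { float x, y, z; } accel_sample_t;   /* acceleration in g */

/* Returns true when the window looks like motion, i.e. the always-on core
 * should wake a higher-power block; false means "stationary", so the rest
 * of the system can stay powered down. */
bool motion_detected(const accel_sample_t *s, size_t n)
{
    if (n == 0)
        return false;

    float mean = 0.0f, mean_sq = 0.0f;
    for (size_t i = 0; i < n; i++) {
        /* Squared magnitude avoids a sqrt (and a libm dependency). */
        float m = s[i].x * s[i].x + s[i].y * s[i].y + s[i].z * s[i].z;
        mean += m;
        mean_sq += m * m;
    }
    mean /= (float)n;
    mean_sq /= (float)n;

    /* Variance = E[m^2] - (E[m])^2 */
    return (mean_sq - mean * mean) > MOTION_VARIANCE_THRESHOLD;
}
```

A device lying still reports a near-constant 1 g, so the variance stays near zero and nothing wakes up; a real implementation would fuse several sensors and add hysteresis.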

The cognitive-layering methodology, in particular, is an effective way to strike the right power/performance balance. Let’s look at this methodology in more detail.

Cognitive Layering: Offloading the Host Processor

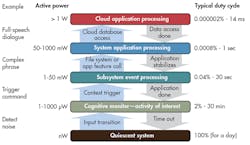

The cognitive-layering methodology partitions a task into layers or states, each handled by an appropriately optimized processing engine. The technique offloads the host processor by moving some tasks onto lower-power, always-on processors that are optimized for those tasks. As Figure 1 shows for a voice-triggering application, each layer has just enough processing to support the level of alertness the system requires at that moment. This approach can improve latency, energy consumption, and throughput [1].

The lowest step in the Figure 1 flow is low-energy noise detection, which consumes just nanowatts of power. Noise detection triggers a series of system actions up the processing chain, from detection of a trigger command to recognition of a phrase and its interpretation in the context of the current application. Each action requires a slightly higher level of energy consumption than its predecessor. The highest energy activity—full speech dialogue via a cloud service—taps into multiple watts of power to access and interact with remote servers and databases.
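The escalation in Figure 1 can be sketched as a simple loop in C, assuming each layer exposes a check that decides whether the next, more expensive layer should be powered up. The layer names follow Figure 1; the `audio_event_t` fields, the check functions, and `NOISE_FLOOR` are hypothetical stand-ins for real detectors, not part of any Cadence API.

```c
#include <stdbool.h>

/* Processing layers from Figure 1, cheapest first. */
typedef enum {
    LAYER_NOISE_DETECT = 0,   /* always on */
    LAYER_TRIGGER,            /* trigger-command detection */
    LAYER_PHRASE,             /* local phrase recognition */
    LAYER_INTERPRET,          /* interpretation in application context */
    LAYER_CLOUD_DIALOG,       /* full speech dialogue via a cloud service */
    LAYER_COUNT
} layer_t;

/* checks[i] is evaluated by layer i and returns true if layer i+1
 * should be powered up. */
typedef bool (*layer_check_fn)(const void *input);

/* Escalate one layer at a time and stop at the first check that fails,
 * so higher layers never run unless every lower layer passed.
 * Returns the highest layer that was activated. */
layer_t run_layers(const layer_check_fn checks[LAYER_COUNT - 1], const void *input)
{
    layer_t active = LAYER_NOISE_DETECT;
    while (active < LAYER_CLOUD_DIALOG && checks[active](input))
        active = (layer_t)(active + 1);
    return active;
}

/* Hypothetical detector outputs standing in for real engines. */
typedef struct {
    int  noise_level;     /* e.g. a short-term energy estimate */
    bool keyword_heard;   /* from a keyword spotter */
    bool phrase_matched;  /* from a local recognizer */
    bool needs_cloud;     /* request cannot be served locally */
} audio_event_t;

#define NOISE_FLOOR 10    /* hypothetical threshold */

static bool has_sound(const void *in)   { return ((const audio_event_t *)in)->noise_level > NOISE_FLOOR; }
static bool has_keyword(const void *in) { return ((const audio_event_t *)in)->keyword_heard; }
static bool has_phrase(const void *in)  { return ((const audio_event_t *)in)->phrase_matched; }
static bool wants_cloud(const void *in) { return ((const audio_event_t *)in)->needs_cloud; }

static const layer_check_fn voice_checks[LAYER_COUNT - 1] = {
    has_sound, has_keyword, has_phrase, wants_cloud
};
```

For a quiet room, `run_layers(voice_checks, &event)` stops at `LAYER_NOISE_DETECT`; a recognized local command stops at `LAYER_INTERPRET` without ever touching the cloud layer.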

In the lower layers, optimized processing engines designed for the particular computing and interface requirements of these layers take the place of the less-efficient general-purpose processors. Compared to their general-purpose counterparts, the optimized processors can provide higher performance, shorter response latency, and lower energy consumption in an always-on system.

Cognitive layering moves computation closer to the data and keeps as much of the system turned off as possible, so the overall system runs at the lowest activity level required for the task at hand. It can drive performance and energy-consumption improvements in a variety of IoT application areas, from inertial navigation to computer vision to local wireless communications.
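Why keeping the upper layers off matters can be seen with a duty-cycle calculation: average power is the sum of each layer’s active power weighted by the fraction of time that layer is on. The C sketch below computes it; every power and duty-cycle figure in `example_layers` is invented for illustration, not a measurement from the white paper or any product.

```c
#include <stddef.h>

/* One processing layer: active power (mW) and duty cycle, i.e. the
 * fraction of time it is powered (0..1). */
typedef struct {
    const char *name;
    double power_mw;
    double duty;
} layer_power_t;

/* Time-weighted average power: P_avg = sum over layers of duty * power.
 * Ignores wake-up and transition energy for simplicity. */
double average_power_mw(const layer_power_t *layers, size_t n)
{
    double total = 0.0;
    for (size_t i = 0; i < n; i++)
        total += layers[i].duty * layers[i].power_mw;
    return total;
}

/* Illustrative numbers only, not measurements. */
static const layer_power_t example_layers[] = {
    { "noise detection",          0.0005, 1.0    },  /* 500 nW, always on */
    { "trigger detection",        1.0,    0.10   },
    { "phrase recognition",       10.0,   0.01   },
    { "applications processor",   300.0,  0.001  },
    { "cloud dialogue (radio)",   2000.0, 0.0001 },  /* multiple watts */
};
```

With these made-up figures, `average_power_mw(example_layers, 5)` comes to about 0.7 mW, whereas a 300 mW applications processor left on continuously would average 300 mW. A real power budget would also account for wake-up latency and transition energy, which this model leaves out.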

What Makes a Good Always-On Processor?

When it comes to IoT applications, the sheer breadth of processing requirements dictates that a “one size fits all” approach will not work for always-on processors. As indicated by the cognitive-layering methodology, small, low-power, highly specialized processors can offload the lower-level cognitive functions from the host processor to optimize energy consumption.

For example, a DSP optimized for low-power voice triggering would hand over processing of more intensive audio-codec functions to a DSP designed for that purpose. In turn, that audio-codec DSP would hand over processing of user and network interaction to an applications processor designed for that purpose.

So while the host applications processor could run all of the algorithms, choosing more optimized processors results in a much more energy-efficient design. In our voice-triggering example, you can designate a low-power, always-on DSP to handle voice triggering as well as other low-energy functions such as sensor processing and low-resolution image processing. As Figure 2 depicts, IoT applications like wearables call for more complex signal processing at ultra-low energy.
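The hand-off described above can be framed as picking the lowest-power engine that still meets a task’s performance need. The sketch below assumes a single hypothetical clock budget (MHz) per engine and per task; `engine_t`, `pick_engine`, and all the engine names and numbers are illustrative only.

```c
#include <stddef.h>

/* A candidate processing engine (all figures hypothetical). */
typedef struct {
    const char *name;
    double max_mhz;   /* clock budget available for the task */
    double power_mw;  /* active power at that budget */
} engine_t;

/* Return the index of the lowest-power engine whose clock budget covers
 * required_mhz, or -1 if none can. Simplification: assumes the task needs
 * the same MHz on every engine; in practice an engine with specialized
 * instructions needs fewer cycles for the same work. */
int pick_engine(const engine_t *engines, size_t n, double required_mhz)
{
    int best = -1;
    for (size_t i = 0; i < n; i++) {
        if (engines[i].max_mhz < required_mhz)
            continue;   /* cannot keep up with this task */
        if (best < 0 || engines[i].power_mw < engines[best].power_mw)
            best = (int)i;
    }
    return best;
}

static const engine_t example_engines[] = {
    { "always-on DSP",          50.0,   2.0   },
    { "audio-codec DSP",        400.0,  20.0  },
    { "applications processor", 2000.0, 500.0 },
};
```

With this table, a 10 MHz voice-trigger task lands on the always-on DSP, a 150 MHz codec task on the audio-codec DSP, and a 1,200 MHz interaction task on the applications processor.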

Here’s a useful checklist of criteria for a processor to support always-on applications:

• Efficient instruction set with low cycle count for critical algorithms

• Ability to perform both DSP processing and control tasks

• Low-power-consumption design

• Small size, ideally configurable to exclude logic that’s not needed

• Scalable performance over the required range of functionality

• Efficient floating-point option for sensor processing
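To make “low cycle count for critical algorithms” concrete, here is a plain-C reference for a Q15 fixed-point FIR dot product with the inner loop unrolled four ways into independent accumulators, the kind of 16-bit multiply-accumulate work that MAC-oriented DSP instructions target. It uses no vendor intrinsics, the function name `fir_q15` is hypothetical, and how many MACs a given processor completes per cycle depends on its ISA and compiler.

```c
#include <stddef.h>
#include <stdint.h>

/* Q15 dot product of ntaps samples x[] with coefficients h[].
 * Each 16x16 product is Q30 and fits in 32 bits; sums are kept in
 * 64-bit accumulators so long filters cannot overflow. */
int16_t fir_q15(const int16_t *x, const int16_t *h, size_t ntaps)
{
    int64_t acc0 = 0, acc1 = 0, acc2 = 0, acc3 = 0;
    size_t i = 0;

    /* Four independent multiply-accumulates per iteration: the work a
     * 4-way MAC unit could issue together. */
    for (; i + 4 <= ntaps; i += 4) {
        acc0 += (int32_t)x[i]     * h[i];
        acc1 += (int32_t)x[i + 1] * h[i + 1];
        acc2 += (int32_t)x[i + 2] * h[i + 2];
        acc3 += (int32_t)x[i + 3] * h[i + 3];
    }
    for (; i < ntaps; i++)           /* leftover taps */
        acc0 += (int32_t)x[i] * h[i];

    /* Q30 -> Q15 (arithmetic shift on mainstream compilers), then saturate. */
    int64_t acc = (acc0 + acc1 + acc2 + acc3) >> 15;
    if (acc > INT16_MAX) acc = INT16_MAX;
    if (acc < INT16_MIN) acc = INT16_MIN;
    return (int16_t)acc;
}
```

For example, five taps of 0.5 (16384) times 0.25 (8192) give 0.625, which is 20480 in Q15.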

Flexible DSP for IoT, Wearables, Wireless Apps

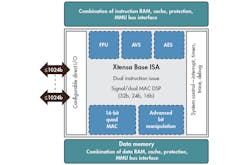

Cadence’s Tensilica Fusion DSP is an example of a single, scalable DSP that meets the requirements for low-energy, always-on functions. It includes optional Instruction Set Architecture (ISA) extensions to accelerate multiple wireless protocols and floating-point operations. The DSP combines an enhanced 32-bit Xtensa control processor with DSP features and flexible algorithm-specific acceleration. As an IoT device designer, you can pick the options you need to produce a Tensilica Fusion DSP that can be smaller, offer higher performance, and consume less energy than a single, “one-size-fits-all” processor.

Configurable elements in the Tensilica Fusion DSP (Figure 3) include:

• Single-precision floating-point unit, where floating-point instructions are issued concurrently with 64-bit loads and stores. This speeds software development for algorithms created in MATLAB or standard C.

• Audio/voice/speech (AVS) option, which is software-compatible with the Tensilica HiFi DSP and supported by an ecosystem of more than 140 HiFi audio/voice software packages

• 16-bit Quad MAC, which further accelerates communications standards such as Bluetooth Low Energy and Wi-Fi, along with voice codec and recognition algorithms

• AES-128 encryption acceleration for Bluetooth Low Energy and Wi-Fi wireless operations

• Advanced bit manipulation, which accelerates implementations of baseband MAC and PHY

• Flexible memory architecture that works with caches and/or local memories of various sizes
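Baseband MAC processing is full of bit-level loops such as frame checksums. The sketch below is a bit-by-bit CRC-32 using the reflected polynomial 0xEDB88320, the CRC-32 also used for IEEE 802.3 and 802.11 frame check sequences. It is shown only to illustrate the per-bit shift/XOR work that bit-manipulation instructions (or table-driven code) reduce; it is not how the Tensilica Fusion DSP implements it, and `crc32_bitwise` is a hypothetical name.

```c
#include <stddef.h>
#include <stdint.h>

/* Bitwise CRC-32: reflected polynomial 0xEDB88320,
 * initial value 0xFFFFFFFF, final XOR 0xFFFFFFFF. */
uint32_t crc32_bitwise(const uint8_t *data, size_t len)
{
    uint32_t crc = 0xFFFFFFFFu;
    for (size_t i = 0; i < len; i++) {
        crc ^= data[i];
        for (int bit = 0; bit < 8; bit++) {
            /* mask is all ones if the bit shifted out is 1, else zero. */
            uint32_t mask = -(crc & 1u);
            crc = (crc >> 1) ^ (0xEDB88320u & mask);
        }
    }
    return ~crc;
}
```

Running it over the ASCII string "123456789" yields 0xCBF43926, the standard check value for this CRC. Every input bit costs a shift, an AND, and an XOR here, which is exactly the kind of inner loop dedicated bit-manipulation support targets.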

Summary

Always-on technology is becoming increasingly prevalent in many aspects of our lives. The challenge in designing successful always-on products lies in striking the right balance between responsiveness, cost, and energy consumption.

A cognitive-layering methodology backed by a configurable, low-energy, always-on DSP can help you achieve that balance. By offloading always-on functionality to a low-energy processor, your system’s primary processor can then be reserved for bigger processing tasks. The result is an optimized design consuming minimal energy for a particular task, without sacrificing performance or functionality.

Reference:

1. Gerard Andrews and Larry Przywara, “Keeping Always-On Systems On for Low-Energy Internet-of-Things Applications,” white paper.

About the Author

Neil Robinson

Product Director

Neil Robinson is product marketing director at Cadence, responsible for promoting Tensilica processors into all markets. Previously, he was business development manager for Pixelworks and held various positions at ARM, including marketing manager for consumer entertainment, storage, and printers; business development manager for embedded software applications; field application engineer; and project manager for processor and ASIC hardware developments. He received a BSc in physics from Imperial College, University of London.