We already had augmented reality (AR) and virtual reality (VR), and now Intel brings us merged reality (MR). Intel CEO Brian Krzanich recently revealed the wireless head-mounted display (HMD) that is part of Intel's Project Alloy (Fig. 1). It combines power, wireless communication, VR glasses, and a pair of outward-facing RealSense cameras that provide positioning information as well as video streams. The HMD allows an application to merge video from the real world into a virtual world, whereas AR overlays limited computer-generated content on a user's normal view of the world. All three have their place, although Intel is betting that MR will become the next big thing.

Intel's RealSense cameras provide 3D range information as well as video. They have been used on devices such as robots and quadcopters to locate obstacles, and in gaming to track players. The cameras on this new system are positioned to capture the view the user would normally see without the HMD. This information is sent wirelessly to the host that runs the VR application, which can use it in a variety of ways, including incorporating it into the VR environment presented to the user. In the demo at the 2016 Intel Developer Forum (IDF), this included adding a view of the person standing in front of the HMD wearer. Intel's Craig Raymond also used a physical dollar bill with a virtual lathe to do virtual carving.
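
A depth camera reports a range for each pixel, and an application has to turn those readings into 3D positions before it can locate obstacles. The following is a minimal sketch of that step under stated assumptions: an ideal pinhole camera with no lens distortion, and made-up intrinsics (`fx`, `fy`, `ppx`, `ppy`). The `Intrinsics` class and both functions are hypothetical illustrations, not RealSense SDK APIs; librealsense provides its own deprojection helper, `rs2_deproject_pixel_to_point`, which also handles the camera's distortion model.

```python
from dataclasses import dataclass
from typing import List, Optional, Tuple


@dataclass
class Intrinsics:
    """Pinhole intrinsics in pixels (hypothetical values; real ones come from calibration)."""
    fx: float   # focal length, x axis
    fy: float   # focal length, y axis
    ppx: float  # principal point, x
    ppy: float  # principal point, y


def deproject(intr: Intrinsics, u: float, v: float, depth_m: float) -> Tuple[float, float, float]:
    """Map pixel (u, v) plus its depth in meters to (X, Y, Z) in the camera frame.

    Ideal pinhole model, no lens distortion: X = (u - ppx) / fx * Z, Y = (v - ppy) / fy * Z.
    """
    x = (u - intr.ppx) / intr.fx * depth_m
    y = (v - intr.ppy) / intr.fy * depth_m
    return (x, y, depth_m)


def nearest_obstacle(depth_m: List[List[float]],
                     max_range_m: float = 4.0) -> Optional[Tuple[int, int, float]]:
    """Return (row, col, depth) of the closest valid reading within max_range_m, or None.

    A depth of 0.0 is treated as "no data", a common convention for depth sensors.
    """
    best = None
    for r, row in enumerate(depth_m):
        for c, d in enumerate(row):
            if 0.0 < d <= max_range_m and (best is None or d < best[2]):
                best = (r, c, d)
    return best
```

For example, with `fx = fy = 600` and the principal point at (320, 240), a pixel 300 columns right of center with a 2-m reading maps to a point 1 m to the right of the optical axis. Feeding the `(row, col)` from `nearest_obstacle` into `deproject` gives the obstacle's position relative to the camera.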

The system also makes it possible to replace an existing object or obstacle, such as a door, with a VR-rendered one (a door in a VR game, for instance). This would let users work within an existing space that contains obstacles, whereas conventional VR typically requires users to stay within a clear, restricted area. Project Alloy is a work in progress; Intel plans to open-source the hardware and software APIs.

More RealSense

The latest RealSense cameras double the previous 3D resolution and improve frame rates. They can also operate in sunlight, although some limitations remain.

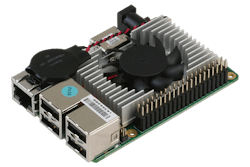

The RealSense cameras used on the Project Alloy HMD were only part of the RealSense demos and announcements at IDF. The Euclid (Fig. 2) is a mobile sensing system that combines a RealSense camera, an Atom-based processor, and wireless support. It has its own battery and runs Ubuntu Linux along with the Robot Operating System (ROS). ROS itself runs on a host of operating systems and hardware platforms.

ROS is designed to be a distributed system, and it already supports RealSense cameras as well as many other 3D camera systems. Euclid reduces the size of the sensor system and provides a platform that will be very handy for a host of applications, ranging from a robot sensor to a workspace monitor.

Intel had another demo that could have used Euclid. In it, a user wears safety glasses with a built-in RealSense sensor plus wireless communication to a host that tracks the 3D video information. In the example, the user had to place bolts into an airplane door, and the system identified the bolt types to make sure each one went into the correct hole. Audio feedback indicated when there was a problem. Euclid could perform this type of function.
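
The article does not describe how the bolt-checking demo was implemented. As a hypothetical sketch of just the verification step, assume an upstream vision stage has already identified each hole and the bolt type placed in it; the check then reduces to comparing those detections against an assembly plan and raising an alert on any mismatch. The hole IDs, bolt sizes, and function names below are invented for illustration, and `feedback` returns a string where a real system would play a tone or spoken message.

```python
from typing import Dict, List

# Hypothetical assembly plan: hole ID -> bolt type expected in that hole.
EXPECTED: Dict[str, str] = {"H1": "M6x20", "H2": "M6x20", "H3": "M8x25"}


def check_placements(expected: Dict[str, str], detected: Dict[str, str]) -> List[str]:
    """Compare detected bolts against the plan and return human-readable problems.

    `detected` maps hole ID -> bolt type reported by a (hypothetical) vision classifier.
    Holes missing from `detected` are treated as not yet filled and are not reported.
    """
    problems = []
    for hole, bolt in sorted(detected.items()):
        want = expected.get(hole)
        if want is None:
            problems.append(f"{hole}: not in assembly plan")
        elif bolt != want:
            problems.append(f"{hole}: found {bolt}, expected {want}")
    return problems


def feedback(problems: List[str]) -> str:
    """Stand-in for the audio cue: returns the message a real system would speak or signal."""
    return "OK" if not problems else "ALERT: " + "; ".join(problems)
```

Checking `{"H1": "M6x20", "H3": "M6x20"}` against `EXPECTED` flags only H3, since an M6x20 bolt sits where an M8x25 belongs. Keeping the plan as plain data means the same check could be reused for different assemblies without code changes.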

The difference is that Euclid is a self-contained device. One IDF demo included a robot with no built-in sensors, only a wireless link to the Euclid module. This allowed the robot to perform the typical "follow me" scenario, in which the robot recognizes a user and follows that person around.
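
How Intel's robot translated Euclid's data into motion was not described. One common approach is a proportional controller, sketched here under stated assumptions: the user's position has already been extracted from the depth data as a lateral offset `x` and forward distance `z` in meters (camera frame, positive `x` to the right), and the robot base accepts linear and angular velocity commands, as ROS mobile bases typically do through a `geometry_msgs/Twist` message. The function name, gains, speed limits, and target distance are all hypothetical.

```python
import math
from typing import Optional, Tuple


def clamp(value: float, low: float, high: float) -> float:
    """Limit value to the closed interval [low, high]."""
    return max(low, min(high, value))


def follow_me_command(person: Optional[Tuple[float, float]],
                      target_dist: float = 1.0,
                      k_lin: float = 0.5,
                      k_ang: float = 1.5,
                      max_lin: float = 0.6,
                      max_ang: float = 1.0) -> Tuple[float, float]:
    """Return (linear m/s, angular rad/s) for a simple proportional follow-me controller.

    person: (x, z) of the tracked user in the camera frame, in meters, where x is the
    lateral offset (positive = right) and z is the forward distance; None if nobody
    is currently tracked.
    """
    if person is None:
        return (0.0, 0.0)  # user lost: stop rather than wander
    x, z = person
    bearing = math.atan2(x, z)  # angle to the user, positive when the user is to the right
    # Positive angular velocity conventionally means turning left (counterclockwise),
    # so a user on the right yields a negative (rightward) turn command.
    angular = clamp(-k_ang * bearing, -max_ang, max_ang)
    # Close the gap only when farther than target_dist; never drive backward.
    linear = clamp(k_lin * (z - target_dist), 0.0, max_lin)
    return (linear, angular)
```

With the defaults, a user 3 m straight ahead produces a forward command capped at 0.6 m/s and no turn, while a user who is already within 1 m produces no forward motion. Clamping both outputs keeps a noisy position estimate from commanding abrupt moves.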

Until now, RealSense cameras have been available as USB devices with their own housings. The RealSense Camera 400 (Fig. 3) is a module that can be built into embedded applications, providing a significantly smaller footprint. Applications range from RealSense-equipped laptops to robots and quadcopters.

The RealSense R200 (Fig. 4) was announced last year. Its compact design allows it to be mounted on the back of a tablet or similar device. It is being used for augmented reality applications that need depth perception in addition to the typical video stream provided by rear-facing cameras.

The UP board has a 1.4-GHz, quad-core Intel Atom x5-Z8350 with up to 4 Gbytes of DRAM and 32 Gbytes of eMMC flash memory. Its 40-pin I/O connector is compatible with the Raspberry Pi 2's, although not every Raspberry Pi add-on board will work with the UP board. The connector is handled by an Altera MAX V CPLD. The system also has six USB 2.0 ports, a USB 3.0 OTG port, Gigabit Ethernet, HDMI out, and DSI, eDP, and MIPI-CSI interfaces.

Also announced was the Intel RealSense Camera ZR300, which is part of the Intel RealSense Smartphone Developer Kit. The ZR300 includes a high-precision accelerometer and gyroscope. There is an 8-Mpixel, wide field-of-view camera for motion and feature tracking, along with a 2-Mpixel front-facing camera. It is designed for low power consumption and use in mobile devices.

Intel's commitment to RealSense is significant, and the technology complements Intel's processor and wireless products. It remains to be seen whether Intel's idea of MR will complement or compete with AR and VR applications, but this is only the starting point. The technology will continue to improve, but it is already at a point where developers can experiment with it and deploy applications.

About the Author

William G. Wong

Senior Content Director - Electronic Design and Microwaves & RF

I am Editor of Electronic Design focusing on embedded, software, and systems. As Senior Content Director, I also manage Microwaves & RF and I work with a great team of editors to provide engineers, programmers, developers and technical managers with interesting and useful articles and videos on a regular basis. Check out our free newsletters to see the latest content.

I earned a Bachelor of Electrical Engineering at the Georgia Institute of Technology and a Master's in Computer Science from Rutgers University. I still do a bit of programming using everything from C and C++ to Rust and Ada/SPARK. I also do some PHP programming for Drupal websites and have posted a few Drupal modules.

I still get hands-on with software and electronic hardware. Some of this work can be found in our Kit Close-Up video series. You can also see me in many of our TechXchange Talk videos. I am interested in a range of projects from robotics to artificial intelligence.