The Evolution of Shaders—And What It Says About Moore’s Law

What you'll learn:

- Timeline of shader development.

- The role of shaders in NVIDIA's GeForce series.

- The move to tensor cores and the start of the AI GPU era.

NVIDIA was the first company to offer a fully integrated graphics processor for PCs, in late 1999. The company called it a graphics processing unit, or GPU, and the acronym stuck.

The primary differentiator of NVIDIA’s pioneering GeForce 256 was its hardware transform and lighting (T&L) engine, which offloaded geometry calculations from the CPU. The T&L engine fed four fixed-function pixel pipelines, each capable of applying a single texture at a time.

But the shader era would not begin until early 2001, when NVIDIA introduced its first GPUs with programmable shaders, the GeForce 3 series. They were a significant advancement over the fixed-function pipelines of the GeForce 256. The GeForce 3 featured four pixel shaders and one vertex shader.

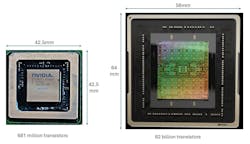

In the 24 years since, programmable shader architectures have scaled to many more shaders per GPU, while the shaders themselves have become faster and relatively smaller. The GeForce 3’s processor die, code-named NV20, was built on a 150-nm process and packed 57 million transistors into 128 mm². Today’s Blackwell GB202 GPU packs 92 billion transistors into a 750-mm² die, with up to 24,064 shaders enabled.
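The transistor figures above can be checked against Moore’s Law with a few lines of arithmetic. This Python sketch assumes the commonly cited formulation of Moore’s Law as a doubling of transistor count roughly every two years. The helper `doubling_time_years` is a hypothetical name introduced for illustration, and the inputs are simply the NV20 and Blackwell figures quoted in the text.

```python
import math


def doubling_time_years(start: float, end: float, years: float) -> float:
    """Years per doubling implied by growth from `start` to `end` over `years`."""
    return years / math.log2(end / start)


# Figures quoted in the text: 2001 GeForce 3 (NV20) vs. the 2025 Blackwell die.
nv20_transistors, nv20_area_mm2 = 57e6, 128.0
blackwell_transistors, blackwell_area_mm2 = 92e9, 750.0
span_years = 24

growth = blackwell_transistors / nv20_transistors

# Transistor density in millions of transistors per mm^2.
density_nv20 = nv20_transistors / nv20_area_mm2 / 1e6
density_blackwell = blackwell_transistors / blackwell_area_mm2 / 1e6

doubling = doubling_time_years(nv20_transistors, blackwell_transistors, span_years)

print(f"Transistor growth: {growth:,.0f}x")
print(f"Density: {density_nv20:.2f} -> {density_blackwell:.1f} million/mm^2")
print(f"Implied doubling time: {doubling:.2f} years")
```

With these inputs, transistor count grows roughly 1,600-fold over 24 years, a doubling about every 2.25 years, and density rises from about 0.45 to about 123 million transistors per mm².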

Differentiators: Clock Frequency and Shader Count

After the GPU was introduced, NVIDIA started branching out into different PC segments, including workstation, mobile, and computational markets. However, gaming remained the primary focus. From 2002 to 2005, the main differentiator for graphics add-in boards (AIBs) came down to the clock frequency of the GPUs inside them.

With the launch of the gaming-focused GeForce 7 series, and later the GeForce 8 series, NVIDIA started distinguishing GPUs by shader count across the entry-level, mid-range, and high-end tiers. Between mid-2005 and mid-2007, NVIDIA introduced 26 versions of the GeForce 7 series, with pixel shader counts ranging from two to 24 and a variety of vertex shader configurations. It was a chaotic period as NVIDIA attempted to fill every conceivable market niche.

In November 2006, NVIDIA introduced a unified shader architecture with the GeForce 8800 series, and it brought the design to the mainstream with the GeForce 8600 series in April 2007. Unified shaders eliminated the fixed split between vertex and pixel shaders: any shader could run either type of work, so the GPU could dynamically allocate shader resources to whichever workload needed them, matching the unified shader model of Direct3D 10. It was a breakthrough that ushered in the unified shader era, an approach that all major GPU makers would eventually adopt.

From that point onward, NVIDIA and its competitors differentiated gaming GPUs by shader count across market segments: value (or entry level), low end (or mainstream), mid-range, and high end (also referred to as the “enthusiast” tier).

The value segment would eventually be overtaken by increasingly powerful integrated GPUs (iGPUs), which came to the fore between 2011 and 2015. Ironically, AMD, one of the pioneers of integrated GPUs (which it built into processors it called APUs), was the last major vendor to exit the value segment.

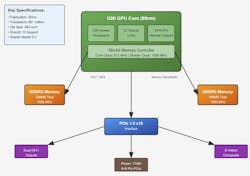

Between 2007 and 2025, shader counts increased dramatically, from 16 (low end) and 128 (high end) to 3,840 (entry level) and 21,760 (high end). At the high end, that is a 170-fold increase, or one doubling roughly every 2.4 years, close to (if somewhat slower than) the two-year doubling usually associated with Moore’s Law. GPU vendors have long cited shader growth to support their claim of delivering performance gains faster than Moore’s Law, which, by the way, is not dead. Piling all of these shaders into a single GPU changes the block diagram of the processor significantly.
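The growth factors and implied doubling times for the shader counts quoted above can be computed directly. This Python sketch uses only the 2007 and 2025 counts from the text and assumes a two-year doubling period as the Moore’s Law benchmark; `growth_summary` and `MOORE_DOUBLING_YEARS` are hypothetical names introduced for illustration, not an established API.

```python
import math


def growth_summary(start: int, end: int, years: float) -> tuple[float, float]:
    """Return (growth factor, years per doubling) for a count that went from start to end."""
    factor = end / start
    return factor, years / math.log2(factor)


YEARS = 2025 - 2007         # the 18-year span discussed in the text
MOORE_DOUBLING_YEARS = 2.0  # assumption: the commonly cited two-year cadence

# Growth a strict two-year doubling would deliver over the same span.
moore_factor = 2 ** (YEARS / MOORE_DOUBLING_YEARS)

# Shader counts from the text: (2007 count, 2025 count) per tier.
tiers = {"low/entry": (16, 3_840), "high end": (128, 21_760)}

for tier, (start, end) in tiers.items():
    factor, doubling = growth_summary(start, end, YEARS)
    print(f"{tier}: {factor:.0f}x, doubling every {doubling:.2f} years "
          f"(two-year doubling would give {moore_factor:.0f}x)")
```

Run as written, it reports a 240-fold increase (a doubling about every 2.28 years) for the low/entry tier and a 170-fold increase (about every 2.43 years) at the high end, versus the 512-fold gain a strict two-year doubling would produce over 18 years.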

Volta GPU Ushers in Tensor Cores

NVIDIA introduced tensor cores as part of its Volta GPU architecture in 2017. This early version of the technology debuted in the Tesla V100, a GPU designed specifically for machine-learning and artificial-intelligence applications.

In the wake of the Volta GPU, tensor cores became a standard feature of NVIDIA’s GeForce RTX family of gaming GPUs. They were a core building block of the first RTX cards, which were based on the Turing architecture and released in 2018, marking the beginning of the AI GPU era.

AI workloads will use every available shader and still ask for more. In dedicated AI GPUs, such as NVIDIA’s Hopper H100 and AMD’s MI300 series, shader counts have reached 16,896 and 16,384, respectively. These GPUs also include specialized AI cores: 528 tensor cores for NVIDIA and 1,024 matrix cores for AMD.

Despite all of the technological advances in hardware, graphics board prices haven’t risen to the same degree. In 2007, a high-end enthusiast AIB sold for around $600 to $800. In 2025, the high-end RTX 5090 launched at $1,999, just 150% more over 18 years. For context, cumulative U.S. inflation from 2007 to 2025 is estimated at approximately 55%. That means a graphics board that cost $800 in April 2007 would cost around $1,255 today, while the RTX 5090 offers about 170 times as many shaders as the 128 found in 2007’s high-end GPUs.
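The price comparison above can be made concrete by expressing both prices in 2025 dollars and computing shaders per dollar. This Python sketch takes the $800 starting price, its $1,255 inflation-adjusted equivalent, and the 128 and 21,760 shader counts from the text, and assumes the RTX 5090’s $1,999 US launch price. It ignores every board feature other than shader count, so treat it as a rough value metric only.

```python
# Figures from the text; NEW_PRICE assumes the RTX 5090's US launch price (MSRP).
OLD_PRICE = 800            # 2007 high-end AIB, top of the $600-$800 range
OLD_PRICE_IN_2025 = 1_255  # the text's inflation-adjusted equivalent of $800
NEW_PRICE = 1_999
OLD_SHADERS, NEW_SHADERS = 128, 21_760  # 2007 vs. 2025 high-end shader counts

nominal_increase = NEW_PRICE / OLD_PRICE - 1
real_increase = NEW_PRICE / OLD_PRICE_IN_2025 - 1
shader_ratio = NEW_SHADERS / OLD_SHADERS

# Shaders per dollar, with both prices expressed in 2025 dollars.
old_value = OLD_SHADERS / OLD_PRICE_IN_2025
new_value = NEW_SHADERS / NEW_PRICE
value_gain = new_value / old_value

print(f"Nominal price increase: {nominal_increase:.0%}")
print(f"Inflation-adjusted price increase: {real_increase:.0%}")
print(f"Shader count ratio: {shader_ratio:.0f}x")
print(f"Shaders per inflation-adjusted dollar: {value_gain:.0f}x better")
```

Under these assumptions, the nominal price rises about 150% and the inflation-adjusted price about 59%, while shaders per inflation-adjusted dollar improve roughly 107-fold.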

More bang for your buck. It’s Moore’s Law in action.

About the Author

Jon Peddie

President

Dr. Jon Peddie heads up Tiburon, Calif.-based Jon Peddie Research. Peddie lectures at numerous conferences on topics pertaining to graphics technology and the emerging trends in digital media technology. He is the former president of Siggraph Pioneers, and is also the author of several books. Peddie was recently honored by the CAD Society with a lifetime achievement award. Peddie is a senior and lifetime member of IEEE (joined in 1963), and a former chair of the IEEE Super Computer Committee.