Immersion Cooling (Part 2): Rethinking Thermal and Mechanical Behavior

What you’ll learn:

- Observations from early immersion cooling deployments.

- Opportunities for immersion-specific hardware innovation.

- Redefining industry standards for immersion reliability.

In Part 1, we looked at immersion cooling and the need to redefine reliability standards. Part 2 examines thermal and mechanical design aspects.

Even basic assumptions about thermal behavior need to shift when it comes to liquid cooling in the data center. Component temperature-rise limits, often defined relative to ambient air (e.g., a 30°C rise), don’t translate directly to immersion, because the factor that ultimately matters is still the component's junction temperature (Tj).

Because fluids carry heat away from surfaces more efficiently than air, systems can potentially run at higher bulk fluid temperatures (40°C, 50°C, or higher). However, there’s currently no industry consensus on standardized safe operating fluid temperature thresholds that guarantee acceptable Tj across diverse hardware.
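To make the Tj point concrete, here’s a minimal sketch that treats the path from junction to bulk fluid as a single lumped thermal resistance, θ_jf, so that Tj = T_fluid + P × θ_jf. The device power, θ_jf, and maximum Tj are hypothetical placeholders, not data for any real part. The takeaway is that the same fluid temperature can be comfortable for one device and out of spec for another.

```python
# Minimal sketch: junction temperature vs. bulk fluid temperature.
# Assumes one lumped junction-to-fluid thermal resistance (theta_jf).
# All numeric values are hypothetical placeholders, not vendor data.


def junction_temp_c(t_fluid_c: float, power_w: float, theta_jf_c_per_w: float) -> float:
    """Tj = T_fluid + P * theta_jf."""
    return t_fluid_c + power_w * theta_jf_c_per_w


def tj_margin_c(t_fluid_c: float, power_w: float,
                theta_jf_c_per_w: float, tj_max_c: float) -> float:
    """Headroom below the maximum rated Tj (negative means out of spec)."""
    return tj_max_c - junction_temp_c(t_fluid_c, power_w, theta_jf_c_per_w)


if __name__ == "__main__":
    POWER_W = 300.0    # hypothetical device power
    THETA_JF = 0.12    # hypothetical junction-to-fluid resistance, degC/W
    TJ_MAX_C = 95.0    # hypothetical maximum rated junction temperature
    for t_fluid in (40.0, 50.0, 60.0):
        tj = junction_temp_c(t_fluid, POWER_W, THETA_JF)
        margin = tj_margin_c(t_fluid, POWER_W, THETA_JF, TJ_MAX_C)
        print(f"fluid {t_fluid:.0f} C -> Tj {tj:.1f} C, margin {margin:+.1f} C")
```

With these placeholder numbers, the 60°C case exceeds the assumed Tj limit while 40°C and 50°C stay under it, which is why a single fluid-temperature threshold can’t guarantee acceptable Tj across diverse hardware.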

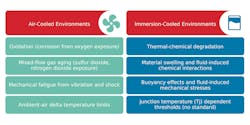

Mechanical stresses behave differently as well. In fluid, vibration and shock are damped, which masks traditional fatigue patterns, while new mechanical considerations appear that air-based models never accounted for, such as buoyancy effects on components and stresses from fluid dynamics.
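Buoyancy can be estimated with Archimedes’ principle: the fluid pushes up with a force equal to the weight of the fluid displaced, ρ_fluid × V × g. The sketch below computes the net vertical force on a submerged part; a positive result means the part tends to lift, so a latch or retention feature has to hold it in place. The part mass, displaced volume (which includes any sealed air cavity), and fluid density are hypothetical values chosen only for illustration; real dielectric fluids vary widely in density.

```python
# Minimal sketch: net buoyant (upward) force on a submerged component.
# Archimedes: F_up = rho_fluid * V_displaced * g, opposed by weight m * g.
# All inputs are hypothetical illustration values.

G = 9.80665  # standard gravity, m/s^2


def net_upward_force_n(mass_kg: float, displaced_volume_m3: float,
                       fluid_density_kg_m3: float) -> float:
    """Positive: part tends to float; negative: part tends to sink."""
    buoyant = fluid_density_kg_m3 * displaced_volume_m3 * G
    weight = mass_kg * G
    return buoyant - weight


if __name__ == "__main__":
    # Hypothetical 20 g part displacing 30 cm^3 (including a sealed air cavity)
    # in a hypothetical fluid with a density of 800 kg/m^3.
    f = net_upward_force_n(0.020, 30e-6, 800.0)
    print(f"net upward force: {f * 1000:.1f} mN")
```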

And while accelerated aging tables have long guided reliability predictions in air, no equivalent tables exist yet for immersion. Without them, manufacturers are forced to extrapolate durability from incomplete or mismatched data. It’s a risky proposition as immersion cooling moves from pilot projects to production-scale deployment.

Air-based standards no longer align with the chemical and mechanical failure modes that dominate in immersion environments (Fig. 1). The need for fluid-specific reliability models is no longer theoretical. It’s a growing gap that must be closed to support the next generation of high-performance data centers.

Observations from Early Immersion Cooling Deployments

Although interest in immersion cooling is accelerating, most current deployments rely on adaptations of air-cooled hardware rather than purpose-built layouts. Many early deployments simply immerse air-cooled servers in dielectric fluids, an approach I call “dunking and crossing your fingers.” Compatibility issues surface immediately with this approach, and it yields little usable reliability data for long-term standards development.

Without purpose-built immersion hardware, it remains difficult to isolate true failure mechanisms or build reliable aging models based on field experience. Many early systems, designed around air-cooling assumptions, are inadvertently overbuilt for fluid operation—hiding potential reliability risks and leaving efficiency gains untapped.

Electrical and Interconnect Challenges in Immersion

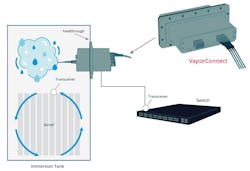

Purpose-built immersion hardware is still rare, limiting the availability of meaningful real-world data (Fig. 2). This has slowed efforts to refine reliability frameworks based on performance in the field instead of lab-based assumptions.

Electrical challenges are also emerging. Because dielectric fluids have a higher dielectric constant (Dk) and dissipation factor (Df) than air, high-speed connectors experience increased signal attenuation and impedance shifts, especially at higher frequencies. If unaddressed, these effects degrade system bandwidth and throughput, making specialized interconnects a central requirement for next-generation designs.
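As a rough first-order sketch: for an idealized TEM transmission line whose field region is entirely filled by the surrounding medium, characteristic impedance scales as 1/√Dk, and dielectric attenuation is α_d = π·f·√Dk·Df / c (in Np/m). The code applies both to an air-filled region that becomes fluid-filled. The fluid Dk and Df values are hypothetical placeholders. Real connectors are only partly air-filled (housing plastics and PCB laminates don’t change), so the actual shift is smaller and needs field-solver or measured data.

```python
# First-order sketch: effect of fluid replacing air in a TEM field region.
# Assumes a homogeneous dielectric filling the whole field region (idealized).
# Fluid Dk/Df values used below are hypothetical placeholders.
import math

C0_M_PER_S = 299_792_458.0             # speed of light in vacuum
DB_PER_NEPER = 20.0 / math.log(10.0)   # ~8.686 dB per neper


def impedance_after_fill(z_air_ohm: float, dk_fluid: float) -> float:
    """Z scales as 1/sqrt(Dk) when air (Dk ~ 1) is replaced by the fluid."""
    return z_air_ohm / math.sqrt(dk_fluid)


def dielectric_loss_db_per_m(freq_hz: float, dk: float, df: float) -> float:
    """alpha_d = pi * f * sqrt(Dk) * Df / c, converted from Np/m to dB/m."""
    alpha_np_per_m = math.pi * freq_hz * math.sqrt(dk) * df / C0_M_PER_S
    return alpha_np_per_m * DB_PER_NEPER


if __name__ == "__main__":
    DK_FLUID, DF_FLUID = 2.1, 0.001   # hypothetical fluid properties
    print(f"100-ohm air region -> {impedance_after_fill(100.0, DK_FLUID):.1f} ohm in fluid")
    for f_ghz in (14.0, 28.0, 56.0):
        loss = dielectric_loss_db_per_m(f_ghz * 1e9, DK_FLUID, DF_FLUID)
        print(f"{f_ghz:.0f} GHz: {loss:.2f} dB/m dielectric loss")
```

In this model, dielectric loss grows linearly with frequency, which is consistent with the effects being most pronounced at the highest data rates.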

Opportunities for Immersion-Specific Hardware Innovation

At the same time, immersion environments open considerable new opportunities. Recent testing has shown that power connectors designed initially for air cooling can carry over 150% of their rated current when fully submerged. Future immersion-specific setups could reduce copper mass by as much as half while still meeting demanding thermal and electrical requirements, supporting more compact, efficient configurations.
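One simple way to reason about these current and copper figures is a lumped steady-state heat balance, ΔT = I²R / (h·A), where h is the convective heat-transfer coefficient and A the wetted surface area. At a fixed allowed temperature rise, current capacity scales with √h, so a 1.5× current gain corresponds to roughly a 2.25× improvement in effective heat transfer under this model. For a round conductor (R ∝ 1/d², A ∝ d), holding current fixed then lets the diameter shrink as h^(-1/3) and the copper mass as h^(-2/3). This is an idealized model we’re assuming for illustration, not the method behind the results cited above. Contact resistance, flat-blade geometry, and flow conditions will all change the real numbers.

```python
# Idealized lumped model (an assumption, not measured data):
#   steady state: I^2 * R = h * A * dT
#   round conductor per unit length: R ~ 1/d^2, A ~ d  =>  I^2 ~ h * d^3 at fixed dT
import math


def current_ratio_for_h_ratio(h_ratio: float) -> float:
    """Fixed geometry and dT: I_max scales as sqrt(h)."""
    return math.sqrt(h_ratio)


def h_ratio_for_current_ratio(current_ratio: float) -> float:
    """Inverse: heat-transfer improvement implied by a current gain."""
    return current_ratio ** 2


def copper_mass_ratio_at_fixed_current(h_ratio: float) -> float:
    """Fixed I and dT: d ~ h^(-1/3), so mass (~ d^2) ~ h^(-2/3)."""
    return h_ratio ** (-2.0 / 3.0)


if __name__ == "__main__":
    h_ratio = h_ratio_for_current_ratio(1.5)   # the 150% figure from the text
    mass = copper_mass_ratio_at_fixed_current(h_ratio)
    print(f"implied h ratio: {h_ratio:.2f}, copper mass ratio: {mass:.2f}")
```

Under these assumptions the model gives a copper reduction of a bit over 40%, in the same range as the “as much as half” figure, which is about as much agreement as a toy model can offer.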

With true immersion-optimized frameworks, the industry can rethink foundational aspects of system design, including rack density, fluid-based thermal management, and long-term mechanical durability. Purpose-built systems will enable smaller form factors and improved thermal headroom, while reinforcing resilience against new chemical and mechanical stresses introduced by fluid environments.

Adapting Accelerated Life Testing and Introducing New Strategies

As immersion cooling moves toward broader adoption, reliability testing methods must evolve to reflect the realities of fluid-based environments.

Accelerated life testing (ALT) remains a core methodology, but its application to immersion cooling requires adaptation. As traditional aging models fall short, the industry is beginning to apply physics-of-failure (PoF) principles—identifying how materials, structures, and electrical pathways degrade under real immersion conditions—to redefine reliability from the ground up.

Conventional acceleration models, such as Arrhenius for thermal aging, may need revisions to account for chemical reaction kinetics and fluid-material interactions, potentially altering standard acceleration factors. Immersion environments also demand complementary strategies. Test-to-failure (TTF) techniques are becoming more important for identifying risks such as material swelling, chemical softening of seals, and gradual shifts in electrical properties.
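For reference, the conventional Arrhenius acceleration factor is AF = exp[(Ea/k)(1/T_use − 1/T_stress)], with temperatures in kelvin and k ≈ 8.617 × 10⁻⁵ eV/K. The sketch computes it for a single activation energy. The 0.7-eV value and the temperatures are hypothetical. The single-Ea form is exactly what may break down in fluid: a fluid-material reaction can have its own activation energy, or may not follow Arrhenius kinetics at all, so any AF used for immersion should be tied to a specific, verified failure mechanism.

```python
# Conventional single-mechanism Arrhenius acceleration factor.
# Ea and temperatures below are hypothetical; immersion-specific chemistry
# may need a different Ea per mechanism, or a different model entirely.
import math

BOLTZMANN_EV_PER_K = 8.617333262e-5


def arrhenius_af(ea_ev: float, t_use_c: float, t_stress_c: float) -> float:
    """AF = exp[(Ea/k) * (1/T_use - 1/T_stress)], temperatures converted to kelvin."""
    t_use_k = t_use_c + 273.15
    t_stress_k = t_stress_c + 273.15
    return math.exp((ea_ev / BOLTZMANN_EV_PER_K) * (1.0 / t_use_k - 1.0 / t_stress_k))


def equivalent_field_hours(test_hours: float, af: float) -> float:
    """Field-equivalent exposure represented by a test, assuming the model holds."""
    return test_hours * af


if __name__ == "__main__":
    af = arrhenius_af(ea_ev=0.7, t_use_c=50.0, t_stress_c=85.0)
    print(f"AF = {af:.1f}; 1,000 test hours ~ {equivalent_field_hours(1000, af):,.0f} field hours")
```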

Integrating Combined Stress Factors for Realistic Testing

Effective reliability testing now demands integrating multiple concurrent stress factors. Thermal cycling, mechanical loading, chemical degradation, and electrical signal shifts must be evaluated together to fully characterize system behavior over time. Frameworks based on oxidation aging and thermal stress relaxation (once sufficient for air cooling) no longer apply.

>>Check out Part 1 of this series, and the TechXchange for similar articles and videos

New models—likely grounded in PoF approaches—must account for thermal-chemical degradation kinetics, fluid-material compatibility, and the unique mechanical loads introduced by immersion, such as buoyancy and dynamic fluid forces.

Advancing Immersion Testing Through Industry Collaboration

By applying focused immersion workstreams, the Open Compute Project (OCP) is bringing together server OEMs, immersion fluid developers, connector manufacturers, and hyperscale operators to build shared reliability benchmarks. Rather than rely on fragmented efforts, these collaborations aim to establish a common foundation for evaluating immersion-specific challenges.

Exploring the Role of HALT in Immersion Environments

Highly accelerated life testing (HALT), while not yet widely applied to immersion, holds promise for exposing design vulnerabilities unique to submerged systems. Adapting HALT for immersion introduces new challenges, particularly in applying extreme combined stresses—thermal, vibrational, and chemical—directly within a fluid medium. Traditional dry-air HALT methods may pass hardware that later fails under fluid exposure, highlighting the need for immersion-based stress testing.
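HALT is typically run as a step-stress search: raise one stress in fixed increments, dwell, run a functional check, and stop at the first failure to locate the operating limit. A minimal sketch of that loop follows. The `passes` callback is a hypothetical stand-in for a dwell plus functional test performed with the unit submerged. The temperature levels and the failure threshold in the usage are invented for illustration, and a real HALT program would also probe destruct limits and combine stresses such as vibration.

```python
# Minimal step-stress search, as used in HALT-style testing.
# `passes` is a hypothetical callback standing in for "dwell at this level,
# then run a functional test with the unit fully immersed".
from typing import Callable, Optional, Tuple


def find_operating_limit(start: float, step: float, max_level: float,
                         passes: Callable[[float], bool]
                         ) -> Tuple[Optional[float], Optional[float]]:
    """Return (last passing level, first failing level).

    The first failing level is None if every step up to max_level passed;
    the last passing level is None if the very first step failed.
    """
    n_steps = int(round((max_level - start) / step))
    last_pass: Optional[float] = None
    for i in range(n_steps + 1):
        level = start + i * step   # computed from the index to avoid float drift
        if not passes(level):
            return last_pass, level
        last_pass = level
    return last_pass, None


if __name__ == "__main__":
    # Hypothetical unit that stops functioning above 105 degC fluid temperature.
    print(find_operating_limit(40, 10, 150, lambda t: t <= 105))  # -> (100, 110)
```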

Building a Complete Immersion Reliability Cycle

Short-term immersion evaluations, often lasting only a few days, can miss critical failure mechanisms that emerge only after prolonged fluid exposure. Effective accelerated protocols must replicate the cumulative chemical, thermal, and mechanical impacts that build up over extended immersion periods—not just short-duration tests.
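A Weibull wear-out model illustrates why short exposures miss slow mechanisms. If time to failure follows F(t) = 1 − exp[−(t/η)^β] with β > 1, the chance of seeing even one failure among n units in a short test is tiny. The scale η, shape β, unit count, and durations below are hypothetical, chosen only to show the trend.

```python
# Probability of observing at least one failure during a test, assuming a
# Weibull time-to-failure distribution. eta (scale), beta (shape), unit count,
# and durations are hypothetical illustration values.
import math


def weibull_cdf(t_hours: float, eta_hours: float, beta: float) -> float:
    """F(t) = 1 - exp(-(t/eta)^beta)."""
    return 1.0 - math.exp(-((t_hours / eta_hours) ** beta))


def p_at_least_one_failure(t_hours: float, n_units: int,
                           eta_hours: float, beta: float) -> float:
    """Assumes units fail independently: 1 - (1 - F(t))^n."""
    return 1.0 - (1.0 - weibull_cdf(t_hours, eta_hours, beta)) ** n_units


if __name__ == "__main__":
    ETA_H, BETA, N_UNITS = 5000.0, 3.0, 10   # hypothetical wear-out mechanism
    for hours in (72, 500, 2000):
        p = p_at_least_one_failure(hours, N_UNITS, ETA_H, BETA)
        print(f"{hours:>5} h test: P(at least one failure) = {p:.4f}")
```

An accelerated condition effectively shrinks η in test time, which is where a validated acceleration model matters. Without one, longer exposure is the only lever.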

Several structured approaches focus specifically on immersion environments. A testing matrix should span three crucial phases: dry-in-air baselines, fully immersed operation, and post-immersion (wet-in-air) performance. Capturing this full cycle is essential because components may absorb fluid during immersion, altering their mechanical strength and electrical behavior even after drying.
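The three-phase matrix can be captured as a simple data structure, with each measurement compared against its dry-in-air baseline so that residual changes after drying stand out. The measurement names and values in this sketch are hypothetical examples, not a published test list.

```python
# Sketch of a three-phase immersion test matrix and a baseline-drift check.
# Measurement names and example values are hypothetical.
from itertools import product
from typing import Dict, List, Tuple

PHASES = ("dry-in-air", "immersed", "wet-in-air")
MEASUREMENTS = ("contact_resistance_mohm", "insertion_loss_db",
                "dielectric_withstand_v", "retention_force_n")


def build_matrix() -> List[Tuple[str, str]]:
    """Every measurement is taken in every phase."""
    return list(product(PHASES, MEASUREMENTS))


def drift_vs_baseline(results: Dict[Tuple[str, str], float],
                      measurement: str) -> Dict[str, float]:
    """Fractional change of each later phase relative to the dry-in-air baseline."""
    baseline = results[(PHASES[0], measurement)]
    return {phase: (results[(phase, measurement)] - baseline) / baseline
            for phase in PHASES[1:]}


if __name__ == "__main__":
    print(len(build_matrix()), "test cells")
    example = {("dry-in-air", "contact_resistance_mohm"): 10.0,
               ("immersed", "contact_resistance_mohm"): 12.0,
               ("wet-in-air", "contact_resistance_mohm"): 11.0}
    print(drift_vs_baseline(example, "contact_resistance_mohm"))
```

A nonzero wet-in-air drift after drying is the signal to watch for: fluid absorbed during immersion still affecting behavior once the part is back in air.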

In some cases, the "wet-in-air" condition creates the highest risk, as trapped fluid residues, particularly within porous materials, can compromise dielectric strength or accelerate localized corrosion when components are re-exposed to air.

Redefining Industry Standards for Immersion Reliability

Initial priorities for immersion reliability standards include addressing chemical degradation of materials and the signal-integrity shifts that occur under fluid exposure. At the same time, OCP contributors are reassessing traditional accelerated aging models, recognizing that air-based assumptions no longer reliably predict degradation patterns in immersion systems.

Through the guidance of OCP, Molex has taken an active leadership role by contributing immersion-focused reliability proposals—including a draft testing methodology submitted to EIA in Fall 2024—to help shape emerging industry standards. These proposals outline structured methodologies for accelerated testing, mechanical validation under fluid conditions, and fluid-material compatibility assessments at the component level. They create a technical framework to support broader standardization efforts.

Other industries provide useful parallels. In automotive reliability testing, for example, contaminant exposure is often deliberately introduced to simulate long-term field degradation. A similar approach could strengthen immersion validation by deliberately introducing known chemical-degradation catalysts, accelerating the emergence of failure mechanisms typically seen over multi-year operational cycles.

Without alignment around fluid-specific reliability frameworks, immersion testing risks fracturing across proprietary methods, creating interoperability challenges and delaying adoption. By investing early in shared methodologies, Molex and similar companies are helping to build predictable reliability pathways and scalable immersion-cooled systems. Industry collaborations will only help to unify these efforts (Fig. 3).

Accelerating Toward Immersion Reliability

Over the next two to three years, the trajectory of immersion cooling will hinge on the industry's ability to formalize fluid-specific reliability standards, expand laboratory infrastructure, and capture real-world performance data from early deployments.

One immediate priority is codifying standardized reliability frameworks—grounded in physics-of-failure principles—that address how materials, mechanical structures, and electrical systems behave under fluid exposure.

Just as critically, collecting real-world failure data from early immersion deployments will be essential to validate laboratory acceleration models and refine long-term reliability predictions. Establishing clear testing protocols for accelerated life analysis, fluid-material compatibility validation, and post-immersion failure assessments will be key to generating consistent, comparable data across suppliers.

Expanding access to immersion-compatible test environments, such as fluid-aging rigs, multi-stressor platforms, environmental chambers, and advanced material characterization systems, is equally vital to closing existing data gaps. Without the ability to apply combined chemical, thermal, mechanical, and electrical stresses in realistic conditions, durability predictions will remain uncertain, hindering large-scale deployment.

The potential benefits of immersion cooling are substantial. Higher rack densities and improved thermal headroom both align with the evolving demands of AI-driven and high-performance computing architectures.

Formalizing standards and expanding immersion-specific testing capabilities will therefore be essential to unify reliability practices, streamline component validation, and support broader adoption at scale.

We need to close these technical gaps now, while the immersion ecosystem is still taking shape, because that ecosystem will define the future of high-performance computing. By establishing clear standards and building rigorous fluid-specific testing frameworks, the industry can unlock the full potential of immersion cooling and reshape the data center landscape for decades to come.

About the Author

Dennis Breen

Senior Principal Engineer, Molex

Dennis Breen is the Senior Principal Engineer for Molex with more than three decades of expertise in designing innovative connectors and electromechanical devices. The holder of several patents, Breen has made significant contributions to the field, particularly in high-speed data interconnects.