Set-Top Boxes Evolve from Media-Consumption Device to Smart-Home Hub

Change is overtaking the set-top box. Don’t worry: It still merits its place next to our television sets. But a confluence of three powerful forces bears down on the familiar little box, transmuting it into something new and remarkable in both function and architecture. The locus of this transformation is the SoC at the box’s heart, where the forces demand machine-learning acceleration at an unprecedented price/performance point.

Three Forces in Play

What are the three forces pushing the set-top box into an evolutionary process? The first operates on the box’s human interface. The second roils the approach to data security, not just for the set-top box but for the entire content-delivery network. And the third alters how the box processes visual data.

The change in human interface will be the most evident one for end users. Now that consumers are on casual speaking terms with Amazon’s Echo and Google’s Home Mini, they will have no patience for on-screen menus or push-to-talk speech commands. We’re even losing patience with wake words like “Alexa,” “Hey,” and “OK.”

Soon we shall expect a human interface to absorb context, recognizing speakers, their location, and their intent, so that the system accurately recognizes when it is (and isn’t) being addressed. And we shall also expect the system to use this contextual information in new ways, anticipating our needs and moods to adjust our entertainment and our smart home accordingly.

Today’s voice-command systems can already do a small subset of these functions. But due to the lack of local computing performance and memory in today’s set-top boxes, they must upload the data they collect to the cloud for interpretation. Already there are privacy concerns, as users realize smartphones and speakers are windows into their homes. In some cases where such an uplink crosses a national border, it may be illegal. Enter our second force: the drive for data protection.

But now add more data: additional microphones, cameras integrated into the device (as in, for example, Portal TV), and perhaps even more video from security cameras, to achieve a multi-modal user interface. Taken together, this data set is far too personal to expose outside the home. Without heavy compression, which can erase the subtle cues required by the intuitive human interface, there’s not even the upstream bandwidth to push all of this data back to the cloud. The local device must have the capacity to analyze the data at the edge in the device itself. And today, that means the ability to run very fast machine-learning inference models.

Which brings us to the third force: The overwhelming adoption of 4K Ultra-High Definition displays in the developed world (recent estimates say 40% of U.S. households have a 4K TV) is causing a crisis in downstream bandwidth. Content providers are only offering a small amount of 4K source material. And system operators simply don’t have the bandwidth to provide endless high-quality 4K streams at once. Many systems can offer no more than 5 to 6 Mb/s per user during peak demand. Even though HEVC/AV1 codecs can do amazingly well at low bit rates, a content stream compressed from a 4K source down to 6 Mb/s doesn’t make for a pleasant viewing experience.

Consequently, system operators have been encoding 1080p sources rather than 4K. This produces a better viewing experience at low bit rates, but even the best HEVC decoder can’t recover data for the 4K display that wasn’t in the 1080p source.
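To make the trade-off concrete, the short calculation below compares how many encoded bits a 6-Mb/s stream can spend on each pixel for a 4K source versus a 1080p source. It is a back-of-the-envelope sketch: the 30-frames/s rate and the helper name `bits_per_pixel` are assumptions for illustration, not figures from any operator.

```python
# Average encoded bits available per pixel at a fixed stream bit rate.
# Assumptions (not from the article): 30 frames/s, and 6 Mb/s read as
# 6,000,000 bits/s.

def bits_per_pixel(bitrate_bps: float, width: int, height: int, fps: float) -> float:
    """Average number of encoded bits the stream can spend on each pixel."""
    return bitrate_bps / (width * height * fps)

BITRATE_BPS = 6_000_000  # peak-hour per-user budget cited in the text
FPS = 30                 # assumed frame rate

bpp_4k = bits_per_pixel(BITRATE_BPS, 3840, 2160, FPS)
bpp_1080p = bits_per_pixel(BITRATE_BPS, 1920, 1080, FPS)

print(f"4K:    {bpp_4k:.4f} bits/pixel")
print(f"1080p: {bpp_1080p:.4f} bits/pixel")
print(f"1080p gets {bpp_1080p / bpp_4k:.0f}x the bits per pixel")
```

Because a 4K frame has four times the pixels of a 1080p frame, the 1080p encode gets four times the bits per pixel at the same bit rate, whatever frame rate is assumed.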

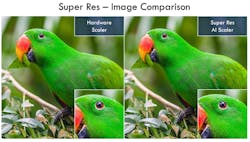

A codec can’t do that, but companies such as Synaptics have shown that it’s possible with a machine-learning model. By classifying portions of the image, the model can infer their original appearance and generate appropriate additional pixels, such as the iridescent ribbing of a feather or the true sharpness of a knife edge that aren’t present in the source (Fig. 1). This isn’t interpolation, but intent-based image generation—much as a skilled painter might roughly sketch an outdoor scene and then add detail later in their studio.

Pixel generation of this nature is a powerful method for image enhancement. But it requires the machine-learning model to process the output of the HEVC/AV1 decoder, frame by frame, in real time. That raises two issues. First, while a modern, high-performance GPU can deliver the required computational speed, such a GPU would be far beyond the cost and power budgets of a set-top box. Second, the deep-learning model would be handling third-party copyrighted data in viewable form, so it would have to be impenetrably secure.
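A rough sizing exercise shows why the computational demand is so steep. The sketch below assumes 4K output at 50 frames/s and a purely hypothetical model cost of 10,000 operations per output pixel. That cost figure is an illustration only and does not describe any real enhancement model.

```python
# Real-time budget for enhancing every frame of a 4K stream.
WIDTH, HEIGHT = 3840, 2160  # 4K UHD output resolution
FPS = 50                    # frame rate assumed for this sketch

# Hypothetical model cost in operations per output pixel (illustration only).
HYPOTHETICAL_OPS_PER_PIXEL = 10_000

pixels_per_frame = WIDTH * HEIGHT
pixels_per_second = pixels_per_frame * FPS
frame_budget_ms = 1000 / FPS  # time available to finish each frame
required_tops = pixels_per_second * HYPOTHETICAL_OPS_PER_PIXEL / 1e12

print(f"Output pixels per second: {pixels_per_second:,}")
print(f"Time budget per frame:    {frame_budget_ms:.1f} ms")
print(f"Sustained throughput:     {required_tops:.2f} tera-operations/s")
```

The pixel rate and the per-frame deadline follow directly from the resolution and frame rate. Only the throughput line depends on the assumed per-pixel cost, and it scales linearly with that assumption.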

An AI Challenge

The converging forces applied toward set-top boxes by multi-modal user interfaces, increased security, and image enhancement all drive toward the same conclusion—the need for a powerful, flexible, and secure inference-acceleration engine in the set-top SoC. At first glance this might seem a hopeless request. GPUs have the speed for these tasks, but don’t come close to expected set-top box cost or power limits. They’re invaluable in a data center for training machine-learning models, but they’re out of place for inferencing at the network edge.

A more hopeful example comes from smartphones. The emerging generation of handsets includes an inferencing accelerator IP block in the devices’ SoCs. The promising aspect is that these blocks meet the cost and power requirements of their environment. But the performance of these accelerators, limited by those stringent requirements and by memory bandwidth, still falls well short of what it takes to perform real-time image enhancement at 30 to 50 frames/s.

A Solution

One example of an SoC that helps solve the set-top box AI acceleration challenge is Synaptics’ VS680. It enables the box to become the nerve center for a multi-modal interface between humans and smart homes.

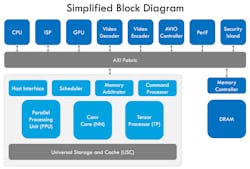

The platform includes all of the expected blocks for an advanced set-top SoC (Fig. 2). But the key innovations center on an entirely new IP block: an AI inferencing accelerator optimized for the requirements of the set-top environment and designed to meet the new challenges of multi-modal human interfaces, enhanced security, and real-time image enhancement.

Clearly, the first challenge in these areas is performance (Fig. 3). For the user interface, the SoC must perform a battery of diverse tasks—primary-speaker isolation from multiple microphone channels, speech recognition and pairing, object classification from multiple cameras, and face recognition—all in real time. All of these tasks are necessary to detect commands from the correct person and to identify the necessary context for an adaptive human interface.
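How the results of those tasks might be combined can be sketched in a few lines. The example below is a hypothetical fusion step, not a description of any shipping product. The `Perception` fields, the `should_accept_command` function, and the 0.8 confidence threshold are all invented for illustration and stand in for the outputs of the speaker-isolation, speech-recognition, and camera models.

```python
from dataclasses import dataclass
from typing import Optional, Set

@dataclass
class Perception:
    """Per-utterance outputs of hypothetical upstream models (illustrative names)."""
    transcript: str              # text from speech recognition
    speaker_id: Optional[str]    # identity from voice/face recognition, None if unknown
    speaker_confidence: float    # 0.0 to 1.0
    facing_device: bool          # camera-derived cue that the speaker addresses the box

def should_accept_command(p: Perception, enrolled: Set[str],
                          min_confidence: float = 0.8) -> bool:
    """Accept only non-empty speech from a confidently identified, enrolled
    household member who appears to be addressing the device."""
    return (
        bool(p.transcript.strip())
        and p.speaker_id in enrolled
        and p.speaker_confidence >= min_confidence
        and p.facing_device
    )

household = {"alice", "bob"}
addressed = Perception("dim the lights", "alice", 0.93, facing_device=True)
overheard = Perception("dim the lights", "alice", 0.93, facing_device=False)
stranger = Perception("open the door", None, 0.0, facing_device=True)

print(should_accept_command(addressed, household))
print(should_accept_command(overheard, household))
print(should_accept_command(stranger, household))
```

A real system would weigh far richer context, but even this simple gate needs every upstream model to deliver its result within the same real-time window.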

It took three innovations to achieve the necessary performance within the set-top constraints. First, the accelerator uses a massively parallel architecture organized around machine-learning network data flows rather than around the needs of graphics rendering. This allows far more efficient handling of data during inference computation, across a wide variety of model types.

Second, the most intensive computations (convolutions) are performed in INT8 rather than in FP32 format, drastically reducing hardware and energy requirements for each individual calculation. This optimization has been repeatedly shown to have very little impact on classification accuracy.
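The idea behind INT8 arithmetic can be illustrated with a single dot product, the building block of a convolution. The sketch below uses symmetric per-tensor quantization, one common scheme chosen here for simplicity. It is an assumption for illustration and not necessarily the scheme used in any particular accelerator, and the weight and activation values are made up.

```python
def quantize_symmetric(values, num_bits=8):
    """Map floats to signed integers in [-qmax, qmax] using one shared scale."""
    qmax = 2 ** (num_bits - 1) - 1  # 127 for 8 bits
    max_abs = max(abs(v) for v in values)
    scale = max_abs / qmax if max_abs > 0 else 1.0
    quantized = [max(-qmax, min(qmax, round(v / scale))) for v in values]
    return quantized, scale

def int8_dot(a, b):
    """Dot product computed on quantized integers, rescaled to float at the end."""
    qa, scale_a = quantize_symmetric(a)
    qb, scale_b = quantize_symmetric(b)
    # Integer multiply-accumulate; hardware would typically use a wider accumulator.
    acc = sum(x * y for x, y in zip(qa, qb))
    return acc * scale_a * scale_b

# Illustrative values only.
weights = [0.12, -0.53, 0.88, 0.05, -0.31, 0.47, -0.76, 0.29]
activations = [0.9, 0.1, 0.4, 0.7, 0.3, 0.8, 0.2, 0.6]

exact = sum(w * a for w, a in zip(weights, activations))
approx = int8_dot(weights, activations)

print(f"float result: {exact:.4f}")
print(f"INT8 result:  {approx:.4f}")
print(f"abs error:    {abs(exact - approx):.4f}")
```

All of the multiply-accumulate work happens on small integers, and only the final rescale touches floating point. That is where the savings in silicon area and energy per calculation come from.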

Third, the accelerator is equipped with abundant memory bandwidth. A sizable on-chip neural processing unit (NPU) cache is combined with a 64-bit, very high-speed LPDDR4 (3733) interface to DRAM. This allows inference acceleration, video decoding, and graphics rendering to run simultaneously with DRAM bandwidth to spare.
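The headline bandwidth of such an interface is easy to estimate. The sketch below assumes that “3733” denotes a data rate of 3733 megatransfers per second, and it compares the theoretical peak against one pass over decoded 4K video at 50 frames/s in an assumed 8-bit 4:2:0 format (1.5 bytes per pixel). Both assumptions are for illustration, and sustained bandwidth in a real system is lower than the theoretical peak.

```python
# Theoretical peak bandwidth of a 64-bit DRAM interface.
TRANSFERS_PER_SECOND = 3733e6  # assumed meaning of "3733": 3733 MT/s
BUS_WIDTH_BITS = 64

peak_bytes_per_second = TRANSFERS_PER_SECOND * BUS_WIDTH_BITS / 8
peak_gb_per_second = peak_bytes_per_second / 1e9

# One read or write pass over every decoded 4K frame.
# Assumed pixel format: 8-bit 4:2:0, which averages 1.5 bytes per pixel.
BYTES_PER_PIXEL = 1.5
FPS = 50
frame_bytes = 3840 * 2160 * BYTES_PER_PIXEL
pass_gb_per_second = frame_bytes * FPS / 1e9

print(f"Peak DRAM bandwidth:  {peak_gb_per_second:.3f} GB/s")
print(f"One 4K pass @ 50 fps: {pass_gb_per_second:.3f} GB/s")
print(f"Share of peak:        {pass_gb_per_second / peak_gb_per_second:.1%}")
```

Decoding, enhancement, and rendering each touch a frame several times, so the real traffic is a multiple of the single-pass figure. The comparison simply shows the headroom a wide, fast interface provides.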

The next challenge is security. The conventional SoC architecture, which is centered on a CPU core, permits far too many ways for a malicious actor to access protected data. Conventional set-top SoCs have gone to great lengths to keep the CPU from gaining access to the video buffer, where content resides in an unprotected form. When adding an inferencing accelerator, which must also handle unscrambled data, it becomes necessary to ensure that not only the video buffer, but all of the internal memory instances used by the accelerator, are unreadable by the CPU. Synaptics’ SyKURE technology achieves this isolation.
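The isolation requirement can be pictured as a default-deny access policy enforced between bus masters and memory regions. The toy model below is only a conceptual sketch. The region names, the `Master` enum, and the `can_read` function are hypothetical, and the code does not describe how SyKURE or any real SoC implements protection in hardware.

```python
from enum import Enum, auto

class Master(Enum):
    """Hypothetical bus masters inside a set-top SoC."""
    CPU = auto()
    VIDEO_DECODER = auto()
    NPU = auto()

# Hypothetical policy: which masters may read each memory region.
READ_POLICY = {
    "application_memory": {Master.CPU},
    "video_buffer": {Master.VIDEO_DECODER, Master.NPU},
    "npu_working_memory": {Master.NPU},
}

def can_read(master: Master, region: str) -> bool:
    """Default deny: a read is allowed only if the policy lists the master."""
    return master in READ_POLICY.get(region, set())

for region in READ_POLICY:
    allowed = sorted(m.name for m in Master if can_read(m, region))
    print(f"{region}: readable by {', '.join(allowed)}")
```

The point of the model is the last two rows of the table: once an accelerator handles unscrambled frames, its own working memory has to be walled off from the CPU just as the video buffer is.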

Overall, the system performs frame-by-frame image enhancement in real time with full 4K output at up to 50 frames/s.

A Comprehensive Environment

Introducing the added dimension of AI acceleration raises an important new issue. A machine-learning accelerator is useless without trained machine-learning models and the development environments necessary to customize, maintain, and augment them. To that end, Synaptics offers a variety of trained inference models. It also supports the VS680 in the TensorFlow Lite and ONNX neural-network development environments, making the accelerator a genuinely open design (Fig. 4).

Secure, AI-enhanced SoCs can bridge the gap from the fundamentally closed world of the set-top box into the open-ended future of the perceptive, adaptive human-interface hub. They will give developers the opportunity to create tomorrow’s human interface, relying on machine learning to transform the box’s sensor inputs into a perception of the users’ wants and needs. By adjusting and retraining the inference models—without hardware changes—they can stay current with new fashions in perceptive user-interface design and with advances in video presentation.

Gaurav Arora is Vice President of System Architecture and AI/ML Technologies at Synaptics.

About the Author

Gaurav Arora is currently leading the Smart Home Systems-on-a-Chip Product and Solutions Architecture team for Synaptics’ IoT Division, spearheading product and technology direction in video/vision AI/ML frameworks and models, device security, and system architecture. He conceptualized and built a framework for AI/ML secure inferencing for the company’s SoCs, which is now trademarked under the SyNAP name.

Arora holds a BA in electronics and communications engineering from the Birla Institute of Technology (India), an MSEE from the University of Maryland, College Park, and an MBA from the Stern School of Business, New York University. He holds more than 10 U.S. patents.