Not All Emulators Are Created Equal

For a verification technology that wasn’t well known outside of the largest semiconductor designs until a decade ago, hardware-emulation adoption has grown considerably over the past few years. Semiconductor companies from Silicon Valley to China are making it a mandatory tool in their verification toolboxes.

Why this sudden increase in hardware-emulation deployment within the design-verification flow?

Hardware emulators are specialized computing engines designed for specific tasks, chiefly the functional verification of semiconductor designs and the verification challenges associated with it. They perform these tasks at speeds no software-based verification engine can match, with no penalty in verification accuracy.

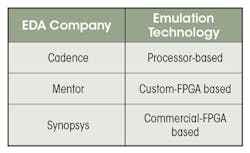

However, not all emulators are created equal. Currently, the major hardware-emulation suppliers deliver three different hardware-emulation technologies, architectures, and implementations, making any “apples-to-apples” comparison rather difficult (see table).

Each emulation provider uses a different approach to its technology. (Source: Authors)

A case in point is clock frequency. Unless that parameter is properly defined, as discussed below, a straight comparison may lead to the wrong conclusion. In general, hardware-emulation performance is measured by speed, throughput, and latency, three parameters that are often poorly understood or not grasped at all. Let’s discuss them in detail.

Emulation Clock versus Design Clock

Speed of execution relates to the emulator’s clock frequency. While it’s true that the higher the clock frequency, the faster the execution, no two emulators handle clocks in the same way.

Any modern system-on-chip (SoC) design includes a variety of clocks running at different frequencies, one of which is the fastest design clock. It would seem reasonable to assume that the fastest design clock runs at the emulator clock frequency. Unfortunately, this is not necessarily so.

Depending on the emulation technology and architecture, the ratio between the emulator clock and the design clock may be one-to-one, two-to-one, or even higher. If the ratio is one-to-one in emulator A but two-to-one in emulator B, and both emulation clocks run at the same frequency, emulator A processes the design at twice the speed of emulator B (see figure).

The emulation-clock-to-design-clock ratios of emulators from two competing vendors differ: the top shows a two-to-one ratio; the bottom shows a one-to-one ratio. (Source: Lauro Rizzatti)

Emulation clock speed is usually reported by the emulator and is therefore well understood and easy to compare. Given the above, however, it’s important to make sure which clocks are being compared.
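A minimal Python sketch of the comparison above, assuming the clock ratio is simply the number of emulation clock cycles per design clock cycle. The function name `design_clock_mhz` and the 2-MHz emulation clock are hypothetical illustrations, not vendor data.

```python
def design_clock_mhz(emulation_clock_mhz: float, clock_ratio: int) -> float:
    """Fastest design-clock frequency for a given emulation-to-design clock ratio.

    clock_ratio is the number of emulation clock cycles per design clock
    cycle (1 for one-to-one, 2 for two-to-one). Hypothetical helper.
    """
    if clock_ratio < 1:
        raise ValueError("clock_ratio must be at least 1")
    return emulation_clock_mhz / clock_ratio


# Hypothetical emulators A and B reporting the same emulation clock.
emu_a = design_clock_mhz(emulation_clock_mhz=2.0, clock_ratio=1)  # one-to-one
emu_b = design_clock_mhz(emulation_clock_mhz=2.0, clock_ratio=2)  # two-to-one
print(f"A: {emu_a} MHz, B: {emu_b} MHz, A/B = {emu_a / emu_b}")
# prints "A: 2.0 MHz, B: 1.0 MHz, A/B = 2.0"
```

Both emulators would report the same 2-MHz emulation clock, yet A runs the design twice as fast.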

Design Clock in Presence of Design Memories

You may wonder what memories have to do with clocks. In an emulation system, quite a lot.

In field-programmable gate-array (FPGA) prototyping systems, design memories are implemented with physical memories functionally equivalent to those used in the final design.

In an emulation system, by contrast, memories (including their controllers) are implemented as soft models mapped onto the emulator’s programmable resources. This approach provides flexibility and supports multi-user and remote access, which are hard to achieve with physical memories. However, modeling a memory controller with multi-port access, multiple data rates, and a complex read/write scheme may require several emulation clock cycles within one design clock cycle to complete the entire memory cycle.

The net consequence is a drop in the design clock frequency, which may be significant. Again, the extent of the drop depends on how the emulator implements memories.
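To see how a soft memory model can pull the design clock down, here is a rough sketch under a simplifying assumption of our own: a design cycle must span as many emulation cycles as its slowest operation needs, whether that is the base clock ratio or a full memory access. The function name and all numbers are hypothetical; real emulators schedule memory accesses in vendor-specific ways.

```python
def effective_design_clock_mhz(emulation_clock_mhz: float,
                               clock_ratio: int,
                               memory_cycles: int) -> float:
    # Simplifying assumption: one design cycle must span enough emulation
    # cycles for its slowest operation, i.e. either the base clock ratio or
    # the emulation cycles a soft memory model needs for a complete access.
    cycles_per_design_cycle = max(clock_ratio, memory_cycles)
    return emulation_clock_mhz / cycles_per_design_cycle


# Hypothetical numbers: a 2-MHz emulation clock, a one-to-one ratio, and a
# multi-port memory model that needs 4 emulation cycles per access.
without_memory = effective_design_clock_mhz(2.0, clock_ratio=1, memory_cycles=1)
with_memory = effective_design_clock_mhz(2.0, clock_ratio=1, memory_cycles=4)
print(without_memory, with_memory)  # 2.0 0.5
```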

Wall Clock Time and Throughput

Raw emulator clock speed says little about how long it takes, from beginning to end, to download the design onto the emulator, run tests, collect debugging data, understand a problem, and arrive at an answer. That end-to-end time is the wall-clock time. It comprises both the useful cycles, in which design activity is processed, and the overhead cycles not directly associated with design computation.

Throughput, by contrast, focuses only on the useful cycles: it captures how many useful cycles are executed in a given amount of time.

Throughput can be measured by dividing the number of useful cycles in an emulation session by the total wall-clock time, which yields a number expressed in the same units as clock speed. If the session has no overhead cycles, the throughput equals the emulation clock speed when the emulation-to-design clock ratio is one-to-one, or half of it when the ratio is two-to-one. On the other hand, if the testbench spends half of the time processing information while the design waits, the throughput is cut in half.
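The throughput definition above can be written as a short calculation. This is a sketch that assumes the design advances at the emulation clock divided by the clock ratio whenever it is active and makes no progress during overhead time; the function names, the 1-MHz clock, and the session lengths are hypothetical.

```python
def throughput_hz(useful_design_cycles: float, wall_clock_s: float) -> float:
    """Useful design cycles executed per second of wall-clock time."""
    return useful_design_cycles / wall_clock_s


def session(emulation_clock_hz: float, clock_ratio: int,
            active_s: float, overhead_s: float) -> float:
    # While active, the design advances at emulation_clock_hz / clock_ratio;
    # during overhead (e.g. testbench processing) it advances zero cycles.
    useful = emulation_clock_hz / clock_ratio * active_s
    return throughput_hz(useful, active_s + overhead_s)


clk = 1_000_000  # hypothetical 1-MHz emulation clock
print(session(clk, 1, active_s=10, overhead_s=0))   # 1000000.0: equals clock speed
print(session(clk, 2, active_s=10, overhead_s=0))   # 500000.0: two-to-one ratio
print(session(clk, 1, active_s=10, overhead_s=10))  # 500000.0: testbench busy half the time
```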

Let’s review some typical issues and their impact on throughput.

Design Setup

An emulation session begins with downloading the compiled design object onto the emulator and configuring its programmable resources to mimic design behavior. The footprint of a large design may reach into the terabyte range. This large amount of data is transferred to the emulator via a PCIe 3.0 interface, which on 16 lanes supports a theoretical maximum of roughly 16 GB/s in each direction. The achieved rate is typically lower, often much lower. Loading and configuring may take several minutes, depending on the emulation architecture and implementation.
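For a sense of scale, the sketch below derives the theoretical per-direction PCIe 3.0 x16 payload rate from the link parameters (8 GT/s per lane, 128b/130b encoding) and computes a lower bound on the time to move a 1-TB design image. Packet and protocol overhead are ignored, and the `efficiency` derating factor is a hypothetical knob, not a measured value.

```python
PCIE3_TRANSFERS_PER_S = 8e9  # PCIe 3.0 signaling rate per lane (8 GT/s)
PCIE3_ENCODING = 128 / 130   # 128b/130b line-code efficiency


def pcie3_payload_bytes_per_s(lanes: int) -> float:
    # Theoretical per-direction rate, ignoring packet/protocol overhead.
    return PCIE3_TRANSFERS_PER_S * PCIE3_ENCODING / 8 * lanes


def download_time_s(design_bytes: float, lanes: int = 16,
                    efficiency: float = 1.0) -> float:
    # efficiency < 1 models the achieved fraction of the theoretical rate
    # (hypothetical; real values depend on the emulator and host).
    return design_bytes / (pcie3_payload_bytes_per_s(lanes) * efficiency)


x16 = pcie3_payload_bytes_per_s(16)
print(f"x16 peak: {x16 / 1e9:.2f} GB/s")                               # 15.75 GB/s
print(f"1 TB at peak: {download_time_s(1e12):.0f} s")                  # 63 s
print(f"1 TB at 25%: {download_time_s(1e12, efficiency=0.25):.0f} s")  # 254 s
```

Even at the theoretical peak, a terabyte-class design needs about a minute just to cross the link.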

The effect of the loading/configuring overhead on wall-clock time depends on the length of the test and is unrelated to clock speed. For a short test, it may be significant. For a long test, it may be negligible, since it happens only once per emulation session.
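A quick way to see why setup matters for short tests but not long ones is to compute its share of the total wall-clock time. The helper name, the 5-minute setup, and the two test lengths below are hypothetical.

```python
def setup_share(setup_s: float, test_s: float) -> float:
    # Fraction of total wall-clock time spent loading/configuring the design.
    # Setup happens once per session, regardless of emulator clock speed.
    return setup_s / (setup_s + test_s)


setup = 5 * 60  # hypothetical 5-minute load/configure
for test_s in (10 * 60, 8 * 3600):  # a 10-minute test and an 8-hour test
    print(f"{test_s / 60:>5.0f} min test: setup = {setup_share(setup, test_s):.1%}")
# about 33.3% for the short test and 1.0% for the long one
```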

Startup Time

In some situations, the actual testing begins only after a long startup phase, such as some kind of initialization. For example, in application-level testing, the design may need to reach an advanced state, possibly by booting an OS, before the test proper starts. Startup time should be weighed against the useful test-execution work.

Software versus Hardware Testbench Environment

Throughput can be greatly impacted by the behavior of a software testbench environment if there are cycles in which the testbench is active and the design is not. Depending on the communication infrastructure the emulator supports and on how the flow of information between software and hardware is managed, varying amounts of time may be spent on testbench computation or data exchange while the design does no useful computation; it sits idle or performs something other than design computation. This testbench overhead weighs directly on throughput.

Emulator A, with a faster design clock but an inefficient testbench environment, may deliver significantly lower throughput than emulator B, with a slower design clock but an efficient testbench environment. Depending on how the software testbench environment is implemented, throughput could drop to between one-fifth and one-tenth of the emulation clock speed.
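The A-versus-B comparison can be sketched by scaling each emulator’s design clock by the fraction of wall-clock time the design is actually running, that is, not waiting on the testbench. The `Emulator` class and all numbers are hypothetical, chosen only to reproduce a tenfold drop like the one described above.

```python
from dataclasses import dataclass


@dataclass
class Emulator:
    name: str
    design_clock_hz: float  # design clock while the design is running
    active_fraction: float  # share of wall-clock time not spent waiting on the testbench

    def throughput_hz(self) -> float:
        # Useful design cycles per wall-clock second.
        return self.design_clock_hz * self.active_fraction


# Hypothetical: A has twice B's design clock, but its testbench keeps the
# design idle 90% of the time; B's testbench keeps it busy 80% of the time.
a = Emulator("A", design_clock_hz=2e6, active_fraction=0.10)
b = Emulator("B", design_clock_hz=1e6, active_fraction=0.80)
for emu in (a, b):
    print(f"{emu.name}: {emu.throughput_hz() / 1e3:.0f} kHz useful throughput")
# A: 200 kHz, B: 800 kHz
```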

Visibility Data Collection

Throughput can also suffer in jobs dominated by visibility data collection. For example, when carrying out power estimation, a great deal of time is spent executing power calculations.

In general, when large volumes of data are uploaded, run time is dominated by the time it takes to extract and process the data from the system rather than by the time the emulator spends on actual design computation. This is another situation where throughput matters and clock speed matters less.

In this case, the specific implementation of the data-collection mechanism may cut throughput to as little as one-tenth of the emulation clock speed.
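When visibility data dominates, a simple serialized model shows why a faster clock barely helps. The sketch assumes, pessimistically and hypothetically, that each design cycle’s data is extracted and processed before the next cycle runs; the function name, the 1,000 bytes per cycle, and the 100-MB/s processing rate are illustrative only.

```python
def upload_bound_throughput_hz(design_clock_hz: float,
                               bytes_per_cycle: float,
                               upload_bytes_per_s: float) -> float:
    # Assumes no overlap: each cycle's visibility data is extracted and
    # processed before the next design cycle runs.
    seconds_per_cycle = 1 / design_clock_hz + bytes_per_cycle / upload_bytes_per_s
    return 1 / seconds_per_cycle


# Hypothetical: 1,000 bytes of visibility data per cycle, processed at 100 MB/s.
for clk in (1e6, 2e6):
    tput = upload_bound_throughput_hz(clk, bytes_per_cycle=1000, upload_bytes_per_s=100e6)
    print(f"clock {clk / 1e6:.0f} MHz -> throughput {tput / 1e3:.0f} kHz")
# clock 1 MHz -> throughput 91 kHz
# clock 2 MHz -> throughput 95 kHz
```

Doubling the clock improves throughput by only about 5%, because the upload path, not the design computation, sets the pace.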

By contrast, in in-circuit-emulation (ICE) testing, the physical target system and the emulator advance synchronously, clock cycle by clock cycle, so the testbench environment has no impact on throughput.

Throughput versus Clock Speed

Good throughput depends on an efficient communication channel for exchanging data between the workstation and the emulator, and on efficient software processing of that data.

Clock speed is an interesting parameter and one that’s easy to measure, but it isn’t directly related to the more important wall-clock time and throughput, and it often isn’t the dominant factor in either.

Emulation throughput isn’t easy to measure, and vendors don’t publish throughput data. An emulator with a higher design clock speed may deliver lower throughput than an emulator running at a lower design clock speed, possibly by an order of magnitude, and the only way to assess this is through a benchmark.

Latency

According to Wikipedia, “Latency is the time elapsed between the cause and the effect of some physical change in the system being observed. Latency is physically a consequence of the limited velocity with which any physical interaction can propagate.”

In emulation, latency plays a role when the emulator is deployed in a software test environment, and much less so in ICE mode.

Latency in a Software Test Environment

Here, latency is the time interval between sending a message from the emulator to the software test environment and receiving the response generated by the software. It matters when the emulator and the software interact reactively, that is, when activities in software force the emulator to stall and wait for answers before continuing with its design computation.

Here are a few examples.

Low latency is important when modeling a large memory in software. To read from a software-based memory, the emulator sends a message to the software and stalls until it receives the memory contents.

Low latency is also important in a Universal Verification Methodology (UVM) testbench environment when testbench functions need answers from the emulator, forcing the emulator to wait for them.

The impact of latency on run time depends on the efficiency of the testbench or of the software interacting with the emulator. When possible, one way to alleviate or eliminate the impact is data streaming. In data streaming, the emulator doesn’t wait for the software, nor does the software wait for the emulator. Instead, data is sent continuously and consumed immediately when needed.
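The difference between stalling on every exchange and streaming can be approximated with two back-of-the-envelope formulas. This is a sketch under stated assumptions: in blocking mode each message costs one full round trip; in streaming mode the run is bounded by the slower of the emulator and the software, plus a single round trip to drain the pipeline. Streaming only applies when the design does not need an answer immediately. Function names and numbers are hypothetical.

```python
def blocking_run_s(cycles: int, design_clock_hz: float,
                   messages: int, round_trip_s: float) -> float:
    # Each message (e.g. a read from a software-modeled memory) stalls the
    # emulator for one full round trip before design computation resumes.
    return cycles / design_clock_hz + messages * round_trip_s


def streaming_run_s(cycles: int, design_clock_hz: float,
                    messages: int, round_trip_s: float,
                    sw_per_message_s: float) -> float:
    # Data flows continuously and neither side waits for the other, so the
    # slower side sets the pace; one round trip drains the final message.
    return max(cycles / design_clock_hz, messages * sw_per_message_s) + round_trip_s


# Hypothetical: 10 M cycles at 1 MHz, 100,000 messages, 50-us round trip,
# 20 us of software work per message.
blocking = blocking_run_s(10_000_000, 1e6, 100_000, 50e-6)
streaming = streaming_run_s(10_000_000, 1e6, 100_000, 50e-6, 20e-6)
print(f"blocking: {blocking:.2f} s, streaming: {streaming:.2f} s")
# blocking: 15.00 s, streaming: 10.00 s
```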

Software interactions aren’t cycle-oriented. They’re loosely coupled, with messages sent back and forth: a message may go out of the emulator to the software testbench, and the reply comes back later. Normally, the emulator clock keeps running at its normal rate rather than slowing down to account for this delay.

Latency in an ICE Environment

Latency is typically a concern when the emulator is decoupled from its test stimulus and has to be resynchronized whenever the two interact.

In an ICE environment, the target and the emulator run coupled together on a cycle-by-cycle basis because ICE interactions are cycle-oriented. In this context, latency accounts for the delay of signals propagating through the ICE cables. If the cables are long, the delay may become noticeable and force a lower clock speed. By contrast, latency in a software test environment isn’t managed by changing the clock speed.
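The cable effect can be illustrated with a rough timing budget. The sketch assumes, for illustration only, that a signal must travel to the target and back within one design clock period, that cable propagation delay is about 5 ns per meter (typical of cables with a velocity factor near 0.66), and that 20 ns of hypothetical margin covers everything else. Real ICE setups differ, but the trend holds: longer cables lower the attainable clock.

```python
def max_ice_clock_hz(cable_m: float, delay_ns_per_m: float = 5.0,
                     margin_ns: float = 20.0) -> float:
    # Assumption: a round trip over the cable plus a fixed margin must fit
    # inside one design clock period.
    period_ns = 2 * cable_m * delay_ns_per_m + margin_ns
    return 1e9 / period_ns


for length in (1, 5, 10):
    print(f"{length:>2} m cable -> max clock ~ {max_ice_clock_hz(length) / 1e6:.1f} MHz")
# roughly 33.3, 14.3, and 8.3 MHz under these assumptions
```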

Conclusion

Hardware-emulation performance is captured by three parameters: clock speed, throughput, and latency. Clock speed, typically reported by the emulator, is well understood, with the caveat that the emulation clock and the design clock may differ. Throughput is often misinterpreted and frequently ignored in favor of clock speed. Yet it is the most important performance parameter when the emulator is deployed within a software test environment, and it may make a world of difference when comparing competing emulators. Finally, latency degrades performance with software testbenches, and different emulators typically have different latencies.

About the Authors

Lauro Rizzatti

Business Advisor to VSORA

Lauro Rizzatti is a business advisor to VSORA, an innovative startup offering silicon IP solutions and silicon chips, and a noted verification consultant and industry expert on hardware emulation. Previously, he held positions in management, product marketing, technical marketing and engineering.

Jean-Marie Brunet

Senior Director of Product Management and Engineering, Scalable Verification Solutions Division, Siemens EDA

Jean-Marie Brunet is the senior director of product management and engineering for the Scalable Verification Solutions Division at Siemens EDA. He has served for over 20 years in application engineering, marketing, and management roles in the EDA industry, and has held IC design and design management positions at STMicroelectronics, Cadence, and Micron among others. Jean-Marie holds a Master's degree in Electrical Engineering from I.S.E.N Electronic Engineering School in Lille, France.