
Machine-learning (ML) technology is radically changing how robots work and dramatically extending their capabilities. The latest crop of ML technologies is still in its infancy, but it looks like we’re at the end of the beginning with respect to robots. Much more looms on the horizon.

ML is just one aspect of improved robotics. Robotics has demanding computational requirements, and improvements in multicore processing power are helping to meet them. Changes in sensor technology and even motor control have made an impact, too. Robots are being ringed with sensors that give them more context about their environment.

Thanks to all of these improvements, robots can tackle new tasks, work more closely with people, and become practical in a wider range of environments. For example, ABB’s YuMi “you and me” IRB 14000 robot (Fig. 1) is a collaborative, dual-arm, small parts assembly robot. It has flexible hands and a camera-based part-location system. Cameras are embedded in the grippers.

1. ABB’s YuMi IRB 14000 is a collaborative, dual-arm, small parts assembly robot.

Safety functionality built into YuMi makes it possible to remove barriers to collaboration, so there’s no longer a need for fencing and cages (Underwriters Laboratories; ISO 13849-1 performance level (PL) b, Category B). Its lightweight arms, designed to have no pinch points, have a floating plastic casing that can also be padded. The software can detect collisions and stop movement within milliseconds. All of the wiring for the self-contained system is internal.
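
How YuMi detects collisions isn’t described here. One common approach in collaborative robots compares the torque each joint actually needs (estimated, for instance, from motor current) with what a dynamic model of the arm predicts, and stops the arm when the difference grows too large. The minimal Python sketch below assumes such a model exists; the `JointSample` type, the 2-N·m threshold, and the `stop_motion` callback are hypothetical, not ABB’s software.

```python
from dataclasses import dataclass
from typing import Callable, Optional, Sequence


@dataclass
class JointSample:
    expected_torque_nm: float  # predicted by a dynamic model of the arm (assumed available)
    measured_torque_nm: float  # e.g., estimated from motor current


def detect_collision(samples: Sequence[JointSample], threshold_nm: float = 2.0) -> Optional[int]:
    """Return the index of the first joint whose torque residual exceeds the threshold, else None."""
    for joint, s in enumerate(samples):
        residual = abs(s.measured_torque_nm - s.expected_torque_nm)
        if residual > threshold_nm:
            return joint
    return None


def control_step(samples: Sequence[JointSample], stop_motion: Callable[[int], None]) -> bool:
    """Run once per control cycle; calls stop_motion() as soon as a collision is suspected."""
    joint = detect_collision(samples)
    if joint is not None:
        stop_motion(joint)
        return True
    return False


if __name__ == "__main__":
    # Joint 1 needs far more torque than the model expects, as if it had hit something.
    readings = [JointSample(1.0, 1.1), JointSample(0.5, 3.2)]
    control_step(readings, lambda j: print(f"collision suspected at joint {j}; stopping"))
```

A controller would run a check like this on every control cycle, so its reaction time is bounded by the cycle period. The threshold trades sensitivity against false stops caused by model error.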

Locus Robotics’ LocusBot (Fig. 2) is another collaborative robot, but this one is mobile. The wheeled robot moves autonomously through a warehouse. Its tablet interface runs the LocusApp, which lets users interact with the robot, and the tablet’s built-in scanner makes it easy to identify an item’s bar code. The robots are linked wirelessly to the LocusServer.

2. Locus Robotics’ LocusBot is designed to work collaboratively with humans.

The LocusBot weighs in at 100 pounds, has a payload up to 100 pounds, and can move at speeds up to 1.4 m/s. The battery charges in less than an hour, providing a 14-hour runtime.
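
The LocusBot figures above can be turned into two rough bounds. This sketch assumes a full one-hour charge (the text says less than an hour, so real availability is somewhat higher) and treats 1.4 m/s as a constant speed, which no warehouse robot sustains; the distance is therefore a ceiling, not an estimate.

```python
RUNTIME_H = 14.0     # runtime per charge, from the spec above
CHARGE_H = 1.0       # assumed upper bound: the text says "less than an hour"
MAX_SPEED_MPS = 1.4  # top speed, from the spec above

# Fraction of time the robot can work if it alternates full runs and full charges
availability = RUNTIME_H / (RUNTIME_H + CHARGE_H)

# Absolute ceiling on distance per charge: top speed for the entire runtime
max_distance_km = MAX_SPEED_MPS * RUNTIME_H * 3600 / 1000

print(f"availability >= {availability:.1%}")
print(f"distance per charge <= {max_distance_km:.1f} km")
```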

More Sensors

Robots are using more sensors and different types of sensors to evaluate their environment. The YuMi includes motor feedback and cameras in addition to movement sensors. The LocusBot incorporates range sensors in its base.

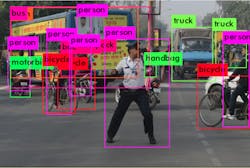

3. Machine learning is allowing robots to track many different types of objects using cameras.

The ability to track many different types of objects using cameras is also becoming important, not only to robots, but to autonomous vehicles that need to coexist safely with people (Fig. 3). Smaller, lower-cost sensors let developers place better sensors in more locations, giving a robot a wider field of view than a person has.

High-resolution camera sensors like OmniVision Technologies’ 24-Mpixel OV24B series chip (Fig. 4) help bring visual information to machine-learning tools. The OV24B is built on OmniVision’s PureCel Plus stacked-die technology. It can deliver full resolution at 30 frames per second (fps). It can also do 4-cell binning with a 6-Mpixel resolution at 60 fps, 4K2K video at 60 fps, 1080p video at 120 fps, or 720p video at 240 fps.
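
Four-cell binning combines each 2×2 group of neighboring pixels into one, which is how a 24-Mpixel array yields a 6-Mpixel image. On the sensor this happens on-chip and on a color-filter pattern; the sketch below simplifies it to averaging a monochrome NumPy array. It also multiplies out the pixel rate of each listed mode, assuming 24 Mpixels means exactly 24 × 10^6 pixels and taking 4K2K as 3840 × 2160 (a 4096-wide variant would be slightly higher).

```python
import numpy as np


def bin_2x2(frame: np.ndarray) -> np.ndarray:
    """Average each 2x2 block of pixels into one output pixel (simplified 4-cell binning)."""
    h, w = frame.shape
    if h % 2 or w % 2:
        raise ValueError("frame dimensions must be even")
    # Split rows and columns into pairs, then average over each pair of pairs.
    return frame.reshape(h // 2, 2, w // 2, 2).mean(axis=(1, 3))


# (pixels per frame, frames per second) for the modes listed in the text;
# the 4K2K, 1080p, and 720p sizes are assumed standard video resolutions.
MODES = {
    "full 24 Mpixel @ 30 fps": (24e6, 30),
    "binned 6 Mpixel @ 60 fps": (6e6, 60),
    "4K2K @ 60 fps": (3840 * 2160, 60),
    "1080p @ 120 fps": (1920 * 1080, 120),
    "720p @ 240 fps": (1280 * 720, 240),
}

if __name__ == "__main__":
    for name, (pixels, fps) in MODES.items():
        print(f"{name}: {pixels * fps / 1e6:.0f} Mpixel/s")
```

The numbers show the trade-off behind the modes: full resolution moves the most pixels per second (720 Mpixel/s), while binning halves that rate and doubles the frame rate, exchanging detail for temporal resolution.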

4. OmniVision’s OV24B 24-Mpixel camera sensor brings visual information to machine-learning tools.

The OV24B sensor family has on-chip re-mosaic and supports 2x2 microlens phase-detection autofocus (PDAF). It also offers very good low-light performance, which is just what a high-end smartphone or high-performance robot needs.

Multiple sensors of the same type are becoming the norm, such as the four-camera systems that provide surround view in many cars. Pairs of cameras can work in parallel to provide stereoscopic 3D imaging. Another approach is to use 3D cameras like Intel’s RealSense (Fig. 5). These cameras actually include a 2D sensor in addition to the 3D sensor. This allows one system to provide range information as well as a video stream that can be used by machine-learning systems to identify objects.
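
Stereo depth comes from disparity, the horizontal shift of a feature between the left and right images. For rectified cameras, depth is Z = f·B/d, where f is the focal length in pixels, B is the baseline between the cameras, and d is the disparity in pixels. The sketch below applies that relationship; the 700-pixel focal length and 6-cm baseline in the example are assumptions for illustration, not parameters of any product mentioned here.

```python
def depth_from_disparity(disparity_px: float, focal_length_px: float, baseline_m: float) -> float:
    """Depth of a point seen by a rectified stereo pair: Z = f * B / d."""
    if disparity_px <= 0:
        raise ValueError("disparity must be positive (zero means the point is at infinity)")
    return focal_length_px * baseline_m / disparity_px


def depth_resolution(depth_m: float, focal_length_px: float, baseline_m: float,
                     disparity_step_px: float = 1.0) -> float:
    """Approximate depth change for a given disparity error: dZ ~= Z^2 * dd / (f * B)."""
    return depth_m ** 2 * disparity_step_px / (focal_length_px * baseline_m)


if __name__ == "__main__":
    f_px, baseline = 700.0, 0.06  # hypothetical camera parameters
    z = depth_from_disparity(21.0, f_px, baseline)
    print(f"depth: {z:.2f} m, +/- {depth_resolution(z, f_px, baseline):.3f} m per pixel of disparity")
```

Because the error grows with the square of distance, stereo setups are most accurate at short to mid range.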

5. Intel’s RealSense cameras provide 3D position information in addition to conventional 2D image streams.

The RealSense depth modules use infrared, time-of-flight (ToF) sensing to provide 3D information. They also have 1080p video camera sensors. The standard versions use USB 3.0 connections; modules without the case are available separately. They work best for short- to mid-range indoor applications.
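
The time-of-flight principle reduces to simple arithmetic: light travels to the target and back, so distance is half the round-trip path. Many ToF sensors instead measure the phase shift of modulated light, which wraps around at a maximum unambiguous range. The sketch below shows only these textbook relationships; it makes no claim about how RealSense modules process their signals, and the 20-MHz modulation frequency in the example is an assumption.

```python
import math

C = 299_792_458.0  # speed of light, m/s


def distance_from_round_trip(t_s: float) -> float:
    """Pulsed ToF: the light goes out and back, so halve the total path."""
    return C * t_s / 2


def distance_from_phase(phase_rad: float, f_mod_hz: float) -> float:
    """Continuous-wave ToF: phase shift of the modulation envelope maps to distance."""
    return C * phase_rad / (4 * math.pi * f_mod_hz)


def unambiguous_range(f_mod_hz: float) -> float:
    """Beyond this distance the phase wraps past 2*pi and distances alias."""
    return C / (2 * f_mod_hz)


if __name__ == "__main__":
    print(f"10-ns round trip: {distance_from_round_trip(10e-9):.3f} m")
    print(f"unambiguous range at 20 MHz: {unambiguous_range(20e6):.2f} m")
```

The tiny time scales (about 6.7 ns per meter of round trip) are why ToF sensors rely on specialized timing or phase-measurement circuitry.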

LiDAR and radar work well indoors and outdoors. The need for such sensors in cars and autonomous vehicles has driven down their cost and led to enhancements in performance. Autonomous vehicles are essentially robots, so one might say these sensors are being designed for robotic applications. In any case, they’re just as applicable to other robots, and they’re getting smaller, too.

Texas Instruments’ mmWave sensors (Fig. 6) are available for short- and long-range operation. These radar systems aren’t affected by lighting and other optical issues. The short-range versions are useful for applications like mobile robots or even detecting when people are in a room. They improve on many motion detectors, which indicate only the presence of an object or its distance rather than its direction of movement. An automatic door, for example, can use this ability to tell whether a person is coming toward it or just passing in front of it. Robots can use this type of information, too.
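
The direction-of-motion capability comes from the Doppler effect: a target moving toward the radar shifts the echo up in frequency, and one moving away shifts it down. Radial velocity is v = f_d·λ/2, where f_d is the Doppler shift and λ the carrier wavelength. The sketch assumes a 77-GHz carrier (the AWR1642 operates in the 76- to 81-GHz band) and a hypothetical 0.3-m/s threshold. A real door controller would also track position over several frames, because someone walking past at an angle still has some radial velocity.

```python
C = 299_792_458.0  # speed of light, m/s


def radial_velocity(doppler_hz: float, carrier_hz: float = 77e9) -> float:
    """Radial speed from Doppler shift; positive means the target is closing on the radar."""
    wavelength_m = C / carrier_hz
    return doppler_hz * wavelength_m / 2


def classify(doppler_hz: float, carrier_hz: float = 77e9, min_speed_mps: float = 0.3) -> str:
    """Crude door-opener logic; the threshold is a hypothetical tuning value."""
    v = radial_velocity(doppler_hz, carrier_hz)
    if v > min_speed_mps:
        return "approaching"
    if v < -min_speed_mps:
        return "moving away"
    return "passing or stationary"


if __name__ == "__main__":
    # At 77 GHz (wavelength about 3.9 mm), a person walking straight in at
    # 1.2 m/s produces a Doppler shift of roughly 616 Hz.
    for shift in (616.0, -616.0, 20.0):
        print(shift, classify(shift))
```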

6. The AWR1642 EVM module is Texas Instruments’ mmWave radar reference design. The tiny array on the right is the radar antenna matrix.

Seeing Machine-Learning Improvements

One area of research that’s having a major impact on robotics is convolutional-neural-network (CNN) and deep-neural-network (DNN) inference, which lets an application use inputs from the new cameras and 3D sensors to identify objects. These tasks are computationally heavy, and they involve lots of data in both training and inference. For most robots today, ML’s main use is inference.
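
To see why CNN inference is computationally heavy, consider its core operation. A convolution layer slides a small kernel over the image and computes a weighted sum at every position, for every input and output channel. This minimal NumPy sketch handles one channel with no padding and a stride of 1 (like most CNN frameworks, it computes cross-correlation without flipping the kernel), and it counts multiply-accumulate (MAC) operations for a full layer. The 56 × 56, 64-channel layer in the example is an illustrative size, not a figure from the text.

```python
import numpy as np


def conv2d_valid(image: np.ndarray, kernel: np.ndarray) -> np.ndarray:
    """Single-channel 2D convolution as CNN layers compute it (no padding, stride 1)."""
    ih, iw = image.shape
    kh, kw = kernel.shape
    oh, ow = ih - kh + 1, iw - kw + 1
    out = np.zeros((oh, ow), dtype=np.float64)
    for y in range(oh):
        for x in range(ow):
            # Weighted sum of the image patch under the kernel
            out[y, x] = np.sum(image[y:y + kh, x:x + kw] * kernel)
    return out


def conv_macs(out_h: int, out_w: int, in_ch: int, out_ch: int, k: int) -> int:
    """Multiply-accumulate operations for one k x k convolution layer."""
    return out_h * out_w * in_ch * out_ch * k * k


if __name__ == "__main__":
    # A vertical-edge detector responds only where dark pixels meet bright ones.
    img = np.array([[0, 0, 1, 1]] * 3, dtype=float)
    print(conv2d_valid(img, np.array([[-1.0, 1.0]])))
    print(f"{conv_macs(56, 56, 64, 64, 3):,} MACs for one hypothetical 3x3 layer")
```

That single hypothetical layer needs about 116 million MACs per image, and deep networks stack dozens of layers, which is why dedicated inference hardware matters.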

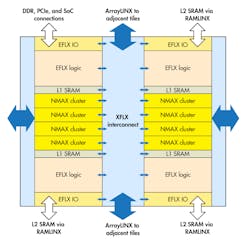

Companies like Habana, with its Goya chip, and Flex Logix, with its NMAX architecture (Fig. 7), are working to reduce the cost and power requirements of high-performance ML inferencing. Running ResNet-50 with a small batch size, the Goya HL-1000 processor can process 15,000 images/s with a 1.3-ms latency while dissipating only 100 W.
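
The quoted Goya figures support a couple of quick derived numbers. This sketch assumes the 100 W applies to the whole workload and that the chip runs steadily at the stated throughput and latency, so Little’s law (items in flight = throughput × latency) applies.

```python
THROUGHPUT_IMG_PER_S = 15_000  # ResNet-50, small batch, from the text
POWER_W = 100.0                # quoted dissipation
LATENCY_S = 1.3e-3             # quoted latency

# Efficiency: images processed per joule (watt-second) and energy per image
images_per_joule = THROUGHPUT_IMG_PER_S / POWER_W
energy_per_image_mj = POWER_W / THROUGHPUT_IMG_PER_S * 1e3

# Little's law: average number of images in the pipeline at any moment
images_in_flight = THROUGHPUT_IMG_PER_S * LATENCY_S

print(f"{images_per_joule:.0f} images/J, {energy_per_image_mj:.2f} mJ/image, "
      f"about {images_in_flight:.1f} images in flight")
```

Under these assumptions the chip spends roughly 6.7 mJ per image and holds only about 20 images in flight, consistent with a small-batch pipeline.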

7. Flex Logix’s NMAX is one machine-learning hardware platform designed to allow robots to analyze sensor information from a variety of sources.

Small batch sizes are important for inferencing, and with small batches, the time it takes to change the weights and activation information needed by large ML models becomes a significant overhead. Making this change quickly and keeping this information local will be the key to success for ML chips. The NMAX architecture has L1 RAM close to the computing elements plus an L2 memory between that and the off-chip DRAM. A high-speed network fabric links everything together.
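
A toy model makes the batch-size trade-off concrete. It assumes each batch pays a fixed cost to bring weights to the compute units, then a per-image compute cost. All timing values below are hypothetical, chosen only to contrast weights fetched from off-chip DRAM with weights already held in local memory, and the model ignores time spent waiting for a batch to fill.

```python
def batch_metrics(batch_size: int, weight_load_s: float, compute_per_item_s: float):
    """Toy model: fixed weight-load cost per batch plus a per-item compute cost.

    Returns (throughput in items/s, latency in seconds for the batch).
    """
    batch_time = weight_load_s + batch_size * compute_per_item_s
    throughput = batch_size / batch_time
    latency = batch_time  # excludes queueing while the batch fills
    return throughput, latency


if __name__ == "__main__":
    COMPUTE_S = 1e-4  # hypothetical 0.1 ms of compute per image
    for label, load_s in (("weights off-chip", 2e-3), ("weights on-chip", 5e-5)):
        for b in (1, 8, 64):
            tput, lat = batch_metrics(b, load_s, COMPUTE_S)
            print(f"{label:16s} batch={b:3d} {tput:8.0f} img/s {lat * 1e3:6.2f} ms")
```

With a large fixed load cost, only big batches reach high throughput, and big batches mean higher latency. Shrinking that cost by keeping data close to the compute elements lets even a batch of one run efficiently.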

FPGA companies are also tailoring their solutions for machine learning. Xilinx’s Adaptive Compute Acceleration Platform (ACAP) reworks the FPGA into a more customized compute engine surrounded by accelerators and mated with real-time and application processors. ACAP also has multiple high-speed interconnects linking various components within the system, including neural-network accelerators.

DSPs and GPUs also continue to crunch ML inference chores. For instance, NVIDIA’s Jetson Xavier can deliver 32 TOPS of performance (Fig. 8). This latest Jetson shares the same basic architecture as the NVIDIA T4 that’s being used for training as well as cloud inference tasks. The Xavier module packs in 512 CUDA cores. It also has its own 64-bit ARMv8 processor complex, a 7-way VLIW vision accelerator, and a pair of NVIDIA Deep Learning Accelerators (NVDLAs), in addition to 16 CSI-2 video input lanes plus eight SLVS-EC lanes. It can deliver 20 INT8 TOPS using only 30 W.
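
The Xavier figures are quoted in INT8 TOPS because inference accelerators commonly run networks with 8-bit integer weights and activations. The sketch below shows one simple scheme, symmetric per-tensor quantization, with an integer dot product accumulated in 32 bits and rescaled once at the end. It is not NVIDIA’s implementation; production toolchains typically add calibration data and per-channel scales.

```python
import numpy as np


def quantize_int8(x: np.ndarray):
    """Symmetric per-tensor quantization: map [-max|x|, max|x|] onto [-127, 127]."""
    max_abs = float(np.max(np.abs(x)))
    scale = max_abs / 127.0 if max_abs > 0 else 1.0
    q = np.clip(np.round(x / scale), -127, 127).astype(np.int8)
    return q, scale


def dequantize(q: np.ndarray, scale: float) -> np.ndarray:
    """Recover approximate real values from int8 codes."""
    return q.astype(np.float32) * scale


def int8_dot(qa: np.ndarray, sa: float, qb: np.ndarray, sb: float) -> float:
    """Multiply in integers, accumulate in int32 to avoid overflow, rescale once."""
    acc = np.dot(qa.astype(np.int32), qb.astype(np.int32))
    return float(acc) * sa * sb


if __name__ == "__main__":
    weights = np.array([0.6, -1.0, 0.25])
    activations = np.array([3.0, -1.0, 4.0])
    qw, sw = quantize_int8(weights)
    qa, sa = quantize_int8(activations)
    print("float dot:", float(np.dot(weights, activations)))
    print("int8 dot: ", int8_dot(qw, sw, qa, sa))
```

The integer multiply-accumulates are what the TOPS rating counts; the single floating-point rescale at the end is cheap by comparison.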

8. NVIDIA’s Jetson Xavier can deliver 32 TOPS of performance for machine-learning chores.

Another emerging technology worth mentioning is CCIX (pronounced “see 6”). The Cache Coherent Interconnect for Accelerators is built on PCI Express hardware with its own low-latency, cache-coherent protocol designed to link processors with ML hardware accelerators as well as other accelerators. CCIX is so new that nothing except FPGAs can implement it, but that will be changing over the next couple of years.

Machine Learning from Experience and Experimentation

Using inference engines trained in the cloud or on local servers is where it’s at now, but ML research continues. On that front, self-learning is currently a hot topic, and it represents yet another change in how robots will be built and programmed. Such research is often based on neural networks as well, and it looks to be a way to address some of the harder robotic challenges, from handling fabrics to selecting and picking up objects. This is much like what Google Research has been doing (Fig. 9).

9. Google let these robotic arms learn to pick up objects.

Google Research turned a horde of robotic arms loose on a tray of unsuspecting gadgets, foam doohickeys, and Legos to see if they could figure out what to do with them. 3D cameras overlooked the tray and arms. It’s only one of hundreds of research projects around the world that have taken off thanks to the availability of hardware, sensors, and ML software.
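
The article doesn’t detail Google’s learning method, so the sketch below swaps in a far simpler technique to show the trial-and-error loop itself: an epsilon-greedy multi-armed bandit that picks among a few grasp strategies, records whether each attempt succeeds, and gradually favors the one that works best. The success probabilities are invented for the simulation; a real system would get its rewards from the robot’s sensors.

```python
import random


def learn_grasp(success_probs, trials=5000, epsilon=0.1, seed=0):
    """Epsilon-greedy trial and error over grasp strategies (simulated outcomes)."""
    rng = random.Random(seed)
    n = len(success_probs)
    counts = [0] * n        # attempts per strategy
    estimates = [0.0] * n   # running estimate of each strategy's success rate
    for _ in range(trials):
        if rng.random() < epsilon:
            arm = rng.randrange(n)                           # explore: try anything
        else:
            arm = max(range(n), key=lambda i: estimates[i])  # exploit: best so far
        # Simulated grasp attempt: success with the strategy's hidden probability
        reward = 1.0 if rng.random() < success_probs[arm] else 0.0
        counts[arm] += 1
        estimates[arm] += (reward - estimates[arm]) / counts[arm]  # incremental mean
    return estimates, counts


if __name__ == "__main__":
    est, cnt = learn_grasp([0.2, 0.5, 0.8])  # hypothetical success rates
    for i, (e, c) in enumerate(zip(est, cnt)):
        print(f"strategy {i}: estimated success {e:.2f} over {c} attempts")
```

Most of the time the learner exploits its current best guess; a fraction epsilon of the time it explores, which keeps it from locking onto an early lucky result.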

Still, physical devices are expensive and take up space. They can also be slow. On the other hand, simulation doesn’t take up space and scales to available computing resources. The challenge is the supporting framework. This is where NVIDIA’s Isaac robot simulation environment comes into play. Isaac is built around an enhanced version of Epic Games' Unreal Engine 4 and the PhysX physics engine.

Isaac is tuned for cobots and conventional robots, but simulation also plays a big part in that other major robotic application: self-driving cars. These simulations typically model a much larger environment with many more objects, which requires the heavy-duty computing and storage resources found in cloud computing.

All of these technological advances are changing the way robotics works. Individually they’re useful, but combining them has radically changed how robots are designed, trained, and employed. We’re just at the starting point, and that’s rather exciting. However, we should not forget issues such as security and safety in the rush to build the next great robot.

About the Author

William G. Wong

Senior Content Director - Electronic Design and Microwaves & RF

I am Editor of Electronic Design focusing on embedded, software, and systems. As Senior Content Director, I also manage Microwaves & RF and I work with a great team of editors to provide engineers, programmers, developers and technical managers with interesting and useful articles and videos on a regular basis. Check out our free newsletters to see the latest content.

You can send press releases for new products for possible coverage on the website. I am also interested in receiving contributed articles for publishing on our website. Use our template and send it to me along with a signed release form.

Check out my blog, AltEmbedded on Electronic Design, as well as my latest articles on this site that are listed below.

You can visit my social media via these links:

- AltEmbedded on Electronic Design

- Bill Wong on Facebook

- @AltEmbedded on Twitter

- Bill Wong on LinkedIn

I earned a Bachelor of Electrical Engineering at the Georgia Institute of Technology and a Master’s in Computer Science from Rutgers University. I still do a bit of programming using everything from C and C++ to Rust and Ada/SPARK. I do a bit of PHP programming for Drupal websites. I have posted a few Drupal modules.

I still get hands-on with software and electronic hardware. Some of this can be found in our Kit Close-Up video series. You can also see me in many of our TechXchange Talk videos. I am interested in a range of projects from robotics to artificial intelligence.